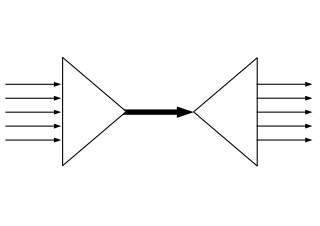

In telecommunications and computer networks, multiplexing is a method by which multiple analog or digital signals are combined into one signal over a shared medium. The aim is to share a scarce resource. For example, in telecommunications, several telephone calls may be carried using one wire. Multiplexing originated in telegraphy in the 1870s, and is now widely applied in communications. In telephony, George Owen Squier is credited with the development of telephone carrier multiplexing in 1910.
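As a rough illustration of combining several signals onto one shared medium, the sketch below uses time-division multiplexing, one common scheme among several; the passage above does not single it out. The function names `tdm_multiplex` and `tdm_demultiplex` and the sample values are hypothetical, chosen only for this example.

```python
from itertools import zip_longest

def tdm_multiplex(channels, fill=None):
    """Interleave one sample per channel into each frame of the shared stream.

    If a channel runs out of samples early, its slot is filled with `fill`
    (an idle slot), so the frame layout stays fixed.
    """
    frames = zip_longest(*channels, fillvalue=fill)
    return [sample for frame in frames for sample in frame]

def tdm_demultiplex(stream, n_channels):
    """Recover each channel by taking every n-th slot of the shared stream."""
    return [stream[i::n_channels] for i in range(n_channels)]

# Three hypothetical "calls", each a short list of samples.
calls = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
line = tdm_multiplex(calls)            # one stream on the shared "wire"
recovered = tdm_demultiplex(line, len(calls))
```

Here `line` carries the samples in the order 1, 10, 100, 2, 20, 200, and so on, and `recovered` matches `calls` because each channel always occupies the same slot in every frame.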

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech computer or speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech.

Data acquisition is the process of sampling signals that measure real-world physical conditions and converting the resulting samples into digital numeric values that can be manipulated by a computer. Data acquisition systems, abbreviated by the initialisms DAS, DAQ, or DAU, typically convert analog waveforms into digital values for processing.
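The sampling and conversion steps can be sketched in a few lines. This is a minimal model under stated assumptions, not the behavior of any particular DAQ product: the function `sample_and_quantize`, the 0 to 5 V input range, the 8-bit resolution, and the simulated 50 Hz `sensor` signal are all hypothetical.

```python
import math

def sample_and_quantize(signal, sample_rate_hz, duration_s, bits, v_min, v_max):
    """Sample a continuous signal at fixed intervals and map each sample
    to an integer code, as an idealized analog-to-digital converter would."""
    levels = 2 ** bits
    step = (v_max - v_min) / (levels - 1)      # voltage per code
    n_samples = int(round(sample_rate_hz * duration_s))
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                 # sampling instant in seconds
        v = min(max(signal(t), v_min), v_max)  # clip to the input range
        codes.append(round((v - v_min) / step))
    return codes

def sensor(t):
    """Hypothetical sensor output: a 50 Hz sine centered on 2.5 V."""
    return 2.5 + 2.5 * math.sin(2 * math.pi * 50 * t)

# One 20 ms cycle sampled at 1 kHz with 8-bit resolution over 0..5 V.
codes = sample_and_quantize(sensor, 1000, 0.02, 8, 0.0, 5.0)
```

Each entry of `codes` is an integer between 0 and 255 that software can store and process in place of the original voltage.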

A digital audio workstation (DAW) is an electronic device or application software used for recording, editing and producing audio files. DAWs come in a wide variety of configurations from a single software program on a laptop, to an integrated stand-alone unit, all the way to a highly complex configuration of numerous components controlled by a central computer. Regardless of configuration, modern DAWs have a central interface that allows the user to alter and mix multiple recordings and tracks into a final produced piece.

Laboratory Virtual Instrument Engineering Workbench (LabVIEW) is a system-design platform and development environment for a visual programming language from National Instruments.

Secure voice is a term in cryptography for the encryption of voice communication over a range of communication types such as radio, telephone or IP.

Haskins Laboratories, Inc. is an independent 501(c) non-profit corporation, founded in 1935 and located in New Haven, Connecticut, since 1970. Upon moving to New Haven, Haskins entered into formal affiliation agreements with both Yale University and the University of Connecticut; it remains fully independent, administratively and financially, of both Yale and UConn. Haskins is a multidisciplinary and international community of researchers which conducts basic research on spoken and written language. A guiding perspective of their research is to view speech and language as emerging from biological processes, including those of adaptation, response to stimuli, and conspecific interaction. The Laboratories has a long history of technological and theoretical innovation, from creating systems of rules for speech synthesis and development of an early working prototype of a reading machine for the blind to developing the landmark concept of phonemic awareness as the critical preparation for learning to read an alphabetic writing system.

ESL Incorporated, or Electromagnetic Systems Laboratory, was a subsidiary of TRW, a high technology firm in the United States that was engaged in software design, systems analysis and hardware development for the strategic reconnaissance marketplace. Founded in January 1964 in Palo Alto, California, the company was initially entirely privately capitalized by its employees. One of the company founders and original chief executive was William J. Perry, who eventually became United States Secretary of Defense under President Bill Clinton. Another company founder was Joe Edwin Armstrong. ESL was a leader in developing strategic signal processing systems and a prominent supplier of tactical reconnaissance and direction-finding systems to the U.S. military. These systems provided integrated real-time intelligence.

Philip E. Rubin is an American cognitive scientist, technologist, and science administrator known for raising the visibility of behavioral and cognitive science, neuroscience, and ethical issues related to science, technology, and medicine, at a national level. His research career is noted for his theoretical contributions and pioneering technological developments, starting in the 1970s, related to speech synthesis and speech production, including articulatory synthesis and sinewave synthesis, and their use in studying complex temporal events, particularly understanding the biological bases of speech and language.

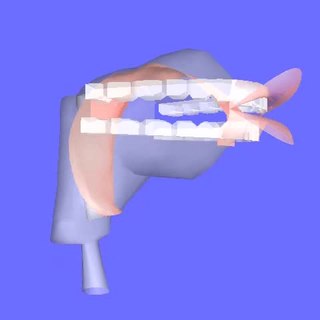

Sinewave synthesis, or sine wave speech, is a technique for synthesizing speech by replacing the formants with pure tone whistles. The first sinewave synthesis program (SWS) for the automatic creation of stimuli for perceptual experiments was developed by Philip Rubin at Haskins Laboratories in the 1970s. This program was subsequently used by Robert Remez, Philip Rubin, David Pisoni, and other colleagues to show that listeners can perceive continuous speech without traditional speech cues, i.e., pitch, stress, and intonation. This work paved the way for a view of speech as a dynamic pattern of trajectories through articulatory-acoustic space.
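The core idea, replacing each formant with a single time-varying pure tone, can be sketched as a sum of sinusoids. This is only an illustrative reconstruction, not the SWS program itself: the function `sinewave_synthesis`, the frame format, and the frequency and amplitude values are made up for the example and are not measured speech data.

```python
import math

def sinewave_synthesis(tracks, sample_rate_hz, frame_s):
    """Sum one sinusoid per formant track.

    `tracks` is a list of frames; each frame holds one (freq_hz, amplitude)
    pair per tone. Phase is accumulated per tone so that frequency changes
    between frames do not introduce discontinuities in the waveform.
    """
    samples_per_frame = int(round(sample_rate_hz * frame_s))
    phases = [0.0] * len(tracks[0])
    out = []
    for frame in tracks:
        for _ in range(samples_per_frame):
            value = 0.0
            for k, (freq_hz, amp) in enumerate(frame):
                phases[k] += 2 * math.pi * freq_hz / sample_rate_hz
                value += amp * math.sin(phases[k])
            out.append(value)
    return out

# Hypothetical tracks for three tones standing in for the first three formants.
frames = [
    [(700, 0.5), (1200, 0.3), (2500, 0.2)],
    [(600, 0.5), (1500, 0.3), (2500, 0.2)],
    [(400, 0.5), (2000, 0.3), (2600, 0.2)],
]
audio = sinewave_synthesis(frames, 8000, 0.01)  # 10 ms per frame at 8 kHz
```

The result contains no pitch pulses or broadband noise, only three gliding tones, which is why such stimuli lack the traditional speech cues mentioned above.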

The pattern playback is an early talking device that was built by Dr. Franklin S. Cooper and his colleagues, including John M. Borst and Caryl Haskins, at Haskins Laboratories in the late 1940s and completed in 1950. There were several different versions of this hardware device. Only one currently survives. The machine converts pictures of the acoustic patterns of speech in the form of a spectrogram back into sound. Using this device, Alvin Liberman, Frank Cooper, and Pierre Delattre were able to discover acoustic cues for the perception of phonetic segments. This research was fundamental to the development of modern techniques of speech synthesis, reading machines for the blind, the study of speech perception and speech recognition, and the development of the motor theory of speech perception.

Articulatory synthesis refers to computational techniques for synthesizing speech based on models of the human vocal tract and the articulation processes occurring there. The shape of the vocal tract can be controlled in a number of ways, which usually involve modifying the position of the speech articulators, such as the tongue, jaw, and lips. Speech is created by digitally simulating the flow of air through the representation of the vocal tract.

Articulatory phonology is a linguistic theory originally proposed in 1986 by Catherine Browman of Haskins Laboratories and Louis M. Goldstein of Yale University and Haskins. The theory identifies theoretical discrepancies between phonetics and phonology and aims to unify the two by treating them as low- and high-dimensional descriptions of a single system.

Franklin Seaney Cooper was an American physicist and inventor who was a pioneer in speech research.

Catherine Phebe Browman was an American linguist and speech scientist. She received her Ph.D. in linguistics from the University of California, Los Angeles (UCLA) in 1978. Browman was a research scientist at Bell Laboratories in New Jersey (1967–1972). While at Bell Laboratories, she was known for her work on speech synthesis using demisyllables. She later worked as a researcher at Haskins Laboratories in New Haven, Connecticut (1982–1998). She was best known for developing, with Louis Goldstein, the theory of articulatory phonology, a gesture-based approach to phonological and phonetic structure. The theoretical approach is incorporated in a computational model that generates speech from a gesturally specified lexicon. Browman was made an honorary member of the Association for Laboratory Phonology.

PowerLab is a data acquisition system developed by ADInstruments, comprising hardware and software and designed for use in life science research and teaching applications. It is commonly used in physiology, pharmacology, biomedical engineering, sports/exercise studies and psychophysiology laboratories to record and analyse physiological signals from human or animal subjects or from isolated organs. The system consists of an input device connected by USB cable to a Microsoft Windows or Mac OS computer, and LabChart software, which is supplied with the PowerLab and provides the recording, display and analysis functions. The use of PowerLab and supplementary ADInstruments products has been demonstrated in the Journal of Visualized Experiments.

The Biopac Student Lab is a proprietary teaching device and method introduced in 1995 as a digital replacement for the aging chart recorders and oscilloscopes that were widely used in undergraduate teaching laboratories prior to that time. It is manufactured by BIOPAC Systems, Inc., of Goleta, California. The advent of low-cost personal computers meant that older analog technologies could be replaced with powerful and less expensive computerized alternatives.

TNSDL stands for TeleNokia Specification and Description Language. TNSDL is based on the ITU-T SDL-88 language. It is used exclusively at Nokia Networks, primarily for developing applications for telephone exchanges.

DADiSP is a numerical computing environment developed by DSP Development Corporation which allows one to display and manipulate data series, matrices and images with an interface similar to a spreadsheet. DADiSP is used in signal processing, numerical analysis, and statistical and physiological data processing.

Two closely related terms, Low Frequency Analyzer and Recorder and Low Frequency Analysis and Recording, both bearing the acronym LOFAR, refer respectively to the equipment and the process for presenting a visual spectrum representation of low-frequency sounds in a time–frequency analysis. The process was originally applied to fixed surveillance passive antisubmarine sonar systems and later to sonobuoy and other systems. Originally the analysis was electromechanical and the display was produced on electrostatic recording paper, a Lofargram, with stronger frequencies presented as lines against background noise. The analysis later migrated to digital processing, and both analysis and display were digital after a major system consolidation into centralized processing centers during the 1990s.
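A digital time–frequency analysis of this general kind can be approximated with a short-time Fourier transform. The sketch below is a generic textbook version, not the algorithm used in any LOFAR system; the function `spectrogram`, the 256 Hz sample rate, and the 32 Hz test tone are assumptions made for the example.

```python
import cmath
import math

def spectrogram(samples, frame_len, hop):
    """Return one row of spectral magnitudes per time frame.

    Each frame is Hann-windowed and transformed with a direct DFT, keeping
    bins 0..frame_len/2. A steady tone then shows up as the same strong bin
    in every row, i.e. a line along the time axis.
    """
    rows = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        windowed = [
            x * (0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len))
            for n, x in enumerate(frame)
        ]
        row = []
        for k in range(frame_len // 2 + 1):
            acc = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n, x in enumerate(windowed)
            )
            row.append(abs(acc))
        rows.append(row)
    return rows

fs = 256        # hypothetical sample rate in Hz
tone_hz = 32    # hypothetical steady low-frequency source
signal = [math.sin(2 * math.pi * tone_hz * n / fs) for n in range(512)]

rows = spectrogram(signal, frame_len=64, hop=32)
peak_bins = [row.index(max(row)) for row in rows]
peak_hz = [k * fs / 64 for k in peak_bins]   # each bin spans fs / frame_len Hz
```

Plotting `rows` with time on one axis and frequency bin on the other would give a picture in the spirit of a Lofargram, with the steady tone appearing as a persistent line.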