In cryptography, a hard-core predicate of a one-way function f is a predicate b (i.e., a function whose output is a single bit) that is easy to compute as a function of x but hard to compute given only f(x). In formal terms, there is no probabilistic polynomial-time (PPT) algorithm that computes b(x) from f(x) with probability non-negligibly greater than one half over a uniformly random choice of x. [1] :34 In other words, if x is drawn uniformly at random, then given f(x), any PPT adversary can distinguish the hard-core bit b(x) from a uniformly random bit only with an advantage that is negligible in the length of x. [2]
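
Written as a formula, under notation assumed here rather than taken from the text (A ranges over PPT algorithms, n = |x|, and negl stands for some negligible function), the first condition reads roughly:

$$\Pr_{x \leftarrow \{0,1\}^n}\big[A(f(x)) = b(x)\big] \le \frac{1}{2} + \mathrm{negl}(n).$$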

A hard-core function can be defined similarly. That is, if x is chosen uniformly at random, then given f(x), any PPT algorithm can distinguish the hard-core function value h(x) from a uniformly random string of |h(x)| bits only with an advantage that is negligible in the length of x. [3] [4]

A hard-core predicate captures "in a concentrated sense" the hardness of inverting f.

While a one-way function is hard to invert, there are no guarantees about the feasibility of computing partial information about the preimage x from the image f(x). For instance, while RSA is conjectured to be a one-way function, the Jacobi symbol of the preimage can be easily computed from that of the image. [1] :121
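
The RSA remark can be checked numerically. The sketch below is only an illustration: the toy modulus, the exponent, and the helper names `jacobi`, `rsa`, and `rsa_preserves_jacobi` are assumptions, not part of the source. Because the public exponent is odd and the Jacobi symbol is multiplicative, the symbol of x^e mod N equals the symbol of x.

```python
def jacobi(a: int, n: int) -> int:
    """Jacobi symbol (a/n) for a positive odd n, using quadratic reciprocity."""
    if n <= 0 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer")
    a %= n
    result = 1
    while a != 0:
        # Pull out factors of two; (2/n) = -1 exactly when n = 3 or 5 (mod 8).
        while a % 2 == 0:
            a //= 2
            if n % 8 in (3, 5):
                result = -result
        # Reciprocity: swapping flips the sign when both are 3 (mod 4).
        a, n = n, a
        if a % 4 == 3 and n % 4 == 3:
            result = -result
        a %= n
    return result if n == 1 else 0


# Toy RSA parameters, assumed for illustration only (far too small to be secure).
P, Q = 61, 53
N = P * Q   # 3233
E = 17      # odd public exponent, coprime to (P - 1) * (Q - 1) = 3120


def rsa(x: int) -> int:
    """The RSA function x -> x^E mod N."""
    return pow(x, E, N)


def rsa_preserves_jacobi() -> bool:
    """Check that the Jacobi symbol of every preimage equals that of its image."""
    return all(jacobi(rsa(x), N) == jacobi(x, N) for x in range(N))
```

So one bit of information about x, its Jacobi symbol modulo N, is computable from f(x) alone, even though f itself is conjectured hard to invert at realistic sizes.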

It is clear that if a one-to-one function has a hard-core predicate, then it must be one-way. Oded Goldreich and Leonid Levin (1989) showed how every one-way function can be trivially modified to obtain a one-way function that has a specific hard-core predicate. [5] Let f be a one-way function. Define g(x, r) = (f(x), r), where the length of r is the same as that of x. Let $x_j$ denote the j-th bit of x and $r_j$ the j-th bit of r. Then

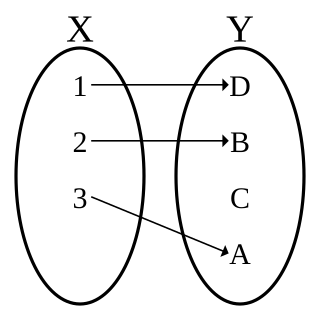

is a hard-core predicate of g. Note that b(x, r) = ⟨x, r⟩, where ⟨·, ·⟩ denotes the standard inner product on the vector space $(\mathbb{Z}_2)^n$. This predicate is hard-core for computational reasons; that is, the difficulty of computing it does not come from g(x, r) losing information in the information-theoretic sense. Rather, if there exists an algorithm that computes this predicate efficiently, then there is another algorithm that can invert f efficiently.
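
A minimal sketch of this predicate, with bit strings packed into Python integers, is given below. The names `gl_predicate` and `recover_from_perfect_oracle` are hypothetical. The second function illustrates only the easiest case of the reduction, an assumed oracle that always returns the correct bit; the actual argument has to cope with predictors that are only slightly better than guessing.

```python
def gl_predicate(x: int, r: int) -> int:
    """Inner product of the bit strings x and r over Z_2 (parity of x AND r)."""
    return bin(x & r).count("1") % 2


def recover_from_perfect_oracle(oracle, n: int) -> int:
    """Recover an n-bit x from an oracle that returns <x, r> for any r.

    Querying the j-th unit vector e_j yields <x, e_j> = x_j directly.
    The perfect oracle is an assumption made for illustration.
    """
    x = 0
    for j in range(n):
        if oracle(1 << j):
            x |= 1 << j
    return x
```

For example, with a hidden `x = 0b1011`, the call `recover_from_perfect_oracle(lambda r: gl_predicate(x, r), 4)` returns `x` again: a perfect predictor for b would reveal every bit of the preimage.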

A similar construction yields a hard-core function with O(log |x|) output bits. Suppose f is a strong one-way function. Define g(x, r) = (f(x), r), where |r| = 2|x|. Choose a length function l(n) = O(log n) such that l(n) ≤ n. Let

$$b_i(x, r) = \sum_j x_j r_{i+j} \bmod 2.$$

Then h(x, r) := $b_1(x, r)\, b_2(x, r) \cdots b_{l(|x|)}(x, r)$ is a hard-core function with output length l(|x|). [6]
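
The following sketch assumes the sliding-window definition b_i(x, r) = Σ_j x_j r_{i+j} mod 2, in which the i-th output bit is the inner product of x with the window r_{i+1}, ..., r_{i+|x|}. The function name `hardcore_function` and the list-of-bits representation are illustrative choices, not taken from the source.

```python
def hardcore_function(x_bits, r_bits, l):
    """Return [b_1, ..., b_l], where b_i = sum_j x_j * r_{i+j} mod 2.

    x_bits has length n, r_bits has length 2n, and 0 <= l <= n, so every
    window r_{i+1..i+n} (1-indexed) lies inside r_bits.
    """
    n = len(x_bits)
    if len(r_bits) != 2 * n:
        raise ValueError("r must be twice as long as x")
    if not 0 <= l <= n:
        raise ValueError("output length must satisfy 0 <= l <= |x|")
    out = []
    for i in range(1, l + 1):
        window = r_bits[i:i + n]  # r_{i+1}, ..., r_{i+n} in 1-indexed notation
        out.append(sum(xj & rj for xj, rj in zip(x_bits, window)) % 2)
    return out
```

For instance, `hardcore_function([1, 0, 1], [0, 1, 1, 0, 1, 0], 2)` evaluates the two windows `[1, 1, 0]` and `[1, 0, 1]` against x.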

It is sometimes the case that an actual bit of the input x is hard-core. For example, each individual bit of the input to the RSA function is a hard-core predicate of RSA, and blocks of O(log |x|) bits of x are indistinguishable from random bit strings in polynomial time (under the assumption that the RSA function is hard to invert). [7]

Hard-core predicates give a way to construct a pseudorandom generator from any one-way permutation. If b is a hard-core predicate of a one-way permutation f, and s is a random seed, then

$$\{ b(f^n(s)) \}_n$$

is a pseudorandom bit sequence, where $f^n$ denotes the n-th iterate of f applied to s, and each round n contributes the hard-core bit b(f^n(s)). [1] :132
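
The iteration can be sketched generically, taking f and b as parameters. The concrete instance below is a toy assumption chosen only so the code runs: modular exponentiation with base 5 modulo 23, which permutes {1, ..., 22}, together with a "upper half" bit in the style of the Blum-Micali generator. At this size nothing is hard to invert, so the output is not pseudorandom in any meaningful sense.

```python
def prg_bits(f, b, seed, count):
    """Return [b(f^1(seed)), ..., b(f^count(seed))] by iterating f."""
    bits = []
    state = seed
    for _ in range(count):
        state = f(state)       # advance to the next iterate f^n(seed)
        bits.append(b(state))  # emit the predicate bit for this round
    return bits


# Toy instance (assumed for illustration; offers no security).
TOY_P = 23  # small prime
TOY_G = 5   # a primitive root modulo 23


def toy_f(x: int) -> int:
    """x -> 5^x mod 23, a permutation of {1, ..., 22}."""
    return pow(TOY_G, x, TOY_P)


def toy_b(x: int) -> int:
    """1 if x lies in the upper half of {1, ..., 22}, else 0."""
    return 1 if x > (TOY_P - 1) // 2 else 0
```

Calling `prg_bits(toy_f, toy_b, 3, 8)` walks the states f(3), f(f(3)), and so on, emitting one bit per round; a real instantiation would replace `toy_f` and `toy_b` with a one-way permutation and one of its hard-core predicates.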

Hard-core predicates of trapdoor one-way permutations (known as trapdoor predicates) can be used to construct semantically secure public-key encryption schemes. [1] :129
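
One common way to use a trapdoor predicate is to encrypt a single bit m as the pair (f(x), b(x) XOR m) for a fresh random x; the holder of the trapdoor inverts f, recomputes b(x), and unmasks m. The sketch below assumes this construction, which may differ from the one in the cited source, and uses toy RSA parameters with the least significant bit as the predicate. All names and numbers are illustrative and far too small to be secure.

```python
import secrets

# Toy RSA trapdoor permutation (assumed parameters, not secure).
N = 61 * 53  # 3233
E = 17       # public exponent
D = 2753     # trapdoor: E * D = 1 (mod 3120)


def f(x: int) -> int:
    """Public direction of the permutation on {0, ..., N - 1}."""
    return pow(x, E, N)


def f_inverse(y: int) -> int:
    """Inversion using the trapdoor D."""
    return pow(y, D, N)


def lsb(x: int) -> int:
    """Least significant bit, used here as the trapdoor predicate."""
    return x & 1


def encrypt_bit(m: int):
    """Encrypt one bit m as (f(x), lsb(x) XOR m) for a fresh random x."""
    x = secrets.randbelow(N)
    return f(x), lsb(x) ^ m


def decrypt_bit(ciphertext) -> int:
    """Recover m by inverting f with the trapdoor and removing the mask."""
    y, masked = ciphertext
    return masked ^ lsb(f_inverse(y))
```

Encryption is randomized, so encrypting the same bit twice usually gives different ciphertexts, while `decrypt_bit(encrypt_bit(m))` always returns `m`.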