Neural networks are a family of machine learning models built on the principles of neuronal organization found in the biological neural networks that make up animal brains.

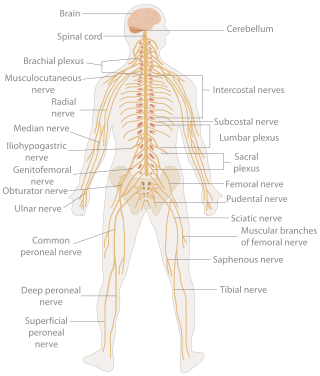

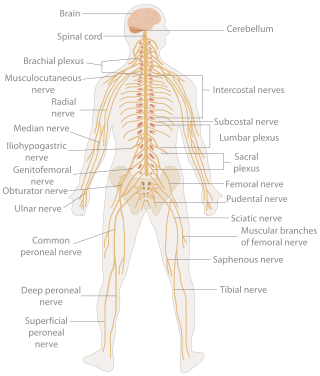

In biology, the nervous system is the highly complex part of an animal that coordinates its actions and sensory information by transmitting signals to and from different parts of its body. The nervous system detects environmental changes that affect the body, then works in tandem with the endocrine system to respond to such events. Nervous tissue first arose in wormlike organisms about 550 to 600 million years ago. In vertebrates, it consists of two main parts, the central nervous system (CNS) and the peripheral nervous system (PNS). The CNS consists of the brain and spinal cord. The PNS consists mainly of nerves, which are enclosed bundles of long fibers, or axons, that connect the CNS to every other part of the body. Nerves that transmit signals from the CNS are called motor or efferent nerves, while those that transmit information from the body to the CNS are called sensory or afferent nerves. Spinal nerves are mixed nerves that serve both functions. The PNS is divided into three subsystems: the somatic, autonomic, and enteric nervous systems. Somatic nerves mediate voluntary movement. The autonomic nervous system is further subdivided into the sympathetic and parasympathetic nervous systems. The sympathetic nervous system is activated in emergencies to mobilize energy, while the parasympathetic nervous system is activated when the organism is in a relaxed state. The enteric nervous system controls the gastrointestinal system. Both the autonomic and enteric nervous systems function involuntarily. Nerves that exit from the cranium are called cranial nerves, while those exiting from the spinal cord are called spinal nerves.

Norse is a demonym for Norsemen, a Medieval North Germanic ethnolinguistic group ancestral to modern Scandinavians, defined as speakers of Old Norse from about the 9th to the 13th centuries.

Computational neuroscience is a branch of neuroscience which employs mathematics, computer science, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system.

Walter Harry Pitts, Jr. was an American logician who worked in the field of computational neuroscience. He proposed landmark theoretical formulations of neural activity and generative processes that influenced diverse fields such as cognitive sciences and psychology, philosophy, neurosciences, computer science, artificial neural networks, cybernetics and artificial intelligence, together with what has come to be known as the generative sciences. He is best remembered for having written, along with Warren McCulloch, a seminal paper titled "A Logical Calculus of the Ideas Immanent in Nervous Activity" (1943). This paper proposed the first mathematical model of a neural network. The unit of this model, a simple formalized neuron, is still the standard of reference in the field of neural networks and is often called a McCulloch–Pitts neuron. Prior to that paper, he formalized his ideas regarding the fundamental steps to building a Turing machine in "The Bulletin of Mathematical Biophysics" in an essay titled "Some observations on the simple neuron circuit".
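
As a rough illustration of the formalized neuron mentioned above, the sketch below assumes the common textbook simplification of a McCulloch–Pitts unit: binary inputs, absolute inhibition, and an output of 1 when the number of active excitatory inputs reaches a threshold. The function name `mp_neuron` and its parameters are illustrative and are not taken from the 1943 paper.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Textbook-style McCulloch-Pitts unit (a simplification, see the text above)."""
    # Absolute inhibition: any active inhibitory input silences the unit.
    if any(inhibitory):
        return 0
    # Otherwise the unit fires when enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


# Logic gates expressed as threshold units over binary inputs.
def AND(a, b):
    return mp_neuron([a, b], [], threshold=2)


def OR(a, b):
    return mp_neuron([a, b], [], threshold=1)


def NOT(a):
    # One inhibitory input; threshold 0 means the unit fires by default.
    return mp_neuron([], [a], threshold=0)
```

Under these assumptions, `AND(1, 1)` and `NOT(0)` both evaluate to 1, while `AND(1, 0)` and `NOT(1)` evaluate to 0, which shows how such units can be composed into logical circuits.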

The olfactory epithelium is a specialized epithelial tissue inside the nasal cavity that is involved in smell. In humans, it measures 5 cm² (0.78 sq in) and lies on the roof of the nasal cavity about 7 cm (2.8 in) above and behind the nostrils. The olfactory epithelium is the part of the olfactory system directly responsible for detecting odors.

Terrence Joseph Sejnowski is the Francis Crick Professor at the Salk Institute for Biological Studies, where he directs the Computational Neurobiology Laboratory and is the director of the Crick-Jacobs Center for Theoretical and Computational Biology. He has performed pioneering research in neural networks and computational neuroscience.

A neural circuit is a population of neurons interconnected by synapses to carry out a specific function when activated. Multiple neural circuits interconnect with one another to form large-scale brain networks.

A neural network, also called a neuronal network, is an interconnected population of neurons. Biological neural networks are studied to understand the organization and functioning of nervous systems.

Neural oscillations, or brainwaves, are rhythmic or repetitive patterns of neural activity in the central nervous system. Neural tissue can generate oscillatory activity in many ways, driven either by mechanisms within individual neurons or by interactions between neurons. In individual neurons, oscillations can appear either as oscillations in membrane potential or as rhythmic patterns of action potentials, which then produce oscillatory activation of post-synaptic neurons. At the level of neural ensembles, synchronized activity of large numbers of neurons can give rise to macroscopic oscillations, which can be observed in an electroencephalogram. Oscillatory activity in groups of neurons generally arises from feedback connections between the neurons that result in the synchronization of their firing patterns. The interaction between neurons can give rise to oscillations at a different frequency than the firing frequency of individual neurons. A well-known example of macroscopic neural oscillations is alpha activity.

Pulse-coupled networks or pulse-coupled neural networks (PCNNs) are neural models proposed by modeling a cat's visual cortex, and developed for high-performance biomimetic image processing.

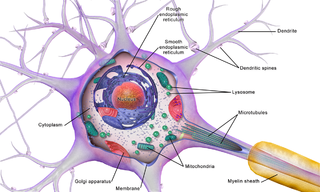

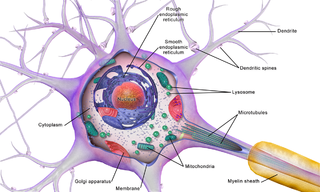

A neuron is one of the primary cell types in the nervous system.

An echo state network (ESN) is a type of reservoir computer that uses a recurrent neural network with a sparsely connected hidden layer. The connectivity and weights of hidden neurons are fixed and randomly assigned. The weights of output neurons can be learned so that the network can produce or reproduce specific temporal patterns. The main interest of this network is that, although its behavior is non-linear, the only weights modified during training are those of the synapses that connect the hidden neurons to the output neurons. Thus, the error function is quadratic with respect to the parameter vector, and setting its derivative to zero reduces training to solving a linear system.
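
The training scheme described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the reservoir size, the `fan_in` sparsity, the row-sum scaling used to keep the spectral radius below 1, the ridge term, and the sine-wave task are all choices made for the example, and every function name is hypothetical.

```python
import math
import random


def make_reservoir(n, fan_in, rng):
    """Sparse, fixed recurrent weights: `fan_in` nonzero entries per row."""
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in rng.sample(range(n), fan_in):
            # Row sums of |w| stay at most 0.9, which bounds the spectral radius below 1.
            W[i][j] = rng.uniform(-0.9, 0.9) / fan_in
    return W


def run_reservoir(W, w_in, inputs):
    """Drive the fixed reservoir with a scalar input sequence; return all states."""
    n = len(W)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(w_in[i] * u + sum(W[i][j] * x[j] for j in range(n)))
             for i in range(n)]
        states.append(x)
    return states


def solve(A, b):
    """Gaussian elimination with partial pivoting for A w = b."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][k] * w[k] for k in range(r + 1, n))) / M[r][r]
    return w


def train_readout(states, targets, ridge=1e-4):
    """Only the readout is trained: a quadratic error gives linear normal equations."""
    n = len(states[0])
    A = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(n)]
    return solve(A, b)


rng = random.Random(0)
n = 20
W = make_reservoir(n, fan_in=3, rng=rng)        # fixed, never trained
w_in = [rng.uniform(-1, 1) for _ in range(n)]   # fixed input weights

# Toy task (an assumption for this sketch): predict the next sample of a sine wave.
signal = [math.sin(0.2 * t) for t in range(301)]
states = run_reservoir(W, w_in, signal[:-1])
targets = signal[1:]
w_out = train_readout(states, targets)

preds = [sum(w * s for w, s in zip(w_out, x)) for x in states]
mse = sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)
baseline = sum(y ** 2 for y in targets) / len(targets)  # error of always predicting 0
```

Because only `w_out` is fitted, training reduces to one linear solve. Comparing `mse` with `baseline` gives a quick check that the readout has learned something from the reservoir states.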

Spiking neural networks (SNNs) are artificial neural networks (ANNs) that more closely mimic natural neural networks. In addition to neuronal and synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that neurons in the SNN do not transmit information at each propagation cycle, but rather transmit information only when a membrane potential (an intrinsic quality of the neuron related to its membrane electrical charge) reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires and generates a signal that travels to other neurons, which in turn increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a spiking neuron model.
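
The threshold-and-fire behavior described above can be illustrated with a single neuron. The sketch below assumes a discrete-time leaky integrate-and-fire model, one common spiking neuron model that the text does not name. The function `simulate_lif` and the parameters `leak`, `threshold`, and `v_reset` are illustrative choices.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron; returns the spike times."""
    v = v_reset
    spikes = []
    for t, current in enumerate(input_current):
        # The membrane potential decays toward zero and integrates the input.
        v = leak * v + current
        if v >= threshold:
            spikes.append(t)  # the neuron fires at the threshold crossing
            v = v_reset       # and its potential is reset
    return spikes


# A weak input settles at 0.05 / (1 - 0.9) = 0.5, below the threshold: no spikes.
weak = simulate_lif([0.05] * 50)
# A stronger input crosses the threshold repeatedly and produces a regular spike train.
strong = simulate_lif([0.3] * 50)
```

With these values the neuron stays silent for the weak input and fires every fourth step for the strong one, so information is carried by when spikes occur and not by a value emitted at every step.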

A convolutional neural network (CNN) is a regularized type of feed-forward neural network that learns features by itself via filter (or kernel) optimization. Vanishing and exploding gradients, seen during backpropagation in earlier neural networks, are mitigated by using regularized weights over fewer connections. For example, each neuron in a fully connected layer would require 10,000 weights to process an image of 100 × 100 pixels. With cascaded convolution kernels, however, only 25 shared weights per kernel are required to process 5 × 5 tiles. Higher-layer features are extracted from wider context windows than lower-layer features.
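
The arithmetic in the example above can be checked with a short sketch. It assumes a single-channel image, a stride of 1, and no padding. The helper names and the averaging kernel are hypothetical. The figure of 25 for a 5 × 5 tile corresponds to the 25 entries of one kernel, which are reused at every image position.

```python
def dense_weights_per_neuron(height, width):
    # A fully connected neuron has one weight per input pixel.
    return height * width


def conv_kernel_weights(kernel_size):
    # A convolution kernel reuses the same weights at every image position.
    return kernel_size * kernel_size


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1), as a CNN layer does."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(k) for b in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


image = [[1.0] * 100 for _ in range(100)]      # toy 100 x 100 image
kernel = [[1.0 / 25] * 5 for _ in range(5)]    # hypothetical 5 x 5 averaging filter
feature_map = conv2d_valid(image, kernel)

print(dense_weights_per_neuron(100, 100))  # 10000
print(conv_kernel_weights(5))              # 25
```

Under these assumptions the resulting feature map is 96 × 96, and each of its entries is computed from the same 25 kernel weights.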

Brain cells make up the functional tissue of the brain. The rest of the brain tissue is structural or connective tissue, called the stroma, which includes blood vessels. The two main types of cells in the brain are neurons, also known as nerve cells, and glial cells, also known as neuroglia.