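Bessel functions, first defined by the mathematician Daniel Bernoulli and then generalized by Friedrich Bessel, are canonical solutions y(x) of Bessel's differential equation x²y″ + xy′ + (x² − α²)y = 0, where α is an arbitrary complex number called the order of the Bessel function.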

In the mathematical field of numerical analysis, interpolation is a type of estimation, a method of constructing (finding) new data points within the range of a discrete set of known data points.
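As a minimal sketch of the idea, the simplest case is linear interpolation between two known points. The function name `lerp` and its argument order are illustrative assumptions, not part of any particular library.

```python
def lerp(x0, y0, x1, y1, x):
    """Linearly interpolate between the known points (x0, y0) and (x1, y1) at x."""
    if x1 == x0:
        raise ValueError("x0 and x1 must differ")
    t = (x - x0) / (x1 - x0)   # fraction of the way from x0 to x1
    return y0 + t * (y1 - y0)


# Known data: (1, 10) and (3, 30); estimate the value at x = 2.
print(lerp(1.0, 10.0, 3.0, 30.0, 2.0))  # 20.0
```

For x between x0 and x1 this is interpolation proper; the same formula applied outside that interval would be extrapolation.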

In the mathematical subfield of numerical analysis, a B-spline or basis spline is a spline function that has minimal support with respect to a given degree, smoothness, and domain partition. Any spline function of given degree can be expressed as a linear combination of B-splines of that degree. Cardinal B-splines have knots that are equidistant from each other. B-splines can be used for curve-fitting and numerical differentiation of experimental data.
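One common way to evaluate B-spline basis functions is the Cox-de Boor recursion. The sketch below assumes a non-decreasing knot list and uses the hypothetical name `bspline_basis`; it favors clarity over efficiency.

```python
def bspline_basis(i, p, knots, x):
    """Value at x of the i-th B-spline basis function of degree p (Cox-de Boor recursion)."""
    if p == 0:
        # Degree 0: indicator function of the half-open knot interval.
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left = 0.0
    d1 = knots[i + p] - knots[i]
    if d1 > 0:  # skip terms with zero-width support (repeated knots)
        left = (x - knots[i]) / d1 * bspline_basis(i, p - 1, knots, x)
    right = 0.0
    d2 = knots[i + p + 1] - knots[i + 1]
    if d2 > 0:
        right = (knots[i + p + 1] - x) / d2 * bspline_basis(i + 1, p - 1, knots, x)
    return left + right


# Cardinal (equidistant) knots, quadratic basis functions.
knots = [0, 1, 2, 3, 4, 5]
values = [bspline_basis(i, 2, knots, 2.5) for i in range(3)]
print(values, sum(values))
```

On the interval where all three quadratic basis functions overlap, their values sum to one, which is what makes linear combinations of B-splines behave like weighted averages of the coefficients.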

In numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots of a real-valued function. The most basic version starts with a single-variable function f defined for a real variable x, the function's derivative f ′, and an initial guess x0 for a root of f. If the function satisfies sufficient assumptions and the initial guess is close, then x1 = x0 − f(x0)/f ′(x0) is a better approximation of the root than x0.
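Each Newton step replaces the current guess x by x − f(x)/f ′(x). A minimal sketch of the iteration follows; the function name `newton`, the tolerance, and the iteration cap are illustrative choices, and convergence is only expected when the starting guess is close enough to a simple root.

```python
def newton(f, fprime, x0, tol=1e-12, max_iter=50):
    """Iterate x <- x - f(x)/f'(x) until successive guesses differ by less than tol."""
    x = x0
    for _ in range(max_iter):
        dfx = fprime(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        x_new = x - f(x) / dfx
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Example: the positive root of x^2 - 2, starting from x0 = 1.
root = newton(lambda x: x * x - 2, lambda x: 2 * x, 1.0)
print(root)
```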

In calculus, Taylor's theorem gives an approximation of a k-times differentiable function around a given point by a polynomial of degree k, called the kth-order Taylor polynomial. For a smooth function, the Taylor polynomial is the truncation at the order k of the Taylor series of the function. The first-order Taylor polynomial is the linear approximation of the function, and the second-order Taylor polynomial is often referred to as the quadratic approximation. There are several versions of Taylor's theorem, some giving explicit estimates of the approximation error of the function by its Taylor polynomial.
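As an illustration, the sketch below builds the kth-order Taylor polynomial of the exponential function about 0 and compares the actual error with the bound given by the Lagrange form of the remainder, one of the explicit error estimates mentioned above. The function names are hypothetical, and the choice of exp is only for convenience, since all of its derivatives are again exp.

```python
import math


def taylor_exp(x, k):
    """k-th order Taylor polynomial of exp about 0, evaluated at x."""
    return sum(x ** n / math.factorial(n) for n in range(k + 1))


def lagrange_bound(x, k):
    """Bound on |exp(x) - taylor_exp(x, k)| from the Lagrange remainder.

    The (k+1)-th derivative of exp on the interval between 0 and x
    is at most exp(max(x, 0)).
    """
    return math.exp(max(x, 0.0)) * abs(x) ** (k + 1) / math.factorial(k + 1)


x, k = 1.0, 5
error = abs(math.exp(x) - taylor_exp(x, k))
print(error, lagrange_bound(x, k))  # the error stays below the bound
```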

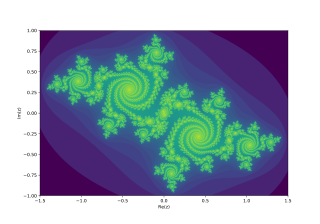

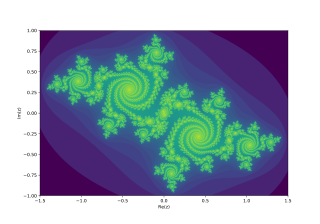

In the context of complex dynamics, a topic of mathematics, the Julia set and the Fatou set are two complementary sets defined from a function. Informally, the Fatou set of the function consists of values with the property that all nearby values behave similarly under repeated iteration of the function, and the Julia set consists of values such that an arbitrarily small perturbation can cause drastic changes in the sequence of iterated function values. Thus the behavior of the function on the Fatou set is "regular", while on the Julia set its behavior is "chaotic".
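A common way to explore these sets numerically is an escape-time test. The sketch below assumes the quadratic family z → z² + c, which is only one choice of function, and uses the hypothetical name `escape_time`. The escape radius 2 is valid for |c| ≤ 2. An orbit that stays bounded for `max_iter` steps is only numerical evidence, not a proof, that the starting point lies in the filled Julia set.

```python
def escape_time(z, c, max_iter=100, radius=2.0):
    """Count iterations of z -> z*z + c before |z| exceeds radius (capped at max_iter)."""
    for n in range(max_iter):
        if abs(z) > radius:
            return n
        z = z * z + c
    return max_iter


# For c = 0 the Julia set is the unit circle: points inside stay bounded,
# points outside escape to infinity.
print(escape_time(0.5 + 0j, 0j))  # 100 (never escapes)
print(escape_time(1.5 + 0j, 0j))  # 1
```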

Texture mapping is a method for defining high frequency detail, surface texture, or color information on a computer-generated graphic or 3D model. The original technique was pioneered by Edwin Catmull in 1974.

In mathematics and signal processing, the Z-transform converts a discrete-time signal, which is a sequence of real or complex numbers, into a complex frequency-domain representation.
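For a finite causal sequence x[0], …, x[N−1], the unilateral Z-transform reduces to the finite sum X(z) = Σ x[n] z⁻ⁿ. The sketch below evaluates that sum directly; the function name `z_transform` is an illustrative assumption, and z must be nonzero whenever the sequence has more than one sample.

```python
def z_transform(x, z):
    """Evaluate X(z) = sum over n of x[n] * z**(-n) for a finite causal sequence."""
    return sum(xn * z ** (-n) for n, xn in enumerate(x))


# x = [1, 2, 3] gives X(z) = 1 + 2/z + 3/z^2.
print(z_transform([1, 2, 3], 2))  # 2.75
```

Evaluating on the unit circle, z = e^{jω}, gives the discrete-time Fourier transform of the sequence at frequency ω.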

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-square distribution are special cases of the gamma distribution. There are two different parameterizations in common use:

- With a shape parameter k and a scale parameter θ.

- With a shape parameter α = k and an inverse scale parameter β = 1/θ, called a rate parameter.
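The two parameterizations describe the same family of densities. A minimal sketch using only the standard library (function names are hypothetical, and both functions assume x > 0 and positive parameters):

```python
import math


def gamma_pdf_scale(x, k, theta):
    """Gamma density with shape k and scale theta, for x > 0."""
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta ** k)


def gamma_pdf_rate(x, alpha, beta):
    """Gamma density with shape alpha and rate beta, for x > 0."""
    return beta ** alpha * x ** (alpha - 1) * math.exp(-beta * x) / math.gamma(alpha)


# Same distribution written both ways: k = alpha = 2, theta = 0.5, beta = 1/theta = 2.
print(gamma_pdf_scale(1.5, 2.0, 0.5), gamma_pdf_rate(1.5, 2.0, 2.0))
```

With shape 1 both forms reduce to the exponential density, which is one of the special cases mentioned above.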

In algebra, the partial fraction decomposition or partial fraction expansion of a rational fraction is an operation that consists of expressing the fraction as a sum of a polynomial and one or several fractions with a simpler denominator.
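A short worked example, chosen here only for illustration, shows both the polynomial part and the simpler fractions. Dividing x³ by x² − 1 gives quotient x and remainder x, and the remaining proper fraction splits over the linear factors of the denominator:

```latex
\frac{x^{3}}{x^{2}-1}
  = x + \frac{x}{x^{2}-1}
  = x + \frac{1}{2(x-1)} + \frac{1}{2(x+1)}
```

The last step can be checked by recombining: the two fractions sum to ((x + 1) + (x − 1)) / (2(x² − 1)) = x / (x² − 1).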

In control theory and signal processing, a linear, time-invariant system is said to be minimum-phase if the system and its inverse are causal and stable.

This article describes periodic points of some complex quadratic maps. A map is a formula for computing a value of a variable based on its own previous value or values; a quadratic map is one that involves the previous value raised to the powers one and two; and a complex map is one in which the variable and the parameters are complex numbers. A periodic point of a map is a value of the variable that occurs repeatedly after intervals of a fixed length.
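As a sketch, take the commonly studied family f(z) = z² + c (an assumption here, since the text above does not fix a particular map). Its period-1 points, the fixed points, satisfy z² + c = z and follow from the quadratic formula. The function name `fixed_points` is hypothetical.

```python
import cmath


def fixed_points(c):
    """Period-1 points of z -> z*z + c, i.e. the roots of z*z - z + c = 0."""
    s = cmath.sqrt(1 - 4 * c)
    return (1 + s) / 2, (1 - s) / 2


c = -2
for z in fixed_points(c):
    # Applying the map once returns the same value.
    print(z, z * z + c)
```

Points of period 2 can be found the same way from f(f(z)) = z after dividing out the fixed-point factor z² − z + c.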

In mathematics, trigonometric interpolation is interpolation with trigonometric polynomials. Interpolation is the process of finding a function which goes through some given data points. For trigonometric interpolation, this function has to be a trigonometric polynomial, that is, a sum of sines and cosines of given periods. This form is especially suited for interpolation of periodic functions.

In signal processing, a comb filter is a filter implemented by adding a delayed version of a signal to itself, causing constructive and destructive interference. The frequency response of a comb filter consists of a series of regularly spaced notches, giving the appearance of a comb.
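A minimal sketch of the feedforward form, y[n] = x[n] + g·x[n − K], is given below. The names `feedforward_comb`, `delay`, and `gain` are illustrative, and samples before the start of the signal are assumed to be zero.

```python
def feedforward_comb(x, delay, gain=1.0):
    """Return y with y[n] = x[n] + gain * x[n - delay], taking x[n] = 0 for n < 0."""
    if delay < 1:
        raise ValueError("delay must be a positive number of samples")
    return [
        xn + (gain * x[n - delay] if n >= delay else 0.0)
        for n, xn in enumerate(x)
    ]


# Impulse response: the impulse plus a scaled copy `delay` samples later.
print(feedforward_comb([1.0, 0.0, 0.0, 0.0, 0.0], delay=2, gain=0.5))

# Destructive interference: with a one-sample delay, a signal alternating in
# sign is cancelled after the first output sample.
print(feedforward_comb([1.0, -1.0, 1.0, -1.0], delay=1))
```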

Stochastic approximation methods are a family of iterative methods typically used for root-finding problems or for optimization problems. The recursive update rules of stochastic approximation methods can be used, among other things, for solving linear systems when the collected data is corrupted by noise, or for approximating extreme values of functions which cannot be computed directly, but only estimated via noisy observations.
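The classic example of such a recursive update is the Robbins–Monro scheme, x ← x − aₙ·G(x), where G(x) is a noisy observation of the function whose root is sought and the step sizes aₙ shrink over time. The sketch below assumes step sizes aₙ = a/n and a toy target g(x) = x − 3 observed with Gaussian noise; the names and the toy problem are illustrative only.

```python
import random


def robbins_monro(noisy_g, x0, steps=20000, a=1.0):
    """Approximate a root of g using only noisy evaluations, with step sizes a/n."""
    x = x0
    for n in range(1, steps + 1):
        x -= (a / n) * noisy_g(x)
    return x


rng = random.Random(0)  # fixed seed so the run is repeatable


def noisy_g(x):
    """Noisy observation of g(x) = x - 3 (true root at x = 3)."""
    return (x - 3.0) + rng.gauss(0.0, 1.0)


print(robbins_monro(noisy_g, x0=0.0))  # close to 3
```

The decaying step size is the key design choice: early steps move quickly toward the root, while later steps average out the noise.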

In the mathematical field of numerical analysis, monotone cubic interpolation is a variant of cubic interpolation that preserves monotonicity of the data set being interpolated.

In mathematics, auxiliary functions are an important construction in transcendental number theory. They are functions that appear in most proofs in this area of mathematics and that have specific, desirable properties, such as taking the value zero for many arguments, or having a zero of high order at some point.

The Jenkins–Traub algorithm for polynomial zeros is a fast globally convergent iterative polynomial root-finding method published in 1970 by Michael A. Jenkins and Joseph F. Traub. They gave two variants, one for general polynomials with complex coefficients, commonly known as the "CPOLY" algorithm, and a more complicated variant for the special case of polynomials with real coefficients, commonly known as the "RPOLY" algorithm. The latter is "practically a standard in black-box polynomial root-finders".

In mathematics, and more specifically in numerical analysis, Householder's methods are a class of root-finding algorithms that are used for functions of one real variable with continuous derivatives up to some order d + 1. Each of these methods is characterized by the number d, which is known as the order of the method. The algorithm is iterative and has order of convergence d + 1.
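For d = 1 the method is Newton's method, and for d = 2 it is Halley's method, which converges cubically near a simple root. A minimal sketch of the d = 2 case follows; the function name `halley`, the tolerance, and the iteration cap are illustrative assumptions.

```python
def halley(f, df, d2f, x0, tol=1e-12, max_iter=50):
    """Householder's method of order d = 2 (Halley's method).

    Update: x <- x - 2 f f' / (2 f'^2 - f f'').
    """
    x = x0
    for _ in range(max_iter):
        fx, dfx, d2fx = f(x), df(x), d2f(x)
        denom = 2 * dfx * dfx - fx * d2fx
        if denom == 0:
            raise ZeroDivisionError("Halley step undefined at this point")
        x_new = x - 2 * fx * dfx / denom
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Example: the real cube root of 2 as the root of x^3 - 2.
root = halley(lambda x: x ** 3 - 2, lambda x: 3 * x ** 2, lambda x: 6 * x, 1.0)
print(root)
```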

Sidi's generalized secant method is a root-finding algorithm, that is, a numerical method for solving equations of the form f(x) = 0. The method was published by Avram Sidi.