MPEG-4 is a group of international standards for the compression of digital audio and visual data, multimedia systems, and file storage formats. It was originally introduced in late 1998 as a group of audio and video coding formats and related technology agreed upon by the ISO/IEC Moving Picture Experts Group (MPEG) under the formal standard ISO/IEC 14496 – Coding of audio-visual objects. Uses of MPEG-4 include compression of audiovisual data for Internet video and CD distribution, voice and broadcast television applications. The MPEG-4 standard was developed by a group led by Touradj Ebrahimi and Fernando Pereira.

MPEG-7 is a multimedia content description standard, standardized in ISO/IEC 15938 and formally called Multimedia Content Description Interface. The description is associated with the content itself, to allow fast and efficient searching for material that is of interest to the user. Thus, MPEG-7 is not a standard that deals with the actual encoding of moving pictures and audio, unlike MPEG-1, MPEG-2 and MPEG-4. It uses XML to store metadata, which can be attached to timecode in order to tag particular events or, for example, to synchronise lyrics to a song.
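As a rough illustration of XML metadata attached to timecode, the sketch below builds a small description with time-stamped events and looks up the events in a time window. The element and attribute names (`Description`, `Timeline`, `Event`, `start`) and the helper functions are hypothetical and chosen for readability; they are not taken from the MPEG-7 schema.

```python
import xml.etree.ElementTree as ET


def build_description(title, events):
    """Build a small XML description with time-coded events.

    Element and attribute names are hypothetical, not the MPEG-7 schema.
    """
    root = ET.Element("Description")
    ET.SubElement(root, "Title").text = title
    timeline = ET.SubElement(root, "Timeline")
    for start, label in events:
        event = ET.SubElement(timeline, "Event", start=start)
        event.text = label
    return root


def events_between(root, begin, end):
    """Return labels of events whose start timecode lies in [begin, end]."""
    # Zero-padded HH:MM:SS strings compare correctly as plain strings.
    return [e.text for e in root.iter("Event") if begin <= e.get("start") <= end]


description = build_description(
    "Example song",
    [
        ("00:00:05", "First lyric line"),
        ("00:00:12", "Second lyric line"),
        ("00:01:30", "Chorus"),
    ],
)
xml_text = ET.tostring(description, encoding="unicode")
print(xml_text)
print(events_between(description, "00:00:00", "00:00:30"))
```

Because the description lives alongside the content rather than inside the encoded stream, a search like `events_between` only has to read the metadata.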

An image retrieval system is a computer system used for browsing, searching and retrieving images from a large database of digital images. Most traditional and common methods of image retrieval add metadata such as captions, keywords, titles or descriptions to the images so that retrieval can be performed over the annotation words. Manual image annotation is time-consuming, laborious and expensive; to address this, there has been a large amount of research on automatic image annotation. Additionally, the growth of social web applications and the semantic web has inspired the development of several web-based image annotation tools.

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.
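The idea of assigning a label to every pixel can be sketched with one very simple approach: threshold the intensities, then give each 4-connected region of same-side pixels its own label. This is only one of many segmentation techniques, and the function name `segment`, the threshold value, and the sample image are assumptions made for illustration.

```python
from collections import deque


def segment(image, threshold):
    """Label 4-connected regions of pixels on the same side of threshold."""
    height, width = len(image), len(image[0])
    labels = [[-1] * width for _ in range(height)]  # -1 means "not labelled yet"
    next_label = 0
    for y in range(height):
        for x in range(width):
            if labels[y][x] != -1:
                continue
            bright = image[y][x] >= threshold
            labels[y][x] = next_label
            queue = deque([(y, x)])
            # Breadth-first flood fill over neighbours sharing the same class.
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (
                        0 <= ny < height
                        and 0 <= nx < width
                        and labels[ny][nx] == -1
                        and (image[ny][nx] >= threshold) == bright
                    ):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels


image = [
    [10, 12, 200, 210],
    [11, 13, 205, 220],
    [9, 14, 15, 215],
    [180, 12, 11, 10],
]
labels = segment(image, threshold=128)
for row in labels:
    print(row)
```

Here the shared characteristic within a label is "same side of the threshold and spatially connected"; practical systems use richer criteria such as colour, texture, or learned features.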

Content-based image retrieval, also known as query by image content (QBIC) and content-based visual information retrieval (CBVIR), is the application of computer vision techniques to the image retrieval problem, that is, the problem of searching for digital images in large databases. Content-based image retrieval is opposed to traditional concept-based approaches.
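A minimal sketch of the content-based idea, assuming each image is reduced to a normalized intensity histogram and candidates are ranked by histogram intersection with the query. The feature choice, the function names, and the toy pixel lists are illustrative assumptions; real systems typically combine colour, texture, shape, or learned descriptors.

```python
def histogram(pixels, bins=4, max_value=256):
    """Normalized intensity histogram of a flat list of pixel values."""
    counts = [0] * bins
    for p in pixels:
        counts[p * bins // max_value] += 1
    total = len(pixels)
    return [c / total for c in counts]


def intersection(h1, h2):
    """Histogram intersection: 1.0 for identical histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def rank(query_pixels, database):
    """Return database image names ordered from most to least similar."""
    q = histogram(query_pixels)
    scored = [(intersection(q, histogram(px)), name) for name, px in database.items()]
    return [name for _, name in sorted(scored, reverse=True)]


# Hypothetical database: image name -> flat list of grey-level pixels.
database = {
    "dark": [5, 20, 40, 60],
    "mixed": [10, 100, 150, 250],
    "bright": [200, 220, 240, 255],
}
ranking = rank([210, 230, 250, 190], database)
print(ranking)
```

No keywords or captions are consulted: the query is itself an image, and similarity is computed from pixel content alone.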

The scale-invariant feature transform (SIFT) is a computer vision algorithm to detect, describe, and match local features in images, invented by David Lowe in 1999. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife and match moving.

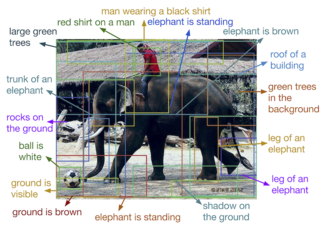

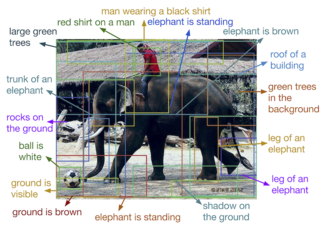

Automatic image annotation is the process by which a computer system automatically assigns metadata in the form of captioning or keywords to a digital image. This application of computer vision techniques is used in image retrieval systems to organize and locate images of interest from a database.

MPEG-4 Part 11, Scene description and application engine, was published as ISO/IEC 14496-11 in 2005. MPEG-4 Part 11 is also known as BIFS, XMT, MPEG-J. It defines:

Digital Item is the basic unit of transaction in the MPEG-21 framework. It is a structured digital object, including a standard representation, identification and metadata.

The following outline is provided as an overview of and topical guide to computer vision:

Multimedia search enables information search using queries in multiple data types, including text and other multimedia formats. Multimedia search can be implemented through multimodal search interfaces, i.e., interfaces that allow users to submit search queries not only as textual requests but also through other media. We can distinguish two methodologies in multimedia search:

MPEG-4 Part 14 or MP4 is a digital multimedia container format most commonly used to store video and audio, but it can also be used to store other data such as subtitles and still images. Like most modern container formats, it allows streaming over the Internet. The only filename extension for MPEG-4 Part 14 files as defined by the specification is .mp4. MPEG-4 Part 14 is a standard specified as a part of MPEG-4.

Computer audition (CA) or machine listening is the general field of study of algorithms and systems for audio interpretation by machines. Since the notion of what it means for a machine to "hear" is very broad and somewhat vague, computer audition attempts to bring together several disciplines that originally dealt with specific problems or had a concrete application in mind. The engineer Paris Smaragdis, interviewed in Technology Review, talks about these systems — "software that uses sound to locate people moving through rooms, monitor machinery for impending breakdowns, or activate traffic cameras to record accidents."

AXMEDIS is a set of European Union digital content standards, initially created as a research project running from 2004 to 2008 partially supported by the European Commission under the Information Society Technologies programme of the Sixth Framework Programme (FP6). It stands for "Automating Production of Cross Media Content for Multi-channel Distribution". Now it is distributed as a framework, and is still being maintained and improved. A large part of the framework is under open source licensing. The AXMEDIS framework includes a set of tools, models, test cases, documents, etc. supporting the production and distribution of cross media content.

In computer vision, the bag-of-words model, sometimes called the bag-of-visual-words model, can be applied to image classification or retrieval by treating image features as words. In document classification, a bag of words is a sparse vector of occurrence counts of words; that is, a sparse histogram over the vocabulary. In computer vision, a bag of visual words is a vector of occurrence counts of a vocabulary of local image features.
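The occurrence-count vector can be sketched as follows, assuming a vocabulary of visual words is already available (in practice it is often obtained by clustering local descriptors, a step omitted here). The 2-D feature vectors and the function names are hypothetical stand-ins for real local descriptors.

```python
def nearest_word(feature, vocabulary):
    """Index of the vocabulary entry closest to feature (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(vocabulary)), key=lambda i: sq_dist(feature, vocabulary[i]))


def bag_of_visual_words(features, vocabulary):
    """Count how many local features are assigned to each visual word."""
    counts = [0] * len(vocabulary)
    for feature in features:
        counts[nearest_word(feature, vocabulary)] += 1
    return counts


# Hypothetical 2-D "descriptors"; real local features have many more dimensions.
vocabulary = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
features = [(0.1, 0.1), (0.9, 0.2), (0.8, -0.1), (0.1, 0.9)]
bow = bag_of_visual_words(features, vocabulary)
print(bow)
```

The resulting vector discards where each feature occurred in the image, just as a bag of words discards word order in a document.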

Object recognition – technology in the field of computer vision for finding and identifying objects in an image or video sequence. Humans recognize a multitude of objects in images with little effort, despite the fact that the image of the objects may vary somewhat in different view points, in many different sizes and scales or even when they are translated or rotated. Objects can even be recognized when they are partially obstructed from view. This task is still a challenge for computer vision systems. Many approaches to the task have been implemented over multiple decades.

The ISO base media file format (ISOBMFF) is a container file format that defines a general structure for files that contain time-based multimedia data such as video and audio. It is standardized in ISO/IEC 14496-12, a.k.a. MPEG-4 Part 12, and was formerly also published as ISO/IEC 15444-12, a.k.a. JPEG 2000 Part 12.
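The general structure is a sequence of boxes, each starting with a 32-bit big-endian size and a four-character type. A minimal sketch of walking the top-level boxes of an in-memory byte string is shown below; the helper names `iter_boxes` and `make_box` and the synthetic sample data are assumptions for illustration, and nested boxes and full validation are not handled.

```python
import struct


def iter_boxes(data):
    """Yield (type, payload) for each top-level box in a bytes object."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # A size of 1 means a 64-bit size follows the type field.
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # A size of 0 means the box extends to the end of the data.
            size = len(data) - offset
        if size < header or offset + size > len(data):
            raise ValueError("malformed box at offset %d" % offset)
        yield box_type.decode("latin-1"), data[offset + header:offset + size]
        offset += size


def make_box(box_type, payload):
    """Build a box with a 32-bit size field (test helper, not part of any API)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic sample: a file-type box followed by an empty free-space box.
sample = make_box(b"ftyp", b"isom" + b"\x00\x00\x02\x00" + b"isommp41") + make_box(b"free", b"")
boxes = list(iter_boxes(sample))
for box_type, payload in boxes:
    print(box_type, len(payload))
```

To inspect a real file, the same function could be applied to the bytes read from disk, although large files would be better processed by seeking rather than loading everything into memory.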

Multimedia information retrieval (MMIR) is a research discipline of computer science that aims at extracting semantic information from multimedia data sources. Data sources include directly perceivable media such as audio, image and video; indirectly perceivable sources such as text, semantic descriptions and biosignals; and non-perceivable sources such as bioinformation, stock prices, etc. The methodology of MMIR can be organized in three groups:

- Methods for the summarization of media content (feature extraction). The result of feature extraction is a description.

- Methods for the filtering of media descriptions.

- Methods for the categorization of media descriptions into classes.
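The three groups above can be pictured as stages of a small pipeline. The sketch below is a toy illustration only: the media items are short lists of numbers, and the chosen features (mean and range), the filtering rule, and the two class names are all assumptions rather than methods prescribed by the field.

```python
def summarize(samples):
    """Summarization (feature extraction): reduce raw samples to a description."""
    mean = sum(samples) / len(samples)
    return {"mean": mean, "range": max(samples) - min(samples)}


def filter_descriptions(descriptions, min_range):
    """Filtering: keep only descriptions whose range is at least min_range."""
    return {name: d for name, d in descriptions.items() if d["range"] >= min_range}


def categorize(description, threshold):
    """Categorization: map a description to one of two hypothetical classes."""
    return "loud" if description["mean"] >= threshold else "quiet"


# Hypothetical media items represented as raw sample values.
media = {
    "clip_a": [1, 2, 3, 2],
    "clip_b": [8, 9, 7, 8],
    "clip_c": [5, 5, 5, 5],
}
descriptions = {name: summarize(samples) for name, samples in media.items()}
kept = filter_descriptions(descriptions, min_range=1)
classes = {name: categorize(d, threshold=5) for name, d in kept.items()}
print(classes)
```

Note that the later stages operate on descriptions, not on the raw media, which is the distinction the list draws.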

A Multimedia database (MMDB) is a collection of related multimedia data. The multimedia data include one or more primary media data types such as text, images, graphic objects, animation sequences, audio and video.

A 3D Content Retrieval system is a computer system for browsing, searching and retrieving three-dimensional digital content from a large database of digital images. The most basic way of doing 3D content retrieval adds descriptive text to 3D content files, such as the content file name, link text, and the web page title, so that related 3D content can be found through text retrieval. Because manually annotating 3D files is inefficient, researchers have investigated ways to automate the annotation process and provide a unified standard for creating text descriptions of 3D content. Moreover, the increase in 3D content has demanded and inspired more advanced ways to retrieve 3D information. Thus, shape matching methods for 3D content retrieval have become popular. Shape matching retrieval is based on techniques that compare and contrast similarities between 3D models.
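One simple way to compare 3D models by shape, sketched here under strong assumptions, is to describe each model by a histogram of pairwise distances between its points (normalized by the largest distance, so that uniform scaling does not matter) and to compare histograms with an L1 distance. This is just one illustrative descriptor, not the method of any particular retrieval system; the function names and the tiny point sets are hypothetical.

```python
import math
from itertools import combinations, product


def shape_descriptor(points, bins=4):
    """Normalized histogram of pairwise point distances, scaled by the maximum."""
    distances = [math.dist(a, b) for a, b in combinations(points, 2)]
    largest = max(distances)
    counts = [0] * bins
    for d in distances:
        # Clamp so that the largest distance falls into the last bin.
        counts[min(int(d / largest * bins), bins - 1)] += 1
    return [c / len(distances) for c in counts]


def shape_distance(points_a, points_b):
    """L1 distance between two shape descriptors (0.0 means identical histograms)."""
    da, db = shape_descriptor(points_a), shape_descriptor(points_b)
    return sum(abs(x - y) for x, y in zip(da, db))


# Hypothetical models given as small point sets.
cube = list(product((0.0, 1.0), repeat=3))
big_cube = [(2 * x, 2 * y, 2 * z) for x, y, z in cube]
line = [(float(i), 0.0, 0.0) for i in range(8)]

print(shape_distance(cube, big_cube))
print(shape_distance(cube, line))
```

In this toy setting the scaled cube matches the original cube, while the line of points is clearly different, without any text annotation being involved.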