Related Research Articles

In statistics, survey sampling describes the process of selecting a sample of elements from a target population to conduct a survey. The term "survey" may refer to many different types or techniques of observation. In survey sampling it most often involves a questionnaire used to measure the characteristics and/or attitudes of people. The different ways of contacting members of a sample once they have been selected are the subject of survey data collection. The purpose of sampling is to reduce the cost and/or the amount of work that it would take to survey the entire target population. A survey that measures the entire target population is called a census. A sample refers to a group or section of a population from which information is to be obtained.

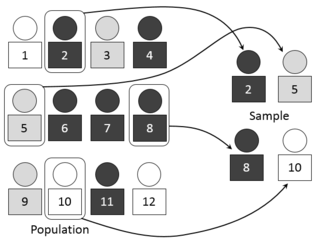

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset or a statistical sample of individuals from within a statistical population to estimate characteristics of the whole population. The subset is meant to reflect the whole population and statisticians attempt to collect samples that are representative of the population. Sampling has lower costs and faster data collection compared to recording data from the entire population, and thus, it can provide insights in cases where it is infeasible to measure an entire population.

In the field of human factors and ergonomics, human reliability is the probability that a human performs a task to a sufficient standard. Reliability of humans can be affected by many factors such as age, physical health, mental state, attitude, emotions, personal propensity for certain mistakes, and cognitive biases.

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability is defined as the probability that a product, system, or service will perform its intended function adequately for a specified period of time, or will operate in a defined environment without failure. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time.

In statistics, inter-rater reliability is the degree of agreement among independent observers who rate, code, or assess the same phenomenon.
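One common way to put a number on this degree of agreement is Cohen's kappa, which corrects the raw share of matching labels for the agreement two raters would reach by chance. The sketch below is only an illustration: the choice of kappa, the function name `cohens_kappa`, and the ratings are assumptions and do not come from the text above.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given identical labels.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: probability that both raters pick the same label
    # if each labelled at random with their own marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical ratings of ten tasks as error-prone ("E") or safe ("S").
rater_a = ["E", "E", "S", "S", "E", "S", "S", "E", "S", "S"]
rater_b = ["E", "S", "S", "S", "E", "S", "E", "E", "S", "S"]
kappa = cohens_kappa(rater_a, rater_b)
print(f"kappa = {kappa:.3f}")
```

Here the raters agree on 8 of 10 items, but about half of that agreement is expected by chance, so kappa is noticeably lower than the raw agreement rate.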

Calibrated probability assessments are subjective probabilities assigned by individuals who have been trained to assess probabilities in a way that historically represents their uncertainty. For example, when a calibrated person says they are "80% confident" in each of 100 predictions they made, they will get about 80 of them correct. Likewise, they will be right 90% of the time they say they are 90% certain, and so on.
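The check described above can be sketched as a small tally: group past predictions by the confidence that was stated, then compare each stated confidence with the fraction that turned out correct. The function name `calibration_table` and the prediction record are hypothetical and serve only to illustrate the arithmetic.

```python
from collections import defaultdict

def calibration_table(predictions):
    """Map each stated confidence to the observed fraction of correct predictions.

    `predictions` is an iterable of (stated_confidence, was_correct) pairs.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for confidence, was_correct in predictions:
        totals[confidence] += 1
        hits[confidence] += int(was_correct)
    return {c: hits[c] / totals[c] for c in sorted(totals)}

# Hypothetical record: 100 predictions at 80% confidence (80 correct)
# and 50 predictions at 90% confidence (45 correct).
record = ([(0.8, True)] * 80 + [(0.8, False)] * 20
          + [(0.9, True)] * 45 + [(0.9, False)] * 5)
table = calibration_table(record)
for stated, observed in table.items():
    print(f"stated {stated:.0%} -> observed {observed:.0%}")
```

A well-calibrated assessor shows observed fractions close to the stated confidences. Large gaps in either direction indicate overconfidence or underconfidence.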

The wisdom of the crowd is the collective opinion of a diverse and independent group of individuals rather than that of a single expert. This process, while not new to the Information Age, has been pushed into the mainstream spotlight by social information sites such as Quora, Reddit, Stack Exchange, Wikipedia, Yahoo! Answers, and other web resources which rely on collective human knowledge. An explanation for this phenomenon is that there is idiosyncratic noise associated with each individual judgment, and taking the average over a large number of responses will go some way toward canceling the effect of this noise.
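The noise-cancelling explanation can be illustrated with a small simulation. This is a sketch under stated assumptions: each judgment is modelled as the true value plus independent, zero-mean Gaussian noise, and the function name, the true value, and the noise level are made up for the example.

```python
import random
import statistics

def crowd_vs_individual(true_value, n_people, noise_sd, seed=0):
    """Compare the error of the averaged guess with the typical individual error."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # Assumption: independent, unbiased noise around the true value.
    guesses = [true_value + rng.gauss(0, noise_sd) for _ in range(n_people)]
    crowd_error = abs(statistics.fmean(guesses) - true_value)
    individual_errors = [abs(g - true_value) for g in guesses]
    return crowd_error, statistics.fmean(individual_errors)

crowd_error, typical_individual_error = crowd_vs_individual(
    true_value=100.0, n_people=1000, noise_sd=10.0
)
print(f"error of the crowd average:  {crowd_error:.2f}")
print(f"mean individual error:       {typical_individual_error:.2f}")
```

The averaging only helps to the extent that individual errors are independent and not biased in the same direction. A shared bias would survive the average unchanged.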

Group decision-making is a situation faced when individuals collectively make a choice from the alternatives before them. The decision is then no longer attributable to any single individual who is a member of the group. This is because all the individuals and social group processes such as social influence contribute to the outcome. The decisions made by groups are often different from those made by individuals. In workplace settings, collaborative decision-making is one of the most successful models to generate buy-in from other stakeholders, build consensus, and encourage creativity. According to the idea of synergy, decisions made collectively also tend to be more effective than decisions made by a single individual. In this vein, certain collaborative arrangements have the potential to generate better net performance outcomes than individuals acting on their own. Under normal everyday conditions, collaborative or group decision-making would often be preferred and would generate more benefits than individual decision-making when there is the time for proper deliberation, discussion, and dialogue. This can be achieved through the use of committees, teams, groups, partnerships, or other collaborative social processes.

Human Cognitive Reliability Correlation (HCR) is a technique used in the field of Human Reliability Assessment (HRA) to evaluate the probability of a human error occurring throughout the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore lead to an improvement in the overall levels of safety. There exist three primary reasons for conducting an HRA: error identification, error quantification and error reduction. As there exist a number of techniques used for such purposes, they can be split into one of two classifications: first generation techniques and second generation techniques. First generation techniques work on the basis of the simple dichotomy of ‘fits/doesn’t fit’ in matching the error situation in context with related error identification and quantification, whereas second generation techniques are more theory based in their assessment and quantification of errors. HRA techniques have been utilised in a range of industries including healthcare, engineering, nuclear, transportation and the business sector; each technique has varying uses within different disciplines.

Tecnica Empirica Stima Errori Operatori (TESEO) is a technique used in the field of Human Reliability Assessment (HRA) to evaluate the probability of a human error occurring throughout the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore lead to an improvement in the overall levels of safety. There exist three primary reasons for conducting an HRA: error identification, error quantification and error reduction. As there exist a number of techniques used for such purposes, they can be split into one of two classifications: first generation techniques and second generation techniques. First generation techniques work on the basis of the simple dichotomy of ‘fits/doesn’t fit’ in matching the error situation in context with related error identification and quantification, whereas second generation techniques are more theory based in their assessment and quantification of errors. HRA techniques have been utilised in a range of industries including healthcare, engineering, nuclear, transportation and the business sector; each technique has varying uses within different disciplines.

The Technique for Human Error-Rate Prediction (THERP) is a technique used in the field of Human Reliability Assessment (HRA) to evaluate the probability of human error occurring throughout the completion of a task. From such an analysis, corrective measures can be taken to reduce the likelihood of errors occurring within a system. The overall goal of THERP is to apply and document probabilistic methodological analyses to increase safety during a given process. THERP is used in fields such as error identification, error quantification and error reduction.

Human error assessment and reduction technique (HEART) is a technique used in the field of human reliability assessment (HRA) to evaluate the probability of a human error occurring throughout the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore lead to an improvement in the overall levels of safety. There exist three primary reasons for conducting an HRA: error identification, error quantification, and error reduction. As there exist a number of techniques used for such purposes, they can be split into one of two classifications: first-generation techniques and second-generation techniques. First-generation techniques work on the basis of the simple dichotomy of 'fits/doesn't fit' in matching the error situation in context with related error identification and quantification, whereas second-generation techniques are more theory based in their assessment and quantification of errors. HRA techniques have been used in a range of industries including healthcare, engineering, nuclear, transportation, and business sectors. Each technique has varying uses within different disciplines.

Success Likelihood Index Method (SLIM) is a technique used in the field of Human Reliability Assessment (HRA) to evaluate the probability of a human error occurring throughout the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore lead to an improvement in the overall levels of safety. There exist three primary reasons for conducting an HRA: error identification, error quantification and error reduction. As there exist a number of techniques used for such purposes, they can be split into one of two classifications: first generation techniques and second generation techniques. First generation techniques work on the basis of the simple dichotomy of ‘fits/doesn’t fit’ in matching the error situation in context with related error identification and quantification, whereas second generation techniques are more theory based in their assessment and quantification of errors. HRA techniques have been utilised in a range of industries including healthcare, engineering, nuclear, transportation and the business sector; each technique has varying uses within different disciplines.

Influence Diagrams Approach (IDA) is a technique used in the field of Human Reliability Assessment (HRA) to evaluate the probability of a human error occurring throughout the completion of a specific task. From such analyses, measures can then be taken to reduce the likelihood of errors occurring within a system and therefore lead to an improvement in the overall levels of safety. There exist three primary reasons for conducting an HRA: error identification, error quantification and error reduction. As there exist a number of techniques used for such purposes, they can be split into one of two classifications: first generation techniques and second generation techniques. First generation techniques work on the basis of the simple dichotomy of ‘fits/doesn’t fit’ in matching the error situation in context with related error identification and quantification, whereas second generation techniques are more theory based in their assessment and quantification of errors. HRA techniques have been utilised in a range of industries including healthcare, engineering, nuclear, transportation and the business sector; each technique has varying uses within different disciplines.

A Technique for Human Event Analysis (ATHEANA) is a technique used in the field of human reliability assessment (HRA). The purpose of ATHEANA is to evaluate the probability of human error while performing a specific task. From such analyses, preventative measures can then be taken to reduce human errors within a system and therefore lead to improvements in the overall level of safety.

In simple terms, risk is the possibility of something bad happening. Risk involves uncertainty about the effects/implications of an activity with respect to something that humans value, often focusing on negative, undesirable consequences. Many different definitions have been proposed. One international standard definition of risk is the "effect of uncertainty on objectives".

Risk management tools allow uncertainty to be addressed by identifying risks, generating metrics, parameterizing, prioritizing, developing responses, and tracking risk. These activities may be difficult to track without tools and techniques, documentation, and information systems.

Cultural consensus theory is an approach to information pooling which supports a framework for the measurement and evaluation of beliefs as cultural, that is, shared to some extent by a group of individuals. Cultural consensus models guide the aggregation of responses from individuals to estimate (1) the culturally appropriate answers to a series of related questions and (2) individual competence in answering those questions. The theory is applicable when there is sufficient agreement across people to assume that a single set of answers exists. The agreement between pairs of individuals is used to estimate individual cultural competence. Answers are estimated by weighting responses of individuals by their competence and then combining responses.
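The final step, weighting responses by competence and combining them, can be sketched as a weighted vote. This is a simplification and not the full cultural consensus model: the competence scores are taken as given instead of being estimated from pairwise agreement, and the respondents, answers, and function name are hypothetical.

```python
def weighted_consensus(responses, competence):
    """Pick, for each question, the answer with the largest total competence weight.

    responses:  dict mapping respondent -> list of answers (one per question)
    competence: dict mapping respondent -> non-negative weight (assumed known here)
    """
    n_questions = len(next(iter(responses.values())))
    consensus = []
    for q in range(n_questions):
        scores = {}
        for person, answers in responses.items():
            answer = answers[q]
            scores[answer] = scores.get(answer, 0.0) + competence[person]
        consensus.append(max(scores, key=scores.get))
    return consensus

# Hypothetical true/false answers from three respondents to three questions.
responses = {
    "ana": ["T", "F", "T"],
    "ben": ["T", "T", "F"],
    "cy":  ["F", "T", "F"],
}
# Hypothetical competence scores; a real analysis would estimate these.
competence = {"ana": 0.9, "ben": 0.3, "cy": 0.4}
consensus = weighted_consensus(responses, competence)
print(consensus)
```

With these weights the highly competent respondent outweighs the other two on the second and third questions, so the weighted answers differ from a simple majority vote.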

The curse of knowledge is a cognitive bias that occurs when an individual who is communicating with others assumes that they have information that is in fact available only to the communicator, in effect assuming that everyone shares the same background and understanding. Some authors also call this bias the curse of expertise.

Human factors are the physical or cognitive properties of individuals, or social behavior which is specific to humans, and which influence functioning of technological systems as well as human-environment equilibria. The safety of underwater diving operations can be improved by reducing the frequency of human error and the consequences when it does occur. Human error can be defined as an individual's deviation from acceptable or desirable practice which culminates in undesirable or unexpected results. Human factors include both the non-technical skills that enhance safety and the non-technical factors that contribute to undesirable incidents that put the diver at risk.

[Safety is] An active, adaptive process which involves making sense of the task in the context of the environment to successfully achieve explicit and implied goals, with the expectation that no harm or damage will occur. – G. Lock, 2022

Dive safety is primarily a function of four factors: the environment, equipment, individual diver performance and dive team performance. The water is a harsh and alien environment which can impose severe physical and psychological stress on a diver. The remaining factors must be controlled and coordinated so the diver can overcome the stresses imposed by the underwater environment and work safely. Diving equipment is crucial because it provides life support to the diver, but the majority of dive accidents are caused by individual diver panic and an associated degradation of the individual diver's performance. – M.A. Blumenberg, 1996

References

- Humphreys, P. (1995). Human Reliability Assessor's Guide. Human Factors in Reliability Group.

- Dalkey, N. & Helmer, O. (1963). An experimental application of the Delphi method to the use of experts. Management Science, 9(3), 458-467.

- Linstone, H.A. & Turoff, M. (1978). The Delphi Method: Techniques and Applications. Addison-Wesley, London.

- Kirwan (1994). Practical Guide to Human Reliability Assessment. CRC Press.

- Eurocontrol Experimental Centre (2004). Review of Techniques to Support the EATMP Safety Assessment Methodology. EuroControl, Vol 1.