In mathematics, a symmetric matrix $M$ with real entries is positive-definite if the real number $z^{\mathsf{T}} M z$ is positive for every nonzero real column vector $z$, where $z^{\mathsf{T}}$ is the transpose of $z$. More generally, a Hermitian matrix $M$ is positive-definite if the real number $z^{*} M z$ is positive for every nonzero complex column vector $z$, where $z^{*}$ denotes the conjugate transpose of $z$.
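As a rough illustration of the real symmetric case, the sketch below tests positive-definiteness by attempting a Cholesky factorization, which succeeds with strictly positive pivots exactly when the matrix is positive-definite. The function names `is_positive_definite` and `quadratic_form` are hypothetical, and the code assumes the input is a symmetric matrix given as a list of rows.

```python
import math


def quadratic_form(m, z):
    """Evaluate z^T M z for a square matrix m (list of rows) and vector z."""
    n = len(z)
    return sum(z[i] * m[i][j] * z[j] for i in range(n) for j in range(n))


def is_positive_definite(m):
    """Return True if the symmetric real matrix m is positive-definite.

    Assumption: m is symmetric. The test tries to build the Cholesky
    factor L with m = L L^T and fails as soon as a pivot is not positive.
    """
    n = len(m)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                pivot = m[i][i] - s
                if pivot <= 0.0:
                    return False
                lower[i][j] = math.sqrt(pivot)
            else:
                lower[i][j] = (m[i][j] - s) / lower[j][j]
    return True
```

For example, `is_positive_definite([[2, -1], [-1, 2]])` holds, while `[[1, 2], [2, 1]]` fails because the vector (1, -1) gives a negative quadratic form.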

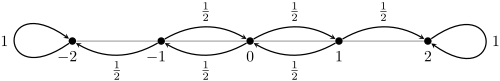

A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs now." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). It is named after the Russian mathematician Andrey Markov.

In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices A and B is denoted as AB.
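The dimension rule can be sketched in a few lines. The helper name `matmul` is hypothetical, and matrices are assumed to be non-empty lists of equal-length rows.

```python
def matmul(a, b):
    """Return the matrix product AB of two matrices given as lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("columns of the first matrix must equal rows of the second")
    inner = len(b)
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


# A 2x3 matrix times a 3x2 matrix gives a 2x2 matrix.
product = matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]])
```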

In statistics and control theory, Kalman filtering, also known as linear quadratic estimation (LQE), is an algorithm that uses a series of measurements observed over time, including statistical noise and other inaccuracies, and produces estimates of unknown variables that tend to be more accurate than those based on a single measurement alone, by estimating a joint probability distribution over the variables for each time step. The filter is named after Rudolf E. Kálmán, who was one of the primary developers of its theory.
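A minimal scalar sketch may help make the predict and update cycle concrete. It assumes a one-dimensional random-walk state model with process noise variance `q` and measurement noise variance `r`; the function name `kalman_1d` and all parameter names are illustrative choices, not part of any particular library.

```python
def kalman_1d(measurements, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter for the assumed model x_k = x_{k-1} + w, z_k = x_k + v.

    q is the variance of w, r the variance of v, and (x0, p0) the initial
    estimate and its variance. Returns a list of (estimate, variance) pairs.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state estimate is unchanged, its uncertainty grows by q.
        p = p + q
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p / (p + r)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append((x, p))
    return estimates


estimates = kalman_1d([5.0, 5.0, 5.0, 5.0], q=0.0, r=1.0)
```

With each additional measurement the estimate moves toward the measured value and its variance shrinks, which is the sense in which the filter improves on a single measurement.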

In probability theory and statistics, a covariance matrix is a square matrix giving the covariance between each pair of elements of a given random vector.
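In practice the covariance matrix is usually estimated from data. The sketch below computes the sample covariance matrix with the unbiased $n - 1$ denominator; the name `covariance_matrix` is hypothetical and at least two observations are assumed.

```python
def covariance_matrix(samples):
    """Sample covariance matrix of observations given as rows of equal length.

    Entry (i, j) estimates the covariance between components i and j,
    using the n - 1 denominator (assumes at least two observations).
    """
    n = len(samples)
    d = len(samples[0])
    means = [sum(row[j] for row in samples) / n for j in range(d)]
    return [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in samples) / (n - 1)
            for j in range(d)
        ]
        for i in range(d)
    ]


cov = covariance_matrix([[1, 2], [2, 4], [3, 6]])
```

The diagonal entries are the variances of the individual components, and the matrix is symmetric by construction.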

This article contains examples of Markov chains and Markov processes in action.

In mathematics, a stochastic matrix is a square matrix used to describe the transitions of a Markov chain. Each of its entries is a nonnegative real number representing a probability. It is also called a probability matrix, transition matrix, substitution matrix, or Markov matrix. The stochastic matrix was first developed by Andrey Markov at the beginning of the 20th century, and has found use throughout a wide variety of scientific fields, including probability theory, statistics, mathematical finance and linear algebra, as well as computer science and population genetics. There are several different definitions and types of stochastic matrices:

In linear algebra, a QR decomposition, also known as a QR factorization or QU factorization, is a decomposition of a matrix A into a product A = QR of a matrix Q with orthonormal columns (an orthogonal matrix when Q is square) and an upper triangular matrix R. QR decomposition is often used to solve the linear least squares (LLS) problem and is the basis for a particular eigenvalue algorithm, the QR algorithm.
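One way to compute such a factorization is the modified Gram-Schmidt process, sketched below. This is only one of several methods (Householder reflections are generally preferred numerically), it returns the "thin" factorization, and it assumes the columns of A are linearly independent. The name `qr_gram_schmidt` is hypothetical.

```python
import math


def qr_gram_schmidt(a):
    """Thin QR factorization of an m x n matrix a (list of rows), m >= n.

    Returns (q, r) where q is m x n with orthonormal columns and r is
    n x n upper triangular, so that a = q r. Assumes full column rank.
    """
    m, n = len(a), len(a[0])
    columns = [[a[i][j] for i in range(m)] for j in range(n)]
    q_cols = []
    r = [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = columns[j][:]
        # Remove the components along the orthonormal vectors found so far.
        for i, q in enumerate(q_cols):
            r[i][j] = sum(q[k] * v[k] for k in range(m))
            v = [v[k] - r[i][j] * q[k] for k in range(m)]
        r[j][j] = math.sqrt(sum(x * x for x in v))
        if r[j][j] == 0.0:
            raise ValueError("columns are linearly dependent")
        q_cols.append([x / r[j][j] for x in v])
    q = [[q_cols[j][i] for j in range(n)] for i in range(m)]
    return q, r
```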

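In linear algebra, a rotation matrix is a transformation matrix that is used to perform a rotation in Euclidean space. For example, under the usual convention for column vectors, the matrix $R = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$ rotates points in the xy-plane counterclockwise through an angle $\theta$ about the origin.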
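As a small illustration, the standard two-dimensional counterclockwise rotation can be sketched as follows. The convention (column vectors, angle in radians, counterclockwise positive) is an assumption made here, and the names `rotation_matrix` and `rotate` are hypothetical.

```python
import math


def rotation_matrix(theta):
    """2x2 matrix rotating column vectors counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def rotate(point, theta):
    """Apply the rotation to a point (x, y) and return the rotated point."""
    r = rotation_matrix(theta)
    x, y = point
    return (r[0][0] * x + r[0][1] * y, r[1][0] * x + r[1][1] * y)


# Rotating the unit vector along x by a quarter turn lands on the y axis,
# up to floating-point rounding.
quarter_turn = rotate((1.0, 0.0), math.pi / 2)
```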

A continuous-time Markov chain (CTMC) is a continuous stochastic process in which, for each state, the process will change state according to an exponential random variable and then move to a different state as specified by the probabilities of a stochastic matrix. An equivalent formulation describes the process as changing state according to the least value of a set of exponential random variables, one for each possible state it can move to, with the parameters determined by the current state.
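The first formulation translates directly into a simulation loop: hold in the current state for an exponentially distributed time, then jump according to a row of the stochastic matrix. The sketch below assumes every state has a strictly positive exit rate and that the jump matrix has zeros on its diagonal; `simulate_ctmc` and its parameters are hypothetical names.

```python
import random


def simulate_ctmc(rates, jump_probs, start, t_end, rng):
    """Simulate one path of a CTMC up to time t_end.

    rates[i] is the exit rate of state i (assumed > 0), jump_probs[i][j]
    is the probability of moving from i to j at a jump (assumed to have a
    zero diagonal), and rng is a random.Random instance.
    Returns a list of (jump_time, new_state) pairs starting with (0.0, start).
    """
    t, state = 0.0, start
    path = [(0.0, start)]
    states = range(len(rates))
    while True:
        # Holding time in the current state is exponential with its exit rate.
        t += rng.expovariate(rates[state])
        if t >= t_end:
            return path
        # Choose the next state from the corresponding row of the jump matrix.
        state = rng.choices(states, weights=jump_probs[state])[0]
        path.append((t, state))


path = simulate_ctmc(
    rates=[1.0, 2.0, 3.0],
    jump_probs=[[0.0, 0.5, 0.5], [1.0, 0.0, 0.0], [0.5, 0.5, 0.0]],
    start=0,
    t_end=10.0,
    rng=random.Random(0),
)
```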

In linear algebra, it is often important to know which vectors have their directions unchanged by a given linear transformation. An eigenvector or characteristic vector is such a vector. Thus an eigenvector $\mathbf{v}$ of a linear transformation $T$ is scaled by a constant factor $\lambda$ when the linear transformation is applied to it: $T\mathbf{v} = \lambda \mathbf{v}$. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor $\lambda$.
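Power iteration is one simple way to approximate an eigenvector numerically, sketched here as an illustration rather than a general-purpose solver. It assumes the matrix has a single eigenvalue of largest magnitude and that the starting vector is not orthogonal to the corresponding eigenvector; the name `power_iteration` is hypothetical.

```python
import math


def power_iteration(a, start, iterations=200):
    """Approximate the dominant eigenvalue and a unit eigenvector of a.

    a is a square matrix (list of rows) and start a nonzero starting vector.
    Assumes a unique eigenvalue of largest magnitude.
    """
    n = len(a)
    v = list(start)
    for _ in range(iterations):
        w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T A v for the unit vector v estimates the eigenvalue.
    av = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(v[i] * av[i] for i in range(n))
    return eigenvalue, v


eigenvalue, eigenvector = power_iteration([[2.0, 1.0], [1.0, 2.0]], start=[1.0, 0.0])
```

For this matrix the returned vector is (approximately) a multiple of (1, 1), and applying the matrix to it scales it by the returned eigenvalue.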

In queueing theory, a discipline within the mathematical theory of probability, a Jackson network is a class of queueing network where the equilibrium distribution is particularly simple to compute as the network has a product-form solution. It was the first significant development in the theory of networks of queues, and generalising and applying the ideas of the theorem to search for similar product-form solutions in other networks has been the subject of much research, including ideas used in the development of the Internet. The networks were first identified by James R. Jackson, and his paper was reprinted in the journal Management Science's "Ten Most Influential Titles of Management Science's First Fifty Years."

In probability, a discrete-time Markov chain (DTMC) is a sequence of random variables, known as a stochastic process, in which the value of the next variable depends only on the value of the current variable, and not any variables in the past. For instance, a machine may have two states, A and E. When it is in state A, there is a 40% chance of it moving to state E and a 60% chance of it remaining in state A. When it is in state E, there is a 70% chance of it moving to A and a 30% chance of it staying in E. The sequence of states of the machine is a Markov chain. If we denote the chain by $(X_0, X_1, X_2, \ldots)$ then $X_0$ is the state which the machine starts in and $X_{10}$ is the random variable describing its state after 10 transitions. The process continues forever, indexed by the natural numbers.
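The two-state machine can be written as a transition matrix whose rows are the current state (A, then E) and whose columns are the next state. The sketch below propagates a starting distribution through that matrix; the helper names `step` and `distribution_after` are hypothetical, and starting in state A is an assumption made for the example.

```python
# Transition probabilities from the example: rows and columns ordered A, E.
P = [
    [0.6, 0.4],  # from A: stay in A with 0.6, move to E with 0.4
    [0.7, 0.3],  # from E: move to A with 0.7, stay in E with 0.3
]


def step(dist, p):
    """Advance a row distribution by one transition: dist times p."""
    n = len(p)
    return [sum(dist[i] * p[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist, p, steps):
    """Distribution of the chain after the given number of transitions."""
    for _ in range(steps):
        dist = step(dist, p)
    return dist


# Distribution of the state after 10 transitions, assuming a start in A.
after_ten = distribution_after([1.0, 0.0], P, 10)
```

Solving $\pi P = \pi$ by hand gives the stationary distribution $(7/11, 4/11)$, and after 10 transitions the computed distribution is already very close to it.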

A phase-type distribution is a probability distribution constructed by a convolution or mixture of exponential distributions. It results from a system of one or more inter-related Poisson processes occurring in sequence, or phases. The sequence in which each of the phases occurs may itself be a stochastic process. The distribution can be represented by a random variable describing the time until absorption of a Markov process with one absorbing state. Each of the states of the Markov process represents one of the phases.

In statistical mechanics, the Zimm–Bragg model is a helix-coil transition model that describes helix-coil transitions of macromolecules, usually polymer chains. Most models provide a reasonable approximation of the fractional helicity of a given polypeptide; the Zimm–Bragg model differs by incorporating the ease of propagation (self-replication) with respect to nucleation. It is named for co-discoverers Bruno H. Zimm and J. K. Bragg.

A number of different Markov models of DNA sequence evolution have been proposed. These substitution models differ in terms of the parameters used to describe the rates at which one nucleotide replaces another during evolution. These models are frequently used in molecular phylogenetic analyses. In particular, they are used during the calculation of likelihood of a tree and they are used to estimate the evolutionary distance between sequences from the observed differences between the sequences.
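As an illustration of the distance calculation, the sketch below uses the Jukes-Cantor (JC69) model, which is chosen here as an assumption because the text names no specific model. Under JC69 all substitutions occur at the same rate, and the expected number of substitutions per site is $d = -\tfrac{3}{4}\ln\left(1 - \tfrac{4}{3}p\right)$, where $p$ is the observed proportion of differing sites. The function name `jc69_distance` is hypothetical, and the sequences are assumed to be aligned, gap-free, and of equal length.

```python
import math


def jc69_distance(seq_a, seq_b):
    """Jukes-Cantor distance between two aligned, equal-length DNA sequences.

    p is the observed proportion of differing sites; the correction
    accounts for multiple substitutions at the same site.
    """
    if len(seq_a) != len(seq_b) or not seq_a:
        raise ValueError("sequences must be non-empty and of equal length")
    differences = sum(1 for x, y in zip(seq_a, seq_b) if x != y)
    p = differences / len(seq_a)
    if p >= 0.75:
        raise ValueError("distance is undefined when p >= 3/4")
    return -0.75 * math.log(1.0 - 4.0 * p / 3.0)


distance = jc69_distance("ACGTACGTAC", "ACGTACGTAA")
```

The corrected distance is always at least as large as the raw proportion of differences, since some substitutions are hidden by later changes at the same site.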

In quantum computing, quantum finite automata (QFA) or quantum state machines are a quantum analog of probabilistic automata or a Markov decision process. They provide a mathematical abstraction of real-world quantum computers. Several types of automata may be defined, including measure-once and measure-many automata. Quantum finite automata can also be understood as the quantization of subshifts of finite type, or as a quantization of Markov chains. QFAs are, in turn, special cases of geometric finite automata or topological finite automata.

The discrete phase-type distribution is a probability distribution that results from a system of one or more inter-related geometric distributions occurring in sequence, or phases. The sequence in which each of the phases occurs may itself be a stochastic process. The distribution can be represented by a random variable describing the time until absorption of an absorbing Markov chain with one absorbing state. Each of the states of the Markov chain represents one of the phases.
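The absorption-time description gives a direct formula for the probability mass function. If $\tau$ is the initial distribution over the transient phases and $T$ the matrix of transition probabilities among them, then $P(K = k) = \tau\, T^{k-1}\, \mathbf{t}_0$, where $\mathbf{t}_0 = (I - T)\mathbf{1}$ holds the per-phase absorption probabilities. The sketch below evaluates this; the name `discrete_phase_type_pmf` is hypothetical and the chain is assumed to start in a transient phase.

```python
def discrete_phase_type_pmf(tau, t, k):
    """P(K = k) for a discrete phase-type distribution, k >= 1.

    tau is the initial distribution over transient phases and t the
    sub-stochastic matrix of transitions among them (list of rows).
    """
    n = len(t)
    # Probability of being absorbed in one step from each phase.
    exit_probs = [1.0 - sum(row) for row in t]
    vec = list(tau)
    # Stay among the transient phases for k - 1 steps ...
    for _ in range(k - 1):
        vec = [sum(vec[i] * t[i][j] for i in range(n)) for j in range(n)]
    # ... then get absorbed on step k.
    return sum(vec[i] * exit_probs[i] for i in range(n))


# Two geometric phases in sequence, each completed with probability 0.5 per step.
two_phase = [[0.5, 0.5], [0.0, 0.5]]
pmf_at_three = discrete_phase_type_pmf([1.0, 0.0], two_phase, 3)
```

With a single phase the formula reduces to the ordinary geometric distribution.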

A dependability state diagram is a method for modelling a system as a Markov chain. It is used in reliability engineering for availability and reliability analysis.

In probability theory, the uniformization method is a method to compute transient solutions of finite-state continuous-time Markov chains by approximating the process by a discrete-time Markov chain. The original chain is scaled by the fastest transition rate γ, so that transitions occur at the same rate in every state, hence the name. The method is simple to program and efficiently calculates an approximation to the transient distribution at a single point in time. The method was first introduced by Winfried Grassmann in 1977.
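A minimal sketch of the idea: with generator matrix $Q$ and $\gamma$ the largest exit rate, the matrix $P = I + Q/\gamma$ is stochastic, and the transient distribution is $\pi(t) = \sum_{k \ge 0} e^{-\gamma t} \frac{(\gamma t)^k}{k!}\, \pi(0) P^k$. The code truncates the sum once the accumulated Poisson weight is within `tol` of 1. The function name `uniformization`, the parameter names, and the truncation rule are illustrative assumptions; the sketch also assumes at least one state has a positive exit rate and that $\gamma t$ is moderate, since $e^{-\gamma t}$ underflows for very large values.

```python
import math


def uniformization(q, pi0, t, tol=1e-12, max_terms=10000):
    """Approximate the transient distribution pi(t) of a finite CTMC.

    q is the generator matrix (rows sum to zero), pi0 the distribution at
    time 0, and t the time of interest.
    """
    n = len(q)
    gamma = max(-q[i][i] for i in range(n))  # fastest exit rate
    # Transition matrix of the uniformized discrete-time chain.
    p = [[(1.0 if i == j else 0.0) + q[i][j] / gamma for j in range(n)] for i in range(n)]
    weight = math.exp(-gamma * t)  # Poisson probability of zero jumps
    total = weight
    vec = list(pi0)
    result = [weight * x for x in vec]
    for k in range(1, max_terms + 1):
        if 1.0 - total <= tol:
            break
        vec = [sum(vec[i] * p[i][j] for i in range(n)) for j in range(n)]
        weight *= gamma * t / k  # Poisson probability of exactly k jumps
        total += weight
        result = [result[j] + weight * vec[j] for j in range(n)]
    return result


# Two-state chain leaving state 0 at rate 2 and state 1 at rate 1.
transient = uniformization([[-2.0, 2.0], [1.0, -1.0]], [1.0, 0.0], t=0.5)
```

For this two-state chain the exact probability of being in state 0 at time $t$ is $\tfrac{1}{3} + \tfrac{2}{3} e^{-3t}$, which gives a convenient check on the approximation.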