In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X} \to [0, 1]$, the entropy is $\mathrm{H}(X) = -\sum_{x \in \mathcal{X}} p(x) \log p(x)$.
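As a minimal sketch of this definition, the following Python function computes the Shannon entropy of a discrete distribution given as a list of probabilities. The function name `shannon_entropy`, the default base of 2 (entropy in bits), and the example distributions are illustrative choices, not part of the definition.

```python
import math

def shannon_entropy(probs, base=2.0):
    """Entropy of a discrete distribution given as a sequence of probabilities."""
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 are skipped, following the convention 0 * log 0 = 0.
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Hypothetical example distributions.
fair_coin = shannon_entropy([0.5, 0.5])    # most uncertain two-outcome case
biased_coin = shannon_entropy([0.9, 0.1])  # less uncertain than the fair coin
certain = shannon_entropy([1.0])           # no uncertainty at all
```

A fair coin attains the maximum for two outcomes, one bit, while a biased coin has lower entropy and a certain outcome has none.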

The standard probability axioms are the foundations of probability theory introduced by Russian mathematician Andrey Kolmogorov in 1933. These axioms remain central to the field and contribute directly to mathematics, the physical sciences, and real-world probability problems.

Reinforcement learning (RL) is an interdisciplinary area of machine learning and optimal control concerned with how an intelligent agent ought to take actions in a dynamic environment in order to maximize the cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning.

Quantum superposition is a fundamental principle of quantum mechanics that states that linear combinations of solutions to the Schrödinger equation are also solutions of the Schrödinger equation. This follows from the fact that the Schrödinger equation is a linear differential equation in time and position. More precisely, the state of a system is given by a linear combination of all the eigenfunctions of the Schrödinger equation governing that system.

In probability theory, the law of large numbers (LLN) is a mathematical theorem that states that the average of the results obtained from a large number of independent and identical random samples converges to the true value, if it exists. More formally, the LLN states that given a sample of independent and identically distributed values, the sample mean converges to the true mean.
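The convergence can be illustrated with a short simulation. The sketch below assumes a fair six-sided die (true mean 3.5), a fixed seed for reproducibility, and a hypothetical helper `running_means`. It only illustrates the behaviour the theorem describes and does not prove it.

```python
import random

def running_means(samples):
    """Return the sample mean after each successive observation."""
    total = 0.0
    means = []
    for n, x in enumerate(samples, start=1):
        total += x
        means.append(total / n)
    return means

rng = random.Random(0)  # fixed seed so the run is repeatable
rolls = [rng.randint(1, 6) for _ in range(100_000)]  # IID fair die rolls
means = running_means(rolls)
true_mean = 3.5

# Gap between sample mean and true mean at a few sample sizes.
gaps = {n: abs(means[n - 1] - true_mean) for n in (10, 1_000, 100_000)}
```

The entries of `gaps` typically shrink as the sample size grows, although any single run can fluctuate at small sample sizes.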

A Bayesian network is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). While it is one of several forms of causal notation, causal networks are special cases of Bayesian networks. Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases.
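The disease and symptom example can be sketched with a three-node network in which a single disease node is the parent of two symptom nodes. All names and probability values below (`prior_flu`, `p_fever_given`, `p_cough_given`) are made-up assumptions for illustration. The posterior is obtained by enumerating the joint distribution, which is practical only for very small networks.

```python
# Hypothetical network: Flu -> Fever, Flu -> Cough.
prior_flu = 0.05                           # assumed P(flu)
p_fever_given = {True: 0.90, False: 0.10}  # assumed P(fever | flu)
p_cough_given = {True: 0.80, False: 0.20}  # assumed P(cough | flu)

def joint(flu, fever, cough):
    """Joint probability, factorised along the edges of the DAG."""
    p = prior_flu if flu else 1 - prior_flu
    pf = p_fever_given[flu]
    pc = p_cough_given[flu]
    p *= pf if fever else 1 - pf
    p *= pc if cough else 1 - pc
    return p

def posterior_flu(fever, cough):
    """P(flu | observed symptoms), by enumeration over the disease node."""
    numerator = joint(True, fever, cough)
    evidence = numerator + joint(False, fever, cough)
    return numerator / evidence

with_symptoms = posterior_flu(fever=True, cough=True)
without_symptoms = posterior_flu(fever=False, cough=False)
```

Under these assumed numbers, observing both symptoms raises the probability of the disease well above its prior, and observing neither lowers it.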

Ergodic theory is a branch of mathematics that studies statistical properties of deterministic dynamical systems; it is the study of ergodicity. In this context, "statistical properties" refers to properties which are expressed through the behavior of time averages of various functions along trajectories of dynamical systems. The notion of deterministic dynamical systems assumes that the equations determining the dynamics do not contain any random perturbations, noise, etc. Thus, the statistics with which we are concerned are properties of the dynamics.

The edge of chaos is a transition space between order and disorder that is hypothesized to exist within a wide variety of systems. This transition zone is a region of bounded instability that engenders a constant dynamic interplay between order and disorder.

The Wigner quasiprobability distribution is a quasiprobability distribution. It was introduced by Eugene Wigner in 1932 to study quantum corrections to classical statistical mechanics. The goal was to link the wavefunction that appears in Schrödinger's equation to a probability distribution in phase space.

Renewal theory is the branch of probability theory that generalizes the Poisson process for arbitrary holding times. Instead of exponentially distributed holding times, a renewal process may have any independent and identically distributed (IID) holding times that have finite mean. A renewal-reward process additionally has a random sequence of rewards incurred at each holding time, which are IID but need not be independent of the holding times.
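A renewal process with non-exponential holding times can be simulated directly. The sketch below assumes holding times uniform on [0.5, 1.5] (mean 1) and uses a hypothetical helper `count_renewals`. By the elementary renewal theorem, the long-run number of renewals per unit time should approach the reciprocal of the mean holding time.

```python
import random

def count_renewals(draw_holding_time, horizon):
    """Count renewals occurring in [0, horizon] for IID holding times."""
    elapsed = 0.0
    renewals = 0
    while True:
        elapsed += draw_holding_time()
        if elapsed > horizon:
            return renewals
        renewals += 1

rng = random.Random(1)  # fixed seed so the run is repeatable
horizon = 10_000.0

# Assumed holding-time distribution: uniform on [0.5, 1.5], mean 1.
renewals = count_renewals(lambda: rng.uniform(0.5, 1.5), horizon)
empirical_rate = renewals / horizon  # expected to be close to 1 / mean = 1
```

Replacing the lambda with `lambda: rng.expovariate(1.0)` would recover a Poisson process with rate 1 as a special case.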

For supervised learning applications in machine learning and statistical learning theory, generalization error is a measure of how accurately an algorithm is able to predict outcome values for previously unseen data. Because learning algorithms are evaluated on finite samples, the evaluation of a learning algorithm may be sensitive to sampling error. As a result, measurements of prediction error on the current data may not provide much information about predictive ability on new data. Generalization error can be minimized by avoiding overfitting in the learning algorithm. The performance of a machine learning algorithm is visualized by plots that show values of estimates of the generalization error through the learning process, which are called learning curves.

In mathematical analysis, the Russo–Vallois integral is an extension to stochastic processes of the classical Riemann–Stieltjes integral.

In the mathematical theory of probability, a Borel right process, named after Émile Borel, is a particular kind of continuous-time random process.

Biological neuron models, also known as spiking neuron models, are mathematical descriptions of the conduction of electrical signals in neurons. Neurons are electrically excitable cells within the nervous system, able to fire electric signals, called action potentials, across a neural network. These mathematical models describe the role of the biophysical and geometrical characteristics of neurons in the conduction of electrical activity.

The matched Z-transform method, also called the pole–zero mapping or pole–zero matching method, and abbreviated MPZ or MZT, is a technique for converting a continuous-time filter design to a discrete-time filter design.
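The core of the method is the mapping of each finite s-plane pole or zero to the z-plane via z = exp(sT), where T is the sampling period. The sketch below shows only that mapping. The helper name `matched_z_map`, the sampling period, and the pole locations are assumptions for illustration, and the handling of zeros at infinity and gain matching is omitted.

```python
import cmath

def matched_z_map(s_roots, sample_period):
    """Map s-plane poles or zeros to the z-plane via z = exp(s * T)."""
    return [cmath.exp(s * sample_period) for s in s_roots]

# Hypothetical stable continuous-time poles and sampling period.
T = 0.01
s_poles = [-10.0 + 0j, -5.0 + 20.0j, -5.0 - 20.0j]
z_poles = matched_z_map(s_poles, T)

# Poles in the left half-plane map to points inside the unit circle.
magnitudes = [abs(z) for z in z_poles]
```

Because |exp(sT)| = exp(Re(s) T), stable continuous-time poles always map inside the unit circle, so stability is preserved by this mapping.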

In the mathematical theory of probability, the voter model is an interacting particle system introduced by Richard A. Holley and Thomas M. Liggett in 1975.

In queueing theory, a discipline within the mathematical theory of probability, a heavy traffic approximation is the matching of a queueing model with a diffusion process under some limiting conditions on the model's parameters. The first such result was published by John Kingman, who showed that when the utilisation parameter of an M/M/1 queue is near 1, a scaled version of the queue length process can be accurately approximated by a reflected Brownian motion.

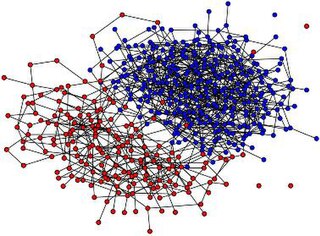

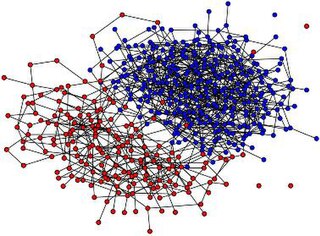

Maximal entropy random walk (MERW) is a popular type of biased random walk on a graph, in which transition probabilities are chosen according to the principle of maximum entropy, which says that the probability distribution that best represents the current state of knowledge is the one with the largest entropy. While the standard random walk chooses, for every vertex, a uniform probability distribution among its outgoing edges, locally maximizing the entropy rate, MERW maximizes it globally by assuming a uniform probability distribution among all paths in a given graph.
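For a connected undirected graph with adjacency matrix A, the MERW transition probabilities are commonly written as P[i][j] = A[i][j] * psi[j] / (lam * psi[i]), where lam is the largest eigenvalue of A and psi its positive eigenvector. The sketch below computes this with a shifted power iteration in plain Python. The function name, the iteration count, and the four-vertex path graph are illustrative assumptions, and the code assumes a symmetric, connected adjacency matrix.

```python
def merw_transition_matrix(adj, iterations=1000):
    """MERW transition matrix for a connected, undirected graph."""
    n = len(adj)
    psi = [1.0] * n
    for _ in range(iterations):
        # Power iteration on (A + I); the shift avoids the oscillation
        # that plain power iteration shows on bipartite graphs.
        nxt = [psi[i] + sum(adj[i][j] * psi[j] for j in range(n))
               for i in range(n)]
        scale = max(nxt)
        psi = [v / scale for v in nxt]
    # Rayleigh quotient gives the dominant eigenvalue of A itself.
    a_psi = [sum(adj[i][j] * psi[j] for j in range(n)) for i in range(n)]
    lam = sum(a_psi[i] * psi[i] for i in range(n)) / sum(v * v for v in psi)
    return [[adj[i][j] * psi[j] / (lam * psi[i]) for j in range(n)]
            for i in range(n)]

# Hypothetical example: path graph 0 - 1 - 2 - 3.
path_graph = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
P = merw_transition_matrix(path_graph)
```

On this path graph the standard random walk leaves vertex 1 toward either neighbour with probability 1/2, whereas MERW favours the interior neighbour (vertex 2) over the endpoint (vertex 0), reflecting the larger number of paths passing through the middle of the graph.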

Trophic coherence is a property of directed graphs. It is based on the concept of trophic levels used mainly in ecology, but which can be defined for directed networks in general and provides a measure of hierarchical structure among nodes. Trophic coherence is the tendency of nodes to fall into well-defined trophic levels. It has been related to several structural and dynamical properties of directed networks, including the prevalence of cycles and network motifs, ecological stability, intervality, and spreading processes like epidemics and neuronal avalanches.

The Kaniadakis Weibull distribution is a probability distribution arising as a generalization of the Weibull distribution. It is one example of a Kaniadakis κ-distribution. The κ-Weibull distribution has been adopted successfully for describing a wide variety of complex systems in seismology, economics, and epidemiology, among many others.