Google Search is a search engine provided by Google. Handling more than 3.5 billion searches per day, it has a 92% share of the global search engine market. It is also the most-visited website in the world.

Triple oppression, also called double jeopardy, Jane Crow, or triple exploitation, is a theory developed by black socialists in the United States, such as Claudia Jones. The theory states that a connection exists between various types of oppression, specifically classism, racism, and sexism. It hypothesizes that all three types of oppression need to be overcome at once.

I Know Why the Caged Bird Sings is a 1969 autobiography describing the early years of American writer and poet Maya Angelou. The first in a seven-volume series, it is a coming-of-age story that illustrates how strength of character and a love of literature can help overcome racism and trauma. The book begins when three-year-old Maya and her older brother are sent to Stamps, Arkansas, to live with their grandmother and ends when Maya becomes a mother at the age of 16. In the course of Caged Bird, Maya transforms from a victim of racism with an inferiority complex into a self-possessed, dignified young woman capable of responding to prejudice.

In social justice theory, internalized oppression is a concept in which an oppressed group uses the methods of the oppressing group against itself. It occurs when one group perceives an inequality of value relative to another group and desires to be like the more highly valued group.

Black feminism is a philosophy that centers on the idea that "Black women are inherently valuable, that [Black women's] liberation is a necessity not as an adjunct to somebody else's but because of our need as human persons for autonomy."

Robert Epstein is an American psychologist, professor, author, and journalist. He earned his Ph.D. in psychology at Harvard University in 1981, was editor in chief of Psychology Today, and has held positions at several universities including Boston University, University of California, San Diego, and Harvard University. He is also the founder and director emeritus of the Cambridge Center for Behavioral Studies in Concord, MA. In 2012, he founded the American Institute for Behavioral Research and Technology (AIBRT), a nonprofit organization that conducts research to promote the well-being and functioning of people worldwide.

Internalized racism is a form of internalized oppression, defined by sociologist Karen D. Pyke as the "internalization of racial oppression by the racially subordinated." In her study The Psychology of Racism, Robin Nicole Johnson emphasizes that internalized racism involves both "conscious and unconscious acceptance of a racial hierarchy in which whites are consistently ranked above people of color." These definitions encompass a wide range of instances, including, but not limited to, belief in negative stereotypes, adaptations to white cultural standards, and thinking that supports the status quo.

The Combahee River Collective was a Black feminist lesbian socialist organization active in Boston from 1974 to 1980. The Collective argued that neither the white feminist movement nor the Civil Rights Movement was addressing their particular needs as Black women and, more specifically, as Black lesbians. Racism was present in the mainstream feminist movement, while Delaney and Manditch-Prottas argue that much of the Civil Rights Movement had a sexist and homophobic reputation. The Collective is perhaps best known for developing the Combahee River Collective Statement, a key document in the history of contemporary Black feminism and in the development of the concept of identity politics as used among political organizers and social theorists, and for introducing the concept of interlocking systems of oppression, a key concept of intersectionality. Gerald Izenberg credits the 1977 Combahee statement with the first usage of the phrase "identity politics". Through writing their statement, the CRC connected themselves to the activist tradition of Black women in the 19th century and to the struggles of Black liberation in the 1960s.

Racist rhetoric is distributed through computer-mediated means and includes some or all of the following characteristics: ideas of racial uniqueness, racist attitudes towards specific social categories, racist stereotypes, hate speech, nationalism and common destiny, racial supremacy, superiority and separation, conceptions of racial otherness, and an anti-establishment worldview. Racism online can have the same effects as offensive remarks made offline.

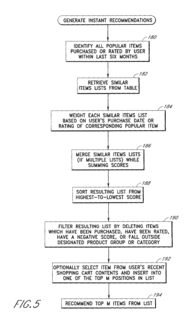

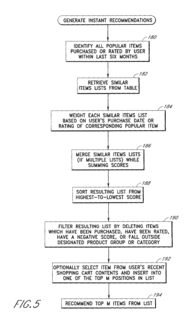

A filter bubble or ideological frame is a state of intellectual isolation that can result from personalized searches when a website algorithm selectively guesses what information a user would like to see based on information about the user, such as location, past click behavior, and search history. As a result, users become separated from information that disagrees with their viewpoints, effectively isolating them in their own cultural or ideological bubbles. The choices made by these algorithms are not transparent. Prime examples include Google Personalized Search results and Facebook's personalized news stream.

The Cyber Civil Rights Initiative (CCRI) is a non-profit organization founded by Holly Jacobs in 2012. The organization offers services to victims of cybercrimes through its crisis helpline. It has compiled resources to help victims of cybercrimes both in America and internationally. CCRI's resources include a list of frequently asked questions, an online image removal guide, a roster of attorneys who may be able to offer low-cost or pro bono legal assistance, and a list of laws related to nonconsensual pornography and related issues. CCRI publishes reports on nonconsensual pornography, engages in advocacy work, and contributes to updating tech policy. CCRI offers expert advice to tech industry leaders such as Twitter, Facebook, and Google regarding their policies against nonconsensual pornography. CCRI is the lead educator in the United States on subject matter related to nonconsensual pornography, recorded sexual assault, and sextortion.

Algorithmic bias describes systematic and repeatable errors in a computer system that create "unfair" outcomes, such as "privileging" one category over another in ways different from the intended function of the algorithm.

Joy Adowaa Buolamwini is a Ghanaian-American-Canadian computer scientist and digital activist based at the MIT Media Lab. Buolamwini introduces herself as a poet of code, daughter of art and science. She founded the Algorithmic Justice League, an organization that works to challenge bias in decision-making software, using art, advocacy, and research to highlight the social implications and harms of artificial intelligence (AI).

Safiya Umoja Noble is a professor at UCLA and the co-founder and co-director of the UCLA Center for Critical Internet Inquiry. She is the author of Algorithms of Oppression, and co-editor of two edited volumes: The Intersectional Internet: Race, Sex, Class and Culture and Emotions, Technology & Design. She is a Research Associate at the Oxford Internet Institute at the University of Oxford. She was appointed a Commissioner to the University of Oxford Commission on AI and Good Governance in 2020. In 2020 she was nominated to the Global Future Council on Artificial Intelligence for Humanity at the World Economic Forum.

Timnit Gebru is an American computer scientist who works on algorithmic bias and data mining. She is an advocate for diversity in technology and co-founder of Black in AI, a community of Black researchers working in artificial intelligence (AI). She is the founder of the Distributed Artificial Intelligence Research Institute (DAIR).

White Fragility: Why It's So Hard for White People to Talk About Racism is a 2018 book written by Robin DiAngelo about race relations in the United States. An academic with experience in diversity training, DiAngelo coined the term "white fragility" in 2011 to describe any defensive instincts or reactions that a white person experiences when questioned about race or made to consider their own race. In White Fragility, DiAngelo views racism in the United States as systemic and often perpetuated unconsciously by individuals. She recommends against viewing racism as committed intentionally by "bad people".

Sarah T. Roberts is a professor, author, and scholar who specializes in content moderation of social media. She is an expert in the areas of internet culture, social media, digital labor, and the intersections of media and technology. She coined the term "commercial content moderation" (CCM) to describe the work that paid content moderators do to enforce legal guidelines and standards. Roberts wrote the book Behind the Screen: Content Moderation in the Shadows of Social Media.

Danielle Hairston is an American psychiatrist who is Director of Residency Training in the Department of Psychiatry at Howard University College of Medicine, and a practicing psychiatrist in the Division of Consultation-Liaison Psychiatry at the University of Maryland Medical Center in Baltimore, Maryland. Hairston is also the Scientific Program Chair for the Black Psychiatrists of America and the President of the American Psychiatric Association's Black Caucus.

Coded Bias is an American documentary film directed by Shalini Kantayya that premiered at the 2020 Sundance Film Festival. The film includes contributions from researchers Joy Buolamwini, Deborah Raji, Meredith Broussard, Cathy O’Neil, Zeynep Tufekci, Safiya Noble, Timnit Gebru, Virginia Eubanks, Silkie Carlo, and others.

The Algorithmic Justice League (AJL) is a digital advocacy non-profit organization based in Cambridge, Massachusetts. Founded in 2016 by computer scientist Joy Buolamwini, the AJL uses research, artwork, and policy advocacy to increase public awareness of the use of artificial intelligence (AI) in society and of the harms and biases that AI can introduce. The AJL has engaged in a variety of open online seminars, media appearances, and tech advocacy initiatives to communicate information about bias in AI systems and to promote industry and government action against the creation and deployment of biased AI systems. In 2021, Fast Company named AJL as one of the 10 most innovative AI companies in the world.