Related Research Articles

A census is the procedure of systematically acquiring, recording and calculating population information about the members of a given population. The term is used mostly in connection with national population and housing censuses; other common types include censuses of agriculture, traditional culture, business, supplies, and traffic. The United Nations (UN) defines the essential features of population and housing censuses as "individual enumeration, universality within a defined territory, simultaneity and defined periodicity", and recommends that population censuses be taken at least every ten years. UN recommendations also cover census topics to be collected, official definitions, classifications and other useful information to co-ordinate international practices.

Demographic statistics are measures of the characteristics of, or changes to, a population. Records of births, deaths, marriages, immigration and emigration, together with a regular census of population, provide information that is key to making sound decisions about national policy.

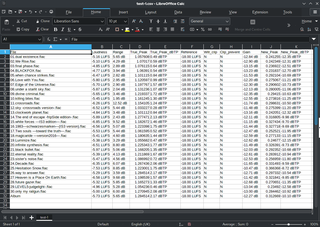

A spreadsheet is a computer application for computation, organization, analysis and storage of data in tabular form. Spreadsheets were developed as computerized analogs of paper accounting worksheets. The program operates on data entered in cells of a table. Each cell may contain either numeric or text data, or the results of formulas that automatically calculate and display a value based on the contents of other cells. The term spreadsheet may also refer to one such electronic document.
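
The idea that a cell holds either a plain value or a formula computed from other cells can be sketched in a few lines. This is a minimal illustration, not how any real spreadsheet program is built: the `Sheet` class and its `set`/`get` methods are hypothetical names, formulas are assumed to be ordinary Python functions of the sheet, and empty cells are assumed to read as 0.

```python
from typing import Any, Callable, Dict, Set, Union

# A cell holds a number, text, or a formula (a function that reads other cells).
CellContent = Union[int, float, str, Callable[..., Any]]


class Sheet:
    """Toy spreadsheet (hypothetical): formulas are recomputed on every read."""

    def __init__(self) -> None:
        self.cells: Dict[str, CellContent] = {}
        self._evaluating: Set[str] = set()  # cells currently being computed

    def set(self, name: str, content: CellContent) -> None:
        self.cells[name] = content

    def get(self, name: str) -> Any:
        content = self.cells.get(name, 0)  # empty cells read as 0 (assumption)
        if not callable(content):
            return content  # plain numeric or text data
        if name in self._evaluating:
            raise ValueError(f"circular reference involving {name}")
        self._evaluating.add(name)
        try:
            return content(self)  # evaluate the formula against the sheet
        finally:
            self._evaluating.discard(name)
```

For example, after `s.set("A1", 2)`, `s.set("A2", 3)` and `s.set("A3", lambda sh: sh.get("A1") + sh.get("A2"))`, reading `A3` gives 5; changing `A1` changes `A3` on the next read without any further action, which mirrors the "automatically calculate" behaviour described above. Real spreadsheets cache results and track dependencies instead of recomputing on every read.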

The United States Census Bureau (USCB), officially the Bureau of the Census, is a principal agency of the U.S. Federal Statistical System, responsible for producing data about the American people and economy. The Census Bureau is part of the U.S. Department of Commerce and its director is appointed by the President of the United States. Currently, Rob Santos is the Director of the U.S. Census Bureau and Dr. Ron Jarmin is the Deputy Director.

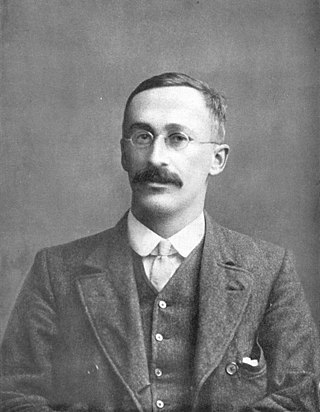

William Sealy Gosset was an English statistician, chemist and brewer who served as Head Experimental Brewer and Head Brewer of Guinness, and was a pioneer of modern statistics. He pioneered small-sample experimental design and analysis with an economic approach to the logic of uncertainty. Gosset published under the pen name Student and is best known for developing Student's t-distribution (originally called Student's "z") and "Student's test of statistical significance".
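
As a small illustration of Student's test, the one-sample t statistic compares a sample mean with a hypothesised mean μ₀ using the estimated standard error: t = (x̄ − μ₀) / (s / √n), referred to a t-distribution with n − 1 degrees of freedom. The sketch below uses only Python's standard library; the function name is hypothetical, and it stops at the statistic because the standard library has no t-distribution to turn it into a p-value.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, mu0):
    """Return (t, degrees_of_freedom) for a one-sample Student's t-test."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    # statistics.stdev uses the n - 1 (sample) denominator
    standard_error = stdev(sample) / sqrt(n)
    t = (mean(sample) - mu0) / standard_error
    return t, n - 1
```

For the sample `[1, 2, 3, 4, 5]` tested against μ₀ = 2, the mean is 3, the standard error is √0.5, and the statistic is √2 with 4 degrees of freedom.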

Statistics Canada, formed in 1971, is the agency of the Government of Canada commissioned with producing statistics to help better understand Canada, its population, resources, economy, society, and culture. It is headquartered in Ottawa.

A chi-squared test is a statistical hypothesis test used in the analysis of contingency tables when the sample sizes are large. In simpler terms, it is primarily used to examine whether two categorical variables (the two dimensions of a contingency table) are independent. The test is valid when the test statistic is chi-squared distributed under the null hypothesis; the best-known examples are Pearson's chi-squared test and its variants. Pearson's chi-squared test is used to determine whether there is a statistically significant difference between the expected and observed frequencies in one or more categories of a contingency table. For contingency tables with smaller sample sizes, Fisher's exact test is used instead.
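
Pearson's statistic sums (observed − expected)² / expected over all cells, where each expected count under independence is row total × column total / grand total, and the degrees of freedom are (rows − 1) × (columns − 1). The plain-Python sketch below assumes a table of non-negative counts with no all-zero row or column (otherwise an expected count would be zero); the function names are illustrative. The p-value helper only covers the 1-degree-of-freedom case (a 2 × 2 table), where the chi-squared tail probability reduces to `erfc(sqrt(x / 2))`.

```python
from math import erfc, sqrt


def pearson_chi_squared(table):
    """Return (statistic, degrees_of_freedom) for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # expected count if the row and column variables were independent
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


def chi_squared_p_value_1dof(statistic):
    """Upper-tail probability of a chi-squared variable with 1 degree of freedom."""
    return erfc(sqrt(statistic / 2))
```

For `[[10, 20], [30, 40]]` the expected counts are 12, 18, 28 and 42, giving a statistic of 50/63 (about 0.79) with 1 degree of freedom. No Yates continuity correction is applied, so results differ from libraries that apply one by default for 2 × 2 tables.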

The Mann–Whitney U test is a nonparametric test of the null hypothesis that, for values X and Y randomly selected from two populations, the probability of X being greater than Y equals the probability of Y being greater than X.
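
The U statistic ties directly to that null hypothesis: U for the first sample counts the pairs (x, y) in which x beats y, with ties counted as one half. The sketch below computes it by direct pairwise comparison, which is O(n₁n₂) but gives the same value as the usual rank-sum formula; the function name is hypothetical and no p-value is computed.

```python
def mann_whitney_u(x, y):
    """Return (U_x, U_y); U_x counts pairs with x > y, ties counted as 1/2."""
    u_x = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u_x += 1.0
            elif xi == yj:
                u_x += 0.5
    # every pair contributes exactly 1 in total to U_x + U_y
    u_y = len(x) * len(y) - u_x
    return u_x, u_y
```

Dividing U_x by n₁n₂ estimates P(X > Y) + ½P(X = Y), which is 0.5 under the null hypothesis; for `[1, 2, 3]` against `[4, 5, 6]` every pair favours the second sample, so U_x = 0 and U_y = 9.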

Statistics New Zealand, branded as Stats NZ, is the public service department of New Zealand charged with the collection of statistics related to the economy, population and society of New Zealand. To this end, Stats NZ produces censuses and surveys.

The American Community Survey (ACS) is an annual demographics survey program conducted by the U.S. Census Bureau. It regularly gathers information previously contained only in the long form of the decennial census, including ancestry, US citizenship status, educational attainment, income, language proficiency, migration, disability, employment, and housing characteristics. These data are used by many public-sector, private-sector, and not-for-profit stakeholders to allocate funding, track shifting demographics, plan for emergencies, and learn about local communities.

Fisher's exact test is a statistical significance test used in the analysis of contingency tables. Although in practice it is employed when sample sizes are small, it is valid for all sample sizes. It is named after its inventor, Ronald Fisher, and is one of a class of exact tests, so called because the significance of the deviation from a null hypothesis can be calculated exactly, rather than relying on an approximation that becomes exact in the limit as the sample size grows to infinity, as with many statistical tests.
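
For a 2 × 2 table with fixed margins, the top-left count follows a hypergeometric distribution, so every possible table's probability can be computed exactly. The sketch below (function name hypothetical) uses exact rational arithmetic and the common two-sided rule of summing the probabilities of all tables no more likely than the observed one; other two-sided conventions exist.

```python
from fractions import Fraction
from math import comb


def fisher_exact_2x2(table):
    """Two-sided Fisher's exact test p-value for [[a, b], [c, d]], as a Fraction."""
    (a, b), (c, d) = table
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2
    denom = comb(n, col1)

    def prob(x):
        # hypergeometric probability that the top-left cell equals x
        return Fraction(comb(row1, x) * comb(row2, col1 - x), denom)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # sum over all tables at least as extreme (no more probable) than observed
    return sum((p for p in probs if p <= p_obs), Fraction(0))
```

For the table `[[3, 1], [1, 3]]` the possible top-left counts 0 to 4 have probabilities 1, 16, 36, 16 and 1 out of 70, so the two-sided p-value is 34/70 = 17/35. Using `Fraction` avoids the floating-point tolerance that would otherwise be needed when comparing tables of equal probability.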

In statistics, a contingency table is a type of table in a matrix format that displays the multivariate frequency distribution of the variables. Contingency tables are heavily used in survey research, business intelligence, engineering, and scientific research. They provide a basic picture of the interrelation between two variables and can help find interactions between them. The term contingency table was first used by Karl Pearson in "On the Theory of Contingency and Its Relation to Association and Normal Correlation", part of the Drapers' Company Research Memoirs Biometric Series I published in 1904.
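
Building a two-way contingency table amounts to counting each (row category, column category) combination. A minimal sketch with `collections.Counter`, assuming the input is a list of category pairs; the function name and the sorted label order are illustrative choices.

```python
from collections import Counter


def contingency_table(pairs):
    """Cross-tabulate (row_category, column_category) pairs into a count matrix."""
    counts = Counter(pairs)
    row_labels = sorted({r for r, _ in counts})
    col_labels = sorted({c for _, c in counts})
    # Counter returns 0 for combinations that never occur
    matrix = [[counts[(r, c)] for c in col_labels] for r in row_labels]
    return row_labels, col_labels, matrix
```

With hypothetical survey pairs `[("M", "yes"), ("F", "no"), ("M", "yes"), ("F", "yes")]`, the rows are `["F", "M"]`, the columns are `["no", "yes"]`, and the counts are `[[1, 1], [0, 2]]`.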

Cohen's kappa coefficient (κ) is a statistic that is used to measure inter-rater reliability for qualitative (categorical) items. It is generally thought to be a more robust measure than a simple percent-agreement calculation, as κ takes into account the possibility of the agreement occurring by chance. There is controversy surrounding Cohen's kappa due to the difficulty in interpreting indices of agreement. Some researchers have suggested that it is conceptually simpler to evaluate disagreement between items.
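
The chance correction works as κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected if both raters labelled items independently at their own marginal rates. A minimal sketch for two raters, with a hypothetical function name:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # observed agreement p_o: share of items given the same label
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # chance agreement p_e: product of each rater's marginal frequencies
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)
```

If rater A answers `yes, yes, no, no` and rater B answers `yes, no, no, no`, simple percent agreement is 75%, but chance agreement is 50%, so κ = 0.5.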

Beaumont is a city in Leduc County within the Edmonton Metropolitan Region of Alberta, Canada. It is located at the intersection of Highway 625 and Highway 814, adjacent to the City of Edmonton and 6.0 kilometres (3.7 mi) northeast of the City of Leduc. The Nisku Industrial Park and the Edmonton International Airport are located 4.0 kilometres (2.5 mi) to the west and 8.0 kilometres (5.0 mi) to the southwest respectively.

Stephen Elliott Fienberg was a professor emeritus in the Department of Statistics, the Machine Learning Department, Heinz College, and CyLab at Carnegie Mellon University. Fienberg was the founding co-editor of the Annual Review of Statistics and Its Application and of the Journal of Privacy and Confidentiality.

Official statistics are statistics published by government agencies or other public bodies such as international organizations as a public good. They provide quantitative or qualitative information on all major areas of citizens' lives, such as economic and social development, living conditions, health, education, and the environment.

According to the 2021 census, the City of Edmonton had a population of 1,010,899 residents, compared to 4,262,635 for all of Alberta, Canada. The total population of the Edmonton census metropolitan area (CMA) was 1,418,118, making it the sixth-largest CMA in Canada.

Differential privacy (DP) is an approach for providing privacy while sharing information about a group of individuals, by describing the patterns within the group while withholding information about specific individuals. This is typically done by adding carefully calibrated random noise to the released statistics, so that any published result is almost equally likely whether or not a particular individual's data is included. Thus the data cannot be used to infer much about any individual.
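
A standard way to calibrate that noise for a count is the Laplace mechanism: adding or removing one person changes a count by at most 1 (its sensitivity), so adding Laplace noise with scale 1/ε gives ε-differential privacy for that single release. The sketch below samples Laplace noise as the difference of two independent exponential draws; the function name and the choice of ε are illustrative assumptions.

```python
import random


def laplace_count(true_count, epsilon, rng=None):
    """Release a count with Laplace noise of scale 1/epsilon (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    sensitivity = 1.0  # one person changes a count by at most 1
    scale = sensitivity / epsilon
    # the difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale)
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise
```

Smaller ε means stronger privacy and noisier answers. This is a teaching sketch: production systems also track the cumulative privacy budget across queries and use samplers hardened against floating-point attacks.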

Statistical disclosure control (SDC), also known as statistical disclosure limitation (SDL) or disclosure avoidance, is a technique used in data-driven research to ensure no person or organization is identifiable from the results of an analysis of survey or administrative data, or in the release of microdata. The purpose of SDC is to protect the confidentiality of the respondents and subjects of the research.
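
One of the simplest SDC methods for tabular outputs is primary cell suppression: counts small enough to single out individuals are withheld before release. The sketch below assumes a hypothetical threshold of 3 and treats zeros as publishable; real agencies set their own thresholds and rules.

```python
def suppress_small_cells(table, threshold=3):
    """Replace counts in 1..threshold-1 with None (primary suppression)."""
    # zeros are left as-is here; some agencies treat them as disclosive too
    return [[None if 0 < count < threshold else count for count in row]
            for row in table]
```

For `[[0, 1, 5], [2, 10, 3]]` this yields `[[0, None, 5], [None, 10, 3]]`. Primary suppression alone is rarely enough: if row or column totals are also published, a suppressed cell can often be recovered by subtraction, so complementary suppression, rounding or record swapping is used alongside it.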

The Five Safes is a framework for making decisions about the effective use of confidential or sensitive data. It is mainly used to describe or design research access to statistical data held by government and health agencies, and by data archives such as the UK Data Service.
