Related Research Articles

A statistical hypothesis test is a method of statistical inference used to decide whether the data sufficiently support a particular hypothesis. A statistical hypothesis test typically involves a calculation of a test statistic. Then a decision is made, either by comparing the test statistic to a critical value or equivalently by evaluating a p-value computed from the test statistic. Roughly 100 specialized statistical tests have been defined.

In probability theory and statistics, Bayes' theorem, named after Thomas Bayes, describes the probability of an event, based on prior knowledge of conditions that might be related to the event. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately by conditioning it relative to their age, rather than assuming that the individual is typical of the population as a whole.

In statistics, the power of a binary hypothesis test is the probability that the test correctly rejects the null hypothesis when a specific alternative hypothesis is true. It is commonly denoted by $1 - \beta$, where $\beta$ is the probability of a type II error, and represents the chance of a true positive detection conditional on the actual existence of an effect to detect. Statistical power ranges from 0 to 1, and as the power of a test increases, the probability of making a type II error by wrongly failing to reject the null hypothesis decreases.
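As a rough illustration of how power depends on sample size, the sketch below computes the power of a one-sided z-test. The function name `z_test_power`, the choice of a z-test with known standard deviation, and all numeric inputs are assumptions made for this example and are not taken from the text above.

```python
from statistics import NormalDist


def z_test_power(effect, sigma, n, alpha=0.05):
    """Power of a one-sided z-test of H0: mu = 0 against H1: mu = effect (> 0).

    Assumes a known standard deviation `sigma` and `n` independent observations.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)      # rejection threshold under H0
    shift = effect * n ** 0.5 / sigma           # mean of the test statistic under H1
    return 1 - std_normal.cdf(z_crit - shift)   # P(reject H0 | H1 is true)


# Hypothetical inputs: the same effect size observed with 10 and with 40 samples.
small_sample_power = z_test_power(effect=0.5, sigma=1.0, n=10)
large_sample_power = z_test_power(effect=0.5, sigma=1.0, n=40)
```

Under these assumptions, a larger sample gives higher power, and with no effect at all the rejection probability falls back to the significance level `alpha`.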

The base rate fallacy, also called base rate neglect or base rate bias, is a type of fallacy in which people tend to ignore the base rate in favor of the individuating information. Base rate neglect is a specific form of the more general extension neglect.

In evidence-based medicine, likelihood ratios are used for assessing the value of performing a diagnostic test. They use the sensitivity and specificity of the test to determine whether a test result usefully changes the probability that a condition exists. The first description of the use of likelihood ratios for decision rules was made at a symposium on information theory in 1954. In medicine, likelihood ratios were introduced between 1975 and 1980.
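The usual definitions of the positive and negative likelihood ratios in terms of sensitivity and specificity can be sketched as follows. The function name and the example values are hypothetical.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) for a test with the given sensitivity and specificity."""
    lr_positive = sensitivity / (1 - specificity)   # P(test+ | condition) / P(test+ | no condition)
    lr_negative = (1 - sensitivity) / specificity   # P(test- | condition) / P(test- | no condition)
    return lr_positive, lr_negative


# Hypothetical test: 90% sensitivity, 80% specificity.
lr_pos, lr_neg = likelihood_ratios(0.90, 0.80)
```

A ratio far from 1 indicates that the corresponding test result shifts the probability of the condition substantially. A ratio close to 1 indicates that the result changes little.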

Data dredging is the misuse of data analysis to find patterns in data that can be presented as statistically significant, thus dramatically increasing and understating the risk of false positives. This is done by performing many statistical tests on the data and only reporting those that come back with significant results.

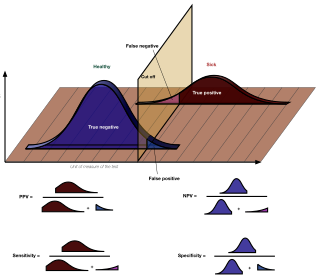

The positive and negative predictive values (PPV and NPV, respectively) are the proportions of positive and negative results in statistics and diagnostic tests that are true positive and true negative results, respectively. The PPV and NPV describe the performance of a diagnostic test or other statistical measure. A high value can be interpreted as indicating the accuracy of such a statistic. The PPV and NPV are not intrinsic to the test; they also depend on the prevalence. Both PPV and NPV can be derived using Bayes' theorem.
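The dependence on prevalence can be made concrete with a short sketch that applies Bayes' theorem to a test's sensitivity and specificity. The function name and the numbers are illustrative assumptions, not values from the source.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) via Bayes' theorem."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)   # P(condition | positive result)
    npv = true_neg / (true_neg + false_neg)   # P(no condition | negative result)
    return ppv, npv


# Hypothetical test: 99% sensitivity, 95% specificity, 1% prevalence.
ppv, npv = predictive_values(0.99, 0.95, 0.01)
```

With these hypothetical inputs the PPV is only about one in six even though the test is highly sensitive, because false positives from the large unaffected group outnumber the true positives.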

Given a population whose members each belong to one of a number of different sets or classes, a classification rule or classifier is a procedure by which the elements of the population set are each predicted to belong to one of the classes. A perfect classification is one for which every element in the population is assigned to the class it really belongs to. The Bayes classifier is the classifier which assigns classes optimally based on the known attributes of the elements to be classified.

The use of evidence under Bayes' theorem relates to the probability of finding evidence in relation to the accused, where Bayes' theorem concerns the probability of an event and its inverse. Specifically, it compares the probability of finding particular evidence if the accused were guilty, versus if they were not guilty. An example would be the probability of finding a person's hair at the scene, if guilty, versus if just passing through the scene. Another issue would be finding a person's DNA where they lived, regardless of committing a crime there.

In medicine and statistics, sensitivity and specificity mathematically describe the accuracy of a test that reports the presence or absence of a medical condition. If individuals who have the condition are considered "positive" and those who do not are considered "negative", then sensitivity is a measure of how well a test can identify true positives and specificity is a measure of how well a test can identify true negatives.
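As a minimal sketch, both quantities can be computed from the four cells of a confusion matrix. The function name and the counts below are hypothetical.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = true_pos / (true_pos + false_neg)   # share of actual positives detected
    specificity = true_neg / (true_neg + false_pos)   # share of actual negatives cleared
    return sensitivity, specificity


# Hypothetical study: 100 people with the condition, 200 without.
sens, spec = sensitivity_specificity(true_pos=90, false_neg=10, true_neg=160, false_pos=40)
```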

In statistical hypothesis testing, a type I error, or a false positive, is the rejection of the null hypothesis when it is actually true. For example, an innocent person may be convicted. A type II error, or a false negative, is the failure to reject a null hypothesis that is actually false. For example, a guilty person may not be convicted.

In statistics, the multiple comparisons, multiplicity or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or estimates a subset of parameters selected based on the observed values.
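One way to see why simultaneous inference is a problem is to compute the chance of at least one false positive across several tests. The sketch below assumes the tests are independent and that every null hypothesis is true. Both assumptions, and the function name, are made only for illustration.

```python
def family_wise_error_rate(alpha, num_tests):
    """P(at least one false positive) across independent tests, all nulls true."""
    return 1 - (1 - alpha) ** num_tests


# Hypothetical setting: each test is run at the 5% significance level.
single_test = family_wise_error_rate(0.05, 1)
twenty_tests = family_wise_error_rate(0.05, 20)
```

Under these assumptions, twenty tests at the 5% level give a probability of at least one spurious "significant" result of roughly 64%.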

Medical statistics deals with applications of statistics to medicine and the health sciences, including epidemiology, public health, forensic medicine, and clinical research. Medical statistics has been a recognized branch of statistics in the United Kingdom for more than 40 years, but the term has not come into general use in North America, where the wider term 'biostatistics' is more commonly used. However, "biostatistics" more commonly connotes all applications of statistics to biology. Medical statistics is a subdiscipline of statistics.

It is the science of summarizing, collecting, presenting and interpreting data in medical practice, and using them to estimate the magnitude of associations and test hypotheses. It has a central role in medical investigations. It not only provides a way of organizing information on a wider and more formal basis than relying on the exchange of anecdotes and personal experience, but also takes into account the intrinsic variation inherent in most biological processes.

Frequentist inference is a type of statistical inference based in frequentist probability, which treats “probability” in equivalent terms to “frequency” and draws conclusions from sample-data by means of emphasizing the frequency or proportion of findings in the data. Frequentist inference underlies frequentist statistics, in which the well-established methodologies of statistical hypothesis testing and confidence intervals are founded.

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) is already known to have occurred. This particular method relies on event A occurring with some sort of relationship with another event B. In this situation, the event A can be analyzed by a conditional probability with respect to B. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as $P(A \mid B)$ or occasionally $P_B(A)$. This can also be understood as the fraction of the probability of B that intersects with A, that is, the ratio of the probability of both events happening to the probability of the "given" event happening (how often A occurs, rather than not, assuming B has occurred): $P(A \mid B) = \frac{P(A \cap B)}{P(B)}$.
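The ratio interpretation can be checked by brute-force enumeration over a small sample space. The sketch below uses two fair dice. The events and helper names are hypothetical choices for this example.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 36 equally likely outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Probability of an event, given as a predicate over outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def conditional(event_a, event_b):
    """P(A | B) as the ratio P(A and B) / P(B)."""
    return prob(lambda o: event_a(o) and event_b(o)) / prob(event_b)


def sum_is_eight(o):      # event A
    return o[0] + o[1] == 8


def first_is_even(o):     # event B
    return o[0] % 2 == 0


p_a = prob(sum_is_eight)
p_a_given_b = conditional(sum_is_eight, first_is_even)
```

Here the unconditional probability of a sum of eight is 5/36, while conditioning on an even first die raises it to 1/6.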

Pre-test probability and post-test probability are the probabilities of the presence of a condition before and after a diagnostic test, respectively. Post-test probability, in turn, can be positive or negative, depending on whether the test falls out as a positive test or a negative test, respectively. In some cases, it is used for the probability of developing the condition of interest in the future.
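A common way to move from pre-test to post-test probability is to convert the probability to odds, multiply by the likelihood ratio of the observed result, and convert back. The sketch below follows that route. The function name and the inputs are assumptions for illustration.

```python
def post_test_probability(pre_test_probability, likelihood_ratio):
    """Update a pre-test probability using the likelihood ratio of the test result."""
    pre_odds = pre_test_probability / (1 - pre_test_probability)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# Hypothetical case: 20% pre-test probability, positive result with LR+ = 4.5.
after_positive = post_test_probability(0.20, 4.5)
# The same patient after a negative result with LR- = 0.125.
after_negative = post_test_probability(0.20, 0.125)
```

A likelihood ratio of exactly 1 leaves the probability unchanged, which matches the idea that such a result carries no diagnostic information.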

The frequency format hypothesis is the idea that the brain understands and processes information better when presented in frequency formats rather than a numerical or probability format. Thus according to the hypothesis, presenting information as 1 in 5 people rather than 20% leads to better comprehension. The idea was proposed by German scientist Gerd Gigerenzer, after compilation and comparison of data collected between 1976 and 1997.

Misuse of p-values is common in scientific research and scientific education. p-values are often used or interpreted incorrectly; the American Statistical Association states that p-values can indicate how incompatible the data are with a specified statistical model. From a Neyman–Pearson hypothesis testing approach to statistical inferences, the data obtained by comparing the p-value to a significance level will yield one of two results: either the null hypothesis is rejected, or the null hypothesis cannot be rejected at that significance level. From a Fisherian statistical testing approach to statistical inferences, a low p-value means either that the null hypothesis is true and a highly improbable event has occurred or that the null hypothesis is false.

The discipline of forensic epidemiology (FE) is a hybrid of principles and practices common to both forensic medicine and epidemiology. FE is directed at filling the gap between clinical judgment and epidemiologic data for determinations of causality in civil lawsuits and criminal prosecution and defense.

Intuitive statistics, or folk statistics, is the cognitive phenomenon where organisms use data to make generalizations and predictions about the world. This can be a small amount of sample data or training instances, which in turn contribute to inductive inferences about either population-level properties, future data, or both. Inferences can involve revising hypotheses, or beliefs, in light of probabilistic data that inform and motivate future predictions. The informal tendency for cognitive animals to intuitively generate statistical inferences, when formalized with certain axioms of probability theory, constitutes statistics as an academic discipline.
