Related Research Articles

In the field of artificial intelligence, the most difficult problems are informally known as AI-complete or AI-hard, implying that the difficulty of these computational problems, assuming intelligence is computational, is equivalent to that of solving the central artificial intelligence problem—making computers as intelligent as people. To call a problem AI-complete reflects an attitude that it would not be solved by a simple specific algorithm.

The Chinese room argument holds that a digital computer executing a program cannot have a "mind", "understanding", or "consciousness", regardless of how intelligently or human-like the program may make the computer behave. Philosopher John Searle presented the argument in his paper "Minds, Brains, and Programs", published in Behavioral and Brain Sciences in 1980. Gottfried Leibniz (1714), Anatoly Dneprov (1961), Lawrence Davis (1974) and Ned Block (1978) presented similar arguments. Searle's version has been widely discussed in the years since. The centerpiece of Searle's argument is a thought experiment known as the Chinese room.

The Age of Spiritual Machines: When Computers Exceed Human Intelligence is a non-fiction book by inventor and futurist Ray Kurzweil about artificial intelligence and the future course of humanity. First published in hardcover on January 1, 1999, by Viking, it has received attention from The New York Times, The New York Review of Books and The Atlantic. In the book Kurzweil outlines his vision for how technology will progress during the 21st century.

In the philosophy of mind, functionalism is the thesis that each and every mental state is constituted solely by its functional role, which means its causal relation to other mental states, sensory inputs, and behavioral outputs. Functionalism developed largely as an alternative to the identity theory of mind and behaviorism.

Artificial consciousness (AC), also known as machine consciousness (MC), synthetic consciousness or digital consciousness, is the consciousness hypothesized to be possible in artificial intelligence. It is also the corresponding field of study, which draws insights from philosophy of mind, philosophy of artificial intelligence, cognitive science and neuroscience. The same terminology can be used with the term "sentience" instead of "consciousness" when specifically designating phenomenal consciousness.

"Computing Machinery and Intelligence" is a seminal paper written by Alan Turing on the topic of artificial intelligence. The paper, published in 1950 in Mind, was the first to introduce his concept of what is now known as the Turing test to the general public.

A philosophical zombie is a being in a thought experiment in philosophy of mind that is physically identical to a normal human being but does not have conscious experience.

Ned Joel Block is an American philosopher working in philosophy of mind who has made important contributions to the understanding of consciousness and the philosophy of cognitive science. He has been professor of philosophy and psychology at New York University since 1996.

Psychologism is a family of philosophical positions, according to which certain psychological facts, laws, or entities play a central role in grounding or explaining certain non-psychological facts, laws, or entities. The word was coined by Johann Eduard Erdmann as Psychologismus, being translated into English as psychologism.

The philosophy of artificial intelligence is a branch of the philosophy of mind and the philosophy of computer science that explores artificial intelligence and its implications for knowledge and understanding of intelligence, ethics, consciousness, epistemology, and free will. Because the technology is concerned with the creation of artificial animals or artificial people, the discipline is of considerable interest to philosophers. These factors contributed to the emergence of the philosophy of artificial intelligence.

In the philosophy of mind, the China brain thought experiment considers what would happen if each member of the Chinese nation were asked to simulate the action of one neuron in the brain, using telephones or walkie-talkies to simulate the axons and dendrites that connect neurons. Would this arrangement have a mind or consciousness in the same way that brains do?

The symbol grounding problem is a concept in the fields of artificial intelligence, cognitive science, philosophy of mind, and semantics. It addresses the challenge of connecting symbols, such as words or abstract representations, to the real-world objects or concepts they refer to; in essence, it asks how symbols acquire meaning in a way that is tied to the physical world. Because it concerns how words get their meanings, it is closely related to the problem of what meaning itself is. The problem of meaning is in turn related to the problem of how mental states are meaningful, and hence to the problem of consciousness: what is the connection between certain physical systems and the contents of subjective experiences?

The minimum intelligent signal test, or MIST, is a variation of the Turing test proposed by Chris McKinstry in which only boolean answers may be given to questions. The purpose of such a test is to provide a quantitative statistical measure of humanness, which may subsequently be used to optimize the performance of artificial intelligence systems intended to imitate human responses.
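As an illustration only, the idea of turning yes/no answers into a statistical measure can be sketched as follows. This is a minimal sketch, not McKinstry's actual scoring procedure: the function names `mist_score` and `chance_probability`, and the choice to compare answers against a list of human consensus answers, are assumptions made for this example.

```python
from math import comb


def mist_score(answers, human_consensus):
    """Fraction of boolean answers that agree with the human consensus.

    Both arguments are equal-length sequences of True/False values
    (hypothetical inputs; the real test's item set is not reproduced here).
    """
    if len(answers) != len(human_consensus):
        raise ValueError("answer lists must have the same length")
    if not answers:
        raise ValueError("at least one answer is required")
    matches = sum(a == h for a, h in zip(answers, human_consensus))
    return matches / len(answers)


def chance_probability(matches, n):
    """Probability of at least `matches` agreements out of `n` items
    when every answer is an independent fair coin flip."""
    return sum(comb(n, k) for k in range(matches, n + 1)) / 2 ** n
```

A score near 0.5 is what random guessing would produce, so under these assumptions only scores well above that level, with a small `chance_probability`, would count as evidence of human-like answering.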

In philosophy of mind, the computational theory of mind (CTM), also known as computationalism, is a family of views that hold that the human mind is an information processing system and that cognition and consciousness together are a form of computation. Warren McCulloch and Walter Pitts (1943) were the first to suggest that neural activity is computational. They argued that neural computations explain cognition. The theory was proposed in its modern form by Hilary Putnam in 1967, and developed by his PhD student, the philosopher and cognitive scientist Jerry Fodor, in the 1960s, 1970s, and 1980s. It was vigorously disputed in analytic philosophy in the 1990s due to work by Putnam himself, John Searle, and others.

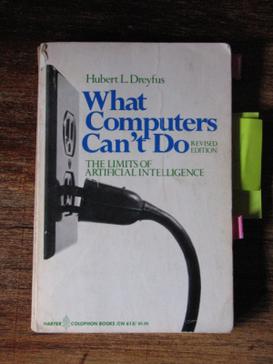

Hubert Dreyfus was a critic of artificial intelligence research. In a series of papers and books, including Alchemy and AI (1965), What Computers Can't Do (1972) and Mind over Machine (1986), he presented a pessimistic assessment of AI's progress and a critique of the philosophical foundations of the field. Dreyfus' objections are discussed in most introductions to the philosophy of artificial intelligence, including Russell & Norvig (2021), a standard AI textbook, and in Fearn (2007), a survey of contemporary philosophy.

Computational humor is a branch of computational linguistics and artificial intelligence which uses computers in humor research. It is a relatively new area, with the first dedicated conference organized in 1996.

Eugene Goostman is a chatbot that some regard as having passed the Turing test, a test of a computer's ability to communicate indistinguishably from a human. Developed in Saint Petersburg in 2001 by a group of three programmers, the Russian-born Vladimir Veselov, Ukrainian-born Eugene Demchenko, and Russian-born Sergey Ulasen, Goostman is portrayed as a 13-year-old Ukrainian boy—characteristics that are intended to induce forgiveness in those with whom it interacts for its grammatical errors and lack of general knowledge.

The Turing test, originally called the imitation game by Alan Turing in 1950, is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses. The evaluator would be aware that one of the two partners in conversation was a machine, and all participants would be separated from one another. The conversation would be limited to a text-only channel, such as a computer keyboard and screen, so the result would not depend on the machine's ability to render words as speech. If the evaluator could not reliably tell the machine from the human, the machine would be said to have passed the test. The test results would not depend on the machine's ability to give correct answers to questions, only on how closely its answers resembled those a human would give. Since the Turing test is a test of indistinguishability in performance capacity, the verbal version generalizes naturally to all of human performance capacity, verbal as well as nonverbal (robotic).

The Winograd schema challenge (WSC) is a test of machine intelligence proposed in 2012 by Hector Levesque, a computer scientist at the University of Toronto. Designed to be an improvement on the Turing test, it is a multiple-choice test that employs questions of a very specific structure: they are instances of what are called Winograd schemas, named after Terry Winograd, professor of computer science at Stanford University.
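The "very specific structure" can be made concrete with a small sketch. A Winograd schema is a pair of sentences that differ in one word, where that word changes which of two candidates an ambiguous pronoun refers to. The class and function names below (`WinogradSchema`, `accuracy`, `COUNCIL_EXAMPLE`) are hypothetical and chosen for illustration; the sentence is Winograd's well-known councilmen example, and the resolver interface is an assumption, not part of the official challenge.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class WinogradSchema:
    template: str                # sentence with a {word} slot for the special word
    pronoun: str                 # the ambiguous pronoun to resolve
    candidates: Tuple[str, str]  # the two possible referents
    answers: Dict[str, str]      # special word -> correct referent


# Illustrative instance based on Winograd's councilmen sentence.
COUNCIL_EXAMPLE = WinogradSchema(
    template=("The city councilmen refused the demonstrators a permit "
              "because they {word} violence."),
    pronoun="they",
    candidates=("the city councilmen", "the demonstrators"),
    answers={"feared": "the city councilmen",
             "advocated": "the demonstrators"},
)


def accuracy(schema: WinogradSchema,
             resolver: Callable[[str, str, Tuple[str, str]], str]) -> float:
    """Fraction of the schema's sentence variants the resolver gets right."""
    correct = 0
    for word, referent in schema.answers.items():
        sentence = schema.template.format(word=word)
        if resolver(sentence, schema.pronoun, schema.candidates) == referent:
            correct += 1
    return correct / len(schema.answers)
```

Because the two variants have opposite answers, a resolver that ignores the special word, for instance one that always picks the first candidate, gets exactly half of them right on this schema.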

In machine learning, the term stochastic parrot is a metaphor to describe the theory that large language models, though able to generate plausible language, do not understand the meaning of the language they process. The term was coined by Emily M. Bender in the 2021 artificial intelligence research paper "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜" by Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell.

References

- Block, Ned (1981), "Psychologism and Behaviorism", The Philosophical Review, 90 (1): 5–43, CiteSeerX 10.1.1.4.5828, doi:10.2307/2184371, JSTOR 2184371.

- Ben-Yami, Hanoch (2005), "Behaviorism and Psychologism: Why Block's Argument Against Behaviorism is Unsound", Philosophical Psychology, 18 (2): 179–186, doi:10.1080/09515080500169470, S2CID 144390248.

- Zalta, Edward N. (ed.), "The Turing test", Stanford Encyclopedia of Philosophy.