In mathematics, the determinant is a scalar value that is a certain function of the entries of a square matrix. The determinant of a matrix A is commonly denoted det(A), det A, or |A|. Its value characterizes some properties of the matrix and the linear map represented, on a given basis, by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the corresponding linear map is an isomorphism. The determinant of a product of matrices is the product of their determinants.
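The multiplicative property and the invertibility criterion can be checked on small integer matrices. The following is a minimal sketch using the Leibniz formula (a sum over permutations); the helper names `det` and `matmul` and the sample matrices are illustrative assumptions, and the approach is only practical for small sizes.

```python
from itertools import permutations

def det(m):
    """Determinant via the Leibniz formula; practical only for small matrices."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        # The sign of a permutation is (-1) raised to its number of inversions.
        inversions = sum(
            1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j]
        )
        term = -1 if inversions % 2 else 1
        for row, col in enumerate(perm):
            term *= m[row][col]
        total += term
    return total

def matmul(a, b):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

A = [[2, 1], [5, 3]]  # det(A) = 1, so A is invertible
B = [[1, 2], [3, 4]]  # det(B) = -2
S = [[1, 2], [2, 4]]  # second row is twice the first, so det(S) = 0

print(det(matmul(A, B)) == det(A) * det(B))  # True
print(det(S))                                # 0
```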

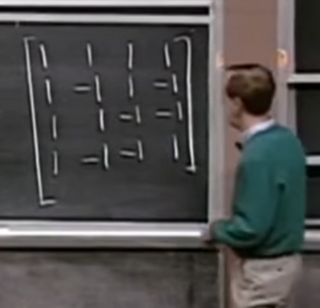

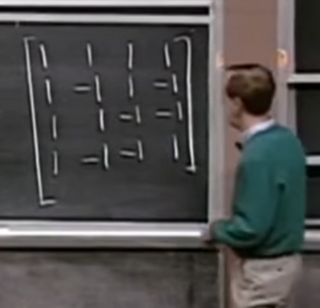

In mathematics, Gaussian elimination, also known as row reduction, is an algorithm for solving systems of linear equations. It consists of a sequence of row-wise operations performed on the corresponding matrix of coefficients. This method can also be used to compute the rank of a matrix, the determinant of a square matrix, and the inverse of an invertible matrix. The method is named after Carl Friedrich Gauss (1777–1855). To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are three types of elementary row operations: swapping two rows, multiplying a row by a nonzero number, and adding a multiple of one row to another row.
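Row reduction to echelon form can be sketched in exact rational arithmetic as follows. The function name `row_reduce` and its return shape are assumptions made for illustration. The sketch uses only row swaps and additions of a multiple of one row to another, so for a square matrix the determinant is the product of the diagonal entries of the result, up to the sign contributed by the swaps.

```python
from fractions import Fraction

def row_reduce(matrix):
    """Return (echelon_form, rank, det); det is None for non-square input."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(a), len(a[0])
    sign = 1          # flips on every row swap
    pivot_row = 0
    for col in range(cols):
        # Look for a nonzero entry in this column at or below pivot_row.
        pivot = next((r for r in range(pivot_row, rows) if a[r][col] != 0), None)
        if pivot is None:
            continue
        if pivot != pivot_row:
            a[pivot_row], a[pivot] = a[pivot], a[pivot_row]
            sign = -sign
        # Clear the entries below the pivot.
        for r in range(pivot_row + 1, rows):
            factor = a[r][col] / a[pivot_row][col]
            a[r] = [x - factor * y for x, y in zip(a[r], a[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    det = None
    if rows == cols:
        det = Fraction(sign)
        for i in range(rows):
            det *= a[i][i]
    return a, pivot_row, det

echelon, rank, det = row_reduce([[2, 1, -1], [-3, -1, 2], [-2, 1, 2]])
print(rank, det)  # 3 -1
```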

In mathematics, the special linear group SL(n, R) of degree n over a commutative ring R is the set of n × n matrices with determinant 1, with the group operations of ordinary matrix multiplication and matrix inversion. This is the normal subgroup of the general linear group given by the kernel of the determinant map det: GL(n, R) → R×, where R× denotes the multiplicative group of units of R.

In linear algebra, the Cayley–Hamilton theorem states that every square matrix over a commutative ring satisfies its own characteristic equation.
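For a 2×2 matrix the characteristic polynomial is λ² − tr(A)λ + det(A), so the theorem says that A² − tr(A)·A + det(A)·I is the zero matrix. The sketch below checks this for one integer matrix; the function name `cayley_hamilton_residual_2x2` is a hypothetical helper, not a library routine.

```python
def cayley_hamilton_residual_2x2(a):
    """Return A^2 - tr(A)*A + det(A)*I for a 2x2 matrix A."""
    (p, q), (r, s) = a
    trace = p + s
    det = p * s - q * r
    a_squared = [
        [p * p + q * r, p * q + q * s],
        [r * p + s * r, r * q + s * s],
    ]
    identity = [[1, 0], [0, 1]]
    return [
        [a_squared[i][j] - trace * a[i][j] + det * identity[i][j] for j in range(2)]
        for i in range(2)
    ]

print(cayley_hamilton_residual_2x2([[1, 2], [3, 4]]))  # [[0, 0], [0, 0]]
```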

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can either be zero or nonzero. An example of a 2×2 diagonal matrix is $\begin{bmatrix}3&0\\0&2\end{bmatrix}$, while an example of a 3×3 diagonal matrix is $\begin{bmatrix}6&0&0\\0&5&0\\0&0&4\end{bmatrix}$. An identity matrix of any size, or any multiple of it, is a diagonal matrix called a scalar matrix, for example, $\begin{bmatrix}0.5&0\\0&0.5\end{bmatrix}$. In geometry, a diagonal matrix may be used as a scaling matrix, since matrix multiplication with it results in changing scale (size) and possibly also shape; only a scalar matrix results in uniform change in scale.
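The scaling behaviour can be illustrated with a short sketch. The helpers `is_diagonal` and `diag_apply` are illustrative names; a diagonal matrix is represented here only by its list of diagonal entries, which is an assumption made for brevity.

```python
def is_diagonal(m):
    """True if every entry off the main diagonal is zero."""
    return all(
        m[i][j] == 0 for i in range(len(m)) for j in range(len(m[i])) if i != j
    )

def diag_apply(diagonal, vector):
    """Multiply the diagonal matrix with the given diagonal by a column vector."""
    return [d * x for d, x in zip(diagonal, vector)]

print(is_diagonal([[3, 0], [0, 2]]))  # True
print(diag_apply([2, 2], [1, 3]))     # [2, 6]: scalar matrix, uniform scaling
print(diag_apply([3, 1], [1, 3]))     # [3, 3]: non-uniform scaling changes direction
```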

In linear algebra, the characteristic polynomial of a square matrix is a polynomial that is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism with respect to any basis. The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero.
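One way to compute the coefficients without expanding a symbolic determinant is the Faddeev–LeVerrier recurrence, which the text above does not mention and which is used here only as an illustration. The sketch assumes the monic convention det(xI − A) and returns the coefficients from the constant term upward; `char_poly` and `matmul` are hypothetical helper names.

```python
from fractions import Fraction

def matmul(a, b):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def char_poly(matrix):
    """Coefficients [c_0, ..., c_n] of det(x*I - A) = sum of c_k * x**k."""
    n = len(matrix)
    a = [[Fraction(x) for x in row] for row in matrix]
    coeffs = [Fraction(0)] * n + [Fraction(1)]    # leading coefficient c_n = 1
    m = [[Fraction(0)] * n for _ in range(n)]     # M_0 is the zero matrix
    for k in range(1, n + 1):
        # M_k = A * M_{k-1} + c_{n-k+1} * I
        am = matmul(a, m)
        m = [
            [am[i][j] + (coeffs[n - k + 1] if i == j else 0) for j in range(n)]
            for i in range(n)
        ]
        # c_{n-k} = -trace(A * M_k) / k
        am = matmul(a, m)
        coeffs[n - k] = -sum(am[i][i] for i in range(n)) / k
    return coeffs

# For [[1, 2], [3, 4]]: trace 5 and determinant -2 give x^2 - 5x - 2.
print(char_poly([[1, 2], [3, 4]]) == [-2, -5, 1])  # True
```

With this convention the coefficient of x^(n−1) is minus the trace, and the constant term is (−1)^n times the determinant.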

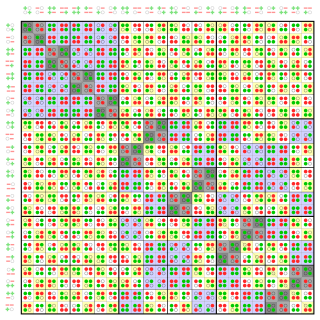

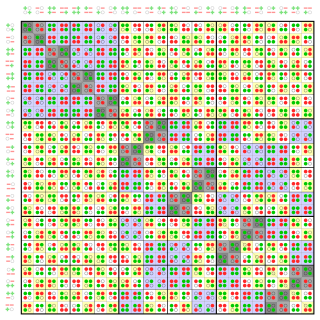

In mathematics, a Hadamard matrix, named after the French mathematician Jacques Hadamard, is a square matrix whose entries are either +1 or −1 and whose rows are mutually orthogonal. In geometric terms, this means that each pair of rows in a Hadamard matrix represents two perpendicular vectors, while in combinatorial terms, it means that each pair of rows has matching entries in exactly half of their columns and mismatched entries in the remaining columns. It is a consequence of this definition that the corresponding properties hold for columns as well as rows.
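Both the geometric and the combinatorial description can be checked on a small example. The sketch below builds Hadamard matrices of order 2^k with Sylvester's doubling construction, which is one standard way to obtain them and is not described in the text above; the function names are illustrative.

```python
def sylvester_hadamard(k):
    """Return the 2^k x 2^k Hadamard matrix from Sylvester's doubling construction."""
    h = [[1]]
    for _ in range(k):
        # Each step maps H to the block matrix [[H, H], [H, -H]].
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h

def rows_orthogonal(h):
    """True if every pair of distinct rows has dot product zero."""
    n = len(h)
    return all(
        sum(x * y for x, y in zip(h[i], h[j])) == 0
        for i in range(n)
        for j in range(i + 1, n)
    )

def matching_entries(r, s):
    """Number of columns in which two rows agree."""
    return sum(1 for x, y in zip(r, s) if x == y)

H = sylvester_hadamard(2)
print(rows_orthogonal(H))             # True
print(matching_entries(H[0], H[1]))   # 2, half of the 4 columns
```

Applying `rows_orthogonal` to the transpose, `[list(col) for col in zip(*H)]`, checks the corresponding property for columns.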

In numerical analysis, one of the most important problems is designing efficient and stable algorithms for finding the eigenvalues of a matrix. These eigenvalue algorithms may also find eigenvectors.

In linear algebra, a Hessenberg matrix is a special kind of square matrix, one that is "almost" triangular. To be exact, an upper Hessenberg matrix has zero entries below the first subdiagonal, and a lower Hessenberg matrix has zero entries above the first superdiagonal. They are named after Karl Hessenberg.
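The two zero patterns translate directly into index conditions: entry (i, j) must vanish when i > j + 1 for the upper form, and when j > i + 1 for the lower form. A minimal sketch with hypothetical predicate names, assuming a square matrix given as a list of rows:

```python
def is_upper_hessenberg(m):
    """True if all entries below the first subdiagonal are zero."""
    n = len(m)
    return all(m[i][j] == 0 for i in range(n) for j in range(n) if i > j + 1)

def is_lower_hessenberg(m):
    """True if all entries above the first superdiagonal are zero."""
    n = len(m)
    return all(m[i][j] == 0 for i in range(n) for j in range(n) if j > i + 1)

U = [
    [1, 4, 2, 3],
    [3, 4, 1, 7],
    [0, 2, 3, 4],
    [0, 0, 1, 3],
]
print(is_upper_hessenberg(U))  # True
print(is_lower_hessenberg(U))  # False
```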

In linear algebra, a tridiagonal matrix is a band matrix that has nonzero elements only on the main diagonal, the subdiagonal (lower diagonal), and the superdiagonal (upper diagonal). For example, the following matrix is tridiagonal:

$$\begin{bmatrix}1&4&0&0\\3&4&1&0\\0&2&3&4\\0&0&1&3\end{bmatrix}$$

In mathematics, a unimodular matrix M is a square integer matrix having determinant +1 or −1. Equivalently, it is an integer matrix that is invertible over the integers: there is an integer matrix N that is its inverse. Thus every equation Mx = b, where M and b both have integer components and M is unimodular, has an integer solution. The n × n unimodular matrices form a group called the n × n general linear group over $\mathbb{Z}$, which is denoted $\operatorname{GL}_n(\mathbb{Z})$.
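For the 2×2 case the integer inverse can be written down from the adjugate, because dividing by a determinant of ±1 is the same as multiplying by it. The following sketch, with hypothetical function names, inverts a unimodular 2×2 matrix and uses the inverse to solve Mx = b over the integers.

```python
def inverse_unimodular_2x2(m):
    """Integer inverse of a 2x2 integer matrix with determinant +1 or -1."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det not in (1, -1):
        raise ValueError("matrix is not unimodular")
    # inverse = adjugate / det, and 1/det == det when det is +1 or -1.
    return [[d * det, -b * det], [-c * det, a * det]]

def solve_integer_2x2(m, b):
    """Solve M x = b for integer x, assuming M is unimodular."""
    n = inverse_unimodular_2x2(m)
    return [n[0][0] * b[0] + n[0][1] * b[1], n[1][0] * b[0] + n[1][1] * b[1]]

M = [[2, 1], [1, 1]]  # determinant 1
print(inverse_unimodular_2x2(M))     # [[1, -1], [-1, 2]]
print(solve_integer_2x2(M, [3, 5]))  # [-2, 7]
```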

In linear algebra, it is often important to know which vectors have their directions unchanged by a given linear transformation. An eigenvector or characteristic vector is such a vector. Thus an eigenvector $\mathbf{v}$ of a linear transformation $T$ is scaled by a constant factor $\lambda$ when the linear transformation is applied to it: $T\mathbf{v} = \lambda\mathbf{v}$. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor $\lambda$.
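The defining relation can be tested directly: apply the matrix to a candidate vector and see whether the result is a scalar multiple of it. The sketch below assumes integer entries so that the ratio can be computed exactly; `apply` and `eigenvalue_for` are illustrative names.

```python
from fractions import Fraction

def apply(matrix, vector):
    """Matrix-vector product."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def eigenvalue_for(matrix, vector):
    """Return the factor by which matrix scales vector, or None if vector is not an eigenvector."""
    idx = next((i for i, x in enumerate(vector) if x != 0), None)
    if idx is None:
        return None  # the zero vector is not counted as an eigenvector
    image = apply(matrix, vector)
    # The first nonzero component fixes the only possible scaling factor.
    lam = Fraction(image[idx], vector[idx])
    return lam if all(y == lam * x for x, y in zip(vector, image)) else None

A = [[2, 1], [1, 2]]
print(eigenvalue_for(A, [1, 1]))   # 3
print(eigenvalue_for(A, [1, -1]))  # 1
print(eigenvalue_for(A, [1, 0]))   # None
```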

In mathematics, an integer matrix is a matrix whose entries are all integers. Examples include binary matrices, the zero matrix, the matrix of ones, the identity matrix, and the adjacency matrices used in graph theory, amongst many others. Integer matrices find frequent application in combinatorics.

In linear algebra, the Hermite normal form is an analogue of reduced echelon form for matrices over the integers $\mathbb{Z}$. Just as reduced echelon form can be used to solve problems about the solution to the linear system Ax = b where x is in $\mathbb{R}^n$, the Hermite normal form can solve problems about the solution to the linear system Ax = b where this time x is restricted to have integer coordinates only. Other applications of the Hermite normal form include integer programming, cryptography, and abstract algebra.

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object.

A Jacobi operator, also known as a Jacobi matrix, is a symmetric linear operator acting on sequences which is given by an infinite tridiagonal matrix. It is commonly used to specify systems of orthonormal polynomials over a finite, positive Borel measure. This operator is named after Carl Gustav Jacob Jacobi.

Joel Lee Brenner was an American mathematician who specialized in matrix theory, linear algebra, and group theory. He is known as the translator of several popular Russian texts. He was a teaching professor at some dozen colleges and universities and was a Senior Mathematician at Stanford Research Institute from 1956 to 1968. He published over one hundred scholarly papers, 35 with coauthors, and wrote book reviews.

In mathematics, particularly in linear algebra and applications, matrix analysis is the study of matrices and their algebraic properties. Particular topics, among many, include operations defined on matrices, functions of matrices, and the eigenvalues of matrices.

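In mathematics, Fischer's inequality gives an upper bound for the determinant of a positive-semidefinite matrix whose entries are complex numbers in terms of the determinants of its principal diagonal blocks. Suppose A, C are respectively p×p, q×q positive-semidefinite complex matrices and B is a p×q complex matrix. Let $M = \begin{bmatrix} A & B \\ B^{*} & C \end{bmatrix}$, so that M is a (p+q)×(p+q) matrix. If M is positive-semidefinite, then Fischer's inequality states that $\det(M) \le \det(A)\det(C)$.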

The Wilson matrix is the following matrix having integers as elements:

$$W = \begin{bmatrix}5&7&6&5\\7&10&8&7\\6&8&10&9\\5&7&9&10\end{bmatrix}$$