The BSP model

Overview

A BSP computer consists of the following:

- Components capable of processing and/or local memory transactions (i.e., processors),

- A network that routes messages between pairs of such components, and

- A hardware facility that allows for the synchronization of all or a subset of components.

This is commonly interpreted as a set of processors that may follow different threads of computation, with each processor equipped with fast local memory and interconnected by a communication network.

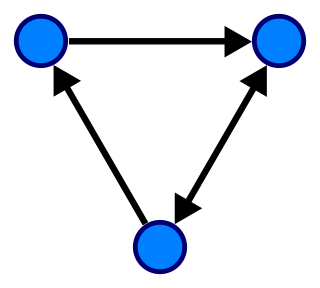

BSP algorithms rely heavily on the third feature: a computation proceeds in a series of global supersteps, each of which consists of three components:

- Concurrent computation: every participating processor may perform local computations, i.e., each process can only make use of values stored in the local fast memory of the processor. The computations occur asynchronously with respect to one another but may overlap with communication.

- Communication: The processes exchange data to facilitate remote data storage.

- Barrier synchronization: When a process reaches this point (the barrier), it waits until all other processes have reached the same barrier.

The computation and communication actions do not have to be ordered in time. Communication typically takes the form of the one-sided PUT and GET remote direct memory access (RDMA) calls rather than paired two-sided send and receive message-passing calls.

The barrier synchronization concludes the superstep: it ensures that all one-sided communications are properly concluded. Systems based on two-sided communication include this synchronization cost implicitly for every message sent. The barrier synchronization method relies on the BSP computer's hardware facility. In Valiant's original paper, this facility periodically checks whether the end of the current superstep has been reached globally. The period of this check is denoted by $L$. [1]
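The superstep structure can be illustrated with a toy, single-machine sketch. It assumes that `p` threads stand in for processors, per-thread Python lists stand in for remote memory, and `threading.Barrier` stands in for the hardware synchronization facility. The class `BSPMachine` and its `put`/`sync` methods are hypothetical names loosely modeled on one-sided PUT and barrier calls, not the API of any real BSP library. Values put during a superstep become visible to their destination only after the barrier.

```python
import threading


class BSPMachine:
    """Toy BSP runtime (hypothetical): p threads, one-sided puts, global barrier."""

    def __init__(self, p):
        self.p = p
        self.barrier = threading.Barrier(p)
        self.lock = threading.Lock()
        self.inbox = [[] for _ in range(p)]       # data readable in the current superstep
        self.next_inbox = [[] for _ in range(p)]  # data put during the current superstep

    def put(self, dest, value):
        # One-sided PUT: the receiver takes no action; the value is buffered
        # and becomes visible to `dest` only after the next sync().
        with self.lock:
            self.next_inbox[dest].append(value)

    def sync(self):
        # Barrier synchronization: once every thread has arrived, all puts
        # of this superstep are complete.
        if self.barrier.wait() == 0:  # exactly one thread receives index 0
            self.inbox = self.next_inbox
            self.next_inbox = [[] for _ in range(self.p)]
        self.barrier.wait()  # nobody reads the new inbox before the swap


def sum_of_squares(bsp, pid, results):
    # Superstep 0: local computation uses only local data ...
    local = pid * pid
    # ... and communication sends the partial result to processor 0.
    bsp.put(0, local)
    bsp.sync()
    # Superstep 1: processor 0 can now safely read everything put to it.
    if pid == 0:
        results["total"] = sum(bsp.inbox[0])
    bsp.sync()


def run(p):
    bsp = BSPMachine(p)
    results = {}
    threads = [threading.Thread(target=sum_of_squares, args=(bsp, i, results))
               for i in range(p)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results["total"]


print(run(4))  # 0 + 1 + 4 + 9 = 14
```

The second `wait()` in `sync` is just one simple way to make the buffer swap safe; a real runtime could overlap communication with computation instead of buffering everything until the barrier.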

The BSP model is also well-suited for automatic memory management for distributed-memory computing through over-decomposition of the problem and oversubscription of the processors. The computation is divided into more logical processes than there are physical processors, and processes are randomly assigned to processors. This strategy can be shown statistically to lead to almost perfect load balancing, both of work and communication.
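The load-balancing claim can be checked with a small simulation. This sketch assumes every logical process has unit cost (so it models work only, not communication) and uses uniform random assignment. `max_load_ratio` is a hypothetical helper that reports the ratio of the busiest processor's load to the mean load, where 1.0 means perfect balance.

```python
import random


def max_load_ratio(num_procs, virt_per_proc, trials=20, seed=0):
    """Average (max load / mean load) when num_procs * virt_per_proc unit-cost
    logical processes are assigned uniformly at random to num_procs processors."""
    rng = random.Random(seed)
    v = num_procs * virt_per_proc
    mean = v / num_procs  # equals virt_per_proc
    total = 0.0
    for _ in range(trials):
        loads = [0] * num_procs
        for _ in range(v):
            loads[rng.randrange(num_procs)] += 1  # random placement
        total += max(loads) / mean
    return total / trials


# Over-decomposition factor k: logical processes per physical processor.
for k in (1, 10, 100, 1000):
    print(k, round(max_load_ratio(64, k), 3))
```

As the number of logical processes per processor grows, the ratio should move toward 1, which is the statistical effect described above.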

Communication

In many parallel programming systems, communications are considered at the level of individual actions, such as sending and receiving a message or memory-to-memory transfer. This is difficult to work with since there are many simultaneous communication actions in a parallel program, and their interactions are typically complex. In particular, it is difficult to say much about the time any single communication action will take to complete.

The BSP model considers communication actions en masse. This has the effect that an upper bound on the time taken to communicate a set of data can be given. BSP considers all communication actions of a superstep as one unit and assumes all individual messages sent as part of this unit have a fixed size.

The maximum number of incoming or outgoing messages for a superstep is denoted by $h$. The ability of a communication network to deliver data is captured by a parameter $g$, defined such that it takes time $hg$ for a processor to deliver $h$ messages of size 1.

A message of length $m$ obviously takes longer to send than a message of size 1. However, the BSP model does not distinguish between a message of length $m$ and $m$ messages of length 1. In either case, the cost is said to be $mgh$.
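A minimal sketch of how $h$ and the communication cost $hg$ of a superstep might be computed from a communication pattern. It assumes the pattern is given as a hypothetical matrix `sends[i][j]` counting unit-size words sent from processor `i` to processor `j`, so a message of length $m$ contributes $m$ words, in line with the model's equal treatment of one message of length $m$ and $m$ messages of length 1. Words a processor "sends" to itself are ignored.

```python
def h_relation(sends):
    """Return h: the largest number of words any processor sends or receives."""
    p = len(sends)
    # Words leaving / entering each processor (diagonal = local, not communicated).
    outgoing = [sum(sends[i][j] for j in range(p) if j != i) for i in range(p)]
    incoming = [sum(sends[i][j] for i in range(p) if i != j) for j in range(p)]
    return max(max(o, n) for o, n in zip(outgoing, incoming))


def comm_cost(sends, g):
    """Communication cost h * g of one superstep under the BSP model."""
    return h_relation(sends) * g


# Gather: processors 1..3 each send one word to processor 0.
gather = [[0, 0, 0, 0],
          [1, 0, 0, 0],
          [1, 0, 0, 0],
          [1, 0, 0, 0]]
# Total exchange: every processor sends one word to every other processor.
total_exchange = [[0 if i == j else 1 for j in range(4)] for i in range(4)]

print(h_relation(gather), h_relation(total_exchange))  # 3 3
```

Both patterns are 3-relations and therefore cost $3g$, even though the total exchange moves four times as many words overall (12 versus 3): the model charges for the busiest processor, not for the total volume.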

The parameter $g$ depends on the following:

- The protocols used to interact within the communication network.

- Buffer management by both the processors and the communication network.

- The routing strategy used in the network.

- The BSP runtime system.

In practice, $g$ is determined empirically for each parallel computer. Note that $g$ is not the normalized single-word delivery time but the single-word delivery time under continuous traffic conditions.
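One way to determine $g$ empirically is to time supersteps that perform full $h$-relations (keeping the network under continuous traffic) for a range of $h$, then fit a straight line $T(h) \approx hg + c$: the slope estimates $g$, and the intercept $c$ absorbs fixed per-superstep overhead such as synchronization. The sketch shows only the least-squares fit. The benchmark that would produce the timings is assumed, `fit_g` is a hypothetical helper, and the example numbers are made up solely to show that the fit recovers a known line.

```python
def fit_g(hs, times):
    """Least-squares fit of times ~ g * h + c. Returns (g, c)."""
    n = len(hs)
    mean_h = sum(hs) / n
    mean_t = sum(times) / n
    s_hh = sum((h - mean_h) ** 2 for h in hs)
    s_ht = sum((h - mean_h) * (t - mean_t) for h, t in zip(hs, times))
    g = s_ht / s_hh          # slope: time per word under continuous traffic
    c = mean_t - g * mean_h  # intercept: fixed overhead per superstep
    return g, c


# Hypothetical timings that lie exactly on T(h) = 3h + 50.
hs = [1, 2, 4, 8, 16, 32]
times = [3 * h + 50 for h in hs]
print(fit_g(hs, times))
```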

Barriers


The one-sided communication of the BSP model requires barrier synchronization. Barriers are potentially costly but avoid the possibility of deadlock or livelock, since barriers cannot create circular data dependencies. Tools to detect and deal with deadlock or livelock are therefore unnecessary. Barriers also permit novel forms of fault tolerance.[citation needed]

The cost of barrier synchronization is influenced by two issues:

- The cost imposed by the variation in the completion time of the participating concurrent computations. Consider the case in which all but one of the processes have completed their work for this superstep and are waiting for the last process, which still has a lot of work to complete. The best that an implementation can do is ensure that each process works on roughly the same problem size.

- The cost of reaching a globally consistent state in all of the processors. This depends on the communication network but also on whether there is special-purpose hardware available for synchronizing and on the way in which interrupts are handled by processors.
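The first of these costs can be made concrete. Because every processor waits at the barrier for the slowest one, the computation phase of a superstep lasts $\max_i w_i$, and each other processor sits idle for the difference. A minimal sketch, with `barrier_wait_cost` as a hypothetical helper and per-processor work values made up for illustration:

```python
def barrier_wait_cost(work):
    """Given per-processor local work w_i for one superstep, return
    (time until the last processor reaches the barrier,
     total idle processor-time spent waiting at the barrier)."""
    w_max = max(work)
    idle = sum(w_max - w for w in work)
    return w_max, idle


print(barrier_wait_cost([10, 10, 10, 40]))  # (40, 90)
print(barrier_wait_cost([17.5] * 4))        # (17.5, 0.0)
```

Both distributions total 70 units of work, but only the balanced one avoids idle time, which is why balancing the problem size per process is the best an implementation can do.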

The cost of a barrier synchronization is denoted by $l$. Note that $l < L$ if the synchronization mechanism of the BSP computer is as suggested by Valiant. [1] In practice, a value of $l$ is determined empirically.

On large computers, barriers are expensive, and they become increasingly so as the scale grows. There is a large body of literature on removing synchronization points from existing algorithms in the context of BSP computing and beyond. For example, many algorithms allow for the local detection of the global end of a superstep simply by comparing local information to the number of messages already received. This drives the cost of global synchronization, compared to the minimally required latency of communication, to zero. [8] Yet even this minimal latency is expected to increase further for future supercomputer architectures and network interconnects; the BSP model, along with other models for parallel computation, requires adaptation to cope with this trend. Multi-BSP is one BSP-based solution. [5]
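Local detection of the end of a superstep can be sketched as a single-threaded simulation. It assumes each processor knows in advance how many messages it will receive in the superstep (for example, because the communication pattern is fixed by the algorithm); when that count is not known locally, this shortcut does not apply. `local_termination` is a hypothetical helper, not part of any real runtime.

```python
import random


def local_termination(messages, expected, seed=0):
    """Simulate one superstep without a global barrier.

    messages: list of (dest, payload) pairs sent during the superstep.
    expected[pid]: how many messages processor pid knows it will receive.
    Returns, per processor, the delivery step at which it detects locally
    that its superstep has ended (0 if it expects no messages).
    """
    rng = random.Random(seed)
    in_flight = list(messages)
    rng.shuffle(in_flight)  # the network may deliver in any order
    received = [0] * len(expected)
    done_at = [0 if e == 0 else None for e in expected]
    for step, (dest, _payload) in enumerate(in_flight, start=1):
        received[dest] += 1
        if received[dest] == expected[dest]:
            done_at[dest] = step  # local count check replaces the global barrier
    return done_at


# Ring shift on 4 processors: pid sends one value to (pid + 1) % 4.
p = 4
ring = [((pid + 1) % p, pid) for pid in range(p)]
print(local_termination(ring, expected=[1] * p))
```

Each processor proceeds as soon as its own count is satisfied, so no processor waits for messages addressed to others.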

Algorithmic cost

The cost of a superstep is determined as the sum of three terms:

- The cost of the longest-running local computation

- The cost of global communication between the processors

- The cost of the barrier synchronization at the end of the superstep

Thus, the cost of one superstep for $p$ processors is:

$$\max_{i=1}^{p} (w_i) + \max_{i=1}^{p} (h_i g) + l$$

where $w_i$ is the cost for the local computation in process $i$, and $h_i$ is the number of messages sent or received by process $i$. Note that homogeneous processors are assumed here. It is more common for the expression to be written as $w + hg + l$, where $w$ and $h$ are maxima. The cost of an entire BSP algorithm is the sum of the cost of each superstep:

$$W + Hg + Sl = \sum_{s=1}^{S} w_s + g \sum_{s=1}^{S} h_s + Sl$$

where $S$ is the number of supersteps.

$W$, $H$, and $S$ are usually modeled as functions that vary with problem size. These three characteristics of a BSP algorithm are usually described in terms of asymptotic notation, e.g., $H \in O(n/p)$.
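The cost formulas translate directly into code. This sketch assumes the per-superstep lists of $w_i$ and $h_i$ are already known; `superstep_cost` and `bsp_cost` are hypothetical helpers, and the example inputs (a two-superstep parallel sum of $n = 400$ values on $p = 4$ processors with $g = 2$ and $l = 50$) are illustrative, not measured.

```python
def superstep_cost(w, h, g, l):
    """max_i w_i + max_i (h_i * g) + l for one superstep on len(w) processors."""
    return max(w) + max(hi * g for hi in h) + l


def bsp_cost(supersteps, g, l):
    """Return (W, H, S, W + H*g + S*l) for a list of (w, h) supersteps."""
    W = sum(max(w) for w, _ in supersteps)  # sum of per-superstep maxima w_s
    H = sum(max(h) for _, h in supersteps)  # sum of per-superstep maxima h_s
    S = len(supersteps)
    return W, H, S, W + H * g + S * l


# Superstep 1: each processor sums 100 local values; processors 1..3 each
# send their partial sum to processor 0, which receives 3 words (h = 3).
# Superstep 2: processor 0 combines the 4 partial sums (counted as 4 work
# units here); no communication.
steps = [([100, 100, 100, 100], [3, 1, 1, 1]),
         ([4, 0, 0, 0], [0, 0, 0, 0])]
g, l = 2, 50
print(bsp_cost(steps, g, l))                              # (104, 3, 2, 210)
print(sum(superstep_cost(w, h, g, l) for w, h in steps))  # 210
```

Summing `superstep_cost` over the supersteps gives the same total as $W + Hg + Sl$, since $\max_i (h_i g) = g \max_i h_i$ for $g \ge 0$.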