Related Research Articles

Computer programming is the process of performing particular computations, usually by designing and building executable computer programs. Programming involves tasks such as analysis, generating algorithms, profiling algorithms' accuracy and resource consumption, and the implementation of algorithms. The source code of a program is written in one or more languages that are intelligible to programmers, rather than machine code, which is directly executed by the central processing unit. The purpose of programming is to find a sequence of instructions that will automate the performance of a task on a computer, often for solving a given problem. Proficient programming thus usually requires expertise in several different subjects, including knowledge of the application domain, specialized algorithms, and formal logic.

Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines to applied disciplines. Though more often considered an academic discipline, computer science is closely related to computer programming.

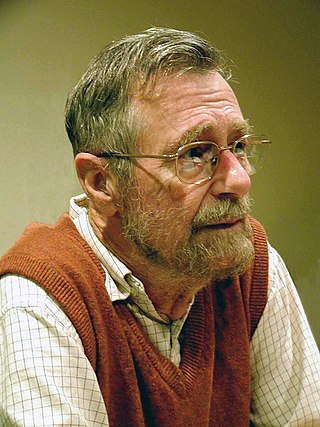

Edsger Wybe Dijkstra was a Dutch computer scientist, programmer, software engineer, and science essayist.

In computer science, static program analysis is the analysis of computer programs performed without executing them, in contrast with dynamic program analysis, which is performed on programs during their execution.

The ACM A. M. Turing Award is an annual prize given by the Association for Computing Machinery (ACM) for contributions of lasting and major technical importance to computer science. It is generally recognized as the highest distinction in computer science and is colloquially known as or often referred to as the "Nobel Prize of Computing".

Sir Charles Antony Richard Hoare is a British computer scientist who has made foundational contributions to programming languages, algorithms, operating systems, formal verification, and concurrent computing. His work earned him the Turing Award, usually regarded as the highest distinction in computer science, in 1980.

In computer science, an abstract machine is a theoretical model that allows for a detailed and precise analysis of how a computer system functions. It is similar to a mathematical function in that it receives inputs and produces outputs based on predefined rules. Abstract machines vary from literal machines in that they are expected to perform correctly and independently of hardware. Abstract machines are "machines" because they allow step-by-step execution of programmes; they are "abstract" because they ignore many aspects of actual (hardware) machines. A typical abstract machine consists of a definition in terms of input, output, and the set of allowable operations used to turn the former into the latter. They can be used for purely theoretical reasons as well as models for real-world computer systems. In the theory of computation, abstract machines are often used in thought experiments regarding computability or to analyse the complexity of algorithms. This use of abstract machines is fundamental to the field of computational complexity theory, such as finite state machines, Mealy machines, push-down automata, and Turing machines.

In computer science, formal methods are mathematically rigorous techniques for the specification, development, analysis, and verification of software and hardware systems. The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design.

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of intended algorithms underlying a system with respect to a certain formal specification or property, using formal methods of mathematics.

In programming language theory, semantics is the rigorous mathematical study of the meaning of programming languages. Semantics assigns computational meaning to valid strings in a programming language syntax.

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic or procedure. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of random determined by the random bits; thus either the running time, or the output are random variables.

In computer science, a loop invariant is a property of a program loop that is true before each iteration. It is a logical assertion, sometimes checked with a code assertion. Knowing its invariant(s) is essential in understanding the effect of a loop.

In computer science, concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the outcome. This allows for parallel execution of the concurrent units, which can significantly improve overall speed of the execution in multi-processor and multi-core systems. In more technical terms, concurrency refers to the decomposability of a program, algorithm, or problem into order-independent or partially-ordered components or units of computation.

In computer science, formal specifications are mathematically based techniques whose purpose are to help with the implementation of systems and software. They are used to describe a system, to analyze its behavior, and to aid in its design by verifying key properties of interest through rigorous and effective reasoning tools. These specifications are formal in the sense that they have a syntax, their semantics fall within one domain, and they are able to be used to infer useful information.

In computer science, unbounded nondeterminism or unbounded indeterminacy is a property of concurrency by which the amount of delay in servicing a request can become unbounded as a result of arbitration of contention for shared resources while still guaranteeing that the request will eventually be serviced. Unbounded nondeterminism became an important issue in the development of the denotational semantics of concurrency, and later became part of research into the theoretical concept of hypercomputation.

Programming language theory (PLT) is a branch of computer science that deals with the design, implementation, analysis, characterization, and classification of formal languages known as programming languages. Programming language theory is closely related to other fields including mathematics, software engineering, and linguistics. There are a number of academic conferences and journals in the area.

In computability theory, the halting problem is the problem of determining, from a description of an arbitrary computer program and an input, whether the program will finish running, or continue to run forever. The halting problem is undecidable, meaning that no general algorithm exists that solves the halting problem for all possible program–input pairs.

In computer science, interference freedom is a technique for proving partial correctness of concurrent programs with shared variables. Hoare logic had been introduced earlier to prove correctness of sequential programs. In her PhD thesis under advisor David Gries, Susan Owicki extended this work to apply to concurrent programs.

References

- "Human Language Technology. Challenges for Computer Science and Linguistics." Google Books. N.p., n.d. Web. 10 April 2017.

- "Security in Computing and Communications." Google Books. N.p., n.d. Web. 10 April 2017.

- "The Halting Problem of Alan Turing - A Most Merry and Illustrated Explanation." The Halting Problem of Alan Turing - A Most Merry and Illustrated Explanation. N.p., n.d. Web. 10 April 2017.

- Turner, Raymond, and Nicola Angius. "The Philosophy of Computer Science." Stanford Encyclopedia of Philosophy. Stanford University, 20 August 2013. Web. 10 April 2017.

- Dijkstra, E. W. "Program Correctness". U of Texas at Austin, Departments of Mathematics and Computer Sciences, Automatic Theorem Proving Project, 1970. Web.