In computer science, binary search, also known as half-interval search, logarithmic search, or binary chop, is a search algorithm that finds the position of a target value within a sorted array. Binary search compares the target value to the middle element of the array. If they are not equal, the half in which the target cannot lie is eliminated and the search continues on the remaining half, again taking the middle element to compare to the target value, and repeating this until the target value is found. If the search ends with the remaining half being empty, the target is not in the array.

Computational geometry is a branch of computer science devoted to the study of algorithms which can be stated in terms of geometry. Some purely geometrical problems arise out of the study of computational geometric algorithms, and such problems are also considered to be part of computational geometry. While modern computational geometry is a recent development, it is one of the oldest fields of computing with a history stretching back to antiquity.

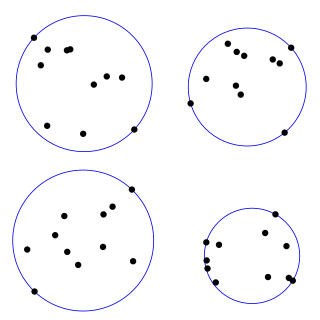

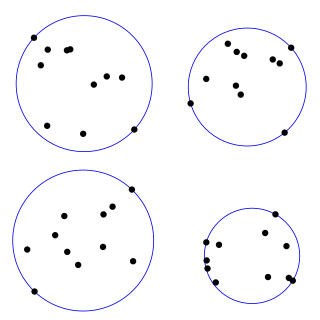

In mathematics, given a non-empty set of objects of finite extension in -dimensional space, for example a set of points, a bounding sphere, enclosing sphere or enclosing ball for that set is an -dimensional solid sphere containing all of these objects.

A Euclidean minimum spanning tree of a finite set of points in the Euclidean plane or higher-dimensional Euclidean space connects the points by a system of line segments with the points as endpoints, minimizing the total length of the segments. In it, any two points can reach each other along a path through the line segments. It can be found as the minimum spanning tree of a complete graph with the points as vertices and the Euclidean distances between points as edge weights.

In computer science, a k-d tree is a space-partitioning data structure for organizing points in a k-dimensional space. k-d trees are a useful data structure for several applications, such as searches involving a multidimensional search key and creating point clouds. k-d trees are a special case of binary space partitioning trees.

In statistics, the k-nearest neighbors algorithm (k-NN) is a non-parametric supervised learning method first developed by Evelyn Fix and Joseph Hodges in 1951, and later expanded by Thomas Cover. It is used for classification and regression. In both cases, the input consists of the k closest training examples in a data set. The output depends on whether k-NN is used for classification or regression:

Nearest neighbor search (NNS), as a form of proximity search, is the optimization problem of finding the point in a given set that is closest to a given point. Closeness is typically expressed in terms of a dissimilarity function: the less similar the objects, the larger the function values.

The closest pair of points problem or closest pair problem is a problem of computational geometry: given points in metric space, find a pair of points with the smallest distance between them. The closest pair problem for points in the Euclidean plane was among the first geometric problems that were treated at the origins of the systematic study of the computational complexity of geometric algorithms.

In computer science, the range searching problem consists of processing a set S of objects, in order to determine which objects from S intersect with a query object, called the range. For example, if S is a set of points corresponding to the coordinates of several cities, find the subset of cities within a given range of latitudes and longitudes.

Richard Jay Lipton is an American computer scientist who is Associate Dean of Research, Professor, and the Frederick G. Storey Chair in Computing in the College of Computing at the Georgia Institute of Technology. He has worked in computer science theory, cryptography, and DNA computing.

In computer science, locality-sensitive hashing (LSH) is an algorithmic technique that hashes similar input items into the same "buckets" with high probability. Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items.

Density-based spatial clustering of applications with noise (DBSCAN) is a data clustering algorithm proposed by Martin Ester, Hans-Peter Kriegel, Jörg Sander and Xiaowei Xu in 1996. It is a density-based clustering non-parametric algorithm: given a set of points in some space, it groups together points that are closely packed together, marking as outliers points that lie alone in low-density regions . DBSCAN is one of the most common, and most commonly cited, clustering algorithms.

In mathematics, the Johnson–Lindenstrauss lemma is a result named after William B. Johnson and Joram Lindenstrauss concerning low-distortion embeddings of points from high-dimensional into low-dimensional Euclidean space. The lemma states that a set of points in a high-dimensional space can be embedded into a space of much lower dimension in such a way that distances between the points are nearly preserved. The map used for the embedding is at least Lipschitz, and can even be taken to be an orthogonal projection.

Ordering points to identify the clustering structure (OPTICS) is an algorithm for finding density-based clusters in spatial data. It was presented by Mihael Ankerst, Markus M. Breunig, Hans-Peter Kriegel and Jörg Sander. Its basic idea is similar to DBSCAN, but it addresses one of DBSCAN's major weaknesses: the problem of detecting meaningful clusters in data of varying density. To do so, the points of the database are (linearly) ordered such that spatially closest points become neighbors in the ordering. Additionally, a special distance is stored for each point that represents the density that must be accepted for a cluster so that both points belong to the same cluster. This is represented as a dendrogram.

In computer science and data mining, MinHash is a technique for quickly estimating how similar two sets are. The scheme was invented by Andrei Broder (1997), and initially used in the AltaVista search engine to detect duplicate web pages and eliminate them from search results. It has also been applied in large-scale clustering problems, such as clustering documents by the similarity of their sets of words.

(1+ε)-approximate nearest neighbor search is a variant of the nearest neighbor search problem. A solution to the -approximate nearest neighbor search is a point or multiple points within distance R from a query point, where R is the distance between the query point and its true nearest neighbor.

Nathan S. Netanyahu is an Israeli computer scientist, a professor of computer science at Bar-Ilan University.

In computer science, the cell-probe model is a model of computation similar to the random-access machine, except that all operations are free except memory access. This model is useful for proving lower bounds of algorithms for data structure problems.

In computer science, a retrieval data structure, also known as static function, is a space-efficient dictionary-like data type composed of a collection of pairs that allows the following operations:

Approximate Membership Query Filter (AMQ-Filter) is a group of space-efficient probabilistic data structures that supports approximate membership queries. An approximate membership query answers if an element is in a set or not with a false positive rate of .