In mathematics, the associative property is a property of some binary operations, which means that rearranging the parentheses in an expression will not change the result. In propositional logic, associativity is a valid rule of replacement for expressions in logical proofs.

In mathematics, the Euclidean algorithm, or Euclid's algorithm, is an efficient method for computing the greatest common divisor (GCD) of two integers (numbers), the largest number that divides them both without a remainder. It is named after the ancient Greek mathematician Euclid, who first described it in his Elements . It is an example of an algorithm, a step-by-step procedure for performing a calculation according to well-defined rules, and is one of the oldest algorithms in common use. It can be used to reduce fractions to their simplest form, and is a part of many other number-theoretic and cryptographic calculations.

In mathematics, the greatest common divisor (GCD) of two or more integers, which are not all zero, is the largest positive integer that divides each of the integers. For two integers x, y, the greatest common divisor of x and y is denoted . For example, the GCD of 8 and 12 is 4, that is, gcd(8, 12) = 4.

In mathematics, an integral domain is a nonzero commutative ring in which the product of any two nonzero elements is nonzero. Integral domains are generalizations of the ring of integers and provide a natural setting for studying divisibility. In an integral domain, every nonzero element a has the cancellation property, that is, if a ≠ 0, an equality ab = ac implies b = c.

In mathematics, a principal ideal domain, or PID, is an integral domain in which every ideal is principal, i.e., can be generated by a single element. More generally, a principal ideal ring is a nonzero commutative ring whose ideals are principal, although some authors refer to PIDs as principal rings. The distinction is that a principal ideal ring may have zero divisors whereas a principal ideal domain cannot.

In number theory, a Gaussian integer is a complex number whose real and imaginary parts are both integers. The Gaussian integers, with ordinary addition and multiplication of complex numbers, form an integral domain, usually written as or

In mathematics, a unique factorization domain (UFD) is a ring in which a statement analogous to the fundamental theorem of arithmetic holds. Specifically, a UFD is an integral domain in which every non-zero non-unit element can be written as a product of irreducible elements, uniquely up to order and units.

In algebra, an irreducible element of an integral domain is a non-zero element that is not invertible, and is not the product of two non-invertible elements.

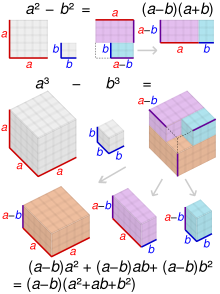

In mathematics, factorization (or factorisation, see English spelling differences) or factoring consists of writing a number or another mathematical object as a product of several factors, usually smaller or simpler objects of the same kind. For example, 3 × 5 is an integer factorization of 15, and (x – 2)(x + 2) is a polynomial factorization of x2 – 4.

In mathematics, specifically ring theory, a principal ideal is an ideal in a ring that is generated by a single element of through multiplication by every element of The term also has another, similar meaning in order theory, where it refers to an (order) ideal in a poset generated by a single element which is to say the set of all elements less than or equal to in

In mathematics, the complex conjugate of a complex number is the number with an equal real part and an imaginary part equal in magnitude but opposite in sign. That is, if and are real numbers then the complex conjugate of is The complex conjugate of is often denoted as or .

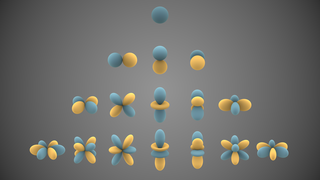

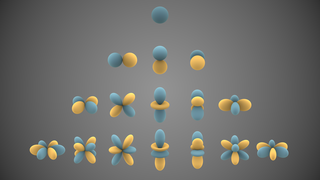

In mathematics and physical science, spherical harmonics are special functions defined on the surface of a sphere. They are often employed in solving partial differential equations in many scientific fields. A list of the spherical harmonics is available in Table of spherical harmonics.

Pollard's rho algorithm is an algorithm for integer factorization. It was invented by John Pollard in 1975. It uses only a small amount of space, and its expected running time is proportional to the square root of the smallest prime factor of the composite number being factorized.

The quadratic sieve algorithm (QS) is an integer factorization algorithm and, in practice, the second-fastest method known. It is still the fastest for integers under 100 decimal digits or so, and is considerably simpler than the number field sieve. It is a general-purpose factorization algorithm, meaning that its running time depends solely on the size of the integer to be factored, and not on special structure or properties. It was invented by Carl Pomerance in 1981 as an improvement to Schroeppel's linear sieve.

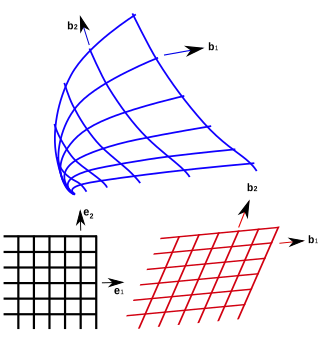

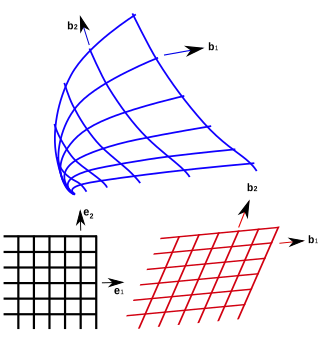

In geometry, curvilinear coordinates are a coordinate system for Euclidean space in which the coordinate lines may be curved. These coordinates may be derived from a set of Cartesian coordinates by using a transformation that is locally invertible at each point. This means that one can convert a point given in a Cartesian coordinate system to its curvilinear coordinates and back. The name curvilinear coordinates, coined by the French mathematician Lamé, derives from the fact that the coordinate surfaces of the curvilinear systems are curved.

In number theory, Dixon's factorization method is a general-purpose integer factorization algorithm; it is the prototypical factor base method. Unlike for other factor base methods, its run-time bound comes with a rigorous proof that does not rely on conjectures about the smoothness properties of the values taken by a polynomial.

Fermat's factorization method, named after Pierre de Fermat, is based on the representation of an odd integer as the difference of two squares:

Shanks' square forms factorization is a method for integer factorization devised by Daniel Shanks as an improvement on Fermat's factorization method.

In algebra, the greatest common divisor of two polynomials is a polynomial, of the highest possible degree, that is a factor of both the two original polynomials. This concept is analogous to the greatest common divisor of two integers.

In mathematics and computer algebra the factorization of a polynomial consists of decomposing it into a product of irreducible factors. This decomposition is theoretically possible and is unique for polynomials with coefficients in any field, but rather strong restrictions on the field of the coefficients are needed to allow the computation of the factorization by means of an algorithm. In practice, algorithms have been designed only for polynomials with coefficients in a finite field, in the field of rationals or in a finitely generated field extension of one of them.