Natural language processing (NLP) is an interdisciplinary subfield of computer science and information retrieval. It is primarily concerned with giving computers the ability to support and manipulate human language. It involves processing natural language datasets, such as text corpora or speech corpora, using either rule-based or probabilistic machine learning approaches. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. To this end, natural language processing often borrows ideas from theoretical linguistics. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves.

Corpus linguistics is an empirical method for the study of language by way of a text corpus. Corpora are balanced, often stratified collections of authentic, "real world" text, drawn from speech or writing, that aim to represent a given linguistic variety. Today, corpora are generally machine-readable data collections.

A parse tree or parsing tree or derivation tree or concrete syntax tree is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term parse tree itself is used primarily in computational linguistics; in theoretical syntax, the term syntax tree is more common.
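As a minimal sketch of the idea, a parse tree can be held as nested tuples in which the first element is a node label and the remaining elements are its ordered children. The toy sentence, the labels (`S`, `NP`, `VP`, and so on) and the helper names `leaves` and `bracketed` are assumptions chosen for illustration only.

```python
# A parse tree as nested tuples: (label, child, child, ...).
# Leaves are plain strings. The sentence and labels are illustrative only.
tree = (
    "S",
    ("NP", ("Det", "the"), ("N", "dog")),
    ("VP", ("V", "barks")),
)


def leaves(node):
    """Return the words at the leaves, left to right."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words


def bracketed(node):
    """Render the tree in labelled-bracket notation."""
    if isinstance(node, str):
        return node
    inner = " ".join(bracketed(child) for child in node[1:])
    return "(" + node[0] + " " + inner + ")"


print(leaves(tree))     # ['the', 'dog', 'barks']
print(bracketed(tree))  # (S (NP (Det the) (N dog)) (VP (V barks)))
```

Because the children are kept in order, reading the leaves from left to right recovers the original string, which is what makes the tree "ordered" in the sense above.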

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, whether in natural language, computer languages, or data structures, according to the rules of a formal grammar. The term parsing comes from Latin pars (orationis), meaning part (of speech).

Link grammar (LG) is a theory of syntax by Davy Temperley and Daniel Sleator that builds relations between pairs of words, rather than constructing constituents in a phrase structure hierarchy. Link grammar is similar to dependency grammar, but dependency grammar includes a head-dependent relationship, whereas link grammar makes the head-dependent relationship optional. Colored Multiplanar Link Grammar (CMLG) is an extension of LG allowing crossing relations between pairs of words. The relationship between words is indicated with link types, which makes link grammar closely related to certain categorial grammars.

In corpus linguistics, part-of-speech tagging, also called grammatical tagging, is the process of marking up a word in a text (corpus) as corresponding to a particular part of speech, based on both its definition and its context. A simplified form of this is commonly taught to school-age children, in the identification of words as nouns, verbs, adjectives, adverbs, etc.
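The role of both definition and context can be illustrated with a toy tagger. This is a sketch under strong assumptions, not a practical tagger: the small `LEXICON`, the tag names, and the single context rule (an ambiguous noun/verb word is a noun after a determiner and a verb otherwise) are all invented for the example.

```python
# Toy lexicon: the "definition" of each word, as a set of possible tags.
# All entries and tag names are illustrative assumptions.
LEXICON = {
    "the": {"DET"},
    "a": {"DET"},
    "i": {"PRON"},
    "book": {"NOUN", "VERB"},  # ambiguous without context
    "flight": {"NOUN"},
    "read": {"VERB"},
}


def tag(words):
    """Tag each word using its lexicon entry and the previous tag."""
    tags = []
    for word in words:
        options = LEXICON.get(word.lower(), {"NOUN"})  # unknown words: guess NOUN
        if len(options) == 1:
            choice = next(iter(options))
        elif tags and tags[-1] == "DET":
            choice = "NOUN"  # context rule: after a determiner, prefer the noun reading
        else:
            choice = "VERB"  # otherwise prefer the verb reading
        tags.append(choice)
    return list(zip(words, tags))


print(tag(["Book", "a", "flight"]))
print(tag(["I", "read", "the", "book"]))
```

The word "book" receives a different tag in each sentence even though its lexicon entry is the same, which is the point of tagging by context rather than by lookup alone.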

In linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data.

Grammar induction is the process in machine learning of learning a formal grammar from a set of observations, thus constructing a model which accounts for the characteristics of the observed objects. More generally, grammatical inference is that branch of machine learning where the instance space consists of discrete combinatorial objects such as strings, trees and graphs.

Linguistic categories include

Error-driven learning is a type of reinforcement learning method. It adjusts a model's parameters based on the difference between the predicted and actual results. Such models stand out because they depend on environmental feedback instead of explicit labels or categories. They are based on the idea that language acquisition involves the minimization of the prediction error (MPSE). By leveraging these prediction errors, the models continually refine their expectations and decrease computational complexity. Typically, these algorithms are implemented with the GeneRec algorithm.
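The core idea, updating parameters in proportion to a prediction error, can be sketched with the classic delta (Widrow-Hoff) rule for a linear unit. This is not GeneRec; it is a simpler stand-in chosen for brevity, and the function name `delta_rule_step`, the learning rate, and the training example are assumptions.

```python
def delta_rule_step(weights, inputs, target, lr=0.1):
    """One error-driven update: move each weight along error * input."""
    prediction = sum(w * x for w, x in zip(weights, inputs))
    error = target - prediction  # difference between actual and predicted result
    new_weights = [w + lr * error * x for w, x in zip(weights, inputs)]
    return new_weights, error


# Repeatedly present one made-up example and record the error each time.
weights = [0.0, 0.0]
errors = []
for _ in range(10):
    weights, error = delta_rule_step(weights, inputs=[1.0, 2.0], target=1.0)
    errors.append(error)

print(errors[0], errors[-1])
```

With these values the error shrinks by a constant factor on every step, so the last recorded error is much smaller in magnitude than the first.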

Structured prediction or structured (output) learning is an umbrella term for supervised machine learning techniques that involve predicting structured objects, rather than scalar discrete or real values.
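To make the contrast with scalar prediction concrete, the following sketch predicts a whole tag sequence at once by searching for the highest-scoring sequence. The score tables `EMIT` and `TRANS` contain invented numbers rather than learned parameters, and the exhaustive search is only workable for tiny inputs; practical systems use dynamic programming or other inference methods.

```python
from itertools import product

TAGS = ["DET", "NOUN", "VERB"]

# Hypothetical scores; a trained model would supply these.
EMIT = {
    ("the", "DET"): 2.0,
    ("dog", "NOUN"): 1.5,
    ("dog", "VERB"): 0.5,
    ("barks", "VERB"): 1.0,
    ("barks", "NOUN"): 0.8,
}
TRANS = {
    ("DET", "NOUN"): 1.0,
    ("NOUN", "VERB"): 1.0,
    ("NOUN", "NOUN"): 0.2,
}


def score(words, tags):
    """Score a complete tag sequence: word-tag fit plus tag-to-tag fit."""
    total = sum(EMIT.get((w, t), 0.0) for w, t in zip(words, tags))
    total += sum(TRANS.get(pair, 0.0) for pair in zip(tags, tags[1:]))
    return total


def predict(words):
    """Return the best-scoring tag sequence by exhaustive search."""
    candidates = product(TAGS, repeat=len(words))
    return max(candidates, key=lambda tags: score(words, tags))


print(predict(["the", "dog", "barks"]))  # ('DET', 'NOUN', 'VERB')
```

The output is a tuple whose parts are scored jointly, so the choice for one word can depend on the choices for its neighbours.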

The history of natural language processing describes the advances of natural language processing. There is some overlap with the history of machine translation, the history of speech recognition, and the history of artificial intelligence.

A constrained conditional model (CCM) is a machine learning and inference framework that augments the learning of conditional models with declarative constraints. The constraint can be used as a way to incorporate expressive prior knowledge into the model and bias the assignments made by the learned model to satisfy these constraints. The framework can be used to support decisions in an expressive output space while maintaining modularity and tractability of training and inference.

MedSLT is a medium-ranged open source spoken language translator developed by the University of Geneva. It is funded by the Swiss National Science Foundation. The system has been designed for the medical domain. It currently covers the doctor-patient diagnosis dialogues for the domains of headache, chest and abdominal pain in English, French, Japanese, Spanish, Catalan and Arabic. The vocabulary used ranges from 350 to 1000 words depending on the domain and language pair.

Deep linguistic processing is a natural language processing framework which draws on theoretical and descriptive linguistics. It models language predominantly by way of theoretical syntactic/semantic theory. Deep linguistic processing approaches differ from "shallower" methods in that they yield more expressive and structural representations which directly capture long-distance dependencies and underlying predicate-argument structures.

The knowledge-intensive approach of deep linguistic processing requires considerable computational power, and has in the past sometimes been judged intractable. However, research in the early 2000s made considerable advances in the efficiency of deep processing. Today, efficiency is no longer a major problem for applications using deep linguistic processing.

The following outline is provided as an overview of and topical guide to natural-language processing:

In natural language processing (NLP), a word embedding is a representation of a word. The embedding is used in text analysis. Typically, the representation is a real-valued vector that encodes the meaning of the word in such a way that the words that are closer in the vector space are expected to be similar in meaning. Word embeddings can be obtained using language modeling and feature learning techniques, where words or phrases from the vocabulary are mapped to vectors of real numbers.
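Closeness in the vector space is commonly measured with cosine similarity. The sketch below uses three hand-written vectors; the numbers in `EMBEDDINGS` are made up for illustration and do not come from any trained model.

```python
import math

# Hand-written 3-dimensional vectors, for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine(u, v):
    """Cosine similarity: the dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(cosine(EMBEDDINGS["cat"], EMBEDDINGS["dog"]))
print(cosine(EMBEDDINGS["cat"], EMBEDDINGS["car"]))
```

With these toy vectors, "cat" is much closer to "dog" than to "car", mirroring the expectation that nearby vectors correspond to words of similar meaning.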

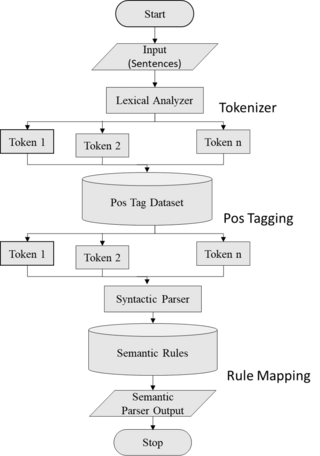

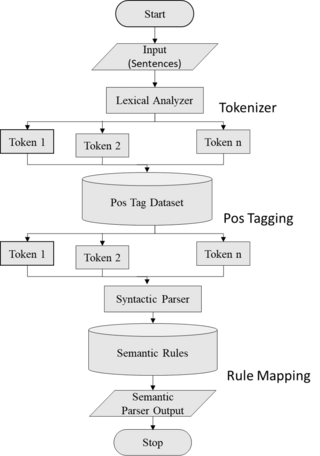

Semantic parsing is the task of converting a natural language utterance to a logical form: a machine-understandable representation of its meaning. Semantic parsing can thus be understood as extracting the precise meaning of an utterance. Applications of semantic parsing include machine translation, question answering, ontology induction, automated reasoning, and code generation. The phrase was first used in the 1970s by Yorick Wilks as the basis for machine translation programs working with only semantic representations. Semantic parsing is one of the important tasks in computational linguistics and natural language processing.

Universal Dependencies, frequently abbreviated as UD, is an international cooperative project to create treebanks of the world's languages. These treebanks are openly accessible and available. Core applications are automated text processing in the field of natural language processing (NLP) and research into natural language syntax and grammar, especially within linguistic typology. The project's primary aim is to achieve cross-linguistic consistency of annotation, while still permitting language-specific extensions when necessary. The annotation scheme has its roots in three related projects: Stanford Dependencies, Google universal part-of-speech tags, and the Interset interlingua for morphosyntactic tagsets. The UD annotation scheme uses a representation in the form of dependency trees as opposed to phrase structure trees. At the present time, there are just over 200 treebanks of more than 100 languages available in the UD inventory.
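A dependency tree of this kind can be sketched as a list of tokens that each point to their head. The example below is a simplified, hand-annotated illustration: the tuple layout (id, form, part-of-speech tag, head, relation) loosely follows the spirit of the tabular treebank format but omits most of its fields, and the helper names are hypothetical.

```python
# Each token: (id, form, upos, head, deprel). A head of 0 marks the root.
# The sentence and its annotation are a hand-made illustration.
SENTENCE = [
    (1, "The", "DET", 2, "det"),
    (2, "dog", "NOUN", 3, "nsubj"),
    (3, "barks", "VERB", 0, "root"),
]


def root(tokens):
    """Return the single token whose head is 0."""
    roots = [t for t in tokens if t[3] == 0]
    if len(roots) != 1:
        raise ValueError("a dependency tree needs exactly one root")
    return roots[0]


def dependents(tokens, head_id):
    """Return the tokens directly governed by the token with id head_id."""
    return [t for t in tokens if t[3] == head_id]


def path_to_root(tokens, token_id):
    """Follow head links upward and return the word forms along the way."""
    by_id = {t[0]: t for t in tokens}
    path = []
    current = token_id
    while current != 0:
        if current in path:
            raise ValueError("cycle detected; not a tree")
        path.append(current)
        current = by_id[current][3]
    return [by_id[i][1] for i in path]


print(root(SENTENCE)[1])          # barks
print(path_to_root(SENTENCE, 1))  # ['The', 'dog', 'barks']
```

Unlike a phrase structure tree, there are no intermediate phrase nodes here: every node is a word, and structure is expressed only through labelled head-dependent links.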

Syntactic parsing is the automatic analysis of syntactic structure of natural language, especially syntactic relations and labelling spans of constituents. It is motivated by the problem of structural ambiguity in natural language: a sentence can be assigned multiple grammatical parses, so some kind of knowledge beyond computational grammar rules is needed to tell which parse is intended. Syntactic parsing is one of the important tasks in computational linguistics and natural language processing, and has been a subject of research since the mid-20th century with the advent of computers.
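Structural ambiguity can be demonstrated by counting the parses that a small grammar assigns to a sentence. The sketch below uses a CKY-style chart over a toy grammar in Chomsky normal form; the rules in `BINARY`, the `LEXICON`, and the example sentence are assumptions made for illustration, and the function only counts parses rather than building them.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form: (parent, left child, right child).
BINARY = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # prepositional phrase attaches to the verb phrase
    ("NP", "NP", "PP"),  # prepositional phrase attaches to the noun phrase
    ("NP", "Det", "N"),
    ("PP", "P", "NP"),
]
LEXICON = {
    "I": ["NP"],
    "saw": ["V"],
    "the": ["Det"],
    "man": ["N"],
    "telescope": ["N"],
    "with": ["P"],
}


def count_parses(words, root="S"):
    """Count the distinct parse trees for words using a CKY chart."""
    n = len(words)
    chart = defaultdict(int)  # (start, end, label) -> number of subtrees
    for i, word in enumerate(words):
        for label in LEXICON[word]:
            chart[i, i + 1, label] += 1
    for width in range(2, n + 1):
        for start in range(n - width + 1):
            end = start + width
            for mid in range(start + 1, end):
                for parent, left, right in BINARY:
                    chart[start, end, parent] += (
                        chart[start, mid, left] * chart[mid, end, right]
                    )
    return chart[0, n, root]


sentence = "I saw the man with the telescope".split()
print(count_parses(sentence))  # 2
```

The two parses correspond to the two attachment sites for "with the telescope": either the seeing was done with the telescope, or the man has it. The grammar alone cannot choose between them, which is why additional knowledge, such as statistics from a treebank, is needed.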