A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs).

The Pentium is a microprocessor that was introduced by Intel on March 22, 1993, as the first CPU in the Pentium brand. It was instruction set compatible with the 80486 but was a new and very different microarchitecture design. The P5 Pentium was the first superscalar x86 microarchitecture and the world's first superscalar microprocessor to be in mass production. It included dual integer pipelines, a faster floating-point unit, wider data bus, separate code and data caches, and many other techniques and features to enhance performance and support security, encryption, and multiprocessing, for workstations and servers.

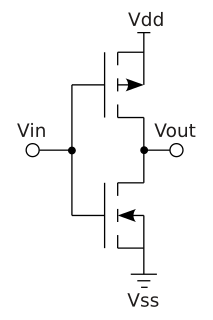

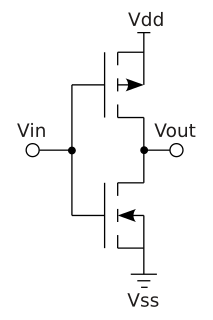

Complementary metal–oxide–semiconductor, also known as complementary-symmetry metal–oxide–semiconductor (COS-MOS), is a type of metal–oxide–semiconductor field-effect transistor (MOSFET) fabrication process that uses complementary and symmetrical pairs of p-type and n-type MOSFETs for logic functions. CMOS technology is used for constructing integrated circuit (IC) chips, including microprocessors, microcontrollers, memory chips, and other digital logic circuits. CMOS technology is also used for analog circuits such as image sensors, data converters, RF circuits, and highly integrated transceivers for many types of communication.

A rectifier is an electrical device that converts alternating current (AC), which periodically reverses direction, to direct current (DC), which flows in only one direction. The reverse operation is performed by the inverter.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

Processor power dissipation or processing unit power dissipation is the process in which computer processors consume electrical energy, and dissipate this energy in the form of heat due to the resistance in the electronic circuits.

In computing, the clock rate or clock speed typically refers to the frequency at which the clock generator of a processor can generate pulses, which are used to synchronize the operations of its components, and is used as an indicator of the processor's speed. It is measured in clock cycles per second or its equivalent, the SI unit hertz (Hz).

In electronics, a voltage divider is a passive linear circuit that produces an output voltage (Vout) that is a fraction of its input voltage (Vin). Voltage division is the result of distributing the input voltage among the components of the divider. A simple example of a voltage divider is two resistors connected in series, with the input voltage applied across the resistor pair and the output voltage emerging from the connection between them.

In computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as µarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

POWER7 is a family of superscalar multi-core microprocessors based on the Power ISA 2.06 instruction set architecture released in 2010 that succeeded the POWER6 and POWER6+. POWER7 was developed by IBM at several sites including IBM's Rochester, MN; Austin, TX; Essex Junction, VT; T. J. Watson Research Center, NY; Bromont, QC and IBM Deutschland Research & Development GmbH, Böblingen, Germany laboratories. IBM announced servers based on POWER7 on 8 February 2010.

Enhanced SpeedStep is a series of dynamic frequency scaling technologies built into some Intel microprocessors that allow the clock speed of the processor to be dynamically changed by software. This allows the processor to meet the instantaneous performance needs of the operation being performed, while minimizing power draw and heat generation. EIST was introduced in several Prescott 6 series in the first quarter of 2005, namely the Pentium 4 660. Intel Speed Shift Technology (SST) was introduced in Intel Skylake Processor.

A buck converter is a DC-to-DC power converter which steps down voltage from its input (supply) to its output (load). It is a class of switched-mode power supply (SMPS) typically containing at least two semiconductors and at least one energy storage element, a capacitor, inductor, or the two in combination. To reduce voltage ripple, filters made of capacitors are normally added to such a converter's output and input. It is called a buck converter because the voltage across the inductor “bucks” or opposes the supply voltage.

Ripple in electronics is the residual periodic variation of the DC voltage within a power supply which has been derived from an alternating current (AC) source. This ripple is due to incomplete suppression of the alternating waveform after rectification. Ripple voltage originates as the output of a rectifier or from generation and commutation of DC power.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. When it comes to high computer performance, one or more of the following factors might be involved:

The history of general-purpose CPUs is a continuation of the earlier history of computing hardware.

Intel Teraflops Research Chip is a research manycore processor containing 80 cores, using a network-on-chip architecture, developed by Intel's Tera-Scale Computing Research Program. It was manufactured using a 65 nm CMOS process with eight layers of copper interconnect and contains 100 million transistors on a 275 mm2 die. Its design goal was to demonstrate a modular architecture capable of a sustained performance of 1.0 TFLOPS while dissipating less than 100 W. Research from the project was later incorporated into Xeon Phi. The technical lead of the project was Sriram R. Vangal.

Dynamic frequency scaling is a power management technique in computer architecture whereby the frequency of a microprocessor can be automatically adjusted "on the fly" depending on the actual needs, to conserve power and reduce the amount of heat generated by the chip. Dynamic frequency scaling helps preserve battery on mobile devices and decrease cooling cost and noise on quiet computing settings, or can be useful as a security measure for overheated systems.

Dynamic voltage scaling is a power management technique in computer architecture, where the voltage used in a component is increased or decreased, depending upon circumstances. Dynamic voltage scaling to increase voltage is known as overvolting; dynamic voltage scaling to decrease voltage is known as undervolting. Undervolting is done in order to conserve power, particularly in laptops and other mobile devices, where energy comes from a battery and thus is limited, or in rare cases, to increase reliability. Overvolting is done in order to support higher frequencies for performance.

In computer engineering, computer architecture is a set of rules and methods that describe the functionality, organization, and implementation of computer systems. The architecture of a system refers to its structure in terms of separately specified components of that system and their interrelationships.

Electronic systems’ power consumption has been a real challenge for Hardware and Software designers as well as users especially in portable devices like cell phones and laptop computers. Power consumption also has been an issue for many industries that use computer systems heavily such as Internet service providers using servers or companies with many employees using computers and other computational devices. Many different approaches have been discovered by researchers to estimate power consumption efficiently. This survey paper focuses on the different methods where power consumption can be estimated or measured in real-time.