A conceptual schema or conceptual data model is a high-level description of the informational needs underlying the design of a database. It typically includes only the main concepts and the main relationships among them. It is usually a first-cut model, with insufficient detail to build an actual database. This level describes the structure of the whole database for a group of users. The conceptual model is also the data model used to describe the conceptual schema when a database system is implemented. It hides the internal details of physical storage and focuses on describing entities, data types, relationships and constraints.

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner.
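
The car example above can be sketched in code. The following is a minimal illustration only: the class names `Car` and `Owner`, the field names, and the sample values are assumptions chosen for this sketch, not part of any standard data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Owner:
    """Hypothetical element describing who owns a car."""
    name: str


@dataclass(frozen=True)
class Car:
    """Hypothetical data element composed of other elements."""
    color: str      # element representing the car's color
    size: str       # element representing the car's size
    owner: Owner    # element defining the car's owner


# Sample instance with made-up values.
car = Car(color="red", size="compact", owner=Owner(name="Alice"))
```

Here the data model fixes which elements a car record must contain and how they relate, independently of how the record is eventually stored.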

IDEF, initially an abbreviation of ICAM Definition and renamed in 1999 as Integration Definition, is a family of modeling languages in the field of systems and software engineering. They cover a wide range of uses, from functional modeling to data, simulation, object-oriented analysis and design, and knowledge acquisition. These definition languages were developed under funding from the U.S. Air Force and, although still most commonly used by it and by other military and United States Department of Defense (DoD) agencies, are in the public domain.

Terence Aidan (Terry) Halpin is an Australian computer scientist who is known for his formalization of the Object Role Modeling notation.

An entity–relationship model describes interrelated things of interest in a specific domain of knowledge. A basic ER model is composed of entity types and specifies relationships that can exist between entities.
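
As a rough sketch of the idea, a basic ER model can be written down as a set of entity types plus the relationship types allowed between them. The names used here (`EntityType`, `RelationshipType`, `Person`, `Car`, `owns`) and the string encoding of cardinality are assumptions made for illustration, not a standard ER notation.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class EntityType:
    """A kind of thing of interest, with its attribute names."""
    name: str
    attributes: Tuple[str, ...] = ()


@dataclass(frozen=True)
class RelationshipType:
    """A relationship that can exist between entities of two types."""
    name: str
    source: EntityType
    target: EntityType
    cardinality: str  # hypothetical encoding, e.g. "1:N"


def describe(rel: RelationshipType) -> str:
    """Render a relationship type as a short readable sentence."""
    return f"{rel.source.name} {rel.name} {rel.target.name} ({rel.cardinality})"


# Hypothetical example domain: people who own cars.
person = EntityType("Person", ("name",))
car = EntityType("Car", ("color", "size"))
owns = RelationshipType("owns", person, car, "1:N")

print(describe(owns))  # Person owns Car (1:N)
```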

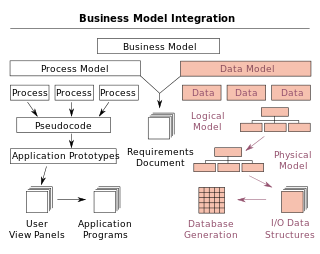

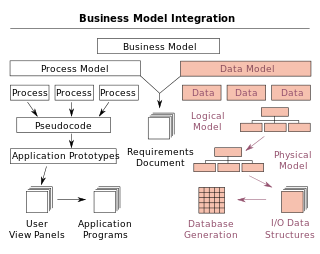

Data modeling in software engineering is the process of creating a data model for an information system by applying certain formal techniques. It may be applied as part of the broader model-driven engineering (MDE) concept.

Object–role modeling (ORM) is used to model the semantics of a universe of discourse. ORM is often used for data modeling and software engineering.

A logical data model or logical schema is a data model of a specific problem domain expressed independently of a particular database management product or storage technology but in terms of data structures such as relational tables and columns, object-oriented classes, or XML tags. This is as opposed to a conceptual data model, which describes the semantics of an organization without reference to technology.
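
To make the distinction concrete, the sketch below describes a small logical schema as tables and columns without tying it to any database product. The `Table` and `Column` classes, the `foreign_keys` helper, and the `owner`/`car` tables are all hypothetical names introduced for this illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Column:
    """A column in a relational table, described product-independently."""
    name: str
    type: str                          # generic type name, not vendor-specific
    primary_key: bool = False
    references: Optional[str] = None   # "table.column" if this is a foreign key


@dataclass(frozen=True)
class Table:
    """A relational table made up of columns."""
    name: str
    columns: Tuple[Column, ...]


def foreign_keys(table: Table) -> List[Tuple[str, str]]:
    """List (column, referenced column) pairs for a table's foreign keys."""
    return [(c.name, c.references) for c in table.columns if c.references]


# Hypothetical logical schema: each car row points at one owner row.
owner = Table("owner", (
    Column("owner_id", "integer", primary_key=True),
    Column("name", "text"),
))
car = Table("car", (
    Column("car_id", "integer", primary_key=True),
    Column("color", "text"),
    Column("size", "text"),
    Column("owner_id", "integer", references="owner.owner_id"),
))

print(foreign_keys(car))  # [('owner_id', 'owner.owner_id')]
```

A conceptual model of the same domain would state only that a person owns cars; the keys, column types and foreign-key column appear at the logical level.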

An information model in software engineering is a representation of concepts and the relationships, constraints, rules, and operations to specify data semantics for a chosen domain of discourse. Typically it specifies relations between kinds of things, but may also include relations with individual things. It can provide sharable, stable, and organized structure of information requirements or knowledge for the domain context.

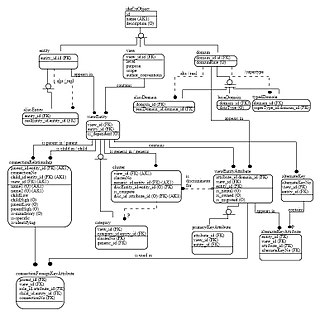

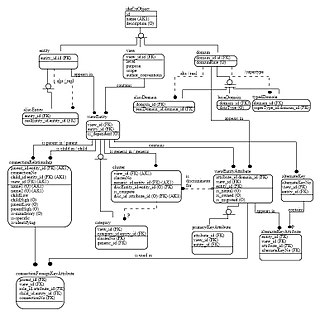

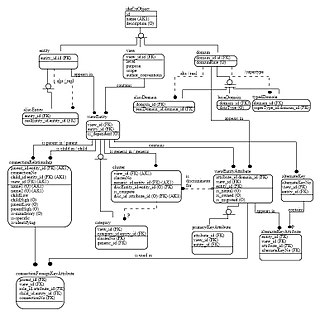

Integration DEFinition for information modeling (IDEF1X) is a data modeling language for the development of semantic data models. IDEF1X is used to produce a graphical information model which represents the structure and semantics of information within an environment or system.

Jean Leonardus Gerardus (Jan) Dietz is a Dutch Information Systems researcher, Professor Emeritus of Information Systems Design at the Delft University of Technology, known for the development of the Design & Engineering Methodology for Organisations and his work on Enterprise Engineering.

A semantic data model (SDM) is a high-level, semantics-based description and structuring formalism for databases. This database model is designed to capture more of the meaning of an application environment than is possible with contemporary database models. An SDM specification describes a database in terms of the kinds of entities that exist in the application environment, the classifications and groupings of those entities, and the structural interconnections among them. SDM provides a collection of high-level modeling primitives to capture the semantics of an application environment. By accommodating derived information in a database structural specification, SDM allows the same information to be viewed in several ways; this makes it possible to directly accommodate the variety of needs and processing requirements typically present in database applications. The design of SDM is based on its designers' experience in using a preliminary version of it. SDM is designed to enhance the effectiveness and usability of database systems. An SDM database description can serve as a formal specification and documentation tool for a database; it can provide a basis for supporting a variety of powerful user interface facilities; it can serve as a conceptual database model in the database design process; and it can be used as the database model for a new kind of database management system.

Ronald K. (Ron) Stamper was a British computer scientist, formerly a researcher at the LSE and emeritus professor at the University of Twente, known for his pioneering work in organisational semiotics and the creation of the MEASUR methodology and the SEDITA framework.

NORMA is a conceptual modeling tool that implements the object-role modeling (ORM) method.

Jacobus Nicolaas (Sjaak) Brinkkemper is a Dutch computer scientist, and Full Professor of organisation and information at the Department of Information and Computing Sciences of Utrecht University.

The following is provided as an overview of and topical guide to databases:

Henderik Alex (Erik) Proper is a Dutch computer scientist, an FNR PEARL Laureate, and a senior research manager within the Computer Science (ITIS) department of the Luxembourg Institute of Science and Technology (LIST). He is also adjunct professor in data and knowledge engineering at the University of Luxembourg. He is known for work on conceptual modeling, enterprise architecture and enterprise engineering.

Eckhard D. Falkenberg is a German scientist and Professor Emeritus of Information Systems at the Radboud University Nijmegen. He is known for his contributions in the fields of information modelling, especially object-role modeling, and the conceptual foundations of information systems.

Cognition enhanced Natural language Information Analysis Method (CogNIAM) is a conceptual fact-based modelling method that aims to integrate the different dimensions of knowledge: data, rules, processes and semantics. To represent these dimensions, the world standards SBVR, BPMN and DMN from the Object Management Group (OMG) are used. CogNIAM, a successor of NIAM, is based on the work of knowledge scientist Sjir Nijssen.