Consumer privacy is information privacy as it relates to the consumers of products and services.

Information privacy is the relationship between the collection and dissemination of data, technology, the public expectation of privacy, contextual information norms, and the legal and political issues surrounding them. It is also known as data privacy or data protection.

The Information Commissioner's Office (ICO) is a non-departmental public body which reports directly to the Parliament of the United Kingdom and is sponsored by the Department for Science, Innovation and Technology. It is the independent regulatory office dealing with the Data Protection Act 2018, the General Data Protection Regulation, and the Privacy and Electronic Communications Regulations 2003 across the UK, and with the Freedom of Information Act 2000 and the Environmental Information Regulations 2004 in England, Wales and Northern Ireland and, to a limited extent, in Scotland. When auditing an organisation, it uses Symbiant's audit software.

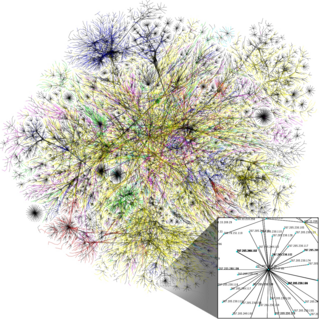

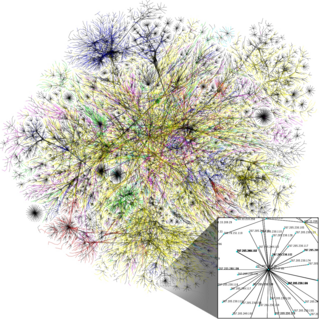

Internet privacy involves the right or mandate of personal privacy concerning the storage, re-purposing, provision to third parties, and display of information pertaining to oneself via the Internet. Internet privacy is a subset of data privacy. Privacy concerns have been articulated from the beginnings of large-scale computer sharing and especially relate to mass surveillance.

A data broker is an individual or company that specializes in collecting personal data or data about companies, mostly from public records but sometimes sourced privately, and selling or licensing such information to third parties for a variety of uses. Sources, usually Internet-based since the 1990s, may include census and electoral roll records, social networking sites, court reports and purchase histories. The information from data brokers may be used in background checks by employers and housing providers.

A privacy policy is a statement or legal document that discloses some or all of the ways a party gathers, uses, discloses, and manages a customer or client's data. Personal information can be anything that can be used to identify an individual, including but not limited to the person's name, address, date of birth, marital status, contact information, ID issue and expiry date, financial records, credit information, medical history, where one travels, and intentions to acquire goods and services. In the case of a business, it is often a statement that declares the party's policy on how it collects, stores, and releases personal information. It informs the client what specific information is collected, and whether it is kept confidential, shared with partners, or sold to other firms or enterprises. Privacy policies typically represent a broader, more generalized treatment, as opposed to data use statements, which tend to be more detailed and specific.

Personal data, also known as personal information or personally identifiable information (PII), is any information related to an identifiable person.

Information privacy, data privacy or data protection laws provide a legal framework on how to obtain, use and store data of natural persons. The various laws around the world describe the rights of natural persons to control who is using their data. This usually includes the right to get details on which data is stored and for what purpose, and to request its deletion when that purpose no longer applies.

Privacy law is a set of regulations that govern the collection, storage, and utilization of personal information from healthcare, governments, companies, public or private entities, or individuals.

Digital privacy is often used in contexts that promote advocacy on behalf of individual and consumer privacy rights in e-services and is typically used in opposition to the practice among many e-marketers, businesses, and companies of collecting and using such information and data. Digital privacy, a crucial aspect of modern online interactions and services, can be defined under three related sub-categories: information privacy, communication privacy, and individual privacy.

Since the arrival of early social networking sites in the early 2000s, online social networking platforms have expanded exponentially, with the biggest names in social media in the mid-2010s being Facebook, Instagram, Twitter and Snapchat. The massive influx of personal information that has become available online and stored in the cloud has put user privacy at the forefront of discussion about whether databases can safely store such personal information. The extent to which users and social media platform administrators can access user profiles has become a new topic of ethical consideration, and the legality, awareness, and boundaries of subsequent privacy violations are critical concerns in the technological age.

Privacy by design is an approach to systems engineering initially developed by Ann Cavoukian and formalized in a 1995 report on privacy-enhancing technologies by a joint team of the Information and Privacy Commissioner of Ontario (Canada), the Dutch Data Protection Authority, and the Netherlands Organisation for Applied Scientific Research. The privacy by design framework was published in 2009 and adopted by the International Assembly of Privacy Commissioners and Data Protection Authorities in 2010. Privacy by design calls for privacy to be taken into account throughout the whole engineering process. The concept is an example of value sensitive design, i.e., taking human values into account in a well-defined manner throughout the process.

Do Not Track legislation protects Internet users' right to choose whether or not they want to be tracked by third-party websites. It has been called the online version of "Do Not Call". This type of legislation is supported by privacy advocates and opposed by advertisers and services that use tracking information to personalize web content. Do Not Track (DNT) is a formerly official HTTP header field, designed to allow internet users to opt out of tracking by websites. Tracking here includes the collection of data regarding a user's activity across multiple distinct contexts, and the retention, use, or sharing of that data outside its context. Efforts to standardize Do Not Track by the World Wide Web Consortium did not reach their goal and ended in September 2018 due to insufficient deployment and support.
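
As an illustration of how a server could read the header, the following is a minimal sketch rather than a reference implementation. It assumes the conventional field values from the W3C drafts (`DNT: 1` for opting out of tracking, `DNT: 0` for consenting to it, and no header when no preference is expressed). The function names `dnt_preference` and `may_track` are hypothetical, and honouring the header has always been voluntary for websites.

```python
from typing import Mapping, Optional


def dnt_preference(headers: Mapping[str, str]) -> Optional[bool]:
    """Interpret a request's DNT header (hypothetical helper).

    Returns True if the user opted out of tracking ("1"),
    False if the user consented to tracking ("0"),
    and None if no recognised preference was sent.
    """
    # HTTP header names are case-insensitive, so normalise before lookup.
    normalised = {name.lower(): value.strip() for name, value in headers.items()}
    value = normalised.get("dnt")
    if value == "1":
        return True
    if value == "0":
        return False
    return None


def may_track(headers: Mapping[str, str]) -> bool:
    """Assumed policy for this sketch: track unless the user opted out."""
    return dnt_preference(headers) is not True


if __name__ == "__main__":
    print(may_track({"DNT": "1"}))          # user opted out
    print(may_track({"User-Agent": "x"}))   # no preference expressed
```

A stricter policy could treat a missing header as an opt-out as well; that choice is a design decision for the site, not something the header itself defines.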

The General Data Protection Regulation is a European Union regulation on information privacy in the European Union (EU) and the European Economic Area (EEA). The GDPR is an important component of EU privacy law and human rights law, in particular Article 8(1) of the Charter of Fundamental Rights of the European Union. It also governs the transfer of personal data outside the EU and EEA. The GDPR's goals are to enhance individuals' control and rights over their personal information and to simplify the regulations for international business. It supersedes the Data Protection Directive 95/46/EC and, among other things, simplifies the terminology.

Chris Jay Hoofnagle is an American professor at the University of California, Berkeley who teaches information privacy law, computer crime law, regulation of online privacy, internet law, and seminars on new technology. Hoofnagle has contributed to the privacy literature by writing privacy law legal reviews and conducting research on the privacy preferences of Americans. Notably, his research demonstrates that most Americans prefer not to be targeted online for advertising and that, despite claims to the contrary, young people care about privacy and take actions to protect it. Hoofnagle has written scholarly articles regarding identity theft, consumer privacy, U.S. and European privacy laws, and privacy policy suggestions.

Corporate surveillance describes the practice of businesses monitoring and extracting information from their users, clients, or staff. This information may consist of online browsing history, email correspondence, phone calls, location data, and other private details. Acts of corporate surveillance frequently look to boost results, detect potential security problems, or adjust advertising strategies. These practices have been criticized for violating ethical standards and invading personal privacy. Critics and privacy activists have called for businesses to incorporate rules and transparency surrounding their monitoring methods to ensure they are not misusing their position of authority or breaching regulatory standards.

A dark pattern is "a user interface that has been carefully crafted to trick users into doing things, such as buying overpriced insurance with their purchase or signing up for recurring bills". User experience designer Harry Brignull coined the neologism on 28 July 2010 with the registration of darkpatterns.org, a "pattern library with the specific goal of naming and shaming deceptive user interfaces". In 2023 he released the book Deceptive Patterns.

The Biometric Information Privacy Act (BIPA) is a law set forth on October 3, 2008 in the U.S. state of Illinois, in an effort to regulate the collection, use, and handling of biometric identifiers and information by private entities. Notably, the Act does not apply to government entities. While Texas and Washington are the only other states that have implemented similar biometric protections, BIPA is the most stringent. The Act prescribes damages of $1,000 per violation, and $5,000 per violation if the violation is intentional or reckless. Because of this damages provision, BIPA has spawned several class action lawsuits.
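
To show why these per-violation amounts scale quickly in class actions, the arithmetic can be sketched as follows. This is only an illustration of the two figures quoted above: the function name and the example counts are hypothetical, and how violations are actually counted in a given case is a legal question this sketch does not address.

```python
# Per-violation amounts as stated in the paragraph above.
BASE_DAMAGES_USD = 1_000
INTENTIONAL_OR_RECKLESS_DAMAGES_USD = 5_000


def total_damages(base_violations: int, intentional_or_reckless_violations: int) -> int:
    """Sum damages for two hypothetical violation counts (illustrative only)."""
    if base_violations < 0 or intentional_or_reckless_violations < 0:
        raise ValueError("violation counts must be non-negative")
    return (
        base_violations * BASE_DAMAGES_USD
        + intentional_or_reckless_violations * INTENTIONAL_OR_RECKLESS_DAMAGES_USD
    )


if __name__ == "__main__":
    # Hypothetical class of 500 people with one base-rate violation each.
    print(total_damages(500, 0))  # 500000
```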

The California Consumer Privacy Act (CCPA) is a state statute intended to enhance privacy rights and consumer protection for residents of the state of California in the United States. The bill was passed by the California State Legislature and signed into law by the Governor of California, Jerry Brown, on June 28, 2018, to amend Part 4 of Division 3 of the California Civil Code. Officially called AB-375, the act was introduced by Ed Chau, member of the California State Assembly, and State Senator Robert Hertzberg.

The Personal Information Protection Law of the People's Republic of China, referred to as the Personal Information Protection Law or "PIPL", is a law intended to protect personal information rights and interests, standardize personal information handling activities, and promote the rational use of personal information. It also addresses the transfer of personal data outside of China.