In formal language theory, a context-free grammar (CFG) is a formal grammar whose production rules can be applied to a nonterminal symbol regardless of its context. In particular, in a context-free grammar, each production rule is of the form A → α, where A is a single nonterminal symbol and α is a string of terminals and/or nonterminals (α may be empty).
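As a minimal sketch of what applying rules "regardless of context" means, the following Python fragment enumerates the strings derivable from a toy grammar. The grammar (S → a S b | empty string), the dictionary encoding, and the function name `expand` are illustrative assumptions, not part of any standard formulation.

```python
from itertools import product

# Hypothetical toy grammar: S -> a S b | (empty string).
# Keys are nonterminals; each right-hand side is a tuple of symbols.
GRAMMAR = {
    "S": [("a", "S", "b"), ()],
}

def expand(symbol, depth):
    """Return the set of terminal strings derivable from `symbol`
    using at most `depth` nested rule applications."""
    if symbol not in GRAMMAR:      # terminal symbol: derives only itself
        return {symbol}
    if depth == 0:                 # no budget left to rewrite a nonterminal
        return set()
    results = set()
    for rhs in GRAMMAR[symbol]:
        # Each right-hand-side symbol is expanded independently of its
        # neighbours; this independence is what "context-free" refers to.
        parts = [expand(s, depth - 1) for s in rhs]
        for combo in product(*parts):
            results.add("".join(combo))
    return results

print(sorted(expand("S", 3), key=len))
```

With a depth budget of 3 the sketch yields the empty string, `ab`, and `aabb`; raising the budget yields longer strings of the same shape.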

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

In linguistics, transformational grammar (TG) or transformational-generative grammar (TGG) is part of the theory of generative grammar, especially of natural languages. It considers grammar to be a system of rules that generate exactly those combinations of words that form grammatical sentences in a given language and involves the use of defined operations to produce new sentences from existing ones. The method is commonly associated with American linguist Noam Chomsky.

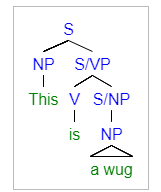

A parse tree or parsing tree or derivation tree or concrete syntax tree is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term parse tree itself is used primarily in computational linguistics; in theoretical syntax, the term syntax tree is more common.
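One common way to hold such a tree in a program is as nested tuples. The sketch below assumes a small illustrative tree for "the dog barks"; the category labels and helper names (`leaves`, `bracketed`) are hypothetical choices, not a fixed convention.

```python
# A parse tree as nested tuples: (label, child, child, ...).
# Leaves are plain strings. The sentence and labels are illustrative.
TREE = ("S",
        ("NP", ("Det", "the"), ("N", "dog")),
        ("VP", ("V", "barks")))

def leaves(node):
    """Return the words of the tree in left-to-right order.
    Because the tree is ordered, this recovers the original string."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words

def bracketed(node):
    """Render the tree in labelled bracket notation."""
    if isinstance(node, str):
        return node
    inner = " ".join(bracketed(child) for child in node[1:])
    return "[" + node[0] + " " + inner + "]"

print(leaves(TREE))
print(bracketed(TREE))
```

The first call recovers the word sequence and the second renders the structure as `[S [NP [Det the] [N dog]] [VP [V barks]]]`.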

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way which makes it attractive for natural language processing.

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term parsing comes from Latin pars (orationis), meaning part.
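To illustrate parsing against a formal grammar, here is a small recursive-descent sketch for an assumed two-rule grammar: `expr → term ('+' term)*` and `term → NUMBER | '(' expr ')'`. The grammar, the tokenizer, and all function names are hypothetical and chosen only for illustration; recursive descent is one of many parsing techniques.

```python
import re

def tokenize(text):
    # Keeps digit runs, '+', '(' and ')'. Any other character is
    # silently skipped, which is acceptable only for a sketch.
    return re.findall(r"\d+|[+()]", text)

def parse(tokens):
    """Parse a full token list and return a nested-tuple tree."""
    tree, pos = parse_expr(tokens, 0)
    if pos != len(tokens):
        raise SyntaxError(f"unexpected token at position {pos}")
    return tree

def parse_expr(tokens, pos):
    # expr -> term ('+' term)*, built left-associatively
    left, pos = parse_term(tokens, pos)
    while pos < len(tokens) and tokens[pos] == "+":
        right, pos = parse_term(tokens, pos + 1)
        left = ("+", left, right)
    return left, pos

def parse_term(tokens, pos):
    # term -> NUMBER | '(' expr ')'
    if pos >= len(tokens):
        raise SyntaxError("unexpected end of input")
    tok = tokens[pos]
    if tok.isdigit():
        return int(tok), pos + 1
    if tok == "(":
        inner, pos = parse_expr(tokens, pos + 1)
        if pos >= len(tokens) or tokens[pos] != ")":
            raise SyntaxError("expected ')'")
        return inner, pos + 1
    raise SyntaxError(f"unexpected token {tok!r}")

print(parse(tokenize("1+(2+3)")))
```

Each grammar rule corresponds to one function, so the call structure during parsing mirrors the shape of the resulting tree.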

Generative grammar, or generativism, is a linguistic theory that regards linguistics as the study of a hypothesised innate grammatical structure. It is a biological or biologistic modification of earlier structuralist theories of linguistics, deriving ultimately from glossematics. Generative grammar considers grammar as a system of rules that generates exactly those combinations of words that form grammatical sentences in a given language. It is a system of explicit rules that may apply repeatedly to generate an indefinite number of sentences which can be as long as one wants them to be. The difference from structural and functional models is that the object is base-generated within the verb phrase in generative grammar. This purportedly cognitive structure is thought of as being a part of a universal grammar, a syntactic structure which is caused by a genetic mutation in humans.

Geoffrey Keith Pullum is a British and American linguist specialising in the study of English. Pullum has published over 300 articles and books on various topics in linguistics, including phonology, morphology, semantics, pragmatics, computational linguistics, and philosophy of language. He is Professor Emeritus of General Linguistics at the University of Edinburgh.

Ivan Andrew Sag was an American linguist and cognitive scientist. He did research in areas of syntax and semantics as well as work in computational linguistics.

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue. Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as constituency grammars. The defining trait of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars.

In grammar, a complement is a word, phrase, or clause that is necessary to complete the meaning of a given expression. Complements are often also arguments.

Syntactic Structures is an important work in linguistics by American linguist Noam Chomsky, originally published in 1957. A short monograph of about a hundred pages, it is recognized as one of the most significant and influential linguistic studies of the 20th century. It contains the now-famous sentence "Colorless green ideas sleep furiously", which Chomsky offered as an example of a grammatically correct sentence that has no discernible meaning, thus arguing for the independence of syntax from semantics.

Gerald James Michael Gazdar, FBA is a British linguist and computer scientist.

ID/LP grammars are a subset of phrase structure grammars, differentiated from other formal grammars by distinguishing between immediate dominance (ID) and linear precedence (LP) constraints. Whereas traditional phrase structure rules incorporate dominance and precedence into a single rule, ID/LP grammars maintain separate rule sets which need not be processed simultaneously. ID/LP grammars are used in computational linguistics.
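The separation of dominance from precedence can be sketched as follows. The rule `VP → {V, NP, PP}`, the two LP statements, and the names `ID_RULES`, `LP_RULES`, and `linearizations` are illustrative assumptions; the sketch also assumes the daughters of a rule are pairwise distinct categories.

```python
from itertools import permutations

# Immediate dominance: a mother and its unordered daughters.
ID_RULES = {"VP": [("V", "NP", "PP")]}

# Linear precedence: (x, y) means x must precede y wherever both
# occur as sisters. These statements apply across all ID rules.
LP_RULES = {("V", "NP"), ("V", "PP")}

def linearizations(mother):
    """Return every daughter order licensed by the ID rules for
    `mother` that violates no LP statement."""
    orders = []
    for daughters in ID_RULES[mother]:
        for order in permutations(daughters):
            ok = all(order.index(x) < order.index(y)
                     for x, y in LP_RULES
                     if x in order and y in order)
            if ok:
                orders.append(order)
    return orders

print(linearizations("VP"))
```

Of the six possible orders of the three daughters, only the two that place `V` first survive, which a traditional phrase structure grammar would have to state as two separate ordered rules.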

In linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data.

In linguistics, subordination is a principle of the hierarchical organization of linguistic units. While the principle is applicable in semantics, morphology, and phonology, most work in linguistics employs the term "subordination" in the context of syntax, and that is the context in which it is considered here. The syntactic units of sentences are often either subordinate or coordinate to each other. Hence an understanding of subordination is promoted by an understanding of coordination, and vice versa.

In linguistics, arc pair grammar (APG) is a theory of syntax that aims to formalize and expand upon relational grammar. It primarily builds upon the relational grammar concept of an arc, but also makes use of more formally stated ideas from model theory and graph theory. It was developed in the late 1970s by David E. Johnson and Paul Postal, and formalized in 1980 in the eponymous book Arc Pair Grammar.

In computational linguistics, the term mildly context-sensitive grammar formalisms refers to several grammar formalisms that have been developed in an effort to provide adequate descriptions of the syntactic structure of natural language.

Model-theoretic grammars, also known as constraint-based grammars, contrast with generative grammars in the way they define sets of sentences: they state constraints on syntactic structure rather than providing operations for generating syntactic objects. A generative grammar provides a set of operations such as rewriting, insertion, deletion, movement, or combination, and is interpreted as a definition of the set of all and only the objects that these operations are capable of producing through iterative application. A model-theoretic grammar simply states a set of conditions that an object must meet, and can be regarded as defining the set of all and only the structures of a certain sort that satisfy all of the constraints. The approach applies the mathematical techniques of model theory to the task of syntactic description: a grammar is a theory in the logician's sense and the well-formed structures are the models that satisfy the theory.
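The constraint-based view can be sketched in Python by treating a grammar as a list of predicates over candidate structures. The two constraints, the nested-tuple tree encoding, and every name below are hypothetical simplifications; actual model-theoretic frameworks state their constraints in a logical language rather than as program code.

```python
def subtrees(node):
    """Yield every non-leaf node of a nested-tuple tree."""
    if isinstance(node, str):
        return
    yield node
    for child in node[1:]:
        yield from subtrees(child)

def label(node):
    """Category label of a node; a leaf word acts as its own label."""
    return node if isinstance(node, str) else node[0]

# Each constraint is a condition that a whole structure must meet.
def s_has_np_vp(tree):
    # Every S node has exactly the daughters NP, VP, in that order.
    return all([label(c) for c in n[1:]] == ["NP", "VP"]
               for n in subtrees(tree) if n[0] == "S")

def vp_starts_with_v(tree):
    # Every VP node has V as its first daughter
    # (assumes each VP has at least one daughter).
    return all(label(n[1]) == "V"
               for n in subtrees(tree) if n[0] == "VP")

CONSTRAINTS = [s_has_np_vp, vp_starts_with_v]

def satisfies(tree):
    """A structure is well formed iff it meets every constraint."""
    return all(constraint(tree) for constraint in CONSTRAINTS)

good = ("S", ("NP", ("N", "dogs")), ("VP", ("V", "bark")))
bad = ("S", ("VP", ("V", "bark")), ("NP", ("N", "dogs")))
print(satisfies(good), satisfies(bad))
```

Nothing here generates trees: the code only checks whether a given structure is a model of the constraint set, which is the contrast with the operation-based view described above.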

Syntactic parsing is the automatic analysis of the syntactic structure of natural language, especially syntactic relations and the labelling of constituent spans. It is motivated by the problem of structural ambiguity in natural language: a sentence can be assigned multiple grammatical parses, so some kind of knowledge beyond computational grammar rules is needed to tell which parse is intended. Syntactic parsing is one of the important tasks in computational linguistics and natural language processing, and has been a subject of research since the mid-20th century with the advent of computers.
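Structural ambiguity can be made concrete by counting parses with a CYK-style chart. The sketch below assumes a toy grammar with binary rules only and a six-word lexicon, both invented for illustration; the names `BINARY`, `LEXICON`, and `count_parses` are likewise hypothetical.

```python
from collections import defaultdict

# Toy grammar (an assumption): each rule is (parent, left, right).
BINARY = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # PP attaches to the verb phrase
    ("NP", "NP", "PP"),   # PP attaches to the noun phrase
    ("NP", "Det", "N"),
    ("PP", "P", "NP"),
]
LEXICON = {
    "I": ["NP"], "saw": ["V"], "the": ["Det"],
    "man": ["N"], "telescope": ["N"], "with": ["P"],
}

def count_parses(words, start="S"):
    """Count the distinct parse trees rooted in `start` that the toy
    grammar assigns to `words`, using a CYK-style chart."""
    n = len(words)
    chart = defaultdict(int)   # (i, j, category) -> number of trees
    for i, word in enumerate(words):
        for cat in LEXICON.get(word, []):
            chart[i, i + 1, cat] += 1
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):          # split point
                for parent, left, right in BINARY:
                    chart[i, j, parent] += (chart[i, k, left]
                                            * chart[k, j, right])
    return chart[0, n, start]

print(count_parses("I saw the man with the telescope".split()))
```

The grammar assigns this sentence two parses, one for each attachment of "with the telescope". The rules alone cannot say which reading is intended, which is the gap that additional knowledge such as statistical preferences has to fill.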