A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing.
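
As a rough illustration of what "systematically browses" can mean in practice, the sketch below performs a breadth-first traversal from a seed URL. It is not how any particular search engine works: the `FAKE_WEB` dictionary, the `crawl` function, and the `max_pages` limit are all hypothetical names introduced here, and the "web" is an in-memory link graph so that the example runs without network access.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def crawl(seed, get_links, max_pages=100):
    """Visit pages breadth-first from `seed`, never visiting a URL twice."""
    frontier = deque([seed])  # URLs discovered but not yet visited
    seen = {seed}             # URLs already queued, to avoid revisits and loops
    order = []                # URLs in the order they were visited
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


visited = crawl("https://example.com/", lambda url: FAKE_WEB.get(url, []))
print(visited)
```

A real crawler would replace the lambda with a function that downloads the page and extracts its links, and would usually add politeness delays and robots.txt checks.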

robots.txt is the filename used for implementing the Robots Exclusion Protocol, a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the website they are allowed to visit.
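
A crawler can consult these rules before fetching a page. The sketch below uses Python's standard `urllib.robotparser` module against a made-up robots.txt body; the `ROBOTS_TXT` content, the `ExampleBot` user agent, and the `example.com` URLs are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: everything is allowed except /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_body: str) -> RobotFileParser:
    """Parse a robots.txt body that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_body.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
allowed_public = parser.can_fetch("ExampleBot", "https://example.com/index.html")
allowed_private = parser.can_fetch("ExampleBot", "https://example.com/private/data.html")
print(allowed_public, allowed_private)
```

In a live crawler the parser would typically be pointed at the site's own file with `set_url()` and `read()` rather than fed an inline string.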

Distributed web crawling is a distributed computing technique whereby Internet search engines employ many computers to index the Internet via web crawling. Such systems may allow users to volunteer their own computing and bandwidth resources for crawling web pages. Spreading the load of these tasks across many computers avoids the cost of maintaining large computing clusters.
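
One common way to spread crawl work across machines is to assign each URL to a worker by hashing its hostname, so that all pages of one site go to the same worker. This is only one possible policy, not a description of any specific system; `assign_worker` and `partition` are hypothetical helper names.

```python
import hashlib
from urllib.parse import urlsplit


def assign_worker(url: str, num_workers: int) -> int:
    """Map a URL to a worker index using a stable hash of its hostname."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then reduce modulo
    # the worker count. Unlike built-in hash(), this is stable across runs.
    return int.from_bytes(digest[:8], "big") % num_workers


def partition(urls, num_workers):
    """Group URLs into one list per worker."""
    buckets = [[] for _ in range(num_workers)]
    for url in urls:
        buckets[assign_worker(url, num_workers)].append(url)
    return buckets


urls = [
    "https://example.com/a",
    "https://example.com/b",
    "https://example.org/",
    "https://example.net/page",
]
for index, bucket in enumerate(partition(urls, 3)):
    print(index, bucket)
```

Keeping a whole host on one worker makes per-site rate limiting simple, at the cost of uneven load when a few hosts are much larger than the rest.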

Googlebot is the web crawler software used by Google that collects documents from the web to build a searchable index for the Google Search engine. The name refers to two different types of web crawlers: a desktop crawler and a mobile crawler.

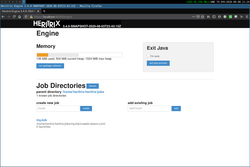

Apache Nutch is a highly extensible and scalable open source web crawler software project.

The deep web, invisible web, or hidden web are parts of the World Wide Web whose contents are not indexed by standard web search-engine programs. This is in contrast to the "surface web", which is accessible to anyone using the Internet. Computer scientist Michael K. Bergman is credited with inventing the term in 2001 as a search-indexing term.

cURL is a computer software project providing a library (libcurl) and command-line tool (curl) for transferring data using various network protocols. The name stands for "Client for URL".

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. Web scraping software may directly access the World Wide Web using the Hypertext Transfer Protocol or a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis.
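
The "gather and copy into a spreadsheet" step can be sketched with the standard library alone. The example below pulls the cells of an HTML table into a list of rows; `TableScraper`, `scrape_rows`, and the `SAMPLE_HTML` markup are hypothetical, and real pages usually need a more forgiving parser.

```python
from html.parser import HTMLParser


class TableScraper(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []         # completed rows
        self._row = None       # cells of the row being read, or None
        self._in_cell = False  # True while inside a <td> or <th>
        self._cell = []        # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._cell = []

    def handle_data(self, data):
        if self._in_cell:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._in_cell:
            self._row.append("".join(self._cell).strip())
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


SAMPLE_HTML = """
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""


def scrape_rows(html: str):
    scraper = TableScraper()
    scraper.feed(html)
    scraper.close()
    return scraper.rows


rows = scrape_rows(SAMPLE_HTML)
print(rows)
```

The resulting rows could then be written out with the `csv` module or inserted into a database.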

Sitemaps is a protocol in XML format meant for a webmaster to inform search engines about URLs on a website that are available for web crawling. It allows webmasters to include additional information about each URL: when it was last updated, how often it changes, and how important it is in relation to other URLs of the site. This allows search engines to crawl the site more efficiently and to find URLs that may be isolated from the rest of the site's content. The Sitemaps protocol is a URL inclusion protocol and complements robots.txt, a URL exclusion protocol.
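
To make the per-URL fields concrete, the sketch below parses a small sitemap with the standard `xml.etree.ElementTree` module. The `SAMPLE_SITEMAP` document and the `parse_sitemap` helper are made up for illustration; the element names (`loc`, `lastmod`, `changefreq`, `priority`) and the namespace are those defined by the Sitemaps protocol.

```python
import xml.etree.ElementTree as ET

# Namespace used by Sitemaps protocol documents.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap: the second entry carries only the required <loc>.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2024-01-15</lastmod>
    <changefreq>daily</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://example.com/about</loc>
  </url>
</urlset>
"""


def parse_sitemap(xml_text: str):
    """Return one dict per <url> entry; optional fields default to None."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        entries.append({
            "loc": url.findtext("sm:loc", default=None, namespaces=NS),
            "lastmod": url.findtext("sm:lastmod", default=None, namespaces=NS),
            "changefreq": url.findtext("sm:changefreq", default=None, namespaces=NS),
            "priority": url.findtext("sm:priority", default=None, namespaces=NS),
        })
    return entries


entries = parse_sitemap(SAMPLE_SITEMAP)
for entry in entries:
    print(entry)
```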

A search engine is a software system that provides hyperlinks to web pages and other relevant information on the Web in response to a user's query. The user inputs a query within a web browser or a mobile app, and the search results are often a list of hyperlinks, accompanied by textual summaries and images. Users also have the option of limiting the search to a specific type of results, such as images, videos, or news.

Web archiving is the process of collecting portions of the World Wide Web to ensure the information is preserved in an archive for future researchers, historians, and the public. Web archivists typically employ web crawlers for automated capture due to the massive size and amount of information on the Web. The largest web archiving organization based on a bulk crawling approach is the Wayback Machine, which strives to maintain an archive of the entire Web.

Libarc is a C++ library for reading the contents of gzip-compressed ARC files. These ARC files are generated by the Internet Archive's Heritrix web crawler.

PADICAT, an acronym for Patrimoni Digital de Catalunya (Catalan for "Digital Heritage of Catalonia"), is the Web Archive of Catalonia.

The Wayback Machine is a digital archive of the World Wide Web founded by the Internet Archive, an American nonprofit organization based in San Francisco, California. Created in 1996 and launched to the public in 2001, it allows the user to go "back in time" to see how websites looked in the past. Its founders, Brewster Kahle and Bruce Gilliat, developed the Wayback Machine to provide "universal access to all knowledge" by preserving archived copies of defunct web pages.

Webarchiv is a digital archive of important Czech web resources, which are collected with the aim of their long-term preservation.

The International Internet Preservation Consortium is an international organization of libraries and other organizations established to coordinate efforts to preserve internet content for the future. It was founded in July 2003 by 12 participating institutions, and had grown to 35 members by January 2010. As of January 2022, there are 52 members.

The WARC archive format specifies a method for combining multiple digital resources into an aggregate archive file together with related information. The WARC format is a revision of the Internet Archive's ARC_IA File Format that has traditionally been used to store "web crawls" as sequences of content blocks harvested from the World Wide Web. The WARC format generalizes the older format to better support the harvesting, access, and exchange needs of archiving organizations. Besides the primary content currently recorded, the revision accommodates related secondary content, such as assigned metadata, abbreviated duplicate detection events, and later-date transformations. The WARC format is inspired by HTTP/1.0 streams, with a similar header and the use of CRLFs as delimiters, making it very conducive to crawler implementations.
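
The HTTP-like layout can be seen in a short parsing sketch: a version line, `Name: value` header lines separated by CRLF, a blank line, and then a content block whose size is given by `Content-Length`. The `SAMPLE_RECORD` bytes and the `parse_warc_record` function are hypothetical, and the sketch assumes a single uncompressed record with no folded header lines; production code would normally use a dedicated WARC library.

```python
def parse_warc_record(data: bytes):
    """Split one uncompressed WARC record into (version, headers, block)."""
    head, separator, rest = data.partition(b"\r\n\r\n")
    if not separator:
        raise ValueError("no blank line terminating the WARC header")
    lines = head.split(b"\r\n")
    version = lines[0].decode("utf-8")
    headers = {}
    for line in lines[1:]:
        # Split on the first colon only: values such as dates and URIs
        # contain colons of their own.
        name, _, value = line.decode("utf-8").partition(":")
        headers[name.strip()] = value.strip()
    length = int(headers["Content-Length"])
    block = rest[:length]
    return version, headers, block


# Hypothetical record holding a five-byte plain-text resource.
SAMPLE_RECORD = (
    b"WARC/1.0\r\n"
    b"WARC-Type: resource\r\n"
    b"WARC-Target-URI: https://example.com/\r\n"
    b"WARC-Date: 2024-01-15T00:00:00Z\r\n"
    b"WARC-Record-ID: <urn:uuid:00000000-0000-0000-0000-000000000001>\r\n"
    b"Content-Type: text/plain\r\n"
    b"Content-Length: 5\r\n"
    b"\r\n"
    b"hello"
    b"\r\n\r\n"
)

version, headers, block = parse_warc_record(SAMPLE_RECORD)
print(version, headers["WARC-Type"], headers["WARC-Target-URI"], block)
```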

The Internet Memory Foundation was a non-profit foundation whose purpose was archiving content of the World Wide Web. It supported projects and research on the preservation and protection of digital media content in various forms, with the aim of building a digital library of cultural content. It has been defunct since August 2018.

Common Crawl is a nonprofit 501(c)(3) organization that crawls the web and freely provides its archives and datasets to the public. Common Crawl's web archive consists of petabytes of data collected since 2008. It generally completes a crawl every month.

Norconex Web Crawler is free and open-source web crawling and web scraping software written in Java and released under an Apache License. It can export data to many repositories, such as Apache Solr, Elasticsearch, Microsoft Azure Cognitive Search, and Amazon CloudSearch.