Related Research Articles

A greedy algorithm is any algorithm that follows the problem-solving heuristic of making the locally optimal choice at each stage. In many problems, a greedy strategy does not produce an optimal solution, but a greedy heuristic can yield locally optimal solutions that approximate a globally optimal solution in a reasonable amount of time.

A* is a graph traversal and path search algorithm, which is used in many fields of computer science due to its completeness, optimality, and optimal efficiency. One major practical drawback is its space complexity, as it stores all generated nodes in memory. Thus, in practical travel-routing systems, it is generally outperformed by algorithms that can pre-process the graph to attain better performance, as well as memory-bounded approaches; however, A* is still the best solution in many cases.

Best-first search is a class of search algorithms, which explores a graph by expanding the most promising node chosen according to a specified rule.

In computer science and operations research, the ant colony optimization algorithm (ACO) is a probabilistic technique for solving computational problems which can be reduced to finding good paths through graphs. Artificial ants stand for multi-agent methods inspired by the behavior of real ants. The pheromone-based communication of biological ants is often the predominant paradigm used. Combinations of artificial ants and local search algorithms have become a method of choice for numerous optimization tasks involving some sort of graph, e.g., vehicle routing and internet routing.

In computer science, beam search is a heuristic search algorithm that explores a graph by expanding the most promising node in a limited set. Beam search is an optimization of best-first search that reduces its memory requirements. Best-first search is a graph search which orders all partial solutions (states) according to some heuristic. But in beam search, only a predetermined number of best partial solutions are kept as candidates. It is thus a greedy algorithm.

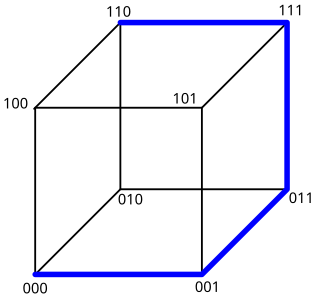

The snake-in-the-box problem in graph theory and computer science deals with finding a certain kind of path along the edges of a hypercube. This path starts at one corner and travels along the edges to as many corners as it can reach. After it gets to a new corner, the previous corner and all of its neighbors must be marked as unusable. The path should never travel to a corner which has been marked unusable.

In computer science, the min-conflicts algorithm is a search algorithm or heuristic method to solve constraint satisfaction problems.

Iterative deepening A* (IDA*) is a graph traversal and path search algorithm that can find the shortest path between a designated start node and any member of a set of goal nodes in a weighted graph. It is a variant of iterative deepening depth-first search that borrows the idea to use a heuristic function to evaluate the remaining cost to get to the goal from the A* search algorithm. Since it is a depth-first search algorithm, its memory usage is lower than in A*, but unlike ordinary iterative deepening search, it concentrates on exploring the most promising nodes and thus does not go to the same depth everywhere in the search tree. Unlike A*, IDA* does not utilize dynamic programming and therefore often ends up exploring the same nodes many times.

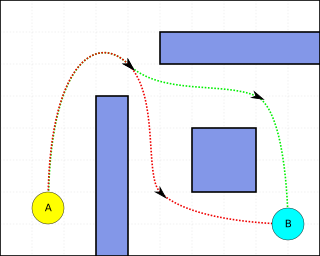

Pathfinding or pathing is the plotting, by a computer application, of the shortest route between two points. It is a more practical variant on solving mazes. This field of research is based heavily on Dijkstra's algorithm for finding the shortest path on a weighted graph.

Bidirectional search is a graph search algorithm that finds a shortest path from an initial vertex to a goal vertex in a directed graph. It runs two simultaneous searches: one forward from the initial state, and one backward from the goal, stopping when the two meet. The reason for this approach is that in many cases it is faster: for instance, in a simplified model of search problem complexity in which both searches expand a tree with branching factor b, and the distance from start to goal is d, each of the two searches has complexity O(bd/2) (in Big O notation), and the sum of these two search times is much less than the O(bd) complexity that would result from a single search from the beginning to the goal.

A rapidly exploring random tree (RRT) is an algorithm designed to efficiently search nonconvex, high-dimensional spaces by randomly building a space-filling tree. The tree is constructed incrementally from samples drawn randomly from the search space and is inherently biased to grow towards large unsearched areas of the problem. RRTs were developed by Steven M. LaValle and James J. Kuffner Jr. They easily handle problems with obstacles and differential constraints and have been widely used in autonomous robotic motion planning.

In mathematical optimization and computer science, heuristic is a technique designed for solving a problem more quickly when classic methods are too slow for finding an approximate solution, or when classic methods fail to find any exact solution. This is achieved by trading optimality, completeness, accuracy, or precision for speed. In a way, it can be considered a shortcut.

D* is any one of the following three related incremental search algorithms:

A hyper-heuristic is a heuristic search method that seeks to automate, often by the incorporation of machine learning techniques, the process of selecting, combining, generating or adapting several simpler heuristics to efficiently solve computational search problems. One of the motivations for studying hyper-heuristics is to build systems which can handle classes of problems rather than solving just one problem.

A maze-solving algorithm is an automated method for solving a maze. The random mouse, wall follower, Pledge, and Trémaux's algorithms are designed to be used inside the maze by a traveler with no prior knowledge of the maze, whereas the dead-end filling and shortest path algorithms are designed to be used by a person or computer program that can see the whole maze at once.

Any-angle path planning algorithms are pathfinding algorithms that search for a Euclidean shortest path between two points on a grid map while allowing the turns in the path to have any angle. The result is a path that cuts directly through open areas and has relatively few turns. More traditional pathfinding algorithms such as A* either lack in performance or produce jagged, indirect paths.

Sven Koenig is a full professor in computer science at the University of Southern California. He received an M.S. degree in computer science from the University of California at Berkeley in 1991 and a Ph.D. in computer science from Carnegie Mellon University in 1997, advised by Reid Simmons.

In computer science, anytime A* is a family of variants of the A* search algorithm. Like other anytime algorithms, it has a flexible time cost, can return a valid solution to a pathfinding or graph traversal problem even if it is interrupted before it ends, by generating a fast, non-optimal solution before progressively optimizing it. This ability to quickly generate solutions has made it attractive to Search-base sites and AI designs.

LPA* or Lifelong Planning A* is an incremental heuristic search algorithm based on A*. It was first described by Sven Koenig and Maxim Likhachev in 2001.

The problem of Multi-Agent Path Finding (MAPF) is an instance of multi-agent planning and consists in the computation of collision-free paths for a group of agents from their location to an assigned target. It is an optimization problem, since the aim is to find those paths that optimize a given objective function, usually defined as the number of time steps until all agents reach their goal cells. MAPF is the multi-agent generalization of the pathfinding problem, and it is closely related to the shortest path problem in the context of graph theory.

References

- ↑ S. Koenig, M. Likhachev, Y. Liu and D. Furcy. Incremental Heuristic Search in Artificial Intelligence. Artificial Intelligence Magazine, 25(2), 99-112, 2004.

- ↑ N. Deo and C. Pang. Shortest-path algorithms: Taxonomy and Annotation. Networks 14, 275–323, 1984.

- ↑ P. Hart, N. Nilsson and B. Raphael, A Formal Basis for the Heuristic Determination of Minimum Cost Paths, IEEE Trans. Syst. Science and Cybernetics, SSC-4(2), 100-107, 1968.

- ↑ N. Deo and C. Pang. Shortest-path algorithms: Taxonomy and Annotation. Networks 14, 275–323, 1984.

- ↑ X. Sun and S. Koenig. The Fringe-Saving A* Search Algorithm - A Feasibility Study. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), 2391-2397, 2007.

- ↑ X. Sun, S. Koenig and W. Yeoh. Generalized Adaptive A*. In Proceedings of the International Joint Conference on Autonomous Agents and Multiagent Systems (AAMAS), 469-476, 2008.

- ↑ S. Koenig, M. Likhachev and D. Furcy. Lifelong Planning A*. Artificial Intelligence, 155, (1-2), 93-146, 2004.

- ↑ 6. A. Stentz. The Focussed D* Algorithm for Real-Time Replanning. Proceedings of the International Joint Conference on Artificial Intelligence, 1652–1659, 1995.

- ↑ S. Koenig and M. Likhachev. Fast Replanning for Navigation in Unknown Terrain. Transactions on Robotics, 21, (3), 354-363, 2005.