In probability theory, independent increments are a property of stochastic processes and random measures. In most cases, a process or random measure has independent increments by definition, which underlines the importance of the property. Stochastic processes that possess independent increments by definition include the Wiener process, all Lévy processes, all additive processes [1] and the Poisson point process.

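Let $(X_t)_{t \in T}$ be a stochastic process. In most cases, $T = \mathbb{N}$ or $T = \mathbb{R}^+$. Then the stochastic process has independent increments if and only if for every $m \in \mathbb{N}$ and any choice $t_0, t_1, t_2, \dots, t_m \in T$ with

$$t_0 < t_1 < t_2 < \dots < t_m$$

the random variables

$$X_{t_1} - X_{t_0},\quad X_{t_2} - X_{t_1},\quad \dots,\quad X_{t_m} - X_{t_{m-1}}$$

are stochastically independent.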
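The definition can be probed numerically. The following is a minimal sketch, assuming a simple symmetric random walk as the process (the walk, the chosen time points and all function names are illustrative assumptions, not taken from the text). It estimates the sample correlation of increments over disjoint and over overlapping time intervals. A correlation near zero is only a necessary condition for independence, so this is a sanity check rather than a proof.

```python
import random


def walk_path(n_steps, rng):
    """Return one path X_0, ..., X_{n_steps} of a simple symmetric random walk."""
    path = [0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.choice((-1, 1)))
    return path


def correlation(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / n
    var_y = sum((y - mean_y) ** 2 for y in ys) / n
    return cov / (var_x * var_y) ** 0.5


def estimate(n_paths=20000, seed=0):
    rng = random.Random(seed)
    disjoint_a, disjoint_b, overlap_a = [], [], []
    for _ in range(n_paths):
        x = walk_path(30, rng)
        disjoint_a.append(x[10] - x[0])   # increment over (0, 10]
        disjoint_b.append(x[30] - x[10])  # increment over (10, 30], disjoint from (0, 10]
        overlap_a.append(x[20] - x[0])    # increment over (0, 20], overlaps (10, 30]
    return (correlation(disjoint_a, disjoint_b),
            correlation(overlap_a, disjoint_b))


disjoint_corr, overlap_corr = estimate()
print("disjoint intervals:   ", round(disjoint_corr, 3))
print("overlapping intervals:", round(overlap_corr, 3))
```

For the overlapping pair, the shared steps 11 to 20 give a theoretical correlation of 10/20 = 0.5, whereas the increments over disjoint intervals should be close to uncorrelated.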

A random measure $\xi$ has independent increments if and only if the random variables $\xi(B_1), \xi(B_2), \dots, \xi(B_m)$ are stochastically independent for every selection of pairwise disjoint measurable sets $B_1, B_2, \dots, B_m$ and every $m \in \mathbb{N}$. [3]
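To illustrate the role of disjoint sets, here is a hedged sketch that compares two point processes on the unit interval: a homogeneous Poisson point process, and a process with a fixed number of uniformly distributed points (a binomial process). The rate, the number of points, the two half-intervals and all function names are assumptions chosen for the example. Counts of the Poisson process in disjoint sets should be nearly uncorrelated, while the fixed-size process has counts that determine each other.

```python
import random


def poisson_points(rate, rng):
    """Points of a homogeneous Poisson process on [0, 1), built from exponential gaps."""
    points = []
    t = rng.expovariate(rate)
    while t < 1.0:
        points.append(t)
        t += rng.expovariate(rate)
    return points


def binomial_points(n, rng):
    """Exactly n independent uniform points on [0, 1)."""
    return [rng.random() for _ in range(n)]


def count_in(points, lo, hi):
    """Number of points in the half-open interval [lo, hi)."""
    return sum(lo <= p < hi for p in points)


def correlation(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / n
    var_y = sum((y - mean_y) ** 2 for y in ys) / n
    return cov / (var_x * var_y) ** 0.5


def count_correlation(sampler, n_samples, rng):
    """Correlation between the counts in the disjoint sets [0, 0.5) and [0.5, 1)."""
    left, right = [], []
    for _ in range(n_samples):
        pts = sampler(rng)
        left.append(count_in(pts, 0.0, 0.5))
        right.append(count_in(pts, 0.5, 1.0))
    return correlation(left, right)


rng = random.Random(1)
poisson_corr = count_correlation(lambda r: poisson_points(10.0, r), 20000, rng)
binomial_corr = count_correlation(lambda r: binomial_points(10, r), 20000, rng)
print("Poisson process: ", round(poisson_corr, 3))
print("binomial process:", round(binomial_corr, 3))
```

In the fixed-size case the two counts always sum to the same total, so they are perfectly negatively correlated and the process cannot have independent increments.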

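Let $\xi$ be a random measure on $S \times T$ and define for every bounded measurable set $B \subseteq S$ the random measure $\xi_B$ on $T$ as

$$\xi_B(\cdot) := \xi(B \times \cdot).$$

Then $\xi$ is called a random measure with independent S-increments if, for all pairwise disjoint bounded sets $B_1, B_2, \dots, B_n$ and all $n \in \mathbb{N}$, the random measures $\xi_{B_1}, \xi_{B_2}, \dots, \xi_{B_n}$ are independent. [4]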
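As a worked illustration, assume that $\xi$ is a Poisson point process on $S \times T$ (this choice is an assumption made for the example and is not part of the definition). For pairwise disjoint bounded sets $B_1, \dots, B_n \subseteq S$, the restricted measures $\xi_{B_i}(\cdot) = \xi(B_i \times \cdot)$ only evaluate $\xi$ on sets that are disjoint across different indices, so the independence of Poisson counts on disjoint sets carries over:

```latex
\begin{aligned}
&\xi_{B_i}(C) = \xi(B_i \times C), \qquad i = 1, \dots, n, \\
&(B_i \times C) \cap (B_j \times C') = \emptyset
  \quad \text{for } i \neq j \text{ and all measurable } C, C' \subseteq T, \\
&\Longrightarrow\quad \xi_{B_1}, \dots, \xi_{B_n} \text{ are independent.}
\end{aligned}
```

Under this assumption, a Poisson point process on a product space is therefore an example of a random measure with independent S-increments.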

Independent increments are a basic property of many stochastic processes and are often incorporated into their definition. The notions of independent increments and independent S-increments of random measures play an important role in the characterization of Poisson point processes and of infinite divisibility.

In mathematics, a measurable space or Borel space is a basic object in measure theory. It consists of a set and a σ-algebra, which defines the subsets that will be measured.

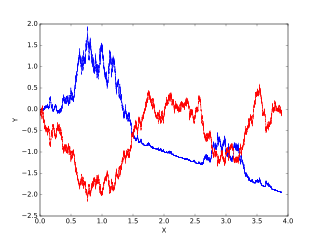

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables. Historically, the random variables were associated with or indexed by a set of numbers, usually viewed as points in time, giving the interpretation of a stochastic process representing numerical values of some system randomly changing over time, such as the growth of a bacterial population, an electrical current fluctuating due to thermal noise, or the movement of a gas molecule. Stochastic processes are widely used as mathematical models of systems and phenomena that appear to vary in a random manner. They have applications in many disciplines including sciences such as biology, chemistry, ecology, neuroscience, and physics as well as technology and engineering fields such as image processing, signal processing, information theory, computer science, cryptography and telecommunications. Furthermore, seemingly random changes in financial markets have motivated the extensive use of stochastic processes in finance.

In probability theory and statistics, a Gaussian process is a stochastic process, such that every finite collection of those random variables has a multivariate normal distribution, i.e. every finite linear combination of them is normally distributed. The distribution of a Gaussian process is the joint distribution of all those random variables, and as such, it is a distribution over functions with a continuous domain, e.g. time or space.

In the field of mathematical optimization, stochastic programming is a framework for modeling optimization problems that involve uncertainty. Whereas deterministic optimization problems are formulated with known parameters, real world problems almost invariably include some unknown parameters. When the parameters are known only within certain bounds, one approach to tackling such problems is called robust optimization. Here the goal is to find a solution which is feasible for all such data and optimal in some sense. Stochastic programming models are similar in style but take advantage of the fact that probability distributions governing the data are known or can be estimated. The goal here is to find some policy that is feasible for all the possible data instances and maximizes the expectation of some function of the decisions and the random variables. More generally, such models are formulated, solved analytically or numerically, and analyzed in order to provide useful information to a decision-maker.

In probability theory, a Lévy process, named after the French mathematician Paul Lévy, is a stochastic process with independent, stationary increments: it represents the motion of a point whose successive displacements are random, in which displacements in pairwise disjoint time intervals are independent, and displacements in different time intervals of the same length have identical probability distributions. A Lévy process may thus be viewed as the continuous-time analog of a random walk.

In mathematics, mixing is an abstract concept originating from physics: it attempts to describe the irreversible thermodynamic process of mixing in the everyday world, such as mixing paint or mixing drinks.

Itô calculus, named after Kiyoshi Itô, extends the methods of calculus to stochastic processes such as Brownian motion. It has important applications in mathematical finance and stochastic differential equations.

In statistics and probability theory, a point process or point field is a collection of mathematical points randomly located on some underlying mathematical space such as the real line, the Cartesian plane, or more abstract spaces. Point processes can be used as mathematical models of phenomena or objects representable as points in some type of space.

In mathematics, the Bernoulli scheme or Bernoulli shift is a generalization of the Bernoulli process to more than two possible outcomes. Bernoulli schemes are important in the study of dynamical systems, as most such systems exhibit a repellor that is the product of the Cantor set and a smooth manifold, and the dynamics on the Cantor set are isomorphic to that of the Bernoulli shift. This is essentially the Markov partition. The term shift is in reference to the shift operator, which may be used to study Bernoulli schemes. The Ornstein isomorphism theorem shows that Bernoulli shifts are isomorphic when their entropy is equal.

In probability theory, a random measure is a measure-valued random element. Random measures are for example used in the theory of random processes, where they form many important point processes such as Poisson point processes and Cox processes.

In actuarial science and applied probability, ruin theory uses mathematical models to describe an insurer's vulnerability to insolvency (ruin). In such models, the key quantities of interest are the probability of ruin, the distribution of the surplus immediately prior to ruin, and the deficit at the time of ruin.

In probability theory, a Markov kernel is a map that, in the general theory of Markov processes, plays the role that the transition matrix plays in the theory of Markov processes with a finite state space.

In probability theory, an interacting particle system (IPS) is a stochastic process on a configuration space determined by a site space (a countably infinite graph) and a local state space (a compact metric space). More precisely, IPS are continuous-time Markov jump processes describing the collective behavior of stochastically interacting components. IPS are the continuous-time analogue of stochastic cellular automata.

In probability, statistics and related fields, a Poisson point process is a type of random mathematical object that consists of points randomly located on a mathematical space. The Poisson point process is often called simply the Poisson process, but it is also called a Poisson random measure, Poisson random point field or Poisson point field. This point process has convenient mathematical properties, which has led to it being frequently defined in Euclidean space and used as a mathematical model for seemingly random processes in numerous disciplines such as astronomy, biology, ecology, geology, seismology, physics, economics, image processing, and telecommunications.

In probability theory, an intensity measure is a measure that is derived from a random measure. The intensity measure is a non-random measure and is defined as the expectation value of the random measure of a set, hence it corresponds to the average volume the random measure assigns to a set. The intensity measure contains important information about the properties of the random measure. A Poisson point process, interpreted as a random measure, is for example uniquely determined by its intensity measure.

In probability theory, a transition kernel (or simply kernel) is a function with several different applications. Kernels can, for example, be used to define random measures or stochastic processes. The most important examples of kernels are the Markov kernels.

Cramér's theorem is a fundamental result in the theory of large deviations, a subdiscipline of probability theory. It determines the rate function of a sequence of i.i.d. random variables. A weak version of this result was first shown by Harald Cramér in 1938.

In the theory of stochastic processes, a subdiscipline of probability theory, filtrations are totally ordered collections of subsets that are used to model the information that is available at a given point and therefore play an important role in the formalization of random processes.

A mixed binomial process is a special point process in probability theory. Mixed binomial processes arise naturally from restrictions of (mixed) Poisson processes to bounded intervals.

The mapping theorem is a theorem in the theory of point processes, a subdiscipline of probability theory. It describes how a Poisson point process is altered under measurable transformations. This makes it possible to construct more complex Poisson point processes from homogeneous Poisson point processes and can, for example, be used to simulate these more complex processes in a manner similar to inverse transform sampling.
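The simulation idea can be sketched as follows, under stated assumptions: a homogeneous Poisson point process with rate 10 on [0, 1) is pushed through the map x ↦ x², and the mean number of mapped points in [0, 0.25) is compared with the image of the intensity measure, which for this map is rate · √0.25. The rate, the map, the test set and all function names are illustrative choices, not taken from the text.

```python
import random


def homogeneous_poisson(rate, rng):
    """Points of a homogeneous Poisson process on [0, 1), built from exponential gaps."""
    points = []
    t = rng.expovariate(rate)
    while t < 1.0:
        points.append(t)
        t += rng.expovariate(rate)
    return points


def mapped_points(rate, rng, transform):
    """Apply a measurable map to every point of a homogeneous Poisson process."""
    return [transform(p) for p in homogeneous_poisson(rate, rng)]


def mean_count_below(threshold, rate, n_samples, seed):
    """Monte Carlo estimate of the expected number of mapped points in [0, threshold)."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_samples):
        pts = mapped_points(rate, rng, lambda x: x * x)
        total += sum(p < threshold for p in pts)
    return total / n_samples


rate = 10.0
threshold = 0.25
mean_count = mean_count_below(threshold, rate, n_samples=20000, seed=2)
# Image of the intensity measure: {x : x^2 < a} = [0, sqrt(a)), so the mass is rate * sqrt(a).
expected = rate * threshold ** 0.5
print("simulated mean count:", round(mean_count, 3))
print("image intensity:     ", expected)
```

The mapped points are denser near zero, so the resulting process is no longer homogeneous even though it was generated from a homogeneous one.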