Mathematical optimization or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

Simulated annealing (SA) is a probabilistic technique for approximating the global optimum of a given function. Specifically, it is a metaheuristic to approximate global optimization in a large search space for an optimization problem. For large numbers of local optima, SA can find the global optima. It is often used when the search space is discrete. For problems where finding an approximate global optimum is more important than finding a precise local optimum in a fixed amount of time, simulated annealing may be preferable to exact algorithms such as gradient descent or branch and bound.

In computer science, local search is a heuristic method for solving computationally hard optimization problems. Local search can be used on problems that can be formulated as finding a solution maximizing a criterion among a number of candidate solutions. Local search algorithms move from solution to solution in the space of candidate solutions by applying local changes, until a solution deemed optimal is found or a time bound is elapsed.

In numerical analysis, hill climbing is a mathematical optimization technique which belongs to the family of local search. It is an iterative algorithm that starts with an arbitrary solution to a problem, then attempts to find a better solution by making an incremental change to the solution. If the change produces a better solution, another incremental change is made to the new solution, and so on until no further improvements can be found.

Tabu search (TS) is a metaheuristic search method employing local search methods used for mathematical optimization. It was created by Fred W. Glover in 1986 and formalized in 1989.

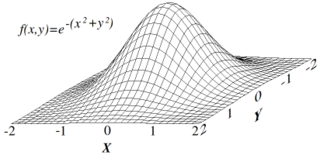

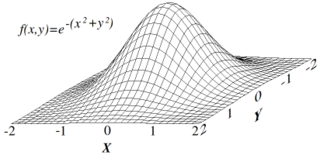

Global optimization is a branch of applied mathematics and numerical analysis that attempts to find the global minima or maxima of a function or a set of functions on a given set. It is usually described as a minimization problem because the maximization of the real-valued function is equivalent to the minimization of the function .

In computer science and operations research, the ant colony optimization algorithm (ACO) is a probabilistic technique for solving computational problems which can be reduced to finding good paths through graphs. Artificial ants stand for multi-agent methods inspired by the behavior of real ants. The pheromone-based communication of biological ants is often the predominant paradigm used. Combinations of artificial ants and local search algorithms have become a method of choice for numerous optimization tasks involving some sort of graph, e.g., vehicle routing and internet routing.

In computer science and mathematical optimization, a metaheuristic is a higher-level procedure or heuristic designed to find, generate, tune, or select a heuristic that may provide a sufficiently good solution to an optimization problem or a machine learning problem, especially with incomplete or imperfect information or limited computation capacity. Metaheuristics sample a subset of solutions which is otherwise too large to be completely enumerated or otherwise explored. Metaheuristics may make relatively few assumptions about the optimization problem being solved and so may be usable for a variety of problems.

The greedy randomized adaptive search procedure is a metaheuristic algorithm commonly applied to combinatorial optimization problems. GRASP typically consists of iterations made up from successive constructions of a greedy randomized solution and subsequent iterative improvements of it through a local search. The greedy randomized solutions are generated by adding elements to the problem's solution set from a list of elements ranked by a greedy function according to the quality of the solution they will achieve. To obtain variability in the candidate set of greedy solutions, well-ranked candidate elements are often placed in a restricted candidate list (RCL), and chosen at random when building up the solution. This kind of greedy randomized construction method is also known as a semi-greedy heuristic, first described in Hart and Shogan (1987).

In computer science, the min-conflicts algorithm is a search algorithm or heuristic method to solve constraint satisfaction problems.

Boender-Rinnooy-Stougie-Timmer algorithm (BRST) is an optimization algorithm suitable for finding global optimum of black box functions. In their paper Boender et al. describe their method as a stochastic method involving a combination of sampling, clustering and local search, terminating with a range of confidence intervals on the value of the global minimum.

Stochastic optimization (SO) methods are optimization methods that generate and use random variables. For stochastic problems, the random variables appear in the formulation of the optimization problem itself, which involves random objective functions or random constraints. Stochastic optimization methods also include methods with random iterates. Some stochastic optimization methods use random iterates to solve stochastic problems, combining both meanings of stochastic optimization. Stochastic optimization methods generalize deterministic methods for deterministic problems.

Guided local search is a metaheuristic search method. A meta-heuristic method is a method that sits on top of a local search algorithm to change its behavior.

Roberto Battiti is an Italian computer scientist, Professor of computer science at the University of Trento, director of the LIONlab, and deputy director of the DISI Department and delegate for technology transfer.

In computational engineering, Luus–Jaakola (LJ) denotes a heuristic for global optimization of a real-valued function. In engineering use, LJ is not an algorithm that terminates with an optimal solution; nor is it an iterative method that generates a sequence of points that converges to an optimal solution. However, when applied to a twice continuously differentiable function, the LJ heuristic is a proper iterative method, that generates a sequence that has a convergent subsequence; for this class of problems, Newton's method is recommended and enjoys a quadratic rate of convergence, while no convergence rate analysis has been given for the LJ heuristic. In practice, the LJ heuristic has been recommended for functions that need be neither convex nor differentiable nor locally Lipschitz: The LJ heuristic does not use a gradient or subgradient when one be available, which allows its application to non-differentiable and non-convex problems.

Variable neighborhood search (VNS), proposed by Mladenović & Hansen in 1997, is a metaheuristic method for solving a set of combinatorial optimization and global optimization problems. It explores distant neighborhoods of the current incumbent solution, and moves from there to a new one if and only if an improvement was made. The local search method is applied repeatedly to get from solutions in the neighborhood to local optima. VNS was designed for approximating solutions of discrete and continuous optimization problems and according to these, it is aimed for solving linear program problems, integer program problems, mixed integer program problems, nonlinear program problems, etc.

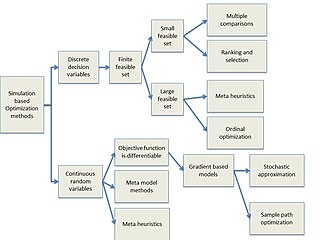

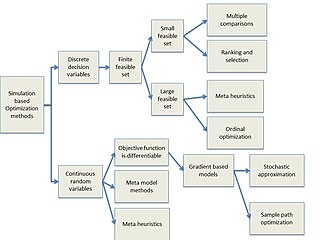

Simulation-based optimization integrates optimization techniques into simulation modeling and analysis. Because of the complexity of the simulation, the objective function may become difficult and expensive to evaluate. Usually, the underlying simulation model is stochastic, so that the objective function must be estimated using statistical estimation techniques.

In applied mathematics, Basin-hopping is a global optimization technique that iterates by performing random perturbation of coordinates, performing local optimization, and accepting or rejecting new coordinates based on a minimized function value. The algorithm was described in 1997 by David J. Wales and Jonathan Doye. It is a particularly useful algorithm for global optimization in very high-dimensional landscapes, such as finding the minimum energy structure for molecules. The method is inspired from Monte-Carlo Minimization first suggested by Li and Scheraga.