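In vector calculus, the gradient of a scalar-valued differentiable function $f$ of several variables is the vector field $\nabla f$ whose value at a point $p$ is the vector whose components are the partial derivatives of $f$ at $p$. That is, for $f\colon \mathbb{R}^{n}\to \mathbb{R}$, its gradient $\nabla f\colon \mathbb{R}^{n}\to \mathbb{R}^{n}$ is defined at the point $p=(x_{1},\ldots ,x_{n})$ in n-dimensional space as the vector:

$$\nabla f(p)={\begin{bmatrix}{\dfrac {\partial f}{\partial x_{1}}}(p)\\\vdots \\{\dfrac {\partial f}{\partial x_{n}}}(p)\end{bmatrix}}$$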
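The componentwise definition of the gradient can be illustrated numerically. The following is a minimal sketch, assuming central finite differences as the approximation scheme; the names `numerical_gradient` and `example_f` and the step size `h` are illustrative choices, not part of the definition.

```python
from typing import Callable, List, Sequence


def numerical_gradient(f: Callable[[Sequence[float]], float],
                       p: Sequence[float],
                       h: float = 1e-6) -> List[float]:
    """Approximate each partial derivative of f at p by a central difference."""
    grad = []
    for i in range(len(p)):
        forward = list(p)
        backward = list(p)
        forward[i] += h    # step forward along coordinate i
        backward[i] -= h   # step backward along coordinate i
        grad.append((f(forward) - f(backward)) / (2.0 * h))
    return grad


def example_f(x: Sequence[float]) -> float:
    # Hypothetical test function f(x, y) = x^2 + 3xy,
    # whose exact gradient is (2x + 3y, 3x).
    return x[0] ** 2 + 3.0 * x[0] * x[1]


grad_at_p = numerical_gradient(example_f, [1.0, 2.0])
```

For this test function the exact gradient at (1, 2) is (8, 3), so `grad_at_p` should agree with that up to rounding error.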

In geometry, a geodesic is commonly a curve representing in some sense the shortest path (arc) between two points in a surface, or more generally in a Riemannian manifold. The term also has meaning in any differentiable manifold with a connection. It is a generalization of the notion of a "straight line" to a more general setting.

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equality constraints. It is named after the mathematician Joseph-Louis Lagrange. The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and gradients of the constraints rather naturally leads to a reformulation of the original problem, known as the Lagrangian function.
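As a small worked illustration, consider the hypothetical problem of maximizing f(x, y) = x + y subject to x² + y² = 1. The sketch below assumes the sign convention L(x, y, λ) = f(x, y) − λ(x² + y² − 1) and checks that the hand-derived candidate x = y = λ = 1/√2 makes every partial derivative of the Lagrangian vanish; the function name `lagrangian_gradient` is an illustrative choice.

```python
import math


def lagrangian_gradient(x: float, y: float, lam: float):
    """Gradient of L(x, y, lam) = (x + y) - lam * (x^2 + y^2 - 1)."""
    dL_dx = 1.0 - 2.0 * lam * x          # df/dx - lam * dg/dx
    dL_dy = 1.0 - 2.0 * lam * y          # df/dy - lam * dg/dy
    dL_dlam = -(x * x + y * y - 1.0)     # vanishes exactly when the constraint holds
    return (dL_dx, dL_dy, dL_dlam)


# Candidate obtained by solving the three stationarity equations by hand.
x_star = y_star = 1.0 / math.sqrt(2.0)
lam_star = 1.0 / math.sqrt(2.0)

residual = lagrangian_gradient(x_star, y_star, lam_star)
```

All three entries of `residual` are zero up to rounding, which is the unconstrained derivative test applied to the Lagrangian rather than to f itself.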

In Riemannian geometry, the sectional curvature is one of the ways to describe the curvature of Riemannian manifolds with dimension greater than 1. The sectional curvature K(σp) depends on a two-dimensional linear subspace σp of the tangent space at a point p of the manifold. It can be defined geometrically as the Gaussian curvature of the surface which has the plane σp as a tangent plane at p, obtained from geodesics which start at p in the directions of σp. The sectional curvature is a real-valued function on the 2-Grassmannian bundle over the manifold.

In mathematics, specifically in differential topology, Morse theory enables one to analyze the topology of a manifold by studying differentiable functions on that manifold. According to the basic insights of Marston Morse, a typical differentiable function on a manifold will reflect the topology quite directly. Morse theory allows one to find CW structures and handle decompositions on manifolds and to obtain substantial information about their homology.

In mathematics, the Hessian matrix or Hessian is a square matrix of second-order partial derivatives of a scalar-valued function, or scalar field. It describes the local curvature of a function of many variables. The Hessian matrix was developed in the 19th century by the German mathematician Ludwig Otto Hesse and later named after him. Hesse originally used the term "functional determinants".
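The matrix of second-order partial derivatives can be approximated numerically. This is a minimal sketch, assuming a central finite-difference scheme; `numerical_hessian`, `example_f` and the step size `h` are illustrative names and values rather than a standard API.

```python
from typing import Callable, List, Sequence


def numerical_hessian(f: Callable[[Sequence[float]], float],
                      p: Sequence[float],
                      h: float = 1e-4) -> List[List[float]]:
    """Approximate the Hessian of f at p with central differences."""
    n = len(p)

    def shifted(i: int, si: int, j: int, sj: int) -> float:
        # Evaluate f at p + si*h*e_i + sj*h*e_j.
        q = list(p)
        q[i] += si * h
        q[j] += sj * h
        return f(q)

    hessian = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            hessian[i][j] = (shifted(i, 1, j, 1) - shifted(i, 1, j, -1)
                             - shifted(i, -1, j, 1) + shifted(i, -1, j, -1)
                             ) / (4.0 * h * h)
    return hessian


def example_f(x: Sequence[float]) -> float:
    # Hypothetical test function f(x, y) = x^2 * y + y^3,
    # whose exact Hessian is [[2y, 2x], [2x, 6y]].
    return x[0] ** 2 * x[1] + x[1] ** 3


hess_at_p = numerical_hessian(example_f, [1.0, 2.0])
```

At the point (1, 2) the exact Hessian of this test function is [[4, 2], [2, 12]]; the approximation is symmetric, as expected for a twice continuously differentiable function.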

In mathematics, the covariant derivative is a way of specifying a derivative along tangent vectors of a manifold. Alternatively, the covariant derivative is a way of introducing and working with a connection on a manifold by means of a differential operator, to be contrasted with the approach given by a principal connection on the frame bundle – see affine connection. In the special case of a manifold isometrically embedded into a higher-dimensional Euclidean space, the covariant derivative can be viewed as the orthogonal projection of the Euclidean directional derivative onto the manifold's tangent space. In this case the Euclidean derivative is broken into two parts, the extrinsic normal component and the intrinsic covariant derivative component.

This is a glossary of some terms used in Riemannian geometry and metric geometry; it does not cover the terminology of differential topology.

In mathematics, a Killing vector field, named after Wilhelm Killing, is a vector field on a Riemannian manifold that preserves the metric. Killing fields are the infinitesimal generators of isometries; that is, flows generated by Killing fields are continuous isometries of the manifold. More simply, the flow generates a symmetry, in the sense that moving each point of an object the same distance in the direction of the Killing vector will not distort distances on the object.

Interior-point methods are a certain class of algorithms that solve linear and nonlinear convex optimization problems.

In differential geometry, the Laplace–Beltrami operator is a generalization of the Laplace operator to functions defined on submanifolds in Euclidean space and, even more generally, on Riemannian and pseudo-Riemannian manifolds. It is named after Pierre-Simon Laplace and Eugenio Beltrami.

In mathematical optimization, the Karush–Kuhn–Tucker (KKT) conditions, also known as the Kuhn–Tucker conditions, are first derivative tests for a solution in nonlinear programming to be optimal, provided that some regularity conditions are satisfied.
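For a single inequality constraint g(x) ≤ 0, the conditions are usually stated as stationarity, primal feasibility, dual feasibility and complementary slackness. The sketch below checks them for a hypothetical one-dimensional problem, minimizing (x − 2)² subject to x − 1 ≤ 0; the function `check_kkt` and the tolerance `tol` are illustrative assumptions.

```python
def check_kkt(x: float, mu: float, tol: float = 1e-9) -> dict:
    """Check the KKT conditions for: minimise (x - 2)^2 subject to x - 1 <= 0."""
    grad_f = 2.0 * (x - 2.0)   # derivative of the objective
    grad_g = 1.0               # derivative of the constraint function g(x) = x - 1
    g = x - 1.0
    return {
        "stationarity": abs(grad_f + mu * grad_g) <= tol,
        "primal_feasibility": g <= tol,
        "dual_feasibility": mu >= -tol,
        "complementary_slackness": abs(mu * g) <= tol,
    }


# The constrained minimiser is x = 1 with multiplier mu = 2.
kkt_at_optimum = check_kkt(x=1.0, mu=2.0)

# A feasible interior point that is not optimal fails stationarity.
kkt_at_interior = check_kkt(x=0.0, mu=0.0)
```

All four conditions hold at x = 1 with multiplier 2, whereas the feasible point x = 0 fails only the stationarity test.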

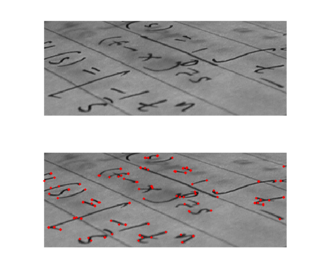

Corner detection is an approach used within computer vision systems to extract certain kinds of features and infer the contents of an image. Corner detection is frequently used in motion detection, image registration, video tracking, image mosaicing, panorama stitching, 3D reconstruction and object recognition. Corner detection overlaps with the topic of interest point detection.

In mathematics, the structure tensor, also referred to as the second-moment matrix, is a matrix derived from the gradient of a function. It describes the distribution of the gradient in a specified neighborhood around a point and makes the information invariant with respect to the observing coordinates. The structure tensor is often used in image processing and computer vision.
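For a two-dimensional image, the structure tensor at a pixel can be sketched as the sum of outer products of the image gradient over a window around that pixel. The example below is a minimal illustration, assuming an unweighted square window and central-difference gradients (practical implementations typically use a Gaussian window); `structure_tensor` and `ramp` are hypothetical names.

```python
from typing import List


def structure_tensor(image: List[List[float]],
                     row: int, col: int, radius: int = 1) -> List[List[float]]:
    """Sum the gradient outer products over a (2*radius+1)^2 window.

    The window must stay at least one pixel away from the image border,
    because the gradient uses central differences.
    """
    sxx = sxy = syy = 0.0
    for r in range(row - radius, row + radius + 1):
        for c in range(col - radius, col + radius + 1):
            ix = (image[r][c + 1] - image[r][c - 1]) / 2.0   # horizontal derivative
            iy = (image[r + 1][c] - image[r - 1][c]) / 2.0   # vertical derivative
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
    return [[sxx, sxy], [sxy, syy]]


# Hypothetical 5x5 test image whose intensity increases by 1 per column.
ramp = [[float(c) for c in range(5)] for _ in range(5)]
tensor = structure_tensor(ramp, 2, 2)
```

On this ramp every pixel has gradient (1, 0), so the 3×3 window yields the tensor [[9, 0], [0, 0]]: one nonzero eigenvalue, indicating a single dominant gradient direction.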

Covariance matrix adaptation evolution strategy (CMA-ES) is a particular kind of strategy for numerical optimization. Evolution strategies (ES) are stochastic, derivative-free methods for numerical optimization of non-linear or non-convex continuous optimization problems. They belong to the class of evolutionary algorithms and evolutionary computation. An evolutionary algorithm is broadly based on the principle of biological evolution, namely the repeated interplay of variation and selection: in each generation (iteration) new individuals are generated by variation, usually in a stochastic way, of the current parental individuals. Then, some individuals are selected to become the parents in the next generation based on their fitness or objective function value $f(x)$. In this way, over the generation sequence, individuals with better and better $f$-values are generated.
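The variation and selection loop can be sketched in a few lines. Note that this is a plain elitist evolution strategy with a fixed mutation step size, not CMA-ES itself: it does not adapt a covariance matrix or step size. The function names, the objective `sphere`, and all parameter values are illustrative assumptions.

```python
import random
from typing import Callable, List, Sequence


def sphere(x: Sequence[float]) -> float:
    """Hypothetical objective: sum of squares, minimised at the origin."""
    return sum(xi * xi for xi in x)


def evolve(f: Callable[[Sequence[float]], float],
           start: Sequence[float],
           sigma: float = 0.3,
           mu: int = 3,
           lam: int = 12,
           generations: int = 60,
           seed: int = 0) -> List[float]:
    """Minimise f with a simple (mu + lambda) evolution strategy."""
    rng = random.Random(seed)
    parents = [list(start) for _ in range(mu)]
    for _generation in range(generations):
        # Variation: each offspring is a Gaussian perturbation of a random parent.
        offspring = []
        for _child in range(lam):
            parent = rng.choice(parents)
            offspring.append([xi + sigma * rng.gauss(0.0, 1.0) for xi in parent])
        # Selection: keep the mu individuals with the lowest f-values.
        # Parents compete with offspring, so the best value never gets worse.
        parents = sorted(parents + offspring, key=f)[:mu]
    return parents[0]


start = [3.0, -2.0]
best = evolve(sphere, start)
```

Because the parents compete with their offspring in each generation, the best objective value is non-increasing over the generation sequence.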

In mathematics, the Abel–Jacobi map is a construction of algebraic geometry which relates an algebraic curve to its Jacobian variety. In Riemannian geometry, it is a more general construction mapping a manifold to its Jacobi torus. The name derives from the theorem of Abel and Jacobi that two effective divisors are linearly equivalent if and only if they are indistinguishable under the Abel–Jacobi map.

In mathematics, a quasitoric manifold is a topological analogue of the nonsingular projective toric variety of algebraic geometry. A smooth $2n$-dimensional manifold is a quasitoric manifold if it admits a smooth, locally standard action of an $n$-dimensional torus, with orbit space an $n$-dimensional simple convex polytope.

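Proximal gradient methods for learning is an area of research in optimization and statistical learning theory which studies algorithms for a general class of convex regularization problems where the regularization penalty may not be differentiable. One such example is $\ell_{1}$ regularization (also known as Lasso) of the form

$$\min_{w\in \mathbb{R}^{d}}{\frac {1}{n}}\sum_{i=1}^{n}(y_{i}-\langle w,x_{i}\rangle )^{2}+\lambda \|w\|_{1},\quad {\text{where }}x_{i}\in \mathbb{R}^{d}{\text{ and }}y_{i}\in \mathbb{R}.$$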
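A proximal gradient iteration alternates a gradient step on the smooth part of the objective with a proximal step on the non-differentiable penalty. The sketch below assumes the penalty is λ times the ℓ1 norm, whose proximal operator is soft-thresholding, and uses a deliberately simple smooth term ½‖w − a‖² so that the exact minimizer is known in closed form. All names (`soft_threshold`, `proximal_gradient`, `a`) and parameter values are illustrative.

```python
import math
from typing import Callable, List, Sequence


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t * |.|: shrink v towards zero by t."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def proximal_gradient(grad: Callable[[Sequence[float]], List[float]],
                      w0: Sequence[float],
                      step: float,
                      lam: float,
                      iterations: int = 100) -> List[float]:
    """Minimise smooth(w) + lam * ||w||_1, given the gradient of the smooth part."""
    w = list(w0)
    for _ in range(iterations):
        g = grad(w)
        # Forward (gradient) step on the smooth part,
        # then backward (proximal) step on the l1 penalty.
        w = [soft_threshold(wi - step * gi, step * lam) for wi, gi in zip(w, g)]
    return w


# Hypothetical smooth part: 0.5 * ||w - a||^2, with gradient w - a.
a = [3.0, 0.5, -3.0]


def grad_smooth(w: Sequence[float]) -> List[float]:
    return [wi - ai for wi, ai in zip(w, a)]


w_hat = proximal_gradient(grad_smooth, [0.0, 0.0, 0.0], step=0.5, lam=1.0)
```

For this toy problem the minimizer is the soft-thresholded vector (2, 0, −2); the middle coordinate is set exactly to zero, which is the sparsity effect associated with ℓ1 penalties.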

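In differential geometry, a complete Riemannian manifold $(M,g)$ is called a Ricci soliton if, and only if, there exists a smooth vector field $V$ such that

$$\operatorname{Ric}(g)=\lambda \,g-{\frac {1}{2}}{\mathcal {L}}_{V}g$$

for some constant $\lambda \in \mathbb{R}$, where $\operatorname{Ric}$ is the Ricci curvature tensor and ${\mathcal {L}}_{V}$ denotes the Lie derivative along $V$.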

In mathematics, a harmonic morphism is a (smooth) map $\phi \colon (M^{m},g)\to (N^{n},h)$ between Riemannian manifolds that pulls back real-valued harmonic functions on the codomain to harmonic functions on the domain. Harmonic morphisms form a special class of harmonic maps, i.e. those that are horizontally (weakly) conformal.