Related Research Articles

In machine learning, early stopping is a form of regularization used to avoid overfitting when training a learner with an iterative method, such as gradient descent. Such methods update the learner so as to make it better fit the training data with each iteration. Up to a point, this improves the learner's performance on data outside of the training set. Past that point, however, improving the learner's fit to the training data comes at the expense of increased generalization error. Early stopping rules provide guidance as to how many iterations can be run before the learner begins to overfit. Early stopping rules have been employed in many different machine learning methods, with varying amounts of theoretical foundation.
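As an illustration, one common heuristic is a patience rule: training stops once the loss on a held-out validation set has failed to improve for a fixed number of consecutive iterations. The sketch below assumes this rule. The function name `early_stopping` and the `patience` parameter are hypothetical choices made for this example and do not come from any particular library.

```python
def early_stopping(val_losses, patience=3):
    """Scan validation losses in order and stop when no improvement has been
    seen for `patience` consecutive iterations.

    `val_losses` may be any iterable, including a generator that performs one
    training iteration per item, so later iterations are never run after a stop.
    Returns (best_iteration, last_iteration_examined); both are -1 if empty.
    """
    best_loss = float("inf")
    best_iter = -1
    last_iter = -1
    for last_iter, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best validation loss: remember where it happened.
            best_loss, best_iter = loss, last_iter
        elif last_iter - best_iter >= patience:
            # No improvement for `patience` iterations: stop training.
            break
    return best_iter, last_iter


# Hypothetical validation-loss curve: improves, then degrades (overfitting).
losses = [1.0, 0.8, 0.6, 0.5, 0.55, 0.6, 0.7, 0.4]
print(early_stopping(losses, patience=3))  # (3, 6)
```

In this example the rule stops at iteration 6 and reports iteration 3 as the best one. The lower loss at iteration 7 is never observed, which shows the trade-off a small patience value makes.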

John Wilder Tukey was an American mathematician and statistician, best known for the development of the fast Fourier transform (FFT) algorithm and the box plot. The Tukey range test, the Tukey lambda distribution, the Tukey test of additivity, and the Teichmüller–Tukey lemma all bear his name. He is also credited with coining the term "bit" and with the first published use of the word "software".

Functional data analysis (FDA) is a branch of statistics that analyses data providing information about curves, surfaces or anything else varying over a continuum. In its most general form, under an FDA framework, each sample element of functional data is considered to be a random function. The physical continuum over which these functions are defined is often time, but may also be spatial location, wavelength, probability, etc. Intrinsically, functional data are infinite dimensional. The high intrinsic dimensionality of these data brings challenges for theory as well as computation, where these challenges vary with how the functional data were sampled. However, the high or infinite dimensional structure of the data is a rich source of information and there are many interesting challenges for research and data analysis.

Projection pursuit (PP) is a type of statistical technique that involves finding the most "interesting" possible projections in multidimensional data. Often, projections that deviate more from a normal distribution are considered to be more interesting. As each projection is found, the data are reduced by removing the component along that projection, and the process is repeated to find new projections; this is the "pursuit" aspect that motivated the technique known as matching pursuit.

Nonparametric regression is a category of regression analysis in which the predictor does not take a predetermined form but is constructed according to information derived from the data. That is, no parametric form is assumed for the relationship between predictors and dependent variable. Nonparametric regression requires larger sample sizes than regression based on parametric models because the data must supply the model structure as well as the model estimates.
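A minimal sketch of one nonparametric estimator, the Nadaraya-Watson kernel smoother, is given below. It is chosen here only as an example; the text above does not single out any specific method. The prediction at a query point is a weighted average of the observed responses, so the shape of the fitted curve comes from the data rather than from a preset formula. The Gaussian kernel and the `bandwidth` parameter are assumptions of this sketch.

```python
import math


def kernel_regression(x_train, y_train, x_query, bandwidth=1.0):
    """Nadaraya-Watson estimate at `x_query` using a Gaussian kernel.

    Each training response is weighted by how close its input lies to the
    query point; `bandwidth` controls how quickly the weights decay.
    Assumes the query is not so far from all data that every weight
    underflows to zero.
    """
    weights = [
        math.exp(-0.5 * ((x_query - x) / bandwidth) ** 2) for x in x_train
    ]
    total = sum(weights)
    return sum(w * y for w, y in zip(weights, y_train)) / total


# Two observations placed symmetrically around the query receive equal
# weight, so the estimate is their average.
print(kernel_regression([-1.0, 1.0], [0.0, 2.0], x_query=0.0))  # 1.0
```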

Bradley Efron is an American statistician. Efron has been president of the American Statistical Association (2004) and of the Institute of Mathematical Statistics (1987–1988). He is a past editor of the Journal of the American Statistical Association, and he is the founding editor of the Annals of Applied Statistics. Efron is also the recipient of many awards.

Jack Carl Kiefer was an American mathematical statistician at Cornell University and the University of California, Berkeley. His research interests included the optimal design of experiments, which was his major research area, as well as a wide variety of topics in mathematical statistics.

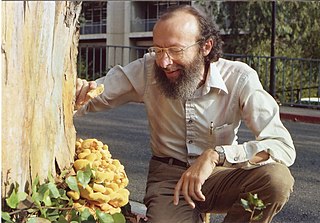

In machine learning and computational learning theory, LogitBoost is a boosting algorithm formulated by Jerome Friedman, Trevor Hastie, and Robert Tibshirani.

In statistics, multivariate adaptive regression splines (MARS) is a form of regression analysis introduced by Jerome H. Friedman in 1991. It is a non-parametric regression technique and can be seen as an extension of linear models that automatically models nonlinearities and interactions between variables.
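As a rough illustration, MARS models are usually described as weighted sums of hinge functions of the form max(0, x - c) and max(0, c - x), where c is a knot. The sketch below evaluates one such pair by hand. The function names and coefficient values are hypothetical, and the adaptive search that MARS uses to select knots and interaction terms is not shown.

```python
def hinge(x, knot):
    """Right hinge: zero up to the knot, then rising linearly."""
    return max(0.0, x - knot)


def mirrored_hinge(x, knot):
    """Left hinge: falling linearly up to the knot, then zero."""
    return max(0.0, knot - x)


def mars_like_model(x, intercept, knot, slope_right, slope_left):
    """Piecewise-linear model built from one mirrored pair of hinges.

    This only evaluates a fixed model; a real MARS fit would choose the
    knots and coefficients from the data.
    """
    return (
        intercept
        + slope_right * hinge(x, knot)
        + slope_left * mirrored_hinge(x, knot)
    )


# The slope changes at the knot x = 2: -1.0 to the left, 0.5 to the right.
print(mars_like_model(3.0, intercept=1.0, knot=2.0, slope_right=0.5, slope_left=-1.0))  # 1.5
```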

In statistics, an additive model (AM) is a nonparametric regression method. It was suggested by Jerome H. Friedman and Werner Stuetzle (1981) and is an essential part of the ACE algorithm. The AM uses a one-dimensional smoother to build a restricted class of nonparametric regression models. Because of this, it is less affected by the curse of dimensionality than, for example, a p-dimensional smoother. Furthermore, the AM is more flexible than a standard linear model, while being more interpretable than a general regression surface, at the cost of approximation errors. Like many other machine-learning methods, the AM is subject to problems of model selection, overfitting, and multicollinearity.

In statistics, the Huber loss is a loss function used in robust regression that is less sensitive to outliers in the data than the squared error loss. A variant for classification is also sometimes used.
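The standard definition of the Huber loss is quadratic for residuals of magnitude at most a threshold δ and linear beyond it, which is what limits the influence of outliers. A minimal sketch follows; the default `delta=1.0` is an arbitrary choice for the example.

```python
def huber_loss(residual, delta=1.0):
    """Huber loss of a single residual.

    Quadratic (0.5 * r^2) when |r| <= delta, linear
    (delta * (|r| - 0.5 * delta)) otherwise. The two pieces meet with
    matching value and slope at |r| = delta.
    """
    abs_r = abs(residual)
    if abs_r <= delta:
        return 0.5 * residual ** 2
    return delta * (abs_r - 0.5 * delta)


# A small residual is penalised like squared error; a large one grows
# only linearly (the squared-error loss 0.5 * r^2 would give 4.5 here).
print(huber_loss(0.5))  # 0.125
print(huber_loss(3.0))  # 2.5
```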

Gradient boosting is a machine learning technique based on boosting in a functional space, where the target is pseudo-residuals rather than the typical residuals used in traditional boosting. It gives a prediction model in the form of an ensemble of weak prediction models, i.e., models that make very few assumptions about the data, which are typically simple decision trees. When a decision tree is the weak learner, the resulting algorithm is called gradient-boosted trees; it often outperforms random forests. A gradient-boosted trees model is built in a stage-wise fashion as in other boosting methods, but it generalizes the other methods by allowing optimization of an arbitrary differentiable loss function.
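The stage-wise construction can be sketched as follows, under simplifying assumptions that are not part of the description above: inputs are one-dimensional, the loss is squared error (for which the pseudo-residuals reduce to ordinary residuals y - F(x)), and the weak learner is a one-split regression stump. All function names and the `learning_rate` and `n_stages` parameters are hypothetical.

```python
def fit_stump(xs, targets):
    """Least-squares regression stump on 1-D inputs.

    Assumes `xs` contains at least two distinct values.
    Returns (threshold, left_mean, right_mean).
    """
    best = None
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left)
        sse += sum((t - right_mean) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_stages=50, learning_rate=0.1):
    """Fit an additive ensemble of stumps, one stage at a time."""
    init = sum(ys) / len(ys)  # constant initial model
    preds = [init] * len(ys)
    stumps = []
    for _ in range(n_stages):
        # Negative gradient of 0.5 * (y - F)^2 with respect to F is y - F.
        pseudo_residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, pseudo_residuals)
        stumps.append(stump)
        # Shrink each stage's contribution by the learning rate.
        preds = [
            p + learning_rate * predict_stump(stump, x)
            for p, x in zip(preds, xs)
        ]
    return init, stumps, learning_rate


def predict(model, x):
    init, stumps, learning_rate = model
    return init + learning_rate * sum(predict_stump(s, x) for s in stumps)


# Step-shaped toy data: the ensemble gradually recovers the two levels.
model = gradient_boost([0, 1, 2, 3, 4, 5], [0, 0, 0, 1, 1, 1])
print(round(predict(model, 0), 2), round(predict(model, 5), 2))
```

Replacing the line that computes `pseudo_residuals` with the negative gradient of another differentiable loss is what allows the same loop to optimize losses other than squared error.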

Robert Tibshirani is a professor in the Departments of Statistics and Biomedical Data Science at Stanford University. He was a professor at the University of Toronto from 1985 to 1998. In his work, he develops statistical tools for the analysis of complex datasets, most recently in genomics and proteomics.

Jacqueline Meulman is a Dutch statistician and professor emerita of Applied Statistics at the Mathematical Institute of Leiden University.

In machine learning, a probabilistic classifier is a classifier that is able to predict, given an observation of an input, a probability distribution over a set of classes, rather than only outputting the most likely class that the observation should belong to. Probabilistic classifiers provide classification that can be useful in its own right or when combining classifiers into ensembles.
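The difference between predicting a distribution and predicting only a label can be sketched as below. The sketch assumes that the classifier produces one real-valued score per class and converts the scores to probabilities with a softmax; this is one common choice, not the only one, and the function names and scores are hypothetical.

```python
import math


def softmax(scores):
    """Convert real-valued scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(scores_by_class):
    """Probabilistic output: a distribution over all classes."""
    labels = list(scores_by_class)
    probs = softmax([scores_by_class[label] for label in labels])
    return dict(zip(labels, probs))


def predict_label(scores_by_class):
    """Hard output: only the most probable class."""
    proba = predict_proba(scores_by_class)
    return max(proba, key=proba.get)


def average_proba(distributions):
    """Combine several classifiers by averaging their distributions."""
    labels = distributions[0].keys()
    n = len(distributions)
    return {label: sum(d[label] for d in distributions) / n for label in labels}


scores = {"cat": 2.0, "dog": 1.0, "bird": 0.1}  # hypothetical class scores
print(predict_proba(scores))
print(predict_label(scores))  # cat
```

Averaging distributions, as in `average_proba`, is only possible because each classifier reports probabilities rather than a single label.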

The outline of machine learning is provided as an overview of and topical guide to machine learning.

Lawrence C. Rafsky is an American data scientist, inventor, and entrepreneur. Rafsky created search algorithms and methodologies for the financial and news information industries. He is co-inventor of the Friedman-Rafsky Test commonly used to test goodness-of-fit for the multivariate normal distribution. Rafsky founded and became chief scientist for Acquire Media, a news and information syndication company, now a subsidiary of Moody's.

Peter Lukas Bühlmann is a Swiss mathematician and statistician.

Linda Hong Zhao is a Chinese-American statistician. She is a Professor of Statistics at the Wharton School of the University of Pennsylvania. She is a Fellow of the Institute of Mathematical Statistics. Zhao specializes in modern machine learning methods.

Charles "Chuck" Joel Stone was an American statistician and mathematician.
