Related Research Articles

A distributed system is a system whose components are located on different networked computers, which communicate and coordinate their actions by passing messages to one another. Distributed computing is a field of computer science that studies distributed systems.

In computer science, mutual exclusion is a property of concurrency control, which is instituted for the purpose of preventing race conditions. It is the requirement that one thread of execution never enters a critical section while a concurrent thread of execution is already accessing said critical section, which refers to an interval of time during which a thread of execution accesses a shared resource or shared memory.

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers which can perform over 1017 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (1011) to tens of teraFLOPS (1013). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.

In computer science, model checking or property checking is a method for checking whether a finite-state model of a system meets a given specification. This is typically associated with hardware or software systems, where the specification contains liveness requirements as well as safety requirements.

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

In compiler optimization, register allocation is the process of assigning local automatic variables and expression results to a limited number of processor registers.

In computer science, symbolic execution (also symbolic evaluation or symbex) is a means of analyzing a program to determine what inputs cause each part of a program to execute. An interpreter follows the program, assuming symbolic values for inputs rather than obtaining actual inputs as normal execution of the program would. It thus arrives at expressions in terms of those symbols for expressions and variables in the program, and constraints in terms of those symbols for the possible outcomes of each conditional branch. Finally, the possible inputs that trigger a branch can be determined by solving the constraints.

ESC/Java, the "Extended Static Checker for Java," is a programming tool that attempts to find common run-time errors in Java programs at compile time. The underlying approach used in ESC/Java is referred to as extended static checking, which is a collective name referring to a range of techniques for statically checking the correctness of various program constraints. For example, that an integer variable is greater-than-zero, or lies between the bounds of an array. This technique was pioneered in ESC/Java and can be thought of as an extended form of type checking. Extended static checking usually involves the use of an automated theorem prover and, in ESC/Java, the Simplify theorem prover was used.

In computer science, concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the outcome. This allows for parallel execution of the concurrent units, which can significantly improve overall speed of the execution in multi-processor and multi-core systems. In more technical terms, concurrency refers to the decomposability of a program, algorithm, or problem into order-independent or partially-ordered components or units of computation.

Model-based testing is an application of model-based design for designing and optionally also executing artifacts to perform software testing or system testing. Models can be used to represent the desired behavior of a system under test (SUT), or to represent testing strategies and a test environment. The picture on the right depicts the former approach.

Proof of work (PoW) is a form of cryptographic proof in which one party proves to others that a certain amount of a specific computational effort has been expended. Verifiers can subsequently confirm this expenditure with minimal effort on their part. The concept was invented by Moni Naor and Cynthia Dwork in 1993 as a way to deter denial-of-service attacks and other service abuses such as spam on a network by requiring some work from a service requester, usually meaning processing time by a computer. The term "proof of work" was first coined and formalized in a 1999 paper by Markus Jakobsson and Ari Juels.

NuSMV is a reimplementation and extension of SMV symbolic model checker, the first model checking tool based on binary decision diagrams (BDDs). The tool has been designed as an open architecture for model checking. It is aimed at reliable verification of industrially sized designs, for use as a backend for other verification tools and as a research tool for formal verification techniques.

In computing, reactive programming is a declarative programming paradigm concerned with data streams and the propagation of change. With this paradigm, it's possible to express static or dynamic data streams with ease, and also communicate that an inferred dependency within the associated execution model exists, which facilitates the automatic propagation of the changed data flow.

The Library of Efficient Data types and Algorithms (LEDA) is a proprietarily-licensed software library providing C++ implementations of a broad variety of algorithms for graph theory and computational geometry. It was originally developed by the Max Planck Institute for Informatics Saarbrücken. Since 2001, LEDA is further developed and distributed by the Algorithmic Solutions Software GmbH.

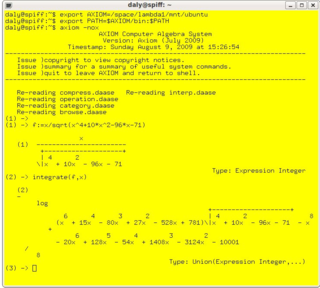

In mathematics and computer science, computer algebra, also called symbolic computation or algebraic computation, is a scientific area that refers to the study and development of algorithms and software for manipulating mathematical expressions and other mathematical objects. Although computer algebra could be considered a subfield of scientific computing, they are generally considered as distinct fields because scientific computing is usually based on numerical computation with approximate floating point numbers, while symbolic computation emphasizes exact computation with expressions containing variables that have no given value and are manipulated as symbols.

Concolic testing is a hybrid software verification technique that performs symbolic execution, a classical technique that treats program variables as symbolic variables, along a concrete execution path. Symbolic execution is used in conjunction with an automated theorem prover or constraint solver based on constraint logic programming to generate new concrete inputs with the aim of maximizing code coverage. Its main focus is finding bugs in real-world software, rather than demonstrating program correctness.

Extended static checking (ESC) is a collective name in computer science for a range of techniques for statically checking the correctness of various program constraints. ESC can be thought of as an extended form of type checking. As with type checking, ESC is performed automatically at compile time. This distinguishes it from more general approaches to the formal verification of software, which typically rely on human-generated proofs. Furthermore, it promotes practicality over soundness, in that it aims to dramatically reduce the number of false positives at the cost of introducing some false negatives. ESC can identify a range of errors which are currently outside the scope of a type checker, including division by zero, array out of bounds, integer overflow and null dereferences.

Join-patterns provides a way to write concurrent, parallel and distributed computer programs by message passing. Compared to the use of threads and locks, this is a high level programming model using communication constructs model to abstract the complexity of concurrent environment and to allow scalability. Its focus is on the execution of a chord between messages atomically consumed from a group of channels.

Gradual typing is a type system in which some variables and expressions may be given types and the correctness of the typing is checked at compile time and some expressions may be left untyped and eventual type errors are reported at runtime. Gradual typing allows software developers to choose either type paradigm as appropriate, from within a single language. In many cases gradual typing is added to an existing dynamic language, creating a derived language allowing but not requiring static typing to be used. In some cases a language uses gradual typing from the start.

David Lansing Dill is a computer scientist and academic noted for contributions to formal verification, electronic voting security, and computational systems biology.

References

- ↑ Hamez, Alexandre; Kordon, Fabrice; Thierry-Mieg, Yann (2007). "IibDMC: A Library to Operate Efficient Distributed Model Checking". 2007 IEEE International Parallel and Distributed Processing Symposium. pp. 1–8. doi:10.1109/IPDPS.2007.370647. ISBN 978-1-4244-0909-9. S2CID 12586847.

- ↑ Hamez, Alexandre; Kordon, Fabrice; Thierry-Mieg, Yann; Legond-Aubry, Fabrice (2007). "DMCG: A Distributed Symbolic Model Checker Based on GreatSPN". Petri Nets and Other Models of Concurrency – ICATPN 2007. Lecture Notes in Computer Science. Vol. 4546. pp. 495–504. doi:10.1007/978-3-540-73094-1_29. ISBN 978-3-540-73093-4.

- ↑ Accueil LIP6