The holographic principle is a property of string theories and a supposed property of quantum gravity. It states that the description of a volume of space can be thought of as encoded on a lower-dimensional boundary of the region, such as a light-like boundary, for example a gravitational horizon. First proposed by Gerard 't Hooft, it was given a precise string-theoretic interpretation by Leonard Susskind, who combined his ideas with earlier ones of 't Hooft and Charles Thorn. Susskind said, "The three-dimensional world of ordinary experience––the universe filled with galaxies, stars, planets, houses, boulders, and people––is a hologram, an image of reality coded on a distant two-dimensional surface." As pointed out by Raphael Bousso, Thorn observed in 1978 that string theory admits a lower-dimensional description in which gravity emerges in what would now be called a holographic way. The prime example of holography is the AdS/CFT correspondence.

A quantum computer is a computer that takes advantage of quantum mechanical phenomena. On small scales, physical matter exhibits properties of both particles and waves, and quantum computing leverages this behavior, specifically quantum superposition and entanglement, using specialized hardware that supports the preparation and manipulation of quantum states.
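As a rough illustration of superposition and entanglement, the sketch below simulates two qubits classically and prepares a Bell state. The helper names (`apply_hadamard_first`, `apply_cnot`, `bell_state`) are made up for this example, and the four-amplitude list is only a toy stand-in for real quantum hardware.

```python
import math


def apply_hadamard_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state.

    The state is a list of four amplitudes ordered |00>, |01>, |10>, |11>.
    """
    s = 1.0 / math.sqrt(2.0)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def bell_state():
    """Start in |00>, put the first qubit in superposition, then entangle."""
    return apply_cnot(apply_hadamard_first([1.0, 0.0, 0.0, 0.0]))


def probabilities(state):
    """Measurement probabilities in the computational basis (Born rule)."""
    return [abs(amplitude) ** 2 for amplitude in state]


if __name__ == "__main__":
    for label, p in zip(["00", "01", "10", "11"], probabilities(bell_state())):
        print(label, round(p, 3))
```

Only the outcomes 00 and 11 have non-zero probability, so the two measurement results are perfectly correlated even though each one on its own is random.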

Hypercomputation or super-Turing computation is a set of hypothetical models of computation that can provide outputs that are not Turing-computable. For example, a machine that could solve the halting problem would be a hypercomputer; so too would one that could correctly evaluate every statement in Peano arithmetic.

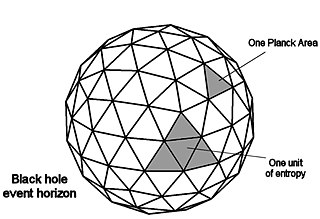

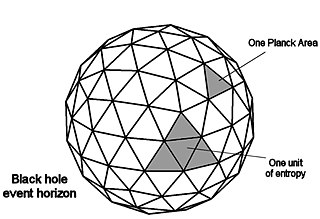

In physics, black hole thermodynamics is the area of study that seeks to reconcile the laws of thermodynamics with the existence of black hole event horizons. As the study of the statistical mechanics of black-body radiation led to the development of the theory of quantum mechanics, the effort to understand the statistical mechanics of black holes has had a deep impact upon the understanding of quantum gravity, leading to the formulation of the holographic principle.
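To make the thermodynamic quantities concrete, here is a minimal sketch that evaluates the standard Hawking temperature, T = ħc³/(8πGMk), and the Bekenstein-Hawking entropy, S = kc³A/(4Għ), for a non-rotating, uncharged (Schwarzschild) black hole. These formulas are not given in the text above; the function names and the rounded solar mass are assumptions chosen for illustration.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
K_B = 1.380649e-23       # Boltzmann constant, J/K
SOLAR_MASS = 1.989e30    # approximate solar mass in kg (illustrative value)


def hawking_temperature(mass_kg):
    """Hawking temperature in kelvin of a Schwarzschild black hole."""
    return HBAR * C**3 / (8.0 * math.pi * G * mass_kg * K_B)


def entropy_over_k(mass_kg):
    """Bekenstein-Hawking entropy divided by Boltzmann's constant."""
    # Horizon area from the Schwarzschild radius r = 2GM/c^2, A = 4*pi*r^2.
    horizon_area = 16.0 * math.pi * (G * mass_kg) ** 2 / C**4
    return horizon_area * C**3 / (4.0 * G * HBAR)


if __name__ == "__main__":
    print("T (K):", hawking_temperature(SOLAR_MASS))
    print("S / k:", entropy_over_k(SOLAR_MASS))
```

For one solar mass the temperature comes out at roughly 6 × 10⁻⁸ K and the entropy at roughly 10⁷⁷ in units of k. The entropy grows with the horizon area rather than the enclosed volume, which is the observation that motivates holography.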

In quantum computing, a quantum algorithm is an algorithm that runs on a realistic model of quantum computation, the most commonly used model being the quantum circuit model of computation. A classical algorithm is a finite sequence of instructions, or a step-by-step procedure for solving a problem, where each step or instruction can be performed on a classical computer. Similarly, a quantum algorithm is a step-by-step procedure, where each of the steps can be performed on a quantum computer. Although all classical algorithms can also be performed on a quantum computer, the term quantum algorithm is generally reserved for algorithms that seem inherently quantum, or use some essential feature of quantum computation such as quantum superposition or quantum entanglement.

An order of magnitude is usually a factor of ten. Thus, four orders of magnitude is a factor of 10,000 or 10⁴.

The study of the physics of computation relates to understanding the fundamental physical limits of computers. This field has led to the investigation of how thermodynamics limits information processing, the understanding of chaos and dynamical systems, and a rapidly growing effort to invent new quantum computers.

The black hole information paradox is a paradox that appears when the predictions of quantum mechanics and general relativity are combined. The theory of general relativity predicts the existence of black holes, which are regions of spacetime from which nothing, not even light, can escape. In the 1970s, Stephen Hawking applied the semiclassical approach of quantum field theory in curved spacetime to such systems and found that an isolated black hole would emit a form of radiation. He also argued that the detailed form of the radiation would be independent of the initial state of the black hole, and depend only on its mass, electric charge and angular momentum.

A Tsirelson bound is an upper limit to quantum mechanical correlations between distant events. Given that quantum mechanics violates Bell inequalities, a natural question is how large the violation can be. The answer is precisely the Tsirelson bound for the particular Bell inequality in question. In general, this bound is lower than the bound that would be obtained if more general theories, only constrained by "no-signalling", were considered, and much research has been dedicated to the question of why this is the case.
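For the CHSH inequality, the best-known case, the classical bound is 2, the Tsirelson bound is 2√2, and the no-signalling bound is 4. The sketch below assumes a maximally entangled pair measured along directions in a common plane, for which the correlation is E(a, b) = cos(a − b). It evaluates the CHSH expression at one standard set of angles and scans a coarse grid. The function names are illustrative, and a grid scan is a numerical check, not a proof.

```python
import itertools
import math

CLASSICAL_BOUND = 2.0
TSIRELSON_BOUND = 2.0 * math.sqrt(2.0)
NO_SIGNALLING_BOUND = 4.0


def correlation(a, b):
    """Assumed correlation for a maximally entangled pair, angles in radians."""
    return math.cos(a - b)


def chsh(a, a_alt, b, b_alt):
    """CHSH combination E(a,b) + E(a,b') + E(a',b) - E(a',b')."""
    return (correlation(a, b) + correlation(a, b_alt)
            + correlation(a_alt, b) - correlation(a_alt, b_alt))


def grid_maximum(steps=16):
    """Largest CHSH value over a uniform grid of measurement angles."""
    angles = [2.0 * math.pi * i / steps for i in range(steps)]
    return max(chsh(*combo) for combo in itertools.product(angles, repeat=4))


if __name__ == "__main__":
    print(chsh(0.0, math.pi / 2, math.pi / 4, -math.pi / 4))
    print(grid_maximum(), TSIRELSON_BOUND)
```

The chosen angles reach 2√2, above the classical limit of 2 but still well below 4.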

Seth Lloyd is a professor of mechanical engineering and physics at the Massachusetts Institute of Technology.

In physics, the Bekenstein bound is an upper limit on the thermodynamic entropy S, or Shannon entropy H, that can be contained within a given finite region of space which has a finite amount of energy—or conversely, the maximal amount of information required to perfectly describe a given physical system down to the quantum level. It implies that the information of a physical system, or the information necessary to perfectly describe that system, must be finite if the region of space and the energy are finite. In computer science this implies that non-finite models such as Turing machines are not realizable as finite devices.
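The bound is usually written S ≤ 2πkRE/(ħc), where R is the radius of a sphere enclosing the system and E is its total energy including rest mass. Dividing by k ln 2 gives the same limit in bits. The text above does not state this formula, so treat the sketch as a worked instance of the standard expression; the function names are hypothetical.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 299_792_458.0        # speed of light, m/s


def bekenstein_bound_bits(radius_m, energy_j):
    """Maximum information in bits for total energy E inside radius R."""
    return 2.0 * math.pi * radius_m * energy_j / (HBAR * C * math.log(2.0))


def bekenstein_bound_bits_for_mass(radius_m, mass_kg):
    """Same bound with the energy taken as the rest energy E = m c^2."""
    return bekenstein_bound_bits(radius_m, mass_kg * C**2)


if __name__ == "__main__":
    # One kilogram confined within a sphere of radius one metre.
    print(bekenstein_bound_bits_for_mass(1.0, 1.0))
```

One kilogram within a one-metre radius gives about 2.6 × 10⁴³ bits: enormous, but finite.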

Bremermann's limit, named after Hans-Joachim Bremermann, is a limit on the maximum rate of computation that can be achieved in a self-contained system in the material universe. It is derived from Einstein's mass-energy equivalency and the Heisenberg uncertainty principle, and is c²/h ≈ 1.3563925 × 10⁵⁰ bits per second per kilogram.
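The quoted figure can be reproduced directly from the defined SI values of the speed of light and the Planck constant. The function name below is illustrative.

```python
C = 299_792_458.0     # speed of light, m/s (exact by SI definition)
H = 6.62607015e-34    # Planck constant, J*s (exact by SI definition)


def bremermann_limit(mass_kg=1.0):
    """Maximum computation rate in bits per second for the given mass."""
    return mass_kg * C**2 / H


if __name__ == "__main__":
    print(bremermann_limit())        # bits per second for one kilogram
    print(bremermann_limit(1e-3))    # bits per second for one gram
```

The limit scales linearly with mass, so one gram gets one thousandth of the per-kilogram rate.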

Landauer's principle is a physical principle pertaining to the lower theoretical limit of energy consumption of computation. It holds that an irreversible change in information stored in a computer, such as merging two computational paths, dissipates a minimum amount of heat to its surroundings.
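Quantitatively, the minimum heat dissipated per erased bit is usually given as kT ln 2, where k is Boltzmann's constant and T is the temperature of the surroundings. The text above does not state this expression. A minimal sketch, with a hypothetical function name:

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact by SI definition)


def landauer_limit_joules(temperature_kelvin, bits=1):
    """Minimum heat in joules dissipated when erasing `bits` bits."""
    return bits * K_B * temperature_kelvin * math.log(2.0)


if __name__ == "__main__":
    print(landauer_limit_joules(300.0))          # one bit near room temperature
    print(landauer_limit_joules(300.0, 8e9))     # one gigabyte
```

Near room temperature (300 K) this is about 2.9 × 10⁻²¹ J per bit.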

Adiabatic quantum computation (AQC) is a form of quantum computing which relies on the adiabatic theorem to perform calculations and is closely related to quantum annealing.

In quantum computing, the threshold theorem states that a quantum computer with a physical error rate below a certain threshold can, through application of quantum error correction schemes, suppress the logical error rate to arbitrarily low levels. This shows that quantum computers can be made fault-tolerant, as an analogue to von Neumann's threshold theorem for classical computation. This result was proven independently by the groups of Dorit Aharonov and Michael Ben-Or; Emanuel Knill, Raymond Laflamme, and Wojciech Zurek; and Alexei Kitaev. These results built on a paper of Peter Shor, which proved a weaker version of the threshold theorem.
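The behaviour on either side of the threshold can be illustrated with the textbook heuristic for concatenated codes, p_L = p_th (p / p_th)^(2^k), where k is the number of concatenation levels. This scaling is a common simplification and not the statement proved in the papers cited above. The threshold value used here is hypothetical and chosen only for illustration.

```python
def logical_error_rate(p_physical, p_threshold, levels):
    """Heuristic logical error rate after `levels` rounds of concatenation.

    Assumes a code that corrects one error per block, so each level
    roughly squares the ratio p / p_threshold.
    """
    return p_threshold * (p_physical / p_threshold) ** (2 ** levels)


P_TH = 1e-2  # hypothetical threshold, not a measured or published value

# Physical error rate below threshold: errors shrink doubly exponentially.
below = [logical_error_rate(1e-3, P_TH, k) for k in range(4)]

# Physical error rate above threshold: adding levels makes things worse.
above = [logical_error_rate(1.5e-2, P_TH, k) for k in range(4)]

if __name__ == "__main__":
    print("below threshold:", below)
    print("above threshold:", above)
```

Below the threshold every extra level suppresses the logical error rate further. Above it, extra encoding only amplifies errors, which is why the physical error rate must first be brought under the threshold.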

Norman H. Margolus is a Canadian-American physicist and computer scientist, known for his work on cellular automata and reversible computing. He is a research affiliate with the Computer Science and Artificial Intelligence Laboratory at the Massachusetts Institute of Technology.

In quantum computing, quantum supremacy or quantum advantage is the goal of demonstrating that a programmable quantum computer can solve a problem that no classical computer can solve in any feasible amount of time, irrespective of the usefulness of the problem. The term was coined by John Preskill in 2012, but the concept dates to Yuri Manin's 1980 and Richard Feynman's 1981 proposals of quantum computing.

Continuous-variable (CV) quantum information is the area of quantum information science that makes use of physical observables, like the strength of an electromagnetic field, whose numerical values belong to continuous intervals. One primary application is quantum computing. In a sense, continuous-variable quantum computation is "analog", while quantum computation using qubits is "digital." In more technical terms, the former makes use of Hilbert spaces that are infinite-dimensional, while the Hilbert spaces for systems comprising collections of qubits are finite-dimensional. One motivation for studying continuous-variable quantum computation is to understand what resources are necessary to make quantum computers more powerful than classical ones.

In quantum mechanics, a quantum speed limit (QSL) is a lower bound on the time a quantum system needs to evolve between two distinguishable (orthogonal) states. QSL theorems are closely related to time-energy uncertainty relations. In 1945, Leonid Mandelstam and Igor Tamm derived a time-energy uncertainty relation that bounds the speed of evolution in terms of the energy dispersion. Over half a century later, Norman Margolus and Lev Levitin showed that the speed of evolution is also bounded by the mean energy, a result known as the Margolus–Levitin theorem. Realistic physical systems in contact with an environment are known as open quantum systems, and their evolution is also subject to QSLs. Remarkably, environmental effects such as non-Markovian dynamics have been shown to speed up quantum processes, which was verified in a cavity QED experiment.
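In their usual form the two bounds read τ ≥ πħ/(2ΔE) (Mandelstam-Tamm, with ΔE the energy spread) and τ ≥ πħ/(2⟨E⟩) (Margolus-Levitin, with ⟨E⟩ the mean energy above the ground state). The sketch below evaluates both for an equal superposition of two energy levels, a case where the bounds coincide. The function names and the 1 eV gap are illustrative assumptions.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
EV = 1.602176634e-19     # one electronvolt in joules


def mandelstam_tamm_time(energy_spread):
    """Minimum orthogonalization time from the energy standard deviation."""
    return math.pi * HBAR / (2.0 * energy_spread)


def margolus_levitin_time(mean_energy):
    """Minimum orthogonalization time from the mean energy above ground."""
    return math.pi * HBAR / (2.0 * mean_energy)


def two_level_bounds(gap):
    """Both bounds for an equal superposition of levels at 0 and `gap`.

    With probability 1/2 on each level, the mean energy and the
    standard deviation are both gap / 2.
    """
    mean_energy = gap / 2.0
    energy_spread = gap / 2.0
    return mandelstam_tamm_time(energy_spread), margolus_levitin_time(mean_energy)


if __name__ == "__main__":
    t_mt, t_ml = two_level_bounds(1.0 * EV)
    print(t_mt, t_ml)   # both on the order of 2e-15 seconds
```

For this state both bounds equal πħ divided by the gap, about 2 femtoseconds for a 1 eV gap. That is also the time the state actually takes to become orthogonal to itself, so the limits are tight here.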

This glossary of quantum computing is a list of definitions of terms and concepts used in quantum computing, its sub-disciplines, and related fields.