Related Research Articles

Language acquisition is the process by which humans acquire the capacity to perceive and comprehend language. In other words, it is how human beings gain the ability to be aware of language, to understand it, and to produce and use words and sentences to communicate.

Sign languages are languages that use the visual-manual modality to convey meaning, instead of spoken words. Sign languages are expressed through manual articulation in combination with non-manual markers. Sign languages are full-fledged natural languages with their own grammar and lexicon. Sign languages are not universal and are usually not mutually intelligible, although there are also similarities among different sign languages.

Baby sign language is the use of manual signing that allows infants and toddlers to communicate emotions, desires, and objects prior to spoken language development. With guidance and encouragement, signing develops from a natural stage in infant development known as gesture. These gestures are taught in conjunction with speech to hearing children, and are not the same as a sign language. Some common benefits that have been found through the use of baby sign programs include an increased parent-child bond and communication, decreased frustration, and improved self-esteem for both the parent and child. Researchers have found that baby sign neither benefits nor harms the language development of infants. Promotional products and ease of information access have increased the attention that baby sign receives, making it important that caregivers become informed before deciding to use baby sign.

A gesture is a form of non-verbal communication or non-vocal communication in which visible bodily actions communicate particular messages, either in place of, or in conjunction with, speech. Gestures include movement of the hands, face, or other parts of the body. Gestures differ from physical non-verbal communication that does not communicate specific messages, such as purely expressive displays, proxemics, or displays of joint attention. Gestures allow individuals to communicate a variety of feelings and thoughts, from contempt and hostility to approval and affection, often together with body language in addition to words when they speak. Gesticulation and speech work independently of each other, but join to provide emphasis and meaning.

Babbling is a stage in child development and a state in language acquisition during which an infant appears to be experimenting with uttering articulate sounds, but does not yet produce any recognizable words. Babbling begins shortly after birth and progresses through several stages as the infant's repertoire of sounds expands and vocalizations become more speech-like. Infants typically begin to produce recognizable words when they are around 12 months of age, though babbling may continue for some time afterward.

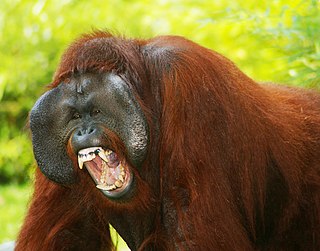

Research into great ape language has involved teaching chimpanzees, bonobos, gorillas and orangutans to communicate with humans and each other using sign language, physical tokens, lexigrams, and imitative human speech. Some primatologists argue that the use of these communication methods indicates primate "language" ability, though this depends on one's definition of language. The cognitive tradeoff hypothesis suggests that human language skills evolved at the expense of the short-term and working memory capabilities observed in other hominids.

A language delay is a language disorder in which a child fails to develop language abilities at the usual age-appropriate period in their developmental timetable. It is most commonly seen in children aged two to seven years and can continue into adulthood. The reported prevalence of language delay ranges from 2.3 to 19 percent.

Home sign is a gestural communication system, often invented spontaneously by a deaf child who lacks accessible linguistic input. Home sign systems often arise in families where a deaf child is raised by hearing parents and is isolated from the Deaf community. Because the deaf child does not receive signed or spoken language input, these children are referred to as linguistically isolated.

Developmental linguistics is the study of the development of linguistic ability in an individual, particularly the acquisition of language in childhood. It involves research into the different stages in language acquisition, language retention, and language loss in both first and second languages, in addition to the area of bilingualism. Before infants can speak, the neural circuits in their brains are constantly being influenced by exposure to language. Developmental linguistics supports the idea that linguistic analysis is not timeless, as claimed in other approaches, but time-sensitive, and is not autonomous – social-communicative as well as bio-neurological aspects have to be taken into account in determining the causes of linguistic developments.

Gestures in language acquisition are a form of non-verbal communication involving movements of the hands, arms, and/or other parts of the body. Children can use gesture to communicate before they have the ability to use spoken words and phrases. In this way gestures can prepare children to learn a spoken language, creating a bridge from pre-verbal communication to speech. The onset of gesture has also been shown to predict and facilitate children's spoken language acquisition. Once children begin to use spoken words their gestures can be used in conjunction with these words to form phrases and eventually to express thoughts and complement vocalized ideas.

Laura-Ann Petitto is a cognitive neuroscientist and developmental cognitive neuroscientist known for her research on the language capacity of chimpanzees and the biological bases of language in humans, especially early language acquisition, early reading, bilingualism, bilingual reading, and the bilingual brain. Her significant scientific discoveries include the existence of linguistic babbling on the hands of deaf babies and the equivalent neural processing of signed and spoken languages in the human brain. She is recognized for her contributions to the creation of a new scientific discipline, educational neuroscience. Petitto chaired a new undergraduate department at Dartmouth College, called "Educational Neuroscience and Human Development" (2002-2007), and was a Co-Principal Investigator in the National Science Foundation and Dartmouth's Science of Learning Center, called the "Center for Cognitive and Educational Neuroscience" (2004-2007). At Gallaudet University (2011–present), Petitto led a team in the creation of the first PhD program in Educational Neuroscience in the United States. Petitto is the Co-Principal Investigator as well as Science Director of the National Science Foundation and Gallaudet University's Science of Learning Center, called the "Visual Language and Visual Learning Center (VL2)". Petitto is also founder and Scientific Director of the Brain and Language Laboratory for Neuroimaging ("BL2") at Gallaudet University.

John D. Bonvillian (1948-2018) was a psychologist and associate professor emeritus in the Department of Psychology and the Interdepartmental Program in Linguistics at the University of Virginia in Charlottesville, Virginia. He was the principal developer of Simplified Signs, a manual sign communication system designed to be easy to form, easy to understand, and easy to remember. He is also known for his research contributions to the study of sign language, child development, psycholinguistics, and language acquisition.

John L. Locke is an American biolinguist who has contributed to the understanding of language development and the evolution of language. His work has focused on how language emerges in the social context of interaction between infants, children and caregivers, how speech and language disorders can shed light on the normal developmental process and vice versa, how brain and cognitive science can help illuminate language capability and learning, and how the special life history of humans offers perspectives on why humans are so much more intensely social and vocally communicative than their primate relatives. In recent years, he has authored widely accessible volumes for the general public on the nature of human communication and its origins.

Prelingual deafness refers to deafness that occurs before learning speech or language. Speech and language typically begin to develop very early with infants saying their first words by age one. Therefore, prelingual deafness is considered to occur before the age of one, where a baby is either born deaf or loses hearing before the age of one. This hearing loss may occur for a variety of reasons and impacts cognitive, social, and language development.

In sign languages, the term classifier construction refers to a morphological system that can express events and states. They use handshape classifiers to represent movement, location, and shape. Classifiers differ from signs in their morphology, namely that signs consist of a single morpheme. Signs are composed of three meaningless phonological features: handshape, location, and movement. Classifiers, on the other hand, consist of many morphemes. Specifically, the handshape, location, and movement are all meaningful on their own. The handshape represents an entity and the hand's movement iconically represents the movement of that entity. The relative location of multiple entities can be represented iconically in two-handed constructions.

Language acquisition is a natural process in which infants and children develop proficiency in the first language or languages that they are exposed to. The process of language acquisition varies among deaf children. Deaf children born to deaf parents are typically exposed to a sign language at birth and their language acquisition follows a typical developmental timeline. However, at least 90% of deaf children are born to hearing parents who use a spoken language at home. Hearing loss prevents many deaf children from hearing spoken language to the degree necessary for language acquisition. For many deaf children, language acquisition is delayed until the time that they are exposed to a sign language or until they begin using amplification devices such as hearing aids or cochlear implants. Deaf children who experience delayed language acquisition, sometimes called language deprivation, are at risk for lower language and cognitive outcomes. However, profoundly deaf children who receive cochlear implants and auditory habilitation early in life often achieve expressive and receptive language skills within the norms of their hearing peers; age at implantation is strongly and positively correlated with speech recognition ability. Early access to language, whether through signed language or technology, has been shown to prepare children who are deaf to achieve fluency in literacy skills.

Language deprivation in deaf and hard-of-hearing children is a delay in language development that occurs when sufficient exposure to language, spoken or signed, is not provided in the first few years of a deaf or hard-of-hearing child's life, often called the critical or sensitive period. Early intervention, parental involvement, and other resources all work to prevent language deprivation. Children who experience limited access to language, spoken or signed, may not develop the skills necessary to assimilate successfully into the academic learning environment. There are various educational approaches for teaching deaf and hard-of-hearing individuals. Decisions about language instruction depend on a number of factors, including the extent of hearing loss, the availability of programs, and family dynamics.

Language exposure for children is the act of making language readily available and accessible during the critical period for language acquisition. Deaf and hard of hearing children, when compared to their hearing peers, tend to face more hardships in receiving accessible language during their formative years. Therefore, deaf and hard of hearing children are more likely to experience language deprivation, which causes cognitive delays. Early exposure to language enables the brain to fully develop cognitive and linguistic skills as well as language fluency and comprehension later in life. Hearing parents of deaf and hard of hearing children face unique barriers when it comes to providing language exposure for their children. Yet a considerable body of research, advice, and services is available to parents of deaf and hard of hearing children who may not know where to start in providing language exposure.

Pointing is a gesture specifying a direction from a person's body, usually indicating a location, person, event, thing or idea. It typically is formed by extending the arm, hand, and index finger, although it may be functionally similar to other hand gestures. Types of pointing may be subdivided according to the intention of the person, as well as by the linguistic function it serves.

Jana Marie Iverson is a developmental psychologist known for her research on the development of gestures and motor skills in relation to communicative development. She has worked with various populations including children at high risk of autism spectrum disorder (ASD), blind individuals, and preterm infants. She is currently a professor of psychology at Boston University.

References

- Marschark, Marc; Spencer, Patricia Elizabeth (11 January 2011). The Oxford Handbook of Deaf Studies, Language, and Education. Oxford University Press. p. 230. ISBN 978-0-19-975098-6. Retrieved 13 April 2012.

- Petitto, L.; Marentette, P. (1991). "Babbling in the manual mode: evidence for the ontogeny of language" (PDF). Science. 251 (5000): 1493–1496. doi:10.1126/science.2006424. ISSN 0036-8075. PMID 2006424. S2CID 9812277. Archived from the original (PDF) on July 25, 2010. Retrieved April 13, 2012.

- Marschark, Marc and Patricia Elizabeth Spencer. Oxford Handbook of Deaf Studies, Language, and Education. Oxford University Press, USA, 2003. 219-231.

- Cormier, Kearsy; Mauk, Claude; Repp, Ann (1998). "Manual Babbling in Deaf and Hearing Infants: A Longitudinal Study" (PDF). Proceedings of the Twenty-Ninth Annual Child Language Research Forum: 55–61. Retrieved 20 November 2015.

- Chamberlain, Charlene, Jill P. Morford, and Rachel I. Mayberry. Language Acquisition By Eye. Psychology Press, 1999. 14; 26; 41-48.

- Cutler, Anne. Twenty-First Century Psycholinguistics: Four Cornerstones. Routledge, 2017. 294-296.

- Seal, Brenda C.; DePaolis, Rory A. (2014-09-05). "Manual Activity and Onset of First Words in Babies Exposed and Not Exposed to Baby Signing". Sign Language Studies. 14 (4): 444–465. doi:10.1353/sls.2014.0015. ISSN 1533-6263. S2CID 144534523.

- Swanwick, Ruth. Issues in Deaf Education. Routledge, 2012. 59-61.

- Marentette, Paula F. "Babbling in Sign Language: Implications for Maturational Processes of Language in the Developing Brain." McGill University, 1989.

- Cheek, Adrianne; Cormier, Kearsy; Rathmann, Christian; Repp, Ann; Meier, Richard (April 1998). "Motoric Constraints Link Manual Babbling and Early Signs". Infant Behavior and Development. 21: 340. doi:10.1016/s0163-6383(98)91553-3.

- Cormier, Kearsy; Mauk, Claude; Repp, Ann (1998). "Manual Babbling in Deaf and Hearing Infants: A Longitudinal Study" (PDF). Proceedings of the Twenty-Ninth Annual Child Language Research Forum: 55–61.

- Petitto, Laura Ann, Siobhan Holowka, Lauren E Sergio, Bronna Levy, and David J Ostry. "Baby Hands That Move to the Rhythm of Language: Hearing Babies Acquiring Sign Languages Babble Silently on the Hands." Cognition 93, no. 1 (August 2004): 43–73. doi:10.1016/j.cognition.2003.10.007.