Scientific career

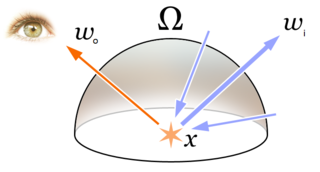

At Cornell University's Program of Computer Graphics, Cohen served as an assistant professor of architecture from 1985 to 1989. His first major research contributions were in photorealistic rendering, in particular the study of radiosity: the use of finite elements to solve the rendering equation for environments with diffusely reflecting surfaces. His most significant results included the hemicube (1985) [6], for computing form factors in the presence of occlusion; an experimental evaluation framework (1986), one of the first studies to quantitatively compare real and synthetic imagery; the extension of radiosity to non-diffuse environments (1986); the integration of ray tracing with radiosity (1987); and progressive refinement (1988), which made interactive rendering possible.

After completing his PhD, he joined the Computer Science faculty at Princeton University, where he continued his work on radiosity, including wavelet radiosity (1993), a more general framework for hierarchical approaches, and “radioptimization” (1993), an inverse method to solve for lighting parameters based on user-specified objectives. This work culminated in a 1993 textbook with John Wallace, Radiosity and Realistic Image Synthesis.

In a very different research area, Cohen made significant contributions to motion simulation and editing, most notably dynamic simulation with kinematic constraints (1987), which for the first time allowed animators to combine kinematic and dynamic specifications, and interactive spacetime control for animation (1992), which combined physically based and user-defined constraints for controlling motion.

In 1994, Cohen moved to Microsoft Research (MSR), where he stayed for 21 years. There he continued his work on motion synthesis: motion transitions using spacetime constraints (1996), which allowed seamless and plausible transitions between motion segments for systems with many degrees of freedom, such as the human body; motion interpolation (1998), a system for real-time interpolation of parameterized motions; and artist-directed inverse kinematics (2001), which allowed a user to position an articulated character at high frame rates for real-time applications such as games.

Also at Microsoft Research, in his groundbreaking and most-cited work, Cohen and colleagues introduced the Lumigraph (1996) [7], a method for capturing and representing the complete appearance, from all points of view, of either a synthetic or real-world object or scene. Building on this work, Cohen published important follow-on papers on view-based rendering (1997), which used geometric information to create views of a scene from a sparse set of images, and unstructured Lumigraph rendering (2001), which generalized light field and view-dependent texture mapping methods in a single framework.

In subsequent work, Cohen significantly advanced the state of the art in matting and compositing, with papers on image and video segmentation based on anisotropic kernel mean-shift (2004); video cutout (2004), which preserved smoothness across space and time; optimized color sampling (2005), which improved on previous approaches to image matting by analyzing the confidence of foreground and background color samples; and soft scissors (2007), the first interactive tool for high-quality image matting and compositing.

Cohen later turned his attention to computational photography, publishing numerous highly creative, landmark papers: interactive digital photomontage (2004) [8], for combining parts of photographs in various novel ways; flash/no-flash image pairs (2004), for combining images taken with and without flash to synthesize results of higher quality than either image alone; panoramic video textures (2005), for converting a panning video over a dynamic scene into a high-resolution, continuously playing video; gaze-based photo cropping (2006); multi-viewpoint panoramas (2006), for photographing and rendering very long scenes; the Moment Camera (2007), outlining general principles for capturing subjective moments; joint bilateral upsampling (2007), for fast image enhancement using a down-sampled image; gigapixel images (2007), a means to acquire extremely high-resolution images with an ordinary camera on a specialized mount; deep photo (2008), a system for enhancing casual outdoor photos by combining them with existing digital terrain data; image deblurring (2010), for restoring images blurred by camera shake; GradientShop (2010), which unified previous gradient-domain solutions under a single optimization framework; and ShadowDraw (2011), a system for guiding the freeform drawing of objects.

In 2015, Cohen moved to Facebook, where he directed the Computational Photography group. His group's best-known product is 3D Photos, which first appeared in the Facebook feed in 2018. [9] In 2023, he moved to a new Generative AI group, which has released generative AI image creation at imagine.meta.com.

Cohen is a longtime volunteer in the ACM SIGGRAPH community. He served on the SIGGRAPH Papers Committee eleven times and as SIGGRAPH Papers Chair in 1998. He also served as SIGGRAPH Awards Chair from 2013 until 2018 and was a keynote speaker at SIGGRAPH Asia 2018.

Cohen also serves as an Affiliate Professor of Computer Science and Engineering at the University of Washington [10] and at Dartmouth College. [11]