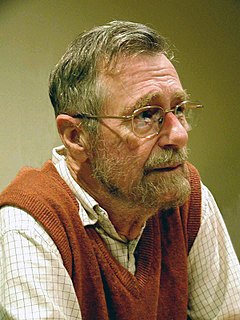

Michael Lee Scott (born 1959) is a professor of computer science at the University of Rochester in Rochester, New York.

Scott received a PhD from the University of Wisconsin–Madison in 1985. He joined the faculty at Rochester the same year as an assistant professor of computer science. Scott was chair of the computer science department from 1996 until 1999, when he was succeeded by Mitsunori Ogihara. He served again as interim chair from July to December 2007 and from July to December 2017.

In 2001, Scott received the University of Rochester’s Robert and Pamela Goergen Award for Distinguished Achievement and Artistry in Undergraduate Teaching.

Scott published the textbook Programming Language Pragmatics in 2000. A second edition followed in 2005, a third in 2009, and a fourth in 2015. The book has been translated into Greek and simplified Chinese.

In 2006, Scott and John Mellor-Crummey were awarded the Edsger W. Dijkstra Prize in Distributed Computing for a paper they wrote in 1991, "Algorithms for Scalable Synchronization on Shared-Memory Multiprocessors."

In 2005, Scott, William Scherer III, and Doug Lea developed a set of algorithms to handle lock-free concurrent exchanges and synchronous queues. These algorithms are included in the Java 6 concurrency library.

In 2006 he was inducted as a Fellow of the Association for Computing Machinery.

Scott is a Unitarian Universalist. He served as secretary of the New York State Convention of Universalists from 1991 to 1999 and as president from 2001 to 2005. In June 2004, he spoke at the Unitarian Universalist Association General Assembly in favor of electronic voting machines, provided that they retained a paper backup.

Distributed computing is a field of computer science that studies distributed systems. A distributed system is a system whose components are located on different networked computers, which communicate and coordinate their actions by passing messages to one another. The components interact with one another in order to achieve a common goal. Three significant characteristics of distributed systems are concurrency of components, lack of a global clock, and independent failure of components. Examples of distributed systems range from SOA-based systems to massively multiplayer online games to peer-to-peer applications.

Edsger Wybe Dijkstra was a Dutch computer scientist, programmer, software engineer, systems scientist, science essayist, and pioneer in computing science. A theoretical physicist by training, he worked as a programmer at the Mathematisch Centrum (Amsterdam) from 1952 to 1962. A university professor for much of his life, Dijkstra held the Schlumberger Centennial Chair in Computer Sciences at the University of Texas at Austin from 1984 until his retirement in 1999. He was a professor of mathematics at the Eindhoven University of Technology (1962–1984) and a research fellow at the Burroughs Corporation (1973–1984). In 1972, he became the first Turing Award winner who was neither American nor British, and the first from continental Europe.

Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined.

Parallel computing is a type of computation where many calculations or the execution of processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In computer science, an algorithm is called non-blocking if failure or suspension of any thread cannot cause failure or suspension of another thread; for some operations, these algorithms provide a useful alternative to traditional blocking implementations. A non-blocking algorithm is lock-free if there is guaranteed system-wide progress, and wait-free if there is also guaranteed per-thread progress. "Non-blocking" was used as a synonym for "lock-free" in the literature until the introduction of obstruction-freedom in 2003.
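The retry pattern behind many lock-free structures can be sketched as follows. This is a minimal illustration in the style of a Treiber stack, not a production implementation. Python exposes no hardware compare-and-swap, so the `AtomicRef` class is a hypothetical stand-in that emulates one atomic instruction with a private lock; the names `AtomicRef`, `LockFreeStack`, and `demo` are assumptions made for this example.

```python
import threading


class AtomicRef:
    """Hypothetical stand-in for a hardware compare-and-swap (CAS) cell.

    Assumption: the private lock only emulates the atomicity of a single
    CAS instruction. Real lock-free code relies on the hardware primitive.
    """

    def __init__(self, value=None):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value

    def compare_and_swap(self, expected, new):
        # Atomically install `new` only if the cell still holds `expected`.
        with self._guard:
            if self._value is expected:
                self._value = new
                return True
            return False


class Node:
    def __init__(self, item, next_node):
        self.item = item
        self.next = next_node


class LockFreeStack:
    """Stack whose operations retry until their CAS succeeds."""

    def __init__(self):
        self._top = AtomicRef(None)

    def push(self, item):
        while True:
            old_top = self._top.load()
            # A failed CAS means another thread changed the top, i.e. the
            # system as a whole made progress, so this thread simply retries.
            if self._top.compare_and_swap(old_top, Node(item, old_top)):
                return

    def pop(self):
        while True:
            old_top = self._top.load()
            if old_top is None:
                return None  # empty stack
            if self._top.compare_and_swap(old_top, old_top.next):
                return old_top.item


def demo(threads=4, per_thread=1000):
    """Push distinct integers from several threads, then drain the stack."""
    stack = LockFreeStack()

    def worker(base):
        for i in range(per_thread):
            stack.push(base + i)

    pool = [threading.Thread(target=worker, args=(t * per_thread,))
            for t in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()

    popped = []
    while (item := stack.pop()) is not None:
        popped.append(item)
    return sorted(popped)
```

An individual thread may retry many times, so the sketch illustrates system-wide progress in the lock-free sense rather than the per-thread guarantee that wait-freedom requires.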

Per Brinch Hansen was a Danish-American computer scientist known for his work in operating systems, concurrent programming and parallel and distributed computing.

In computer science, concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the final outcome. This allows for parallel execution of the concurrent units, which can significantly improve overall speed of the execution in multi-processor and multi-core systems. In more technical terms, concurrency refers to the decomposability of a program, algorithm, or problem into order-independent or partially-ordered components or units of computation.

In parallel computing, an embarrassingly parallel workload or problem is one where little or no effort is needed to separate the problem into a number of parallel tasks. This is often the case where there is little or no dependency or need for communication between those parallel tasks, or for results between them.
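As a small illustration, the sketch below runs several independent computations through a worker pool from Python's standard `concurrent.futures` module. The function names `count_primes` and `run_independent_tasks` are assumptions chosen for this example. No task reads another task's data, so no locks or messages are needed.

```python
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit):
    """Count the primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_independent_tasks(limits, workers=4):
    # Each call depends only on its own argument, so the tasks can run
    # in any order and on any worker without coordination.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    print(run_independent_tasks([10, 100, 1000]))  # [4, 25, 168]
```

In CPython, threads do not speed up CPU-bound work such as this because of the global interpreter lock; `ProcessPoolExecutor` offers the same `map` interface and is the usual substitute when real parallel speedup is the goal.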

Automatic parallelization (also auto parallelization or autoparallelization) refers to converting sequential code into multi-threaded and/or vectorized code in order to use multiple processors simultaneously in a shared-memory multiprocessor (SMP) machine. Fully automatic parallelization of sequential programs is a challenge because it requires complex program analysis and because the best approach may depend upon parameter values that are not known at compilation time.

Concurrent computing is a form of computing in which several computations are executed concurrently—during overlapping time periods—instead of sequentially, with one completing before the next starts.

In computer science, load-linked/store-conditional (LL/SC), sometimes known as load-reserved/store-conditional (LR/SC), is a pair of instructions used in multithreading to achieve synchronization. Load-linked returns the current value of a memory location, while a subsequent store-conditional to the same memory location will store a new value only if no updates have occurred to that location since the load-linked. Together, the two instructions implement a lock-free atomic read-modify-write operation.
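The semantics can be modeled in software for illustration. In the sketch below, `LLSCCell` is a hypothetical simulation: real LL/SC is a processor feature, and the lock and version counter here only imitate its behavior. The `fetch_and_add` function shows the usual pattern of retrying until the store-conditional succeeds.

```python
import threading


class LLSCCell:
    """Simulated LL/SC memory word (an assumption for illustration only)."""

    def __init__(self, value=0):
        self._value = value
        self._version = 0                 # bumped on every successful store
        self._guard = threading.Lock()    # emulates hardware atomicity
        self._links = threading.local()   # per-thread reservation

    def load_linked(self):
        with self._guard:
            # Remember which version this thread observed.
            self._links.version = self._version
            return self._value

    def store_conditional(self, new_value):
        with self._guard:
            # Fail if the location was updated since this thread's
            # load_linked, or if the thread never performed one.
            if getattr(self._links, "version", None) != self._version:
                return False
            self._value = new_value
            self._version += 1
            return True


def fetch_and_add(cell, delta):
    """Atomic read-modify-write built from the LL/SC pair."""
    while True:
        old = cell.load_linked()
        if cell.store_conditional(old + delta):
            return old
        # Another thread wrote in between, so reload and try again.
```

Unlike a compare-and-swap on the value alone, the store-conditional in this model fails after any intervening write, even one that restores the original value.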

In computer science, a readers–writer lock (RW lock) is a synchronization primitive that solves one of the readers–writers problems. An RW lock allows concurrent access for read-only operations, while write operations require exclusive access. This means that multiple threads can read the data in parallel, but an exclusive lock is needed for writing or modifying data. While a writer is writing the data, all other writers and readers are blocked until the writer has finished. A common use is to control access to a data structure in memory that cannot be updated atomically and is invalid until the update is complete.
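A minimal sketch of such a lock, built on Python's `threading.Condition`, is shown below. The class name `ReadWriteLock` and its method names are assumptions for this example, since the Python standard library does not provide a readers–writer lock. This variant prefers readers, so a steady stream of readers can starve a waiting writer.

```python
import threading


class ReadWriteLock:
    """Reader-preferring readers-writer lock (illustrative sketch)."""

    def __init__(self):
        self._cond = threading.Condition()
        self._readers = 0       # number of threads currently reading
        self._writer = False    # True while a writer holds the lock

    def acquire_read(self):
        with self._cond:
            # Readers wait only while a writer is active.
            while self._writer:
                self._cond.wait()
            self._readers += 1

    def release_read(self):
        with self._cond:
            self._readers -= 1
            if self._readers == 0:
                self._cond.notify_all()  # a waiting writer may now proceed

    def acquire_write(self):
        with self._cond:
            # Writers need exclusive access: no readers and no other writer.
            while self._writer or self._readers > 0:
                self._cond.wait()
            self._writer = True

    def release_write(self):
        with self._cond:
            self._writer = False
            self._cond.notify_all()  # wake both readers and writers
```

A caller would wrap each read of the shared structure in `acquire_read()` and `release_read()`, and each update in `acquire_write()` and `release_write()`, ideally inside `try`/`finally` so the lock is released on error.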

In computer science, synchronization refers to one of two distinct but related concepts: synchronization of processes, and synchronization of data. Process synchronization refers to the idea that multiple processes are to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of actions. Data synchronization refers to the idea of keeping multiple copies of a dataset in coherence with one another, or of maintaining data integrity. Process synchronization primitives are commonly used to implement data synchronization.

The bulk synchronous parallel (BSP) abstract computer is a bridging model for designing parallel algorithms. It serves a purpose similar to the parallel random access machine (PRAM) model. BSP differs from PRAM by not taking communication and synchronization for granted. An important part of analyzing a BSP algorithm rests on quantifying the synchronization and communication needed.
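The superstep structure can be illustrated with threads and a barrier. The sketch below is an assumption-laden toy, not a BSP library: the function name `bsp_global_sum` and the shared `inboxes` table standing in for message passing are invented for this example. Each worker computes locally, sends its partial sum to every worker, and waits at the barrier before reading what it received.

```python
import threading


def bsp_global_sum(chunks):
    """Give every worker the global sum of all chunks in two supersteps."""
    p = len(chunks)
    barrier = threading.Barrier(p)
    # inboxes[dest][src] holds the message sent from worker src to dest.
    inboxes = [[None] * p for _ in range(p)]
    results = [None] * p

    def worker(pid):
        # Superstep 1: local computation followed by communication.
        partial = sum(chunks[pid])
        for dest in range(p):
            inboxes[dest][pid] = partial
        # Barrier synchronization ends the superstep; messages sent
        # before it are guaranteed to be visible after it.
        barrier.wait()
        # Superstep 2: combine the received messages locally.
        results[pid] = sum(inboxes[pid])

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(p)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(bsp_global_sum([[1, 2], [3, 4], [5]]))  # [15, 15, 15]
```

The one barrier and the p messages sent by each worker are the kinds of quantities that a BSP analysis counts alongside the local computation time.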

Maurice Peter Herlihy is a computer scientist active in the field of multiprocessor synchronization. Herlihy has contributed to areas including theoretical foundations of wait-free synchronization, linearizable data structures, applications of combinatorial topology to distributed computing, as well as hardware and software transactional memory. He is the An Wang Professor of Computer Science at Brown University, where he has been a member of the faculty since 1994.

Clyde P. Kruskal is an American computer scientist, working on parallel computing architectures, models, and algorithms. As part of the ultracomputer project, he was one of the inventors of the read–modify–write concept in parallel and distributed computing. He is an associate professor of computer science at the University of Maryland, College Park.

Nir Shavit is an Israeli computer scientist. He is a Professor in the Computer Science Department at Tel Aviv University and a Professor of Electrical Engineering and Computer Science at the Massachusetts Institute of Technology.

In computer science, a concurrent data structure is a particular way of storing and organizing data for access by multiple computing threads on a computer.

Stanford DASH was a cache coherent multiprocessor developed in the late 1980s by a group led by Anoop Gupta, John L. Hennessy, Mark Horowitz, and Monica S. Lam at Stanford University. It was based on adding a pair of directory boards designed at Stanford to up to 16 SGI IRIS 4D Power Series machines and then cabling the systems in a mesh topology using a Stanford-modified version of the Torus Routing Chip. The boards designed at Stanford implemented a directory-based cache coherence protocol allowing Stanford DASH to support distributed shared memory for up to 64 processors. Stanford DASH was also notable for both supporting and helping to formalize weak memory consistency models, including release consistency. Because Stanford DASH was the first operational machine to include scalable cache coherence, it influenced subsequent computer science research as well as the commercially available SGI Origin 2000. Stanford DASH is included in the 25th anniversary retrospective of selected papers from the International Symposium on Computer Architecture and several computer science books, has been simulated by the University of Edinburgh, and is used as a case study in contemporary computer science classes.

A concurrent hash table is a hash table implementation that allows multiple threads to access it concurrently.
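One common way to build such a table is lock striping, where the keys are partitioned across several independently locked buckets. The sketch below is one possible design under that assumption, not the definitive approach; the class name `StripedHashMap` and its methods are invented for this example.

```python
import threading


class StripedHashMap:
    """Hash map guarded by one lock per stripe (illustrative sketch)."""

    def __init__(self, stripes=16):
        self._locks = [threading.Lock() for _ in range(stripes)]
        self._buckets = [dict() for _ in range(stripes)]

    def _index(self, key):
        # The hash function selects the stripe, and thus the lock, for a key.
        return hash(key) % len(self._buckets)

    def put(self, key, value):
        i = self._index(key)
        with self._locks[i]:
            self._buckets[i][key] = value

    def get(self, key, default=None):
        i = self._index(key)
        with self._locks[i]:
            return self._buckets[i].get(key, default)

    def update(self, key, fn, default=None):
        """Atomically replace the value stored for `key` with fn(old)."""
        i = self._index(key)
        with self._locks[i]:
            new_value = fn(self._buckets[i].get(key, default))
            self._buckets[i][key] = new_value
            return new_value
```

Threads that touch keys in different stripes never contend for the same lock, while `update` keeps a read-modify-write on a single key atomic. The number of stripes is fixed here; resizing would require coordinating all of the locks.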