Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change, and information systems including the transmission of information in telecommunication.

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. It does not assume or postulate any natural laws, but explains the macroscopic behavior of nature from the behavior of such ensembles.

Solvation describes the interaction of a solvent with dissolved molecules. Both ionized and uncharged molecules interact strongly with a solvent, and the strength and nature of this interaction influence many properties of the solute, including solubility, reactivity, and color, as well as influencing the properties of the solvent such as its viscosity and density. If the attractive forces between the solvent and solute particles are greater than the attractive forces holding the solute particles together, the solvent particles pull the solute particles apart and surround them. The surrounded solute particles then move away from the solid solute and out into the solution. Ions are surrounded by a concentric shell of solvent. Solvation is the process of reorganizing solvent and solute molecules into solvation complexes and involves bond formation, hydrogen bonding, and van der Waals forces. Solvation of a solute by water is called hydration.

A timeline of events in the history of thermodynamics.

An ideal gas is a theoretical gas composed of many randomly moving point particles that are not subject to interparticle interactions. The ideal gas concept is useful because it obeys the ideal gas law, a simplified equation of state, and is amenable to analysis under statistical mechanics. The requirement of zero interaction can often be relaxed if, for example, the interaction is perfectly elastic or regarded as point-like collisions.
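The ideal gas law mentioned above, $PV = nRT$, can be evaluated directly. The following is a minimal sketch; the function name `ideal_gas_pressure` and the sample values are illustrative choices, not taken from the text.

```python
# Molar gas constant in J/(mol*K); exact in the SI since the 2019 redefinition.
R = 8.314462618


def ideal_gas_pressure(n_mol: float, temperature_k: float, volume_m3: float) -> float:
    """Return the pressure in pascals from the ideal gas law P = nRT / V."""
    if volume_m3 <= 0:
        raise ValueError("volume must be positive")
    if temperature_k < 0:
        raise ValueError("absolute temperature cannot be negative")
    return n_mol * R * temperature_k / volume_m3


# Illustrative values: one mole at 273.15 K in 22.414 litres,
# which should come out close to one standard atmosphere (101325 Pa).
pressure = ideal_gas_pressure(1.0, 273.15, 22.414e-3)
print(f"{pressure:.0f} Pa")
```

Because the particles are assumed not to interact, the pressure depends only on the amount of gas, the temperature, and the volume, not on the chemical identity of the gas.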

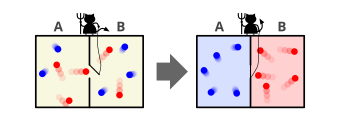

Maxwell's demon is a thought experiment that would hypothetically violate the second law of thermodynamics. It was proposed by the physicist James Clerk Maxwell in 1867. In his first letter, Maxwell referred to the entity as a "finite being" or a "being who can play a game of skill with the molecules". Lord Kelvin would later call it a "demon".

T-symmetry or time reversal symmetry is the theoretical symmetry of physical laws under the transformation of time reversal, $T: t \mapsto -t$.

In classical statistical mechanics, the H-theorem, introduced by Ludwig Boltzmann in 1872, describes the tendency of the quantity H to decrease in a nearly ideal gas of molecules. As this quantity H was meant to represent the entropy of thermodynamics, the H-theorem was an early demonstration of the power of statistical mechanics, as it claimed to derive the second law of thermodynamics, a statement about fundamentally irreversible processes, from reversible microscopic mechanics. It is thought to prove the second law of thermodynamics, albeit under the assumption of low-entropy initial conditions.

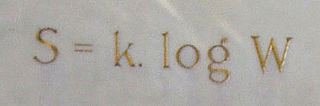

Ludwig Eduard Boltzmann was an Austrian physicist and philosopher. His greatest achievements were the development of statistical mechanics, and the statistical explanation of the second law of thermodynamics. In 1877 he provided the current definition of entropy, $S = k_\mathrm{B} \ln \Omega$, where $\Omega$ is the number of microstates whose energy equals the system's energy, interpreted as a measure of statistical disorder of a system. Max Planck named the constant $k_\mathrm{B}$ the Boltzmann constant.
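Boltzmann's definition, $S = k_\mathrm{B} \ln \Omega$, is simple to evaluate once the microstates have been counted. The sketch below uses a hypothetical toy system of 100 independent two-state particles whose two states are assumed to have equal energy, so that all $2^{100}$ configurations count as accessible microstates.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(num_microstates: int) -> float:
    """Return S = k_B * ln(Omega) in J/K for a given microstate count Omega."""
    if num_microstates < 1:
        raise ValueError("the number of microstates must be at least 1")
    return K_B * math.log(num_microstates)


# Hypothetical toy system: 100 two-state particles with degenerate states,
# so Omega = 2**100. math.log accepts arbitrarily large Python integers.
omega = 2 ** 100
entropy = boltzmann_entropy(omega)
print(f"S = {entropy:.3e} J/K")
```

A single accessible microstate gives zero entropy, and because of the logarithm, doubling the number of particles in this toy system doubles the entropy.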

In physics, Loschmidt's paradox, also known as the reversibility paradox, irreversibility paradox, or Umkehreinwand, is the objection that it should not be possible to deduce an irreversible process from time-symmetric dynamics. This puts the time reversal symmetry of (almost) all known low-level fundamental physical processes at odds with any attempt to infer from them the second law of thermodynamics which describes the behaviour of macroscopic systems. Both of these are well-accepted principles in physics, with sound observational and theoretical support, yet they seem to be in conflict, hence the paradox.

In physics, an entropic force acting in a system is an emergent phenomenon resulting from the entire system's statistical tendency to increase its entropy, rather than from a particular underlying force on the atomic scale.

Landauer's principle is a physical principle pertaining to the lower theoretical limit of energy consumption of computation. It holds that an irreversible change in information stored in a computer, such as merging two computational paths, dissipates a minimum amount of heat to its surroundings.
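The bound is usually stated as $k_\mathrm{B} T \ln 2$ of heat per erased bit. That formula is not given in the text above and is taken here as the standard statement of the principle. A minimal sketch, with a hypothetical function name and an illustrative temperature of 300 K:

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def landauer_limit(temperature_k: float, bits: float = 1.0) -> float:
    """Return the minimum heat in joules dissipated when erasing `bits` bits.

    Uses the standard form of the bound, k_B * T * ln(2) per bit.
    """
    if temperature_k < 0:
        raise ValueError("absolute temperature cannot be negative")
    return bits * K_B * temperature_k * math.log(2)


# Illustrative case: erasing one bit at roughly room temperature (300 K).
limit_room = landauer_limit(300.0)
print(f"{limit_room:.3e} J per bit")
```

The result is on the order of $10^{-21}$ joules per bit, and it scales linearly with both the temperature and the number of bits erased.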

The mathematical expressions for thermodynamic entropy in the statistical thermodynamics formulation established by Ludwig Boltzmann and J. Willard Gibbs in the 1870s are similar to the information entropy by Claude Shannon and Ralph Hartley, developed in the 1940s.
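The similarity can be made concrete by evaluating both expressions on the same probability distribution. The sketch below assumes the usual forms, $H = -\sum_i p_i \log_2 p_i$ for the Shannon entropy in bits and $S = -k_\mathrm{B} \sum_i p_i \ln p_i$ for the Gibbs entropy; the distribution is an arbitrary illustrative choice.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def shannon_entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2(p)) in bits; zero-probability terms are skipped."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def gibbs_entropy(probs):
    """Gibbs entropy S = -k_B * sum(p * ln(p)) in J/K; zero-probability terms are skipped."""
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


# Arbitrary illustrative distribution over four states.
probs = [0.5, 0.25, 0.125, 0.125]
h_bits = shannon_entropy_bits(probs)
s_gibbs = gibbs_entropy(probs)

print(f"H = {h_bits} bits")
print(f"S = {s_gibbs:.3e} J/K")
```

For the same distribution the two quantities differ only by a constant factor, $S = k_\mathrm{B} \ln 2 \cdot H$, which reflects the choice of logarithm base and of physical units.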

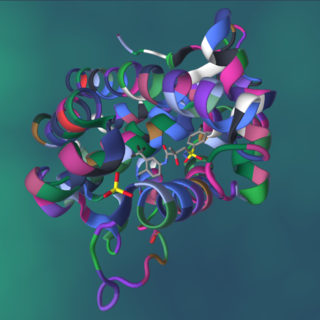

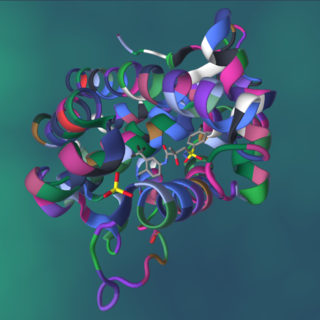

In chemical thermodynamics, conformational entropy is the entropy associated with the number of conformations of a molecule. The concept is most commonly applied to biological macromolecules such as proteins and RNA, but can also be used for polysaccharides and other molecules. To calculate the conformational entropy, the possible conformations of the molecule may first be discretized into a finite number of states, usually characterized by unique combinations of certain structural parameters, each of which has been assigned an energy. In proteins, backbone dihedral angles and side chain rotamers are commonly used as parameters, and in RNA the base pairing pattern may be used. These characteristics are used to define the degrees of freedom. The conformational entropy associated with a particular structure or state, such as an alpha-helix or a folded or unfolded protein structure, then depends on the probability of occupancy of that structure.
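The calculation outlined above can be sketched for a small set of discretized states. The sketch assumes that occupancy probabilities follow Boltzmann weights of the assigned energies and that the entropy takes the Gibbs form $S = -R \sum_i p_i \ln p_i$ per mole; neither assumption is stated in the text, and the three rotamer energies are hypothetical.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol*K)


def boltzmann_populations(energies_j_per_mol, temperature_k):
    """Occupancy probabilities p_i proportional to exp(-E_i / (R * T))."""
    beta = 1.0 / (R * temperature_k)
    e_min = min(energies_j_per_mol)  # shift energies for numerical stability
    weights = [math.exp(-(e - e_min) * beta) for e in energies_j_per_mol]
    partition = sum(weights)
    return [w / partition for w in weights]


def conformational_entropy(populations):
    """Gibbs-form entropy S = -R * sum(p * ln(p)) in J/(mol*K)."""
    return -R * sum(p * math.log(p) for p in populations if p > 0)


# Hypothetical side chain with three rotamers at 0, 2 and 4 kJ/mol, at 298.15 K.
rotamer_energies = [0.0, 2000.0, 4000.0]
populations = boltzmann_populations(rotamer_energies, 298.15)
entropy = conformational_entropy(populations)

print([round(p, 3) for p in populations])
print(f"S_conf = {entropy:.2f} J/(mol*K)")
```

When all states have equal energy, the populations are uniform and the entropy reaches its maximum of $R \ln N$ for $N$ states; any energy gap between states lowers it.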

In thermodynamics, entropy is often associated with the amount of order or disorder in a thermodynamic system. This stems from Rudolf Clausius' 1862 assertion that any thermodynamic process always "admits to being reduced [reduction] to the alteration in some way or another of the arrangement of the constituent parts of the working body" and that internal work associated with these alterations is quantified energetically by a measure of "entropy" change, according to the following differential expression:
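In modern notation, and assuming a reversible transfer of heat $\delta Q_\mathrm{rev}$ into the system at absolute temperature $T$ (symbols not defined in the text above), the entropy change is usually written as:

$$
dS = \frac{\delta Q_\mathrm{rev}}{T}
$$

With $\delta Q$ counted as heat absorbed by the system, integrating around a closed cycle gives the Clausius inequality $\oint \delta Q / T \le 0$, with equality holding for a reversible cycle.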

In statistical mechanics, Boltzmann's equation is a probability equation relating the entropy $S$, also written as $S_\mathrm{B}$, of an ideal gas to the multiplicity $\Omega$, the number of real microstates corresponding to the gas's macrostate: $S = k_\mathrm{B} \ln \Omega$, where $k_\mathrm{B}$ is the Boltzmann constant.

Chance and Necessity: Essay on the Natural Philosophy of Modern Biology is a 1970 book by Nobel Prize winner Jacques Monod, interpreting the processes of evolution to show that life is only the result of natural processes by "pure chance". The basic tenet of this book is that systems in nature with molecular biology, such as enzymatic biofeedback loops, can be explained without having to invoke final causality.

Temperature is a physical quantity that quantitatively expresses the attribute of hotness or coldness. Temperature is measured with a thermometer. It reflects the kinetic energy of the vibrating and colliding atoms making up a substance.

A depletion force is an effective attractive force that arises between large colloidal particles that are suspended in a dilute solution of depletants, which are smaller solutes that are preferentially excluded from the vicinity of the large particles. One of the earliest reports of depletion forces that lead to particle coagulation is that of Bondy, who observed the separation or "creaming" of rubber latex upon addition of polymer depletant molecules to solution. More generally, depletants can include polymers, micelles, osmolytes, ink, mud, or paint dispersed in a continuous phase.

A protein–ligand complex is a complex of a protein bound to a ligand, formed following molecular recognition between proteins that interact with each other or with other molecules. Formation of a protein–ligand complex is based on molecular recognition between biological macromolecules and ligands, where a ligand is any molecule that binds the protein with high affinity and specificity. Molecular recognition is not a process in itself; it is part of functionally important mechanisms involving the essential elements of life, such as self-replication, metabolism, and information processing. For example, DNA replication depends on recognition and binding of the DNA double helix by helicase, of single-stranded DNA by DNA polymerase, and of DNA segments by ligase. Molecular recognition depends on affinity and specificity. Specificity means that proteins distinguish the highly specific binding partner from less specific partners, and affinity allows the specific partner to remain bound even in the presence of high concentrations of less specific, lower-affinity partners.