This article may be confusing or unclear to readers.(March 2011) |

An ontology chart is a type of chart used in semiotics and software engineering to illustrate an ontology.

The nodes of an ontology chart represent universal affordances and rarely represent particulars. The exception is the root, which is a particular agent, often labelled ‘society’, located on the extreme left of the chart. The root is often omitted in practice but is implied in every ontology chart. Any other particular in an ontology chart is recognised by a ‘#’ prefix followed by upper-case letters. In the example ontology chart, the node labelled #IBM is a particular organisation.

The arcs represent ontological dependency relations directed from left to right: the affordance on the right is ontologically dependent on the affordance on the left, and the left affordance is the ontological antecedent of the right one. No node in an ontology chart has more than two ontological antecedents.

Determiners are a special category of affordances. They are also recognised by a ‘#’ prefix; the two examples in the chart are #hourly rate and #name. Every determiner has a second antecedent, the measurement standard. This antecedent is usually omitted from the chart because it is implied and obvious: for hourly rate it is currency, and for name it is language.

The names on the arcs are role names of the carrier (the left node) in the relationship node on the right. For example, ‘employee’ is the role name of a person while in employment. Where an arc runs between a role name and a node, read it as an arc from the relationship node on the right-hand side of that role name to the node. So the arc from employee to works at is an arc between employment and works at.

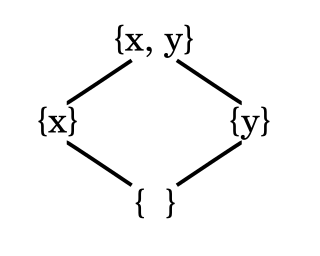
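The conventions above can be captured in a small data structure. The following Python sketch is a hypothetical representation, not part of any published ontology-chart tool: the class `ChartNode`, the function `build_chart`, the example nodes (including `organisation` and `site`) and the role name `employer` are assumptions for illustration and are not read off the figure. It enforces the two-antecedent limit and, as a further assumption, classifies a ‘#’ label as a particular when the rest of the label is all upper case and as a determiner otherwise.

```python
from dataclasses import dataclass, field


@dataclass
class ChartNode:
    """One node of an ontology chart (hypothetical representation)."""
    label: str
    antecedents: tuple[str, ...] = ()  # left-hand nodes this node depends on
    roles: dict[str, str] = field(default_factory=dict)  # antecedent -> role name

    @property
    def is_particular(self) -> bool:
        # Assumption: '#' prefix plus an all-upper-case name, e.g. '#IBM'.
        return self.label.startswith("#") and self.label[1:].isupper()

    @property
    def is_determiner(self) -> bool:
        # Assumption: any other '#'-prefixed label, e.g. '#hourly rate'.
        return self.label.startswith("#") and not self.is_particular


def build_chart(nodes: list[ChartNode], root: str = "society") -> dict[str, ChartNode]:
    """Add nodes left to right, rejecting unknown antecedents and nodes
    with more than two antecedents. The root is added implicitly."""
    chart = {root: ChartNode(root)}
    for node in nodes:
        if len(node.antecedents) > 2:
            raise ValueError(f"{node.label!r} has more than two antecedents")
        for antecedent in node.antecedents:
            if antecedent not in chart:
                raise ValueError(f"{node.label!r} depends on unknown node {antecedent!r}")
        chart[node.label] = node
    return chart


# Hypothetical example loosely based on the labels mentioned in the text.
example = build_chart([
    ChartNode("person", ("society",)),
    ChartNode("organisation", ("society",)),
    ChartNode("site", ("society",)),
    ChartNode("employment", ("person", "organisation"),
              {"person": "employee", "organisation": "employer"}),
    ChartNode("works at", ("employment", "site")),  # arc drawn from the role 'employee'
    ChartNode("#hourly rate", ("employment",)),     # measurement standard (currency) omitted
    ChartNode("#name", ("person",)),                # measurement standard (language) omitted
])
```

Because nodes are added left to right, every antecedent already exists when a node is added, so the sketch cannot build a cycle.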

Mathematically, ontology charts are graphical representations of semi-lattice structures; specifically, they are Hasse diagrams with a single root and no cycles. Ontological dependency is a relation known mathematically as a partial order, and a set equipped with a partial order is called a partially ordered set (poset). Posets are an object of study in the mathematical discipline of order theory. A partial order is a binary relation with three additional properties: reflexivity, antisymmetry and transitivity.
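The three properties can be checked mechanically on a finite relation. The Python sketch below assumes the relation is given as a set of pairs (a, b) read as "a depends on b"; the function name `is_partial_order` and the three-affordance example are hypothetical.

```python
from itertools import product


def is_partial_order(elements, relation) -> bool:
    """Check reflexivity, antisymmetry and transitivity of a finite relation.

    `relation` is a set of pairs (a, b) read as 'a depends on b'."""
    reflexive = all((x, x) in relation for x in elements)
    antisymmetric = all(a == b or (b, a) not in relation for a, b in relation)
    transitive = all(
        (a, d) in relation
        for (a, b), (c, d) in product(relation, repeat=2)
        if b == c
    )
    return reflexive and antisymmetric and transitive


# Hypothetical dependencies among three affordances.
affordances = {"society", "person", "employment"}
depends_on = {
    ("society", "society"), ("person", "person"), ("employment", "employment"),
    ("person", "society"), ("employment", "person"), ("employment", "society"),
}
```

Dropping the pair ("employment", "society") breaks transitivity, and adding ("society", "person") breaks antisymmetry; either change makes the check fail.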

Ontological dependency is a partial order because it is a binary relation with all three properties: every thing is ontologically dependent on itself for its existence (reflexivity); two things that are mutually ontologically dependent must be the same thing (antisymmetry); and if a depends on b and b depends on c, then a depends on c (transitivity). The Hasse diagram, named after Helmut Hasse, exploits the last of these properties: because indirect dependencies follow by transitivity, only the direct ones need to be drawn, which keeps the diagram simple and, if drawn well, elegant. Because ontology charts have a root on which all affordances (realisations/things) ultimately depend for their existence, they are graphical representations of semi-lattices.
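Since only direct dependencies are drawn, the full dependency relation can be recovered from the arcs by adding every node's dependence on itself and following arcs leftwards. The Python sketch below does this with a depth-first search; the chart and the name `dependency_closure` are hypothetical.

```python
def dependency_closure(arcs: dict[str, list[str]]) -> set[tuple[str, str]]:
    """Return every pair (a, b) such that a is ontologically dependent on b.

    `arcs` maps each node to the antecedents drawn directly to its left.
    Each node is paired with itself (reflexivity) and with every node
    reachable by following arcs leftwards (transitivity)."""
    nodes = set(arcs) | {b for antecedents in arcs.values() for b in antecedents}
    closure: set[tuple[str, str]] = set()
    for start in nodes:
        seen = {start}
        stack = [start]
        while stack:
            current = stack.pop()
            for antecedent in arcs.get(current, []):
                if antecedent not in seen:
                    seen.add(antecedent)
                    stack.append(antecedent)
        closure.update((start, b) for b in seen)
    return closure


# Hypothetical chart: only the direct arcs are drawn.
drawn = {
    "person": ["society"],
    "organisation": ["society"],
    "employment": ["person", "organisation"],
    "#hourly rate": ["employment"],
}
dependencies = dependency_closure(drawn)
```

The drawn chart has five arcs, yet the closure contains 14 pairs; the difference is what the Hasse-diagram convention leaves implicit. Every node ends up depending on the root, `society`.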

In mathematics, especially order theory, a partial order on a set is an arrangement such that, for certain pairs of elements, one precedes the other. The word partial is used to indicate that not every pair of elements needs to be comparable; that is, there may be pairs for which neither element precedes the other. Partial orders thus generalize total orders, in which every pair is comparable.
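The difference between partial and total orders shows up when listing incomparable pairs. In this Python sketch (the helper names are hypothetical), divisibility on the divisors of 12 is a partial order with three incomparable pairs, while the usual ≤ on numbers, a total order, leaves none.

```python
from itertools import combinations


def incomparable_pairs(elements, leq):
    """Return pairs (a, b) for which neither a <= b nor b <= a holds."""
    return [(a, b) for a, b in combinations(elements, 2)
            if not leq(a, b) and not leq(b, a)]


def divides(a: int, b: int) -> bool:
    return b % a == 0


print(incomparable_pairs([1, 2, 3, 4, 6, 12], divides))  # [(2, 3), (3, 4), (4, 6)]
print(incomparable_pairs([1, 2, 3, 4, 6, 12], lambda a, b: a <= b))  # []
```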

In linguistics and grammar, a pronoun is a word or a group of words that one may substitute for a noun or noun phrase.

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

A parse tree or parsing tree or derivation tree or concrete syntax tree is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term parse tree itself is used primarily in computational linguistics; in theoretical syntax, the term syntax tree is more common.
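A parse tree can be represented as nested (label, children) pairs. The Python sketch below uses a toy grammar invented for illustration (the rules, the sentence and the function names `leaves` and `conforms` are all assumptions): reading the leaves left to right recovers the string, and every internal node must expand by a production of the grammar.

```python
# Toy context-free grammar (hypothetical): each label maps to its allowed right-hand sides.
GRAMMAR = {
    "S": {("NP", "VP")},
    "NP": {("Det", "N")},
    "VP": {("V", "NP")},
    "Det": {("the",), ("a",)},
    "N": {("dog",), ("cat",)},
    "V": {("chased",)},
}

# A parse tree: internal nodes are (label, [children]); leaves are words.
TREE = ("S", [
    ("NP", [("Det", ["the"]), ("N", ["dog"])]),
    ("VP", [("V", ["chased"]),
            ("NP", [("Det", ["a"]), ("N", ["cat"])])]),
])


def leaves(node):
    """Return the words at the leaves, in left-to-right order."""
    if isinstance(node, str):
        return [node]
    _, children = node
    return [word for child in children for word in leaves(child)]


def conforms(node, grammar):
    """Check that every internal node expands by a production of `grammar`."""
    if isinstance(node, str):
        return True
    label, children = node
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    return rhs in grammar.get(label, set()) and all(conforms(c, grammar) for c in children)
```

The children are kept in lists so that their order, and therefore the word order of the string, is part of the tree.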

In linguistics, a determiner phrase (DP) is a type of phrase headed by a determiner such as many. Controversially, many approaches take a phrase like not very many apples to be a DP, headed in this case by the determiner many. This is called the DP analysis or the DP hypothesis. Others reject this analysis in favor of the more traditional NP analysis, in which apples is the head of the phrase and the DP not very many is merely a dependent. Thus, there are competing analyses concerning heads and dependents in nominal groups. The DP analysis developed in the late 1970s and early 1980s, and it is the majority view in generative grammar today.

In order theory, a Hasse diagram is a type of mathematical diagram used to represent a finite partially ordered set, in the form of a drawing of its transitive reduction. Concretely, for a partially ordered set (S, ≤) one represents each element of S as a vertex in the plane and draws a line segment or curve that goes upward from a vertex x to another vertex y whenever y covers x, that is, whenever x < y and there is no z with x < z < y. These curves may cross each other but must not touch any vertices other than their endpoints. Such a diagram, with labeled vertices, uniquely determines its partial order.
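The covering relation, and hence the set of segments in a Hasse diagram, can be computed directly from the definition. This Python sketch (the helper names are hypothetical) uses divisibility on the divisors of 12 as the partial order.

```python
def hasse_edges(elements, leq):
    """Return the cover pairs (x, y) of a finite partial order `leq`.

    y covers x when x < y and no z satisfies x < z < y; these are
    exactly the line segments drawn in the Hasse diagram."""
    def lt(a, b):
        return a != b and leq(a, b)

    return [(x, y) for x in elements for y in elements
            if lt(x, y) and not any(lt(x, z) and lt(z, y) for z in elements)]


def divides(a: int, b: int) -> bool:
    return b % a == 0


print(hasse_edges([1, 2, 3, 4, 6, 12], divides))
# [(1, 2), (1, 3), (2, 4), (2, 6), (3, 6), (4, 12), (6, 12)]
```

Divisibility on these six numbers has 12 strict pairs, but only 7 are drawn; the rest follow by transitivity.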

Link grammar (LG) is a theory of syntax by Davy Temperley and Daniel Sleator which builds relations between pairs of words, rather than constructing constituents in a phrase structure hierarchy. Link grammar is similar to dependency grammar, but dependency grammar includes a head-dependent relationship, whereas link grammar makes the head-dependent relationship optional. Colored Multiplanar Link Grammar (CMLG) is an extension of LG allowing crossing relations between pairs of words. The relationship between words is indicated with link types, which makes link grammar closely related to certain categorial grammars.

In set theory, a tree is a partially ordered set (T, <) such that for each t ∈ T, the set {s ∈ T : s < t} is well-ordered by the relation <. Frequently trees are assumed to have only one root (i.e. minimal element), as the typical questions investigated in this field are easily reduced to questions about single-rooted trees.
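For a finite order the tree condition is easy to test, because a finite totally ordered set is automatically well-ordered: it suffices to check that the predecessors of each element form a chain. The Python sketch below assumes the order is given as a strict comparison function; the helper names and the divisibility examples are hypothetical.

```python
def is_finite_tree(elements, lt) -> bool:
    """Check the tree condition for a finite strict partial order `lt`.

    For each t, the predecessors {s : s < t} must be totally ordered; a
    finite totally ordered set is automatically well-ordered."""
    for t in elements:
        preds = [s for s in elements if lt(s, t)]
        for a in preds:
            for b in preds:
                if a != b and not (lt(a, b) or lt(b, a)):
                    return False
    return True


def roots(elements, lt):
    """Minimal elements: those with no predecessor."""
    return [t for t in elements if not any(lt(s, t) for s in elements)]


def properly_divides(a: int, b: int) -> bool:
    return a != b and b % a == 0
```

Under proper divisibility, {1, 2, 4, 3, 9} is a single-rooted tree, but {1, 2, 3, 6} is not, because 2 and 3 are incomparable predecessors of 6. By the same test, if a node such as employment depends on two antecedents, person and organisation, neither of which depends on the other, the dependency order is not a tree in this sense.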

In linguistics, the head or nucleus of a phrase is the word that determines the syntactic category of that phrase. For example, the head of the noun phrase boiling hot water is the noun water. Analogously, the head of a compound is the stem that determines the semantic category of that compound. For example, the head of the compound noun handbag is bag, since a handbag is a bag, not a hand. The other elements of the phrase or compound modify the head, and are therefore the head's dependents. Headed phrases and compounds are called endocentric, whereas exocentric ("headless") phrases and compounds lack a clear head. Heads are crucial to establishing the direction of branching. Head-initial phrases are right-branching, head-final phrases are left-branching, and head-medial phrases combine left- and right-branching.

In linguistics, branching refers to the shape of the parse trees that represent the structure of sentences. Assuming that the language is being written or transcribed from left to right, parse trees that grow down and to the right are right-branching, and parse trees that grow down and to the left are left-branching. The direction of branching reflects the position of heads in phrases, and in this regard, right-branching structures are head-initial, whereas left-branching structures are head-final. English has both right-branching (head-initial) and left-branching (head-final) structures, although it is more right-branching than left-branching. Some languages such as Japanese and Turkish are almost fully left-branching (head-final). Some languages are mostly right-branching (head-initial).

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb in terms of the directed links, which are called dependencies. Dependency grammar differs from phrase structure grammar in that while it can identify phrases it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constituent, and they are thus well suited for the analysis of languages with free word order, such as Czech or Warlpiri.
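A dependency analysis can be stored as a list of head indices, one per word, with the root word having no head. The Python sketch below is illustrative: the sentence, its head assignments and the helper names `root_of` and `dependents` are assumptions, not the output of any particular parser. It checks that there is exactly one root and that every word reaches it through directed links.

```python
from typing import Optional


def root_of(heads: list[Optional[int]]) -> int:
    """Return the index of the single root word, checking that every word
    is connected to it by a chain of head links (no cycles)."""
    roots = [i for i, h in enumerate(heads) if h is None]
    if len(roots) != 1:
        raise ValueError("a dependency tree needs exactly one root")
    for start in range(len(heads)):
        node, steps = start, 0
        while heads[node] is not None:
            node = heads[node]
            steps += 1
            if steps > len(heads):
                raise ValueError("cycle in head links")
    return roots[0]


def dependents(heads: list[Optional[int]], head: int) -> list[int]:
    """Indices of the words whose head is `head`."""
    return [i for i, h in enumerate(heads) if h == head]


# Hypothetical analysis: the finite verb 'chased' is the root.
words = ["The", "dog", "chased", "a", "cat"]
heads = [1, 2, None, 4, 2]
```

Here `root_of(heads)` returns 2, the index of the verb, and its direct dependents are `dog` and `cat`; the determiners are connected to the verb only indirectly.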

In theoretical linguistics, a distinction is made between endocentric and exocentric constructions. A grammatical construction is said to be endocentric if it fulfils the same linguistic function as one of its parts, and exocentric if it does not. The distinction reaches back at least to the work of Bloomfield in the 1930s, who based it on terms used by Pāṇini and Patañjali in Sanskrit grammar. Such a distinction is possible only in phrase structure grammars, since in dependency grammars all constructions are necessarily endocentric.

Exceptional case-marking (ECM), in linguistics, is a phenomenon in which the subject of an embedded infinitival verb seems to appear in a superordinate clause and, if it is a pronoun, is unexpectedly marked with object case morphology. The unexpected object case morphology is deemed "exceptional". The term ECM itself was coined in the Government and Binding grammar framework although the phenomenon is closely related to the accusativus cum infinitivo constructions of Latin. ECM-constructions are also studied within the context of raising. The verbs that license ECM are known as raising-to-object verbs. Many languages lack ECM-predicates, and even in English, the number of ECM-verbs is small. The structural analysis of ECM-constructions varies in part according to whether one pursues a relatively flat structure or a more layered one.

DOGMA, short for Developing Ontology-Grounded Methods and Applications, is the name of a research project in progress at Vrije Universiteit Brussel's STARLab (Semantics Technology and Applications Research Laboratory). It is an internally funded project concerned with the more general aspects of extracting, storing, representing and browsing information.

Semantic analysis is a method for eliciting and representing knowledge about organisations.

Mathematical diagrams, such as charts and graphs, are mainly designed to convey mathematical relationships—for example, comparisons over time.

In mathematics, and more specifically in graph theory, a directed graph is a graph that is made up of a set of vertices connected by directed edges, often called arcs.

Ronald K. (Ron) Stamper is a British computer scientist, formerly a researcher at the LSE and emeritus professor at the University of Twente, known for his pioneering work in organisational semiotics and the creation of the MEASUR methodology and the SEDITA framework.

In mathematics, a relation on a set may, or may not, hold between two given members of the set. As an example, "is less than" is a relation on the set of natural numbers; it holds, for instance, between the values 1 and 3, and likewise between 3 and 4, but not between the values 3 and 1 nor between 4 and 4, that is, 3 < 1 and 4 < 4 both evaluate to false. As another example, "is sister of" is a relation on the set of all people; it holds, for example, between Marie Curie and Bronisława Dłuska, and likewise vice versa. Set members cannot be in a relation "to a certain degree": either they are in relation or they are not.
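One standard way to make this concrete is to model a relation on a set as the set of ordered pairs for which it holds. The Python sketch below uses a finite slice of the natural numbers (1 to 5) for "is less than" and the two sisters from the text; the names `less_than`, `sister_of` and `holds` are illustrative assumptions.

```python
# A relation on a set is modelled as the set of ordered pairs for which it holds.
numbers = range(1, 6)  # a finite slice of the natural numbers
less_than = {(a, b) for a in numbers for b in numbers if a < b}

sister_of = {
    ("Marie Curie", "Bronisława Dłuska"),
    ("Bronisława Dłuska", "Marie Curie"),
}


def holds(relation, a, b) -> bool:
    """A pair is either in the relation or not; there are no degrees."""
    return (a, b) in relation
```

Membership is a plain yes-or-no test, which matches the point that set members are either in relation or not.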

Araucaria is an argument mapping software tool developed in 2001 by Chris Reed and Glenn Rowe, in the Argumentation Research Group at the School of Computing in the University of Dundee, Scotland. It is designed to visually represent arguments through diagrams that can be used for analysis and stored in Argument Markup Language (AML), based on XML. As free software, it is available under the GNU General Public License and may be downloaded for free on the internet.

This article needs additional citations for verification .(March 2009) |