A minimum spanning tree (MST) or minimum weight spanning tree is a subset of the edges of a connected, edge-weighted undirected graph that connects all the vertices together, without any cycles and with the minimum possible total edge weight. That is, it is a spanning tree whose sum of edge weights is as small as possible. More generally, any edge-weighted undirected graph has a minimum spanning forest, which is a union of the minimum spanning trees for its connected components.

Kruskal's algorithm finds a minimum spanning forest of an undirected edge-weighted graph. If the graph is connected, it finds a minimum spanning tree. It is a greedy algorithm in graph theory as in each step it adds the next lowest-weight edge that will not form a cycle to the minimum spanning forest.

In computer science, Prim's algorithm is a greedy algorithm that finds a minimum spanning tree for a weighted undirected graph. This means it finds a subset of the edges that forms a tree that includes every vertex, where the total weight of all the edges in the tree is minimized. The algorithm operates by building this tree one vertex at a time, from an arbitrary starting vertex, at each step adding the cheapest possible connection from the tree to another vertex.

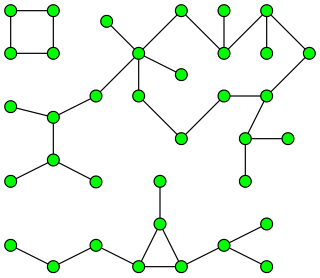

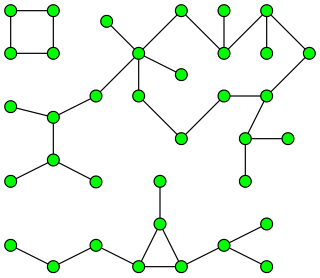

In graph theory, a component of an undirected graph is a connected subgraph that is not part of any larger connected subgraph. The components of any graph partition its vertices into disjoint sets, and are the induced subgraphs of those sets. A graph that is itself connected has exactly one component, consisting of the whole graph. Components are sometimes called connected components.

In graph theory, an Eulerian trail is a trail in a finite graph that visits every edge exactly once. Similarly, an Eulerian circuit or Eulerian cycle is an Eulerian trail that starts and ends on the same vertex. They were first discussed by Leonhard Euler while solving the famous Seven Bridges of Königsberg problem in 1736. The problem can be stated mathematically like this:

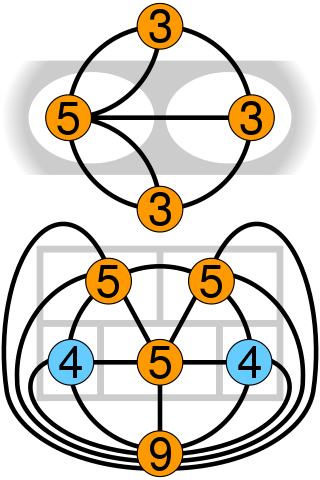

In mathematics, the discrete Laplace operator is an analog of the continuous Laplace operator, defined so that it has meaning on a graph or a discrete grid. For the case of a finite-dimensional graph, the discrete Laplace operator is more commonly called the Laplacian matrix.

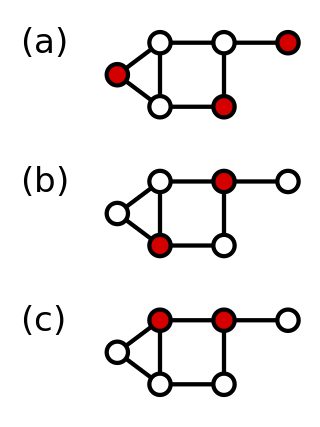

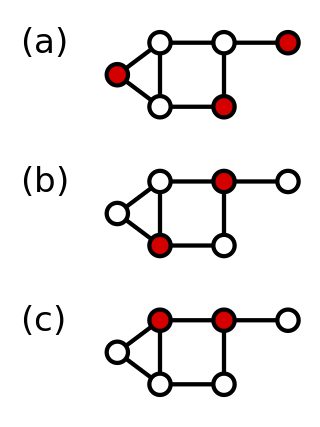

In graph theory, a dominating set for a graph G is a subset D of its vertices, such that any vertex of G is either in D, or has a neighbor in D. The domination numberγ(G) is the number of vertices in a smallest dominating set for G.

In graph theory, reachability refers to the ability to get from one vertex to another within a graph. A vertex can reach a vertex if there exists a sequence of adjacent vertices which starts with and ends with .

The distributed minimum spanning tree (MST) problem involves the construction of a minimum spanning tree by a distributed algorithm, in a network where nodes communicate by message passing. It is radically different from the classical sequential problem, although the most basic approach resembles Borůvka's algorithm. One important application of this problem is to find a tree that can be used for broadcasting. In particular, if the cost for a message to pass through an edge in a graph is significant, an MST can minimize the total cost for a source process to communicate with all the other processes in the network.

In the mathematical discipline of graph theory, the expander walk sampling theorem intuitively states that sampling vertices in an expander graph by doing relatively short random walk can simulate sampling the vertices independently from a uniform distribution. The earliest version of this theorem is due to Ajtai, Komlós & Szemerédi (1987), and the more general version is typically attributed to Gillman (1998).

In computer science and graph theory, Karger's algorithm is a randomized algorithm to compute a minimum cut of a connected graph. It was invented by David Karger and first published in 1993.

In graph theory, a random geometric graph (RGG) is the mathematically simplest spatial network, namely an undirected graph constructed by randomly placing N nodes in some metric space and connecting two nodes by a link if and only if their distance is in a given range, e.g. smaller than a certain neighborhood radius, r.

In mathematics, a packing in a hypergraph is a partition of the set of the hypergraph's edges into a number of disjoint subsets such that no pair of edges in each subset share any vertex. There are two famous algorithms to achieve asymptotically optimal packing in k-uniform hypergraphs. One of them is a random greedy algorithm which was proposed by Joel Spencer. He used a branching process to formally prove the optimal achievable bound under some side conditions. The other algorithm is called the Rödl nibble and was proposed by Vojtěch Rödl et al. They showed that the achievable packing by the Rödl nibble is in some sense close to that of the random greedy algorithm.

In mathematics, the Kontsevich quantization formula describes how to construct a generalized ★-product operator algebra from a given arbitrary finite-dimensional Poisson manifold. This operator algebra amounts to the deformation quantization of the corresponding Poisson algebra. It is due to Maxim Kontsevich.

In coding theory, expander codes form a class of error-correcting codes that are constructed from bipartite expander graphs. Along with Justesen codes, expander codes are of particular interest since they have a constant positive rate, a constant positive relative distance, and a constant alphabet size. In fact, the alphabet contains only two elements, so expander codes belong to the class of binary codes. Furthermore, expander codes can be both encoded and decoded in time proportional to the block length of the code.

The expected linear time MST algorithm is a randomized algorithm for computing the minimum spanning forest of a weighted graph with no isolated vertices. It was developed by David Karger, Philip Klein, and Robert Tarjan. The algorithm relies on techniques from Borůvka's algorithm along with an algorithm for verifying a minimum spanning tree in linear time. It combines the design paradigms of divide and conquer algorithms, greedy algorithms, and randomized algorithms to achieve expected linear performance.

In coding theory, Zemor's algorithm, designed and developed by Gilles Zemor, is a recursive low-complexity approach to code construction. It is an improvement over the algorithm of Sipser and Spielman.

A hyperbolic geometric graph (HGG) or hyperbolic geometric network (HGN) is a special type of spatial network where (1) latent coordinates of nodes are sprinkled according to a probability density function into a hyperbolic space of constant negative curvature and (2) an edge between two nodes is present if they are close according to a function of the metric (typically either a Heaviside step function resulting in deterministic connections between vertices closer than a certain threshold distance, or a decaying function of hyperbolic distance yielding the connection probability). A HGG generalizes a random geometric graph (RGG) whose embedding space is Euclidean.

Maximal entropy random walk (MERW) is a popular type of biased random walk on a graph, in which transition probabilities are chosen accordingly to the principle of maximum entropy, which says that the probability distribution which best represents the current state of knowledge is the one with largest entropy. While standard random walk chooses for every vertex uniform probability distribution among its outgoing edges, locally maximizing entropy rate, MERW maximizes it globally by assuming uniform probability distribution among all paths in a given graph.

A central problem in algorithmic graph theory is the shortest path problem. One of the generalizations of the shortest path problem is known as the single-source-shortest-paths (SSSP) problem, which consists of finding the shortest paths from a source vertex to all other vertices in the graph. There are classical sequential algorithms which solve this problem, such as Dijkstra's algorithm. In this article, however, we present two parallel algorithms solving this problem.