Rational choice theory refers to a set of guidelines that help explain economic and social behaviour. The theory originated in the eighteenth century and can be traced back to the political economist and philosopher Adam Smith. The theory postulates that an individual will perform a cost–benefit analysis to determine whether an option is right for them. It also suggests that an individual's self-driven rational actions will benefit the overall economy. Rational choice theory looks at three concepts: rational actors, self-interest and the invisible hand.

Rationality is the quality of being guided by or based on reason. In this regard, a person acts rationally if they have a good reason for what they do, and a belief is rational if it is based on strong evidence. This quality can apply to an ability, as in a rational animal, to a psychological process, like reasoning, to mental states, such as beliefs and intentions, or to persons who possess these other forms of rationality. A thing that lacks rationality is either arational, if it is outside the domain of rational evaluation, or irrational, if it belongs to this domain but does not fulfill its standards.

Satisficing is a decision-making strategy or cognitive heuristic that entails searching through the available alternatives until an acceptability threshold is met. The term satisficing, a portmanteau of satisfy and suffice, was introduced by Herbert A. Simon in 1956, although the concept was first posited in his 1947 book Administrative Behavior. Simon used satisficing to explain the behavior of decision makers under circumstances in which an optimal solution cannot be determined. He maintained that many natural problems are characterized by computational intractability or a lack of information, both of which preclude the use of mathematical optimization procedures. He observed in his Nobel Prize in Economics speech that "decision makers can satisfice either by finding optimum solutions for a simplified world, or by finding satisfactory solutions for a more realistic world. Neither approach, in general, dominates the other, and both have continued to co-exist in the world of management science".
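The search-until-threshold idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `satisfice`, the scoring function and the apartment data are hypothetical and do not come from Simon's work.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def satisfice(
    alternatives: Iterable[T],
    value: Callable[[T], float],
    threshold: float,
) -> Optional[T]:
    """Return the first alternative whose value meets the threshold.

    The search stops as soon as an acceptable option is found, so later
    (possibly better) alternatives are never examined. Returns None if
    no alternative is acceptable.
    """
    for option in alternatives:
        if value(option) >= threshold:
            return option
    return None


# Hypothetical data: apartments as (name, score out of 10), viewed in order.
apartments = [("A", 5.5), ("B", 7.2), ("C", 9.1)]

choice = satisfice(apartments, value=lambda a: a[1], threshold=7.0)
print(choice)  # ('B', 7.2): acceptable, although C would score higher
```

An optimizer would have to score every apartment before choosing C; the satisficer accepts B and stops searching.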

Behavioral economics is the study of the psychological, cognitive, emotional, cultural and social factors involved in the decisions of individuals or institutions, and how these decisions deviate from those implied by classical economic theory.

Prospect theory is a theory of behavioral economics, judgment and decision making that was developed by Daniel Kahneman and Amos Tversky in 1979. The theory was cited in the decision to award Kahneman the 2002 Nobel Memorial Prize in Economics.

The theory of belief functions, also referred to as evidence theory or Dempster–Shafer theory (DST), is a general framework for reasoning with uncertainty, with understood connections to other frameworks such as probability, possibility and imprecise probability theories. First introduced by Arthur P. Dempster in the context of statistical inference, the theory was later developed by Glenn Shafer into a general framework for modeling epistemic uncertainty—a mathematical theory of evidence. The theory allows one to combine evidence from different sources and arrive at a degree of belief that takes into account all the available evidence.
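As a rough illustration of combining evidence from two sources, the following Python sketch implements Dempster's rule of combination for mass functions over a small frame of discernment. The representation (dictionaries keyed by `frozenset`), the function names and the weather data are assumptions made for this example only.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions (dict: frozenset -> mass) with Dempster's rule.

    Mass that falls on the empty intersection is treated as conflict and
    removed by renormalizing the remaining masses.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    return {s: w / (1.0 - conflict) for s, w in combined.items()}


def belief(m, hypothesis):
    """Degree of belief: total mass committed to subsets of the hypothesis."""
    return sum(w for s, w in m.items() if s <= hypothesis)


# Hypothetical frame of discernment and two partially conflicting sources.
RAIN, DRY = frozenset({"rain"}), frozenset({"dry"})
FRAME = RAIN | DRY

source_1 = {RAIN: 0.6, FRAME: 0.4}  # 0.4 left uncommitted
source_2 = {DRY: 0.3, FRAME: 0.7}   # 0.7 left uncommitted

combined = dempster_combine(source_1, source_2)
print(belief(combined, RAIN), belief(combined, DRY))
```

Mass assigned to the whole frame expresses ignorance rather than support for either outcome, which is what distinguishes a mass function from an ordinary probability distribution.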

Decision theory is a branch of applied probability theory and analytic philosophy concerned with the theory of making decisions based on assigning probabilities to various factors and assigning numerical consequences to the outcome.

The expected utility hypothesis is a foundational assumption in mathematical economics concerning decision making under uncertainty. It postulates that rational agents maximize utility, meaning the subjective desirability of their actions. Rational choice theory, a cornerstone of microeconomics, builds on this postulate to model aggregate social behaviour.
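A small worked example may make the hypothesis concrete. The sketch below compares a sure payment with a gamble of equal expected monetary value. The square-root utility function (a common textbook choice for a risk-averse agent) and the payoffs are assumptions for illustration.

```python
import math


def expected_utility(lottery, utility):
    """Probability-weighted utility of a lottery given as (probability, outcome) pairs."""
    return sum(p * utility(x) for p, x in lottery)


# Hypothetical choice: 50 for certain, or a fair coin flip between 0 and 100.
sure_thing = [(1.0, 50.0)]
gamble = [(0.5, 0.0), (0.5, 100.0)]

eu_sure = expected_utility(sure_thing, math.sqrt)  # sqrt(50), about 7.07
eu_gamble = expected_utility(gamble, math.sqrt)    # 0.5 * 0 + 0.5 * 10 = 5.0

# Both options have the same expected money (50), but the concave utility
# function makes the sure thing the utility-maximizing choice.
best = "sure thing" if eu_sure > eu_gamble else "gamble"
print(eu_sure, eu_gamble, best)
```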

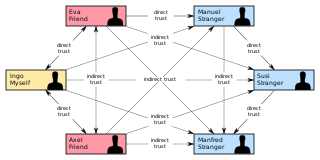

In psychology and sociology, a trust metric is a measurement or metric of the degree to which one social actor trusts another social actor. Trust metrics may be abstracted in a manner that can be implemented on computers, making them of interest for the study and engineering of virtual communities, such as Friendster and LiveJournal.

In gambling, economics, and the philosophy of probability, a Dutch book or lock is a set of odds and bets that guarantees a profit regardless of the outcome. It is generally used as a thought experiment to motivate the Von Neumann–Morgenstern axioms or the axioms of probability by showing they are equivalent to philosophical coherence or Pareto efficiency.
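A minimal numerical sketch of a Dutch book, assuming an agent whose degrees of belief in two mutually exclusive and exhaustive events sum to more than 1, and who is willing to buy a ticket paying 1 on each event at a price equal to that degree of belief. The prices and the function name `bookie_profit` are hypothetical.

```python
def bookie_profit(prices, outcome):
    """Profit to a bookie who sells one ticket per event at the agent's prices.

    Each ticket pays 1 if its event occurs. The events are assumed to be
    mutually exclusive and exhaustive, so exactly one ticket pays out.
    """
    collected = sum(prices.values())
    paid_out = 1.0 if outcome in prices else 0.0
    return collected - paid_out


# Incoherent credences: 0.6 + 0.6 > 1, violating the axioms of probability.
prices = {"rain": 0.6, "no rain": 0.6}

profits = {outcome: bookie_profit(prices, outcome) for outcome in prices}
print(profits)  # the bookie gains roughly 0.2 whichever event occurs
```

If the prices summed to exactly 1, the bookie's profit would be zero in every outcome, which is the sense in which coherent credences cannot be exploited this way.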

In game theory, a Bayesian game is a strategic decision-making model which assumes players have incomplete information. Players hold private information relevant to the game, meaning that the payoffs are not common knowledge. Bayesian games model the outcome of player interactions using aspects of Bayesian probability. They are notable because they allowed, for the first time in game theory, for the specification of the solutions to games with incomplete information.

In decision theory, the Ellsberg paradox is a paradox in which people's decisions are inconsistent with subjective expected utility theory. John Maynard Keynes published a version of the paradox in 1921. Daniel Ellsberg popularized the paradox in his 1961 paper, "Risk, Ambiguity, and the Savage Axioms". It is generally taken to be evidence of ambiguity aversion, in which a person tends to prefer choices with quantifiable risks over those with unknown, incalculable risks.

Formal epistemology uses formal methods from decision theory, logic, probability theory and computability theory to model and reason about issues of epistemological interest. Work in this area spans several academic fields, including philosophy, computer science, economics, and statistics. The focus of formal epistemology has tended to differ somewhat from that of traditional epistemology, with topics like uncertainty, induction, and belief revision garnering more attention than the analysis of knowledge, skepticism, and issues with justification.

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighbouring" samples, a CRF can take context into account. To do so, the predictions are modelled as a graphical model, which represents the presence of dependencies between the predictions. What kind of graph is used depends on the application. For example, in natural language processing, "linear chain" CRFs are popular, for which each prediction is dependent only on its immediate neighbours. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions.

In decision theory and economics, ambiguity aversion is a preference for known risks over unknown risks. An ambiguity-averse individual would rather choose an alternative where the probability distribution of the outcomes is known over one where the probabilities are unknown. This behavior was first introduced through the Ellsberg paradox.

The transferable belief model (TBM) is an elaboration on the Dempster–Shafer theory (DST), which is a mathematical model used to evaluate the probability that a given proposition is true from other propositions that are assigned probabilities. It was developed by Philippe Smets, who proposed his approach as a response to Zadeh's example against Dempster's rule of combination. In contrast to the original DST, the TBM adopts the open-world assumption, which relaxes the assumption that all possible outcomes are known. Under the open-world assumption, Dempster's rule of combination is adapted such that there is no normalization. The underlying idea is that the probability mass pertaining to the empty set is taken to indicate an unexpected outcome, e.g. the belief in a hypothesis outside the frame of discernment. This adaptation violates the probabilistic character of the original DST and also Bayesian inference. Therefore, the authors substituted notation such as probability masses and probability update with terms such as degrees of belief and transfer, giving rise to the name of the method: the transferable belief model.
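The effect of dropping the normalization step can be sketched in Python. The numbers below follow the form in which Zadeh's example is commonly presented (two doctors who almost completely disagree). The dictionary representation and the function name `conjunctive_combine` are assumptions for illustration, not Smets' own notation.

```python
from itertools import product


def conjunctive_combine(m1, m2):
    """Unnormalized conjunctive combination of two mass functions.

    Masses are dicts mapping frozenset -> mass. Unlike Dempster's rule,
    mass landing on the empty set is kept rather than normalized away.
    """
    combined = {}
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b  # may be the empty frozenset
        combined[inter] = combined.get(inter, 0.0) + wa * wb
    return combined


MENINGITIS = frozenset({"meningitis"})
CONCUSSION = frozenset({"concussion"})
TUMOR = frozenset({"tumor"})
EMPTY = frozenset()

# Commonly cited form of Zadeh's example: each doctor is nearly certain of a
# different diagnosis and both consider a tumor very unlikely.
doctor_1 = {MENINGITIS: 0.99, TUMOR: 0.01}
doctor_2 = {CONCUSSION: 0.99, TUMOR: 0.01}

result = conjunctive_combine(doctor_1, doctor_2)
print(result[EMPTY], result[TUMOR])  # about 0.9999 and 0.0001
```

With normalization, the 0.0001 on the tumor hypothesis would be rescaled to 1, a conclusion neither doctor supports. Without it, almost all of the mass remains on the empty set, signalling that the sources conflict or that the true diagnosis may lie outside the frame of discernment.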

The psychology of reasoning is the study of how people reason, often broadly defined as the process of drawing conclusions to inform how people solve problems and make decisions. It overlaps with psychology, philosophy, linguistics, cognitive science, artificial intelligence, logic, and probability theory.

In economics, and in other social sciences, preference refers to an order by which an agent, while in search of an "optimal choice", ranks alternatives based on their respective utility. Preferences are evaluations that concern matters of value, in relation to practical reasoning. Individual preferences are determined by taste, need, ..., as opposed to price, availability or personal income. Classical economics assumes that people act in their best (rational) interest. In this context, rationality would dictate that, when given a choice, an individual will select an option that maximizes their self-interest. But preferences are not always transitive, both because real humans are far from always being rational and because in some situations preferences can form cycles, in which case there exists no well-defined optimal choice. An example of this is Efron dice.
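The cycle formed by Efron dice can be checked by brute force. The sketch below uses the standard set of four six-sided dice and computes, with exact fractions, the probability that each die rolls higher than the next one in the cycle. The helper name `win_probability` is an assumption for this example.

```python
from fractions import Fraction
from itertools import product


def win_probability(x, y):
    """Exact probability that die x shows a higher face than die y."""
    wins = sum(1 for a, b in product(x, y) if a > b)
    return Fraction(wins, len(x) * len(y))


# The standard set of Efron dice.
dice = {
    "A": (4, 4, 4, 4, 0, 0),
    "B": (3, 3, 3, 3, 3, 3),
    "C": (6, 6, 2, 2, 2, 2),
    "D": (5, 5, 5, 1, 1, 1),
}

# A beats B, B beats C, C beats D, and D beats A: a preference cycle.
cycle = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]
for first, second in cycle:
    print(first, "beats", second, "with probability",
          win_probability(dice[first], dice[second]))  # 2/3 each time
```

An agent who always prefers the die more likely to win in a pairwise contest therefore has no best die to choose, because every die is beaten by another.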

A probability box is a characterization of uncertain numbers consisting of both aleatoric and epistemic uncertainties that is often used in risk analysis or quantitative uncertainty modeling where numerical calculations must be performed. Probability bounds analysis is used to make arithmetic and logical calculations with p-boxes.

Bayesian epistemology is a formal approach to various topics in epistemology that has its roots in Thomas Bayes' work in the field of probability theory. One advantage of its formal method in contrast to traditional epistemology is that its concepts and theorems can be defined with a high degree of precision. It is based on the idea that beliefs can be interpreted as subjective probabilities. As such, they are subject to the laws of probability theory, which act as the norms of rationality. These norms can be divided into static constraints, governing the rationality of beliefs at any moment, and dynamic constraints, governing how rational agents should change their beliefs upon receiving new evidence. The most characteristic Bayesian expression of these principles is found in the form of Dutch books, which illustrate irrationality in agents through a series of bets that lead to a loss for the agent no matter which of the probabilistic events occurs. Bayesians have applied these fundamental principles to various epistemological topics but Bayesianism does not cover all topics of traditional epistemology. The problem of confirmation in the philosophy of science, for example, can be approached through the Bayesian principle of conditionalization by holding that a piece of evidence confirms a theory if it raises the likelihood that this theory is true. Various proposals have been made to define the concept of coherence in terms of probability, usually in the sense that two propositions cohere if the probability of their conjunction is higher than if they were neutrally related to each other. The Bayesian approach has also been fruitful in the field of social epistemology, for example, concerning the problem of testimony or the problem of group belief. Bayesianism still faces various theoretical objections that have not been fully solved.
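The principle of conditionalization and the associated notion of confirmation can be illustrated with a short Python sketch. The prior and likelihood values are hypothetical, and the function name `conditionalize` is an assumption for this example.

```python
def conditionalize(prior, likelihood_if_true, likelihood_if_false):
    """Posterior probability of a hypothesis H after observing evidence E.

    Applies Bayes' theorem: P(H|E) = P(E|H) P(H) / P(E), where
    P(E) = P(E|H) P(H) + P(E|not H) (1 - P(H)).
    """
    evidence = prior * likelihood_if_true + (1.0 - prior) * likelihood_if_false
    if evidence == 0.0:
        raise ValueError("evidence has probability zero; posterior is undefined")
    return prior * likelihood_if_true / evidence


# Hypothetical credences: the evidence is four times as likely if H is true.
prior = 0.3
posterior = conditionalize(prior, likelihood_if_true=0.8, likelihood_if_false=0.2)

# On the Bayesian account, E confirms H exactly when it raises H's probability.
confirms = posterior > prior
print(round(posterior, 4), confirms)  # 0.6316 True
```

If the two likelihoods were equal, the posterior would equal the prior and the evidence would be neutral with respect to the hypothesis.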