Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. Such machines may be called AIs.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.
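As a rough illustration of "learning from data and generalizing to unseen data", the sketch below fits a straight line to a handful of points with ordinary least squares and then predicts a value for an input that was not in the training set. The data and the function names `fit_line` and `predict` are made up for this example; real systems use far richer models and data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Toy training data (assumed for illustration), generated from y = 2x + 1.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.0, 3.0, 5.0, 7.0]

model = fit_line(train_x, train_y)

# x = 10 never appeared during training; the model generalizes from the pattern.
prediction = predict(model, 10.0)
print(prediction)
```

No rule such as "multiply by two and add one" was written by hand; the parameters were estimated from the examples alone.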

A facial recognition system is a technology potentially capable of matching a human face from a digital image or a video frame against a database of faces. Such a system is typically employed to authenticate users through ID verification services, and works by pinpointing and measuring facial features from a given image.
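The matching step can be sketched as a nearest-neighbor search over feature vectors. This is a simplified illustration under stated assumptions: each face is assumed to have already been reduced to a short numeric vector of measurements (the vectors, the names in `database`, and the `threshold` value are all invented here), and Euclidean distance is just one possible similarity measure.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_face(probe, database, threshold):
    """Return the closest enrolled identity, or None if nothing is close enough."""
    best_name, best_dist = None, float("inf")
    for name, vector in database.items():
        dist = euclidean(probe, vector)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None


# Hypothetical enrolled faces, each represented by three made-up measurements.
database = {
    "alice": [0.10, 0.90, 0.30],
    "bob": [0.80, 0.20, 0.50],
}

match = match_face([0.12, 0.88, 0.31], database, threshold=0.1)
no_match = match_face([0.50, 0.50, 0.90], database, threshold=0.1)
print(match, no_match)
```

The threshold is what turns "closest face" into an accept-or-reject decision: a probe that is far from every enrolled vector yields no match instead of a wrong identity.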

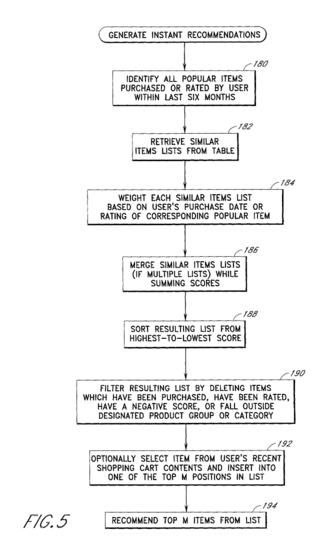

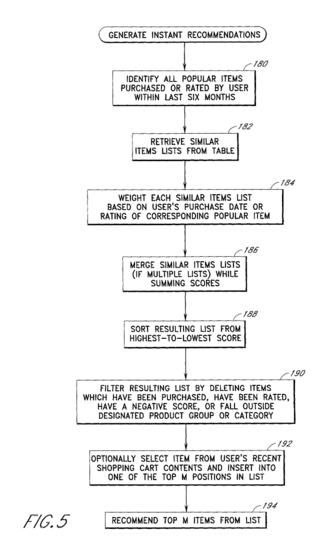

Predictive analytics is a form of business analytics applying machine learning to generate a predictive model for certain business applications. As such, it encompasses a variety of statistical techniques from predictive modeling and machine learning that analyze current and historical facts to make predictions about future or otherwise unknown events. It represents a major subset of machine learning applications; in some contexts, it is synonymous with machine learning.

Disease informatics (also infectious disease informatics) studies the knowledge production, sharing, modeling, and management of infectious diseases. The field has grown as a by-product of the rapid increase in widely available biomedical and clinical data, and in response to the demand for useful analyses of that data.

Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta. Originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data. The technology is based on neuroscience and the physiology and interaction of pyramidal neurons in the neocortex of the mammalian brain.

Artificial intelligence marketing (AIM) is a form of marketing that uses artificial intelligence concepts and models such as machine learning, natural language processing, and Bayesian networks to achieve marketing goals. The main difference between AIM and traditional forms of marketing resides in the reasoning, which is performed by a computer algorithm rather than a human.

Artificial intelligence (AI) has been used in applications throughout industry and academia. Similar to electricity or computers, AI serves as a general-purpose technology with numerous applications. These span language translation, image recognition, decision-making, credit scoring, e-commerce and various other domains. As a scientific discipline, AI encompasses technologies that equip machines to perceive, understand, act and learn.

In network theory, link analysis is a data-analysis technique used to evaluate relationships between nodes. Relationships may be identified among various types of nodes, including organizations, people and transactions. Link analysis has been used for investigation of criminal activity, computer security analysis, search engine optimization, market research, medical research, and art.
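One of the simplest link-analysis measures is a node's degree, the number of distinct nodes it is connected to. The sketch below computes it from an edge list. The node names and edges are invented for illustration, and degree is only one of many measures an analyst might apply.

```python
from collections import defaultdict


def degree_counts(edges):
    """Count distinct neighbors for every node in an undirected edge list."""
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    return {node: len(adjacent) for node, adjacent in neighbors.items()}


# Hypothetical relationships among an organization, two people and a transaction.
edges = [
    ("Acme Ltd", "J. Doe"),
    ("Acme Ltd", "txn-001"),
    ("J. Doe", "txn-001"),
    ("Acme Ltd", "R. Roe"),
]

degrees = degree_counts(edges)
most_connected = max(degrees, key=degrees.get)
print(degrees, most_connected)
```

Nodes with unusually high degree are often a natural starting point for closer inspection, since they tie together many otherwise separate entities.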

Deep learning is the subset of machine learning methods based on neural networks with representation learning. The adjective "deep" refers to the use of multiple layers in the network. Methods used can be either supervised, semi-supervised or unsupervised.
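To make "multiple layers" concrete, the following sketch passes an input through three stacked layers, each a weighted sum followed (except at the output) by a ReLU nonlinearity. The weights are hand-picked for illustration; in practice they would be learned from data, and the helper names `dense`, `relu` and `forward` are assumptions of this example.

```python
def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(weights, biases, inputs):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(layers, inputs):
    """Run inputs through every layer in order; ReLU on all but the last."""
    activation = inputs
    for index, (weights, biases) in enumerate(layers):
        activation = dense(weights, biases, activation)
        if index < len(layers) - 1:
            activation = relu(activation)
    return activation


# Three layers (2 -> 2 -> 2 -> 1) with made-up weights and biases.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.5]),
]

output = forward(layers, [2.0, 1.0])
print(output)
```

Each layer operates on the previous layer's output rather than on the raw input, which is what lets deeper networks build up intermediate representations.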

In the United States, the practice of predictive policing has been implemented by police departments in several states, including California, Washington, South Carolina, Alabama, Arizona, Tennessee, New York, and Illinois. Predictive policing refers to the use of mathematics, predictive analytics, and other analytical techniques in law enforcement to identify potential criminal activity. Predictive policing methods fall into four general categories: methods for predicting crimes, methods for predicting offenders, methods for predicting perpetrators' identities, and methods for predicting victims of crime.

Unanimous AI is an American technology company that provides artificial swarm intelligence (ASI) technology. Its "human swarming" platform, "swarm.ai", allows distributed groups of users to collectively predict answers to questions. This process has resulted in successful predictions of major events such as the Kentucky Derby, the Oscars, the Stanley Cup, presidential elections, and the World Series.

Artificial intelligence in healthcare is a term used to describe the use of machine-learning algorithms and software, or artificial intelligence (AI), to copy human cognition in the analysis, presentation, and understanding of complex medical and health care data, or to exceed human capabilities by providing new ways to diagnose, treat, or prevent disease. Specifically, AI is the ability of computer algorithms to arrive at approximate conclusions based solely on input data.

Explainable AI (XAI), often overlapping with interpretable AI or explainable machine learning (XML), refers either to an artificial intelligence (AI) system over which it is possible for humans to retain intellectual oversight, or to the methods used to achieve this. The main focus is usually on making the reasoning behind the AI's decisions or predictions more understandable and transparent. XAI counters the "black box" tendency of machine learning, where even the AI's designers cannot explain why it arrived at a specific decision.

Algorithmic bias describes systematic and repeatable errors in a computer system that create "unfair" outcomes, such as "privileging" one category over another in ways different from the intended function of the algorithm.
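One common way to check for such outcomes is to compare how often each group receives a favorable decision. The sketch below computes that gap, usually called the demographic parity difference. It is only one of several fairness measures, the choice of it here is an assumption of this example, and the group labels and decisions are invented.

```python
def selection_rate(decisions):
    """Fraction of favorable (1) decisions in a list of 0/1 outcomes."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(decisions_by_group):
    """Return the gap between the highest and lowest group selection rates."""
    rates = {
        group: selection_rate(decisions)
        for group, decisions in decisions_by_group.items()
    }
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical decisions (1 = approved, 0 = rejected) for two groups.
decisions_by_group = {
    "group_a": [1, 1, 1, 0],
    "group_b": [1, 0, 0, 0],
}

gap, rates = demographic_parity_difference(decisions_by_group)
print(rates, gap)
```

A gap of zero means every group is approved at the same rate. A large gap does not prove unfairness on its own, but it flags a systematic difference that warrants investigation.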

Merative L.P., formerly IBM Watson Health, is an American medical technology company that provides products and services that help clients facilitate medical research, clinical research, real-world evidence, and healthcare services, through the use of artificial intelligence, data analytics, cloud computing, and other advanced information technology. Merative is owned by Francisco Partners, an American private equity firm headquartered in San Francisco, California. In 2022, IBM divested and spun off its Watson Health division into Merative. As of 2023, it remains a standalone company headquartered in Ann Arbor with innovation centers in Hyderabad, Bengaluru, and Chennai.

Government by algorithm is an alternative form of government or social ordering in which computer algorithms are applied to regulations, law enforcement, and generally any aspect of everyday life such as transportation or land registration. The term "government by algorithm" appeared in academic literature in 2013 as an alternative to "algorithmic governance". A related term, algorithmic regulation, is defined as setting the standard, monitoring and modifying behaviour by means of computational algorithms; automation of the judiciary falls within its scope. In the context of blockchain, it is also known as blockchain governance.

Artificial intelligence (AI) in hiring involves the use of technology to automate aspects of the hiring process. Advances in artificial intelligence, such as the advent of machine learning and the growth of big data, enable AI to be utilized to recruit, screen, and predict the success of applicants. Proponents of artificial intelligence in hiring claim it reduces bias, assists with finding qualified candidates, and frees up human resource workers' time for other tasks, while opponents worry that AI perpetuates inequalities in the workplace and will eliminate jobs. Despite the potential benefits, the ethical implications of AI in hiring remain a subject of debate, with concerns about algorithmic transparency, accountability, and the need for ongoing oversight to ensure fair and unbiased decision-making throughout the recruitment process.

Himabindu "Hima" Lakkaraju is an Indian-American computer scientist who works on machine learning, artificial intelligence, algorithmic bias, and AI accountability. She is currently an Assistant Professor at the Harvard Business School and is also affiliated with the Department of Computer Science at Harvard University. Lakkaraju is known for her work on explainable machine learning. More broadly, her research focuses on developing machine learning models and algorithms that are interpretable, transparent, fair, and reliable. She also investigates the practical and ethical implications of deploying machine learning models in domains involving high-stakes decisions such as healthcare, criminal justice, business, and education. Lakkaraju was named as one of the world's top Innovators Under 35 by both Vanity Fair and the MIT Technology Review.

Automated decision-making (ADM) involves the use of data, machines and algorithms to make decisions in a range of contexts, including public administration, business, health, education, law, employment, transport, media and entertainment, with varying degrees of human oversight or intervention. ADM involves large-scale data from a range of sources, such as databases, text, social media, sensors, images or speech, that is processed using various technologies including computer software, algorithms, machine learning, natural language processing, artificial intelligence, augmented intelligence and robotics. The increasing use of automated decision-making systems (ADMS) across a range of contexts presents many benefits and challenges to human society requiring consideration of the technical, legal, ethical, societal, educational, economic and health consequences.