In computing, a compiler is a computer program that translates computer code written in one programming language into another language. The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language to create an executable program.

In computing, object code or object module is the product of an assembler or compiler.

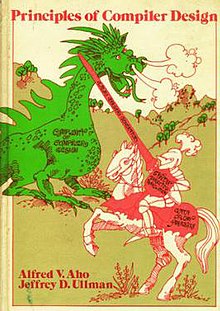

The Dragon Book may refer to:

In computer science, a recursive descent parser is a kind of top-down parser built from a set of mutually recursive procedures where each such procedure implements one of the nonterminals of the grammar. Thus the structure of the resulting program closely mirrors that of the grammar it recognizes.

Alfred Vaino Aho is a Canadian computer scientist best known for his work on programming languages, compilers, and related algorithms, and his textbooks on the art and science of computer programming.

Compilers: Principles, Techniques, and Tools is a computer science textbook by Alfred V. Aho, Monica S. Lam, Ravi Sethi, and Jeffrey D. Ullman about compiler construction for programming languages. First published in 1986, it is widely regarded as the classic definitive compiler technology text.

In computing, code generation is part of the process chain of a compiler, in which an intermediate representation of source code is converted into a form that can be readily executed by the target system.

In compiler construction, a basic block is a straight-line code sequence with no branches in except to the entry and no branches out except at the exit. This restricted form makes a basic block highly amenable to analysis. Compilers usually decompose programs into their basic blocks as a first step in the analysis process. Basic blocks form the vertices or nodes in a control-flow graph.

In computer science, three-address code is an intermediate code used by optimizing compilers to aid in the implementation of code-improving transformations. Each TAC instruction has at most three operands and is typically a combination of assignment and a binary operator. For example, t1 := t2 + t3. The name derives from the use of three operands in these statements even though instructions with fewer operands may occur.

Jeffrey David Ullman is an American computer scientist and the Stanford W. Ascherman Professor of Engineering, Emeritus, at Stanford University. His textbooks on compilers, theory of computation, data structures, and databases are regarded as standards in their fields. He and his long-time collaborator Alfred Aho are the recipients of the 2020 Turing Award, generally recognized as the highest distinction in computer science.

In compiler theory, copy propagation is the process of replacing the occurrences of targets of direct assignments with their values. A direct assignment is an instruction of the form x = y, which simply assigns the value of y to x.

An operator precedence grammar is a kind of grammar for formal languages.

Peephole optimization is an optimization technique performed on a small set of compiler-generated instructions, known as a peephole or window, that involves replacing the instructions with a logically equivalent set that has better performance.

In computer science, an induction variable is a variable that gets increased or decreased by a fixed amount on every iteration of a loop or is a linear function of another induction variable.

The Amsterdam Compiler Kit (ACK) is a retargetable compiler suite and toolchain written by Andrew Tanenbaum and Ceriel Jacobs, since 2005 maintained by David Given. It has frontends for the following programming languages: C, Pascal, Modula-2, Occam, and BASIC.

Monica Sin-Ling Lam is an American computer scientist. She is a professor in the Computer Science Department at Stanford University.

In computing, compiler correctness is the branch of computer science that deals with trying to show that a compiler behaves according to its language specification. Techniques include developing the compiler using formal methods and using rigorous testing on an existing compiler.

A lookahead LR parser (LALR) generator is a software tool that reads a context-free grammar (CFG) and creates an LALR parser which is capable of parsing files written in the context-free language defined by the CFG. LALR parsers are desirable because they are very fast and small in comparison to other types of parsers.

Charm is a computer programming language devised in the early 1990s with similarities to the RTL/2, Pascal and C languages in addition to containing some unique features of its own. The Charm language is defined by a context-free grammar amenable to being processed by recursive descent parser as described in seminal books on compiler design.

In computer science, Thompson's construction algorithm, also called the McNaughton–Yamada–Thompson algorithm, is a method of transforming a regular expression into an equivalent nondeterministic finite automaton (NFA). This NFA can be used to match strings against the regular expression. This algorithm is credited to Ken Thompson.