Related Research Articles

Causality is the influence by which one event, process, state, or object contributes to the production of another event, process, state, or object, where the cause is partly responsible for the effect and the effect is partly dependent on the cause. In general, a process has many causes, which are also said to be causal factors for it, and all lie in its past. An effect can in turn be a cause of, or causal factor for, many other effects, which all lie in its future. Some writers have held that causality is metaphysically prior to notions of time and space.

The phrase "correlation does not imply causation" refers to the inability to legitimately deduce a cause-and-effect relationship between two events or variables solely on the basis of an observed association or correlation between them. The idea that "correlation implies causation" is an example of a questionable-cause logical fallacy, in which two events occurring together are taken to have established a cause-and-effect relationship. This fallacy is also known by the Latin phrase cum hoc ergo propter hoc. This differs from the fallacy known as post hoc ergo propter hoc, in which an event following another is seen as a necessary consequence of the former event, and from conflation, the errant merging of two events, ideas, databases, etc., into one.

Determinism is the philosophical view that all events are determined completely by previously existing causes. Deterministic theories throughout the history of philosophy have developed from diverse and sometimes overlapping motives and considerations. The opposite of determinism is some kind of indeterminism or randomness. Determinism is often contrasted with free will, although some philosophers claim that the two are compatible.

Causality is the relationship between causes and effects. While causality is also a topic studied from the perspectives of philosophy and physics, it is operationalized so that causes of an event must be in the past light cone of the event and ultimately reducible to fundamental interactions. Similarly, a cause cannot have an effect outside its future light cone.
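
The light-cone condition can be written as a simple check. The sketch below is illustrative only: it assumes flat spacetime, a single inertial frame, and events given as hypothetical `(t, x, y, z)` tuples in seconds and metres; the function name `can_influence` is not taken from any library.

```python
C = 299_792_458.0  # speed of light in metres per second


def can_influence(cause, effect, c=C):
    """Return True if `effect` lies in or on the future light cone of `cause`.

    Both events are (t, x, y, z) tuples measured in one inertial frame
    (an assumption of this sketch).
    """
    dt = effect[0] - cause[0]
    # Squared spatial distance between the two events.
    dist_sq = sum((e - k) ** 2 for e, k in zip(effect[1:], cause[1:]))
    # The effect must come later, and a signal no faster than light
    # must be able to cover the spatial separation in the time available.
    return dt > 0 and (c * dt) ** 2 >= dist_sq


origin = (0.0, 0.0, 0.0, 0.0)
print(can_influence(origin, (1.0, 1.0e8, 0.0, 0.0)))  # inside the cone
print(can_influence(origin, (1.0, 4.0e8, 0.0, 0.0)))  # outside the cone
```

An event one second later but 4 × 10^8 m away falls outside the cone, because light covers only about 3 × 10^8 m in that time.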

Indeterminism is the idea that events are not caused, or do not cause deterministically.

Libertarianism is one of the main philosophical positions related to the problems of free will and determinism, which are part of the larger domain of metaphysics. In particular, libertarianism is an incompatibilist position which argues that free will is logically incompatible with a deterministic universe. Libertarianism states that since agents have free will, determinism must be false.

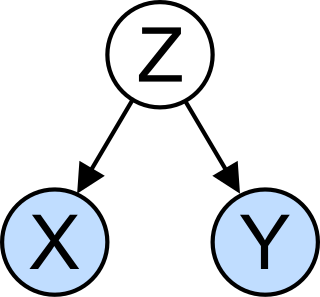

The Markov condition, sometimes called the Markov assumption, is an assumption made in Bayesian probability theory, that every node in a Bayesian network is conditionally independent of its nondescendants, given its parents. Stated loosely, it is assumed that a node has no bearing on nodes which do not descend from it. In a DAG, this local Markov condition is equivalent to the global Markov condition, which states that d-separations in the graph also correspond to conditional independence relations. This also means that a node is conditionally independent of the entire network, given its Markov blanket.
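
As a minimal sketch of the local Markov condition, the example below builds a three-node chain A → B → C from made-up conditional probability tables and checks by exact enumeration that C is independent of its nondescendant A once its parent B is given. The network, the numbers, and the helper names are all assumptions chosen for illustration.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical probability tables for the chain A -> B -> C (binary variables).
p_a = {1: F(3, 10), 0: F(7, 10)}          # P(A = a)
p_b1_given_a = {1: F(4, 5), 0: F(1, 5)}   # P(B = 1 | A = a)
p_c1_given_b = {1: F(9, 10), 0: F(1, 10)} # P(C = 1 | B = b)


def bern(p_one, value):
    """Probability of `value` for a binary variable with P(1) = p_one."""
    return p_one if value == 1 else 1 - p_one


def joint(a, b, c):
    """Joint probability from the Bayesian-network factorization."""
    return p_a[a] * bern(p_b1_given_a[a], b) * bern(p_c1_given_b[b], c)


def prob(**fixed):
    """Marginal probability of a partial assignment, e.g. prob(a=1, c=0)."""
    total = F(0)
    for a, b, c in product((0, 1), repeat=3):
        values = {"a": a, "b": b, "c": c}
        if all(values[k] == v for k, v in fixed.items()):
            total += joint(a, b, c)
    return total


# Local Markov condition for C: P(C=1 | A=a, B=b) equals P(C=1 | B=b).
markov_holds = all(
    prob(c=1, a=a, b=b) / prob(a=a, b=b) == prob(c=1, b=b) / prob(b=b)
    for a, b in product((0, 1), repeat=2)
)
# Without conditioning on B, A and C are still dependent.
marginally_dependent = (
    prob(c=1, a=1) / prob(a=1) != prob(c=1, a=0) / prob(a=0)
)
print(markov_holds, marginally_dependent)
```

Exact fractions are used so that the equality check is not affected by floating-point rounding.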

The Granger causality test is a statistical hypothesis test for determining whether one time series is useful in forecasting another, first proposed in 1969. Ordinarily, regressions reflect "mere" correlations, but Clive Granger argued that causality in economics could be tested for by measuring the ability to predict the future values of a time series using prior values of another time series. Since the question of "true causality" is deeply philosophical, and because of the post hoc ergo propter hoc fallacy of assuming that one thing preceding another can be used as a proof of causation, econometricians assert that the Granger test finds only "predictive causality". Using the term "causality" alone is a misnomer, as Granger-causality is better described as "precedence", or, as Granger himself later claimed in 1977, "temporally related". Rather than testing whether X causes Y, the Granger causality test checks whether X forecasts Y.
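
One common way to write the test down, given here as a sketch rather than as Granger's original notation, is as a regression of Y on its own lags and on lags of X. The lag lengths p and q, the coefficients a_i and b_j, and the error term are symbols introduced for this illustration, and the series are assumed to be stationary.

```latex
y_t = a_0 + \sum_{i=1}^{p} a_i \, y_{t-i} + \sum_{j=1}^{q} b_j \, x_{t-j} + \varepsilon_t,
\qquad
H_0 : b_1 = b_2 = \dots = b_q = 0
```

If the null hypothesis is rejected, typically by an F-test comparing this regression with the one that omits the lagged x terms, X is said to Granger-cause Y: past values of X improve the forecast of Y beyond what past values of Y already provide.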

The deductive-nomological model of scientific explanation, also known as Hempel's model, the Hempel–Oppenheim model, the Popper–Hempel model, or the covering law model, is a formal view of scientifically answering questions asking, "Why...?". The DN model poses scientific explanation as a deductive structure, one where truth of its premises entails truth of its conclusion, hinged on accurate prediction or postdiction of the phenomenon to be explained.

Wesley Charles Salmon was an American philosopher of science renowned for his work on the nature of scientific explanation. He also worked on confirmation theory, trying to explicate how probability theory via inductive logic might help confirm and choose hypotheses. Yet most prominently, Salmon was a realist about causality in scientific explanation, although his realist explanation of causality drew ample criticism. Still, his books on scientific explanation itself were landmarks of the 20th century's philosophy of science, and solidified recognition of causality's important roles in scientific explanation, whereas causality itself has evaded satisfactory elucidation by anyone.

In statistics, a confounder is a variable that influences both the dependent variable and the independent variable, causing a spurious association. Confounding is a causal concept and, as such, cannot be described in terms of correlations or associations. The existence of confounders is an important quantitative explanation of why correlation does not imply causation.
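
A small simulation can make the spurious association concrete. In the sketch below, a hypothetical variable `z` drives both `x` and `y`, while `x` has no effect on `y` at all; the linear Gaussian model, the sample size, and the seed are assumptions chosen only for illustration.

```python
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def residuals(vs, zs):
    """Residuals of a least-squares line fit of vs on zs."""
    n = len(zs)
    mz, mv = sum(zs) / n, sum(vs) / n
    slope = (sum((z - mz) * (v - mv) for z, v in zip(zs, vs))
             / sum((z - mz) ** 2 for z in zs))
    return [(v - mv) - slope * (z - mz) for z, v in zip(zs, vs)]


rng = random.Random(0)
n = 20_000
zs = [rng.gauss(0, 1) for _ in range(n)]   # the confounder
xs = [z + rng.gauss(0, 1) for z in zs]     # x depends on z only
ys = [z + rng.gauss(0, 1) for z in zs]     # y depends on z only, not on x

raw = pearson(xs, ys)
# Correlate what is left of x and y after removing the linear effect of z.
adjusted = pearson(residuals(xs, zs), residuals(ys, zs))
print(f"raw correlation:               {raw:.2f}")
print(f"correlation after adjusting z: {adjusted:.2f}")
```

Under this model the raw correlation is 0.5 in expectation even though `x` never influences `y`, and it shrinks toward zero once `z` is accounted for.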

In the philosophy of science, a causal model is a conceptual model that describes the causal mechanisms of a system. Causal models can improve study designs by providing clear rules for deciding which independent variables need to be included/controlled for.

Causal reasoning is the process of identifying causality: the relationship between a cause and its effect. The study of causality extends from ancient philosophy to contemporary neuropsychology; assumptions about the nature of causality may be shown to be functions of a previous event preceding a later one. The first known protoscientific study of cause and effect occurred in Aristotle's Physics. Causal inference is an example of causal reasoning.

Causation refers to the existence of "cause and effect" relationships between multiple variables. Causation presumes that variables, which act in a predictable manner, can produce change in related variables and that this relationship can be deduced through direct and repeated observation. Theories of causation underpin social research as it aims to deduce causal relationships between structural phenomena and individuals and explain these relationships through the application and development of theory. Because theoretical and methodological approaches diverge, different theories, functionalism among them, maintain varying conceptions of the nature of causality and causal relationships. Similarly, the multiplicity of causes has led to the distinction between necessary and sufficient causes.

Causal analysis is the field of experimental design and statistics pertaining to establishing cause and effect. Typically it involves establishing four elements: correlation, sequence in time, a plausible physical or information-theoretical mechanism for an observed effect to follow from a possible cause, and eliminating the possibility of common and alternative ("special") causes. Such analysis usually involves one or more artificial or natural experiments.

The Bradford Hill criteria, otherwise known as Hill's criteria for causation, are a group of nine principles that can be useful in establishing epidemiologic evidence of a causal relationship between a presumed cause and an observed effect and have been widely used in public health research. They were established in 1965 by the English epidemiologist Sir Austin Bradford Hill.

Causal inference is the process of determining the independent, actual effect of a particular phenomenon that is a component of a larger system. The main difference between causal inference and inference of association is that causal inference analyzes the response of an effect variable when a cause of the effect variable is changed. The science of why things occur is called etiology. Causal inference is said to provide the evidence of causality theorized by causal reasoning.
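
The difference between observing an association and changing a cause can be sketched with a toy model. Everything in the example below is an assumption made for illustration: the hidden variable `z`, the linear outcome model, and the constant `TRUE_EFFECT`. It compares a difference in means from passively observed data with one from data in which the cause is set by a coin flip.

```python
import random

TRUE_EFFECT = 2.0  # hypothetical effect of x on y in this toy model


def mean(values):
    return sum(values) / len(values)


def difference_in_means(n, randomized, seed=0):
    """Mean of y among units with x = 1 minus mean of y among units with x = 0."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)                  # hidden common cause
        if randomized:
            x = rng.random() < 0.5           # intervention: x set by coin flip
        else:
            x = z + rng.gauss(0, 1) > 0      # observation: x depends on z
        y = TRUE_EFFECT * x + 3.0 * z + rng.gauss(0, 1)
        (treated if x else control).append(y)
    return mean(treated) - mean(control)


observed = difference_in_means(50_000, randomized=False)
intervened = difference_in_means(50_000, randomized=True)
print(f"observational estimate:  {observed:.2f}")
print(f"interventional estimate: {intervened:.2f}")
```

In this model the observational estimate overstates the effect because `z` raises both the chance that `x` is 1 and the value of `y`, while the randomized estimate should land close to `TRUE_EFFECT`.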

The discipline of forensic epidemiology (FE) is a hybrid of principles and practices common to both forensic medicine and epidemiology. FE is directed at filling the gap between clinical judgment and epidemiologic data for determinations of causality in civil lawsuits and criminal prosecution and defense.

Causal analysis is the field of experimental design and statistics pertaining to establishing cause and effect. Exploratory causal analysis (ECA), also known as data causality or causal discovery, is the use of statistical algorithms to infer associations in observed data sets that are potentially causal under strict assumptions. ECA is a type of causal inference distinct from causal modeling and treatment effects in randomized controlled trials. It is exploratory research usually preceding more formal causal research, in the same way that exploratory data analysis often precedes statistical hypothesis testing in data analysis.

The Book of Why: The New Science of Cause and Effect is a 2018 nonfiction book by computer scientist Judea Pearl and writer Dana Mackenzie. The book explores the subject of causality and causal inference from statistical and philosophical points of view for a general audience.
