Fuzzy logic is a form of many-valued logic in which the truth value of variables may be any real number between 0 and 1. It is employed to handle the concept of partial truth, where the truth value may range between completely true and completely false. By contrast, in Boolean logic, the truth values of variables may only be the integer values 0 or 1.
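As a minimal sketch of partial truth, the snippet below represents truth values as floats in [0, 1]. The min/max/complement operators are one common choice (often attributed to Zadeh) and are an assumption here, as are the example propositions and their degrees; the text above does not fix any particular operators.

```python
# Truth values are floats in [0, 1]. The operators below are the
# min/max/complement choice, assumed here for illustration only.

def fuzzy_and(a: float, b: float) -> float:
    """Conjunction as the minimum of the two truth degrees."""
    return min(a, b)

def fuzzy_or(a: float, b: float) -> float:
    """Disjunction as the maximum of the two truth degrees."""
    return max(a, b)

def fuzzy_not(a: float) -> float:
    """Negation as the complement with respect to 1."""
    return 1.0 - a

warm = 0.7   # hypothetical degree to which "the room is warm" holds
humid = 0.4  # hypothetical degree to which "the room is humid" holds

warm_and_humid = fuzzy_and(warm, humid)
warm_or_humid = fuzzy_or(warm, humid)
not_warm = fuzzy_not(warm)
```

Restricting the inputs to exactly 0 and 1 makes these operators agree with the Boolean truth tables, which is the sense in which Boolean logic is the special case.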

Uncertainty or incertitude refers to epistemic situations involving imperfect or unknown information. It applies to predictions of future events, to physical measurements that have already been made, or to the unknown. Uncertainty arises in partially observable or stochastic environments, as well as due to ignorance, indolence, or both. It arises in any number of fields, including insurance, philosophy, physics, statistics, economics, finance, medicine, psychology, sociology, engineering, metrology, meteorology, ecology and information science.

The theory of belief functions, also referred to as evidence theory or Dempster–Shafer theory (DST), is a general framework for reasoning with uncertainty, with understood connections to other frameworks such as probability, possibility and imprecise probability theories. First introduced by Arthur P. Dempster in the context of statistical inference, the theory was later developed by Glenn Shafer into a general framework for modeling epistemic uncertainty—a mathematical theory of evidence. The theory allows one to combine evidence from different sources and arrive at a degree of belief that takes into account all the available evidence.
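Combining evidence from two sources can be sketched with Dempster's rule of combination. The representation below (mass functions as dictionaries keyed by `frozenset`), the function names, and the weather example with its numbers are all illustrative assumptions, not part of the text above.

```python
from itertools import product

def combine(m1, m2):
    """Dempster's rule for two mass functions keyed by frozenset."""
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass assigned to contradictory pairs
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalize so the remaining masses sum to 1.
    return {s: w / (1.0 - conflict) for s, w in combined.items()}

def belief(m, hypothesis):
    """Total mass of focal sets contained in the hypothesis."""
    return sum(w for s, w in m.items() if s <= hypothesis)

def plausibility(m, hypothesis):
    """Total mass of focal sets that intersect the hypothesis."""
    return sum(w for s, w in m.items() if s & hypothesis)

# Hypothetical frame of discernment and two hypothetical sources.
RAIN, DRY = frozenset({"rain"}), frozenset({"dry"})
FRAME = RAIN | DRY
source_1 = {RAIN: 0.6, FRAME: 0.4}
source_2 = {RAIN: 0.5, DRY: 0.2, FRAME: 0.3}

fused = combine(source_1, source_2)
bel_rain = belief(fused, RAIN)
pl_rain = plausibility(fused, RAIN)
```

Belief and plausibility bracket the support for a hypothesis from below and above; mass left on the whole frame represents evidence that does not discriminate between outcomes.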

In logic, a predicate is a symbol that represents a property or a relation. For instance, in the first-order formula P(a), the symbol P is a predicate that applies to the individual constant a. Similarly, in the formula R(a, b), the symbol R is a predicate that applies to the individual constants a and b.

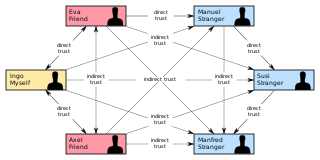

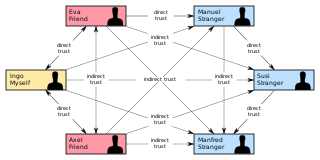

In psychology and sociology, a trust metric is a measurement or metric of the degree to which one social actor trusts another social actor. Trust metrics may be abstracted in a manner that can be implemented on computers, making them of interest for the study and engineering of virtual communities, such as Friendster and LiveJournal.

Possibility theory is a mathematical theory for dealing with certain types of uncertainty and is an alternative to probability theory. It uses measures of possibility and necessity between 0 and 1, ranging from impossible to possible and unnecessary to necessary, respectively. Professor Lotfi Zadeh first introduced possibility theory in 1978 as an extension of his theory of fuzzy sets and fuzzy logic. Didier Dubois and Henri Prade further contributed to its development. Earlier, in the 1950s, economist G. L. S. Shackle proposed the min/max algebra to describe degrees of potential surprise.
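The relationship between the two measures can be sketched on a finite set of outcomes: the possibility of an event is the largest possibility degree among its outcomes, and its necessity is one minus the possibility of the complementary event. The distribution, outcome names, and function names below are hypothetical.

```python
def possibility(pi, event):
    """Possibility of an event: the maximum degree over its outcomes."""
    return max((pi[x] for x in event), default=0.0)

def necessity(pi, event):
    """Necessity of an event: 1 minus the possibility of its complement."""
    complement = set(pi) - set(event)
    return 1.0 - possibility(pi, complement)

# Hypothetical possibility distribution; at least one outcome is fully possible.
pi = {"sunny": 1.0, "cloudy": 0.7, "rainy": 0.2}

no_rain = {"sunny", "cloudy"}
poss_no_rain = possibility(pi, no_rain)
nec_no_rain = necessity(pi, no_rain)
poss_rain = possibility(pi, {"rainy"})
nec_rain = necessity(pi, {"rainy"})
```

Unlike probabilities, the possibility of an event and of its complement need not sum to 1; here "no rain" is fully possible while "rain" still has possibility 0.2.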

A Markov logic network (MLN) is a probabilistic logic which applies the ideas of a Markov network to first-order logic, defining probability distributions on possible worlds on any given domain.
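A brute-force sketch of the idea, assuming the usual log-linear form in which a possible world's probability is proportional to the exponential of the weighted count of true formula groundings. The domain, the single formula Smokes(x) => Cancer(x), and its weight are hypothetical, and enumeration like this is only feasible for tiny domains.

```python
from itertools import product
from math import exp

domain = ["anna", "bob"]  # hypothetical constants
weight = 1.5              # hypothetical weight of Smokes(x) => Cancer(x)

# Ground atoms: one truth value per predicate and constant.
atoms = [(pred, c) for pred in ("Smokes", "Cancer") for c in domain]

def count_true_groundings(world):
    """Number of constants c for which Smokes(c) => Cancer(c) holds."""
    return sum(
        1 for c in domain
        if (not world[("Smokes", c)]) or world[("Cancer", c)]
    )

# Enumerate every possible world (truth assignment to all ground atoms).
worlds = [dict(zip(atoms, values))
          for values in product([False, True], repeat=len(atoms))]
scores = [exp(weight * count_true_groundings(w)) for w in worlds]
Z = sum(scores)  # partition function
probabilities = [s / Z for s in scores]

def query(condition):
    """Probability that a condition on worlds holds."""
    return sum(p for w, p in zip(worlds, probabilities) if condition(w))

p_rule_holds_for_anna = query(
    lambda w: (not w[("Smokes", "anna")]) or w[("Cancer", "anna")]
)
```

Worlds that violate the formula are not impossible, only less probable; raising the weight pushes the distribution toward worlds that satisfy it.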

Probabilistic logic involves the use of probability and logic to deal with uncertain situations. Probabilistic logic extends traditional logic truth tables with probabilistic expressions. A difficulty of probabilistic logics is their tendency to multiply the computational complexities of their probabilistic and logical components. Other difficulties include the possibility of counter-intuitive results, such as in the case of belief fusion in Dempster–Shafer theory. Source trust and epistemic uncertainty about the probabilities that sources provide, as defined in subjective logic, are additional elements to consider. The need to deal with a broad variety of contexts and issues has led to many different proposals.

Subjective logic is a type of probabilistic logic that explicitly takes epistemic uncertainty and source trust into account. In general, subjective logic is suitable for modeling and analysing situations involving uncertainty and relatively unreliable sources. For example, it can be used for modeling and analysing trust networks and Bayesian networks.
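One way to picture explicit epistemic uncertainty is the binomial opinion commonly used in subjective logic: belief, disbelief, and uncertainty masses that sum to 1, plus a base rate, with projected probability equal to belief plus base rate times uncertainty. The class and field names below are illustrative assumptions, and the numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Opinion:
    """Binomial opinion about a proposition (illustrative sketch)."""
    belief: float
    disbelief: float
    uncertainty: float
    base_rate: float = 0.5  # prior probability absent any evidence

    def __post_init__(self):
        total = self.belief + self.disbelief + self.uncertainty
        if abs(total - 1.0) > 1e-9:
            raise ValueError("belief + disbelief + uncertainty must equal 1")

    def projected_probability(self) -> float:
        """Belief plus the share of uncertainty allotted by the base rate."""
        return self.belief + self.base_rate * self.uncertainty

# Hypothetical opinion from a moderately reliable source.
informed = Opinion(belief=0.6, disbelief=0.1, uncertainty=0.3)
# A vacuous opinion: no evidence at all, so only the base rate matters.
vacuous = Opinion(belief=0.0, disbelief=0.0, uncertainty=1.0)

p_informed = informed.projected_probability()
p_vacuous = vacuous.projected_probability()
```

Two opinions can project to the same probability while carrying very different uncertainty, which is the information an ordinary probability value discards.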

Ben Goertzel is a computer scientist, artificial intelligence researcher, and businessman. He helped popularize the term 'artificial general intelligence'.

A semantic reasoner, reasoning engine, rules engine, or simply a reasoner, is a piece of software able to infer logical consequences from a set of asserted facts or axioms. The notion of a semantic reasoner generalizes that of an inference engine, by providing a richer set of mechanisms to work with. The inference rules are commonly specified by means of an ontology language, and often a description logic language. Many reasoners use first-order predicate logic to perform reasoning; inference commonly proceeds by forward chaining and backward chaining. There are also examples of probabilistic reasoners, including non-axiomatic reasoning systems, and probabilistic logic networks.
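Forward chaining can be sketched in a few lines for ground (variable-free) rules: keep applying rules whose premises are all known until nothing new is derived. The rule format, fact strings, and function name are hypothetical; real reasoners work over ontology languages and handle variables, which this sketch does not.

```python
def forward_chain(facts, rules):
    """Derive all consequences of `facts` under ground if-then `rules`.

    Each rule is a pair (premises, conclusion), where premises is a
    frozenset of facts that must all be known before the rule fires.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True  # a new fact may enable further rules
    return known

# Hypothetical rule base and asserted facts.
rules = [
    (frozenset({"bird(tweety)"}), "has_wings(tweety)"),
    (frozenset({"has_wings(tweety)", "healthy(tweety)"}), "can_fly(tweety)"),
]
facts = {"bird(tweety)", "healthy(tweety)"}

inferred = forward_chain(facts, rules)
```

Backward chaining would instead start from a goal such as `can_fly(tweety)` and search for rules whose conclusions match it, recursing on their premises.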

A valuation-based system (VBS) is a framework for knowledge representation and inference. Real-world problems are modeled in this framework by a network of interrelated entities, called variables. The relationships between variables are represented by functions called valuations. The two basic operations for performing inference in a VBS are combination and marginalization. Combination corresponds to the aggregation of knowledge, while marginalization refers to the focusing (coarsening) of it. VBSs were introduced by Prakash P. Shenoy in 1989 as general frameworks for managing uncertainty in expert systems.
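The two operations can be illustrated for one particular instance, assumed here: valuations that are probability tables, where combination is pointwise multiplication and marginalization is summing out variables. The `Valuation` class, the function signatures, and the rain example are hypothetical; other uncertainty calculi would supply different definitions of the same two operations.

```python
from dataclasses import dataclass
from itertools import product

@dataclass
class Valuation:
    variables: tuple  # variable names, in table-key order
    table: dict       # maps a tuple of values to a non-negative number

def combine(v1, v2, domains):
    """Aggregate knowledge: pointwise product on the union of variables."""
    extra = tuple(x for x in v2.variables if x not in v1.variables)
    variables = v1.variables + extra
    table = {}
    for values in product(*(domains[x] for x in variables)):
        assignment = dict(zip(variables, values))
        key1 = tuple(assignment[x] for x in v1.variables)
        key2 = tuple(assignment[x] for x in v2.variables)
        table[values] = v1.table[key1] * v2.table[key2]
    return Valuation(variables, table)

def marginalize(v, keep):
    """Focus knowledge: sum out every variable not in `keep`."""
    kept = tuple(x for x in v.variables if x in keep)
    idx = [v.variables.index(x) for x in kept]
    table = {}
    for values, weight in v.table.items():
        key = tuple(values[i] for i in idx)
        table[key] = table.get(key, 0.0) + weight
    return Valuation(kept, table)

# Hypothetical two-variable network.
domains = {"rain": (True, False), "wet": (True, False)}
prior = Valuation(("rain",), {(True,): 0.2, (False,): 0.8})
conditional = Valuation(("rain", "wet"), {
    (True, True): 0.9, (True, False): 0.1,
    (False, True): 0.1, (False, False): 0.9,
})

joint = combine(prior, conditional, domains)
wet = marginalize(joint, {"wet"})
```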

OpenCog is a project that aims to build an open source artificial intelligence framework. OpenCog Prime is an architecture for robot and virtual embodied cognition that defines a set of interacting components designed to give rise to human-equivalent artificial general intelligence (AGI) as an emergent phenomenon of the whole system. OpenCog Prime's design is primarily the work of Ben Goertzel while the OpenCog framework is intended as a generic framework for broad-based AGI research. Research utilizing OpenCog has been published in journals and presented at conferences and workshops including the annual Conference on Artificial General Intelligence. OpenCog is released under the terms of the GNU Affero General Public License.

Uncertain inference was first described by C. J. van Rijsbergen as a way to formally define the relationship between a query and a document in information retrieval. This formalization is a logical implication with an attached measure of uncertainty.

In information technology, a reasoning system is a software system that generates conclusions from available knowledge using logical techniques such as deduction and induction. Reasoning systems play an important role in the implementation of artificial intelligence and knowledge-based systems.

Probabilistic Soft Logic (PSL) is a statistical relational learning (SRL) framework for modeling probabilistic and relational domains. It is applicable to a variety of machine learning problems, such as collective classification, entity resolution, link prediction, and ontology alignment. PSL combines two tools: first-order logic, with its ability to succinctly represent complex phenomena, and probabilistic graphical models, which capture the uncertainty and incompleteness inherent in real-world knowledge. More specifically, PSL uses "soft" logic as its logical component and Markov random fields as its statistical model. PSL provides sophisticated inference techniques for finding the most likely answer (i.e. the maximum a posteriori (MAP) state). The "softening" of the logical formulas makes inference a polynomial-time operation rather than an NP-hard one.
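The "softening" can be sketched with the Łukasiewicz relaxation of the logical connectives, under which atoms take values in [0, 1] and a rule's violation becomes a hinge-shaped, convex function of those values. The function names, the example rule, and the truth values below are hypothetical and do not use the PSL software's own API.

```python
def luk_and(a: float, b: float) -> float:
    """Lukasiewicz conjunction."""
    return max(0.0, a + b - 1.0)

def luk_or(a: float, b: float) -> float:
    """Lukasiewicz disjunction."""
    return min(1.0, a + b)

def luk_not(a: float) -> float:
    """Lukasiewicz negation."""
    return 1.0 - a

def distance_to_satisfaction(body: float, head: float) -> float:
    """How far the rule body -> head is from being satisfied (0 if satisfied)."""
    return max(0.0, body - head)

# Hypothetical soft truth values for the rule
#   Friends(a, b) AND VotesFor(a, p) -> VotesFor(b, p)
friends = 0.9
a_votes = 0.8
b_votes = 0.3

body = luk_and(friends, a_votes)
penalty = distance_to_satisfaction(body, b_votes)
```

Because each penalty is a maximum of linear functions of the atom values, a weighted sum of such penalties is convex, which is what allows MAP inference to be posed as a convex optimization problem.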

Knowledge crystals are web-based information objects that are used in scientific information production. In particular, they are used in open assessments designed to support societal decisions. They act as current best answers to specific research questions. They are produced and distributed openly using crowdsourcing and scientific criticism.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

Probabilistic logic programming is a programming paradigm that combines logic programming with probabilities.