Related Research Articles

Bash is a Unix shell and command language written by Brian Fox for the GNU Project as a free software replacement for the Bourne shell. First released in 1989, it has been used as the default login shell for most Linux distributions. Bash was one of the first programs Linus Torvalds ported to Linux, alongside GCC. A version is also available for Windows 10 and Windows 11 via the Windows Subsystem for Linux. It is also the default user shell in Solaris 11. Bash was also the default shell in versions of Apple macOS from 10.3 to the 2019 release of macOS Catalina, which changed the default shell to zsh, although Bash remains available as an alternative shell.

A shell script is a computer program designed to be run by a Unix shell, a command-line interpreter. The various dialects of shell scripts are considered to be scripting languages. Typical operations performed by shell scripts include file manipulation, program execution, and printing text. A script which sets up the environment, runs the program, and does any necessary cleanup or logging, is called a wrapper.

The Bourne shell (sh) is a shell command-line interpreter for computer operating systems.

The C shell is a Unix shell created by Bill Joy while he was a graduate student at University of California, Berkeley in the late 1970s. It has been widely distributed, beginning with the 2BSD release of the Berkeley Software Distribution (BSD) which Joy first distributed in 1978. Other early contributors to the ideas or the code were Michael Ubell, Eric Allman, Mike O'Brien and Jim Kulp.

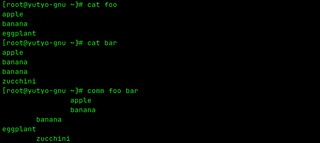

The comm command in the Unix family of computer operating systems is a utility that is used to compare two files for common and distinct lines. comm is specified in the POSIX standard. It has been widely available on Unix-like operating systems since the mid to late 1980s.

xargs is a command on Unix and most Unix-like operating systems used to build and execute commands from standard input. It converts input from standard input into arguments to a command.

In computing, redirection is a form of interprocess communication, and is a function common to most command-line interpreters, including the various Unix shells that can redirect standard streams to user-specified locations.

In Unix and Unix-like computer operating systems, a file descriptor is a process-unique identifier (handle) for a file or other input/output resource, such as a pipe or network socket.

In computing, a named pipe is an extension to the traditional pipe concept on Unix and Unix-like systems, and is one of the methods of inter-process communication (IPC). The concept is also found in OS/2 and Microsoft Windows, although the semantics differ substantially. A traditional pipe is "unnamed" and lasts only as long as the process. A named pipe, however, can last as long as the system is up, beyond the life of the process. It can be deleted if no longer used. Usually a named pipe appears as a file, and generally processes attach to it for IPC.

pax is an archiving utility available for various operating systems and defined since 1995. Rather than sort out the incompatible options that have crept up between tar and cpio, along with their implementations across various versions of Unix, the IEEE designed new archive utility pax that could support various archive formats with useful options from both archivers. The pax command is available on Unix and Unix-like operating systems and on IBM i, Microsoft Windows NT, and Windows 2000.

In Unix-like computer operating systems, a pipeline is a mechanism for inter-process communication using message passing. A pipeline is a set of processes chained together by their standard streams, so that the output text of each process (stdout) is passed directly as input (stdin) to the next one. The second process is started as the first process is still executing, and they are executed concurrently. The concept of pipelines was championed by Douglas McIlroy at Unix's ancestral home of Bell Labs, during the development of Unix, shaping its toolbox philosophy. It is named by analogy to a physical pipeline. A key feature of these pipelines is their "hiding of internals". This in turn allows for more clarity and simplicity in the system.

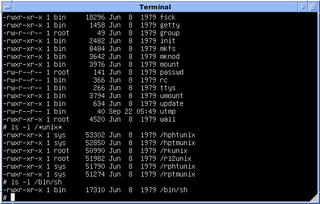

The seven standard Unix file types are regular, directory, symbolic link, FIFO special, block special, character special, and socket as defined by POSIX. Different OS-specific implementations allow more types than what POSIX requires. A file's type can be identified by the ls -l command, which displays the type in the first character of the file-system permissions field.

The file command is a standard program of Unix and Unix-like operating systems for recognizing the type of data contained in a computer file.

In computing, a here document is a file literal or input stream literal: it is a section of a source code file that is treated as if it were a separate file. The term is also used for a form of multiline string literals that use similar syntax, preserving line breaks and other whitespace in the text.

In Unix-like and some other operating systems, find is a command-line utility that locates files based on some user-specified criteria and either prints the pathname of each matched object or, if another action is requested, performs that action on each matched object.

In computing, tee is a command in command-line interpreters (shells) using standard streams which reads standard input and writes it to both standard output and one or more files, effectively duplicating its input. It is primarily used in conjunction with pipes and filters. The command is named after the T-splitter used in plumbing.

In computing, sort is a standard command line program of Unix and Unix-like operating systems, that prints the lines of its input or concatenation of all files listed in its argument list in sorted order. Sorting is done based on one or more sort keys extracted from each line of input. By default, the entire input is taken as sort key. Blank space is the default field separator. The command supports a number of command-line options that can vary by implementation. For instance the "-r" flag will reverse the sort order.

In Unix and Unix-like operating systems, job control refers to control of jobs by a shell, especially interactively, where a "job" is a shell's representation for a process group. Basic job control features are the suspending, resuming, or terminating of all processes in the job/process group; more advanced features can be performed by sending signals to the job. Job control is of particular interest in Unix due to its multiprocessing, and should be distinguished from job control generally, which is frequently applied to sequential execution.

In computing, command substitution is a facility that allows a command to be run and its output to be pasted back on the command line as arguments to another command. Command substitution first appeared in the Bourne shell, introduced with Version 7 Unix in 1979, and has remained a characteristic of all later Unix shells. The feature has since been adopted in other programming languages as well, including Perl, PHP, Ruby and Microsoft's Powershell under Windows. It also appears in Microsoft's CMD.EXE in the FOR command and the ( ) command.

A command-line interpreter or command-line processor uses a command-line interface (CLI) to receive commands from a user in the form of lines of text. This provides a means of setting parameters for the environment, invoking executables and providing information to them as to what actions they are to perform. In some cases the invocation is conditional based on conditions established by the user or previous executables. Such access was first provided by computer terminals starting in the mid-1960s. This provided an interactive environment not available with punched cards or other input methods.

References

- ↑ Rosenblatt, Bill; Robbins, Arnold (April 2002). "Appendix A.2". Learning the Korn Shell (2nd ed.). O'Reilly & Associates. ISBN 0-596-00195-9.

- ↑ Duff, Tom (1990). Rc — A Shell for Plan 9 and UNIX Systems. CiteSeerX 10.1.1.41.3287 .

- ↑ Ramey, Chet (August 18, 1994). Bash 1.14 release notes. Free Software Foundation. Available in the Gnu source archive of version 1.14.7 as of 12 February 2016.

- ↑ "ProcessSubstitution". Greg's Wiki. 22 Sep 2016. Retrieved 2021-02-06.