In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: $12.345 = 12345 \times 10^{-3}$.
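The split into an integer significand and a base-ten exponent can be checked with Python's standard `decimal` module. This is a minimal sketch; the helper name `decompose` is hypothetical and not part of any library.

```python
from decimal import Decimal

def decompose(text):
    """Return (significand, exponent) with value == significand * 10**exponent."""
    sign, digits, exponent = Decimal(text).as_tuple()
    significand = int("".join(str(d) for d in digits))
    return (-significand if sign else significand), exponent

significand, exponent = decompose("12.345")
print(significand, exponent)  # 12345 -3
```

Hardware floating-point formats usually use base 2 rather than base 10, so this decimal view illustrates the idea rather than the bit layout.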

In mathematics, a square root of a number x is a number y such that $y^2 = x$; in other words, a number y whose square is x. For example, 4 and −4 are square roots of 16 because $4^2 = (-4)^2 = 16$.

In probability theory, the central limit theorem (CLT) establishes that, in many situations, for identically distributed independent samples, the standardized sample mean tends towards the standard normal distribution even if the original variables themselves are not normally distributed.
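A small simulation can make the statement concrete. The sketch below assumes Uniform(0, 1) samples, a sample size of 50, and 2000 repetitions. These are all illustrative choices, and the function name `standardized_means` is hypothetical.

```python
import math
import random

def standardized_means(n, trials, seed=0):
    """Standardized sample means of n Uniform(0, 1) draws, repeated `trials` times."""
    rng = random.Random(seed)
    mu = 0.5                     # mean of Uniform(0, 1)
    sigma = math.sqrt(1 / 12)    # standard deviation of Uniform(0, 1)
    result = []
    for _ in range(trials):
        mean = sum(rng.random() for _ in range(n)) / n
        result.append((mean - mu) / (sigma / math.sqrt(n)))
    return result

z = standardized_means(n=50, trials=2000)
inside = sum(abs(v) <= 1.96 for v in z) / len(z)
# For a standard normal variable, about 95% of values lie within ±1.96.
print(f"fraction within ±1.96: {inside:.3f}")
```

Although each individual draw is uniform rather than normal, the fraction printed should land near 0.95, as the theorem suggests.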

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form $f(x) = \exp(-x^2)$.

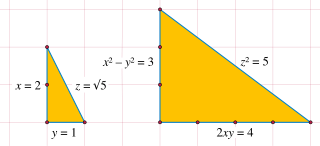

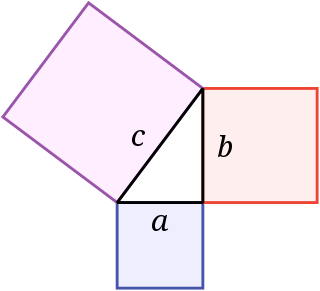

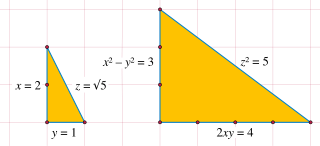

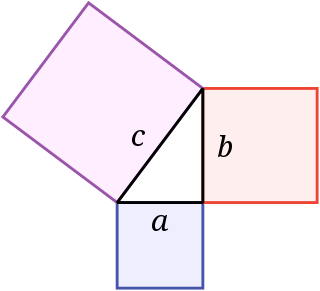

In geometry, a hypotenuse is the longest side of a right-angled triangle, the side opposite the right angle. The length of the hypotenuse can be found using the Pythagorean theorem, which states that the square of the length of the hypotenuse equals the sum of the squares of the lengths of the other two sides. For example, if one of the other sides has a length of 3 and the other has a length of 4, then their squares add up to 25. The length of the hypotenuse is the square root of 25, that is, 5.
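The 3-4-5 example can be reproduced in a few lines of Python, both by applying the theorem directly and with the standard library's `math.hypot`:

```python
import math

a, b = 3, 4
c = math.sqrt(a**2 + b**2)   # Pythagorean theorem: c^2 = a^2 + b^2
print(c)                     # 5.0
print(math.hypot(a, b))      # 5.0
```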

In mathematics, a proof by infinite descent, also known as Fermat's method of descent, is a particular kind of proof by contradiction used to show that a statement cannot possibly hold for any number, by showing that if the statement were to hold for a number, then the same would be true for a smaller number, leading to an infinite descent and ultimately a contradiction. It is a method which relies on the well-ordering principle, and is often used to show that a given equation, such as a Diophantine equation, has no solutions.

In numerical analysis, the Kahan summation algorithm, also known as compensated summation, significantly reduces the numerical error in the total obtained by adding a sequence of finite-precision floating-point numbers, compared to the obvious approach of adding the numbers one at a time. This is done by keeping a separate running compensation, in effect extending the precision of the sum by the precision of the compensation variable.
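The running compensation can be sketched as follows. The function names are illustrative, and the explicit `naive_sum` loop stands in for the obvious approach (recent Python versions may already compensate inside the built-in `sum`).

```python
import math

def naive_sum(values):
    total = 0.0
    for value in values:
        total += value
    return total

def kahan_sum(values):
    total = 0.0
    compensation = 0.0                  # running estimate of the rounding error
    for value in values:
        y = value - compensation        # apply the correction to the next term
        t = total + y                   # low-order bits of y may be lost here
        compensation = (t - total) - y  # zero in exact arithmetic; captures the rounding error
        total = t
    return total

values = [1.0] + [1e-16] * 10
print(naive_sum(values))   # 1.0: every small term is rounded away
print(kahan_sum(values))
print(math.fsum(values))   # correctly rounded reference from the standard library
```

In this example each `1e-16` term is too small to change `1.0` on its own, so the naive loop loses all ten of them, while the compensated loop accumulates them until they become visible.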

The IEEE Standard for Floating-Point Arithmetic is a technical standard for floating-point arithmetic established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard.

In physics, a squeezed coherent state is a quantum state that is usually described by two non-commuting observables having continuous spectra of eigenvalues. Examples are position and momentum of a particle, and the (dimension-less) electric field in the amplitude and in the mode of a light wave. The product of the standard deviations of two such operators obeys the uncertainty principle; for position and momentum, $\Delta x \, \Delta p \geq \frac{\hbar}{2}$.

Dilution of precision (DOP), or geometric dilution of precision (GDOP), is a term used in satellite navigation and geomatics engineering to specify the error propagation as a mathematical effect of navigation satellite geometry on positional measurement precision.

A special right triangle is a right triangle with some regular feature that makes calculations on the triangle easier, or for which simple formulas exist. For example, a right triangle may have angles that form simple relationships, such as 45°–45°–90°. This is called an "angle-based" right triangle. A "side-based" right triangle is one in which the lengths of the sides form ratios of whole numbers, such as 3 : 4 : 5, or of other special numbers such as the golden ratio. Knowing the relationships of the angles or ratios of sides of these special right triangles allows one to quickly calculate various lengths in geometric problems without resorting to more advanced methods.

A Pythagorean prime is a prime number of the form $4n + 1$. Pythagorean primes are exactly the odd prime numbers that are the sum of two squares; this characterization is Fermat's theorem on sums of two squares.
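As an illustration, the following sketch lists the primes below 100 that leave remainder 1 when divided by 4 and finds a two-square decomposition for each by brute force. The helper names `is_prime` and `two_squares` are hypothetical.

```python
import math

def is_prime(n):
    if n < 2:
        return False
    return all(n % d for d in range(2, math.isqrt(n) + 1))

def two_squares(p):
    """Return (a, b) with 1 <= a <= b and a*a + b*b == p, or None if none exists."""
    for a in range(1, math.isqrt(p) + 1):
        b2 = p - a * a
        b = math.isqrt(b2)
        if b >= a and b * b == b2:
            return a, b
    return None

pythagorean_primes = [p for p in range(2, 100) if is_prime(p) and p % 4 == 1]
for p in pythagorean_primes:
    print(p, two_squares(p))
```

For an odd prime that is not of this form, such as 7, `two_squares` returns `None`.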

In number theory, a congruum is the difference between successive square numbers in an arithmetic progression of three squares. That is, if $x^2$, $y^2$, and $z^2$ are three square numbers that are equally spaced apart from each other, then the spacing between them, $z^2 - y^2 = y^2 - x^2$, is called a congruum.
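A brute-force search shows a few small cases. This sketch assumes positive integers x < y < z and a hypothetical search bound `limit` on z. It relies on the fact that equal spacing means $z^2 = 2y^2 - x^2$.

```python
import math

def congrua(limit):
    """Map each congruum to one progression (x, y, z) of square roots with z <= limit."""
    found = {}
    for y in range(2, limit + 1):
        for x in range(1, y):
            z2 = 2 * y * y - x * x   # equal spacing: z^2 - y^2 == y^2 - x^2
            z = math.isqrt(z2)
            if z <= limit and z * z == z2:
                found.setdefault(y * y - x * x, (x, y, z))
    return dict(sorted(found.items()))

for spacing, (x, y, z) in congrua(20).items():
    print(spacing, (x * x, y * y, z * z))
```

The smallest spacing found is 24, from the squares 1, 25, and 49.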

In mathematics, sine and cosine are trigonometric functions of an angle. The sine and cosine of an acute angle are defined in the context of a right triangle: for the specified angle, its sine is the ratio of the length of the side opposite that angle to the length of the hypotenuse (the longest side of the triangle), and its cosine is the ratio of the length of the adjacent leg to that of the hypotenuse. For an angle $\theta$, the sine and cosine functions are denoted simply as $\sin(\theta)$ and $\cos(\theta)$.

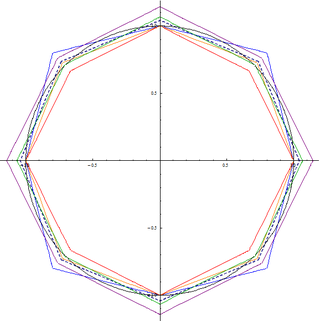

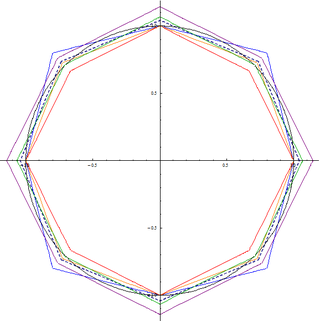

The alpha max plus beta min algorithm is a high-speed approximation of the square root of the sum of two squares. The square root of the sum of two squares, also known as Pythagorean addition, is a useful function, because it finds the hypotenuse of a right triangle given the two side lengths, the norm of a 2-D vector, or the magnitude of a complex number z = a + bi given the real and imaginary parts.
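The approximation has the form alpha·max(|a|, |b|) + beta·min(|a|, |b|). The sketch below assumes the coefficient pair that balances the worst-case relative error (roughly 0.9604 and 0.3978). Cheaper pairs such as alpha = 1, beta = 1/2 are also used, at the cost of a larger error. The function name `approx_hypot` is hypothetical.

```python
import math

# Assumed coefficient choice: balances the worst-case relative error.
ALPHA = 2 * math.cos(math.pi / 8) / (1 + math.cos(math.pi / 8))  # about 0.9604
BETA = 2 * math.sin(math.pi / 8) / (1 + math.cos(math.pi / 8))   # about 0.3978

def approx_hypot(a, b):
    a, b = abs(a), abs(b)
    return ALPHA * max(a, b) + BETA * min(a, b)

print(approx_hypot(3, 4), math.hypot(3, 4))

# Sweep unit vectors over the first quadrant; the exact magnitude is 1.
worst = max(
    abs(approx_hypot(math.cos(t), math.sin(t)) - 1.0)
    for t in (k * math.pi / 2000 for k in range(1001))
)
print(f"worst relative error on the sweep: {worst:.4f}")
```

With these coefficients the worst relative error on the sweep stays just under 4%, and evaluating the approximation needs only comparisons, two multiplications, and one addition.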

In the mathematical field of numerical analysis, monotone cubic interpolation is a variant of cubic interpolation that preserves monotonicity of the data set being interpolated.

A digital differential analyzer (DDA), also sometimes called a digital integrating computer, is a digital implementation of a differential analyzer. The integrators in a DDA are implemented as accumulators, with the numeric result converted back to a pulse rate by the overflow of the accumulator.
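The accumulator-and-overflow idea can be sketched in software. This is a simplified model under stated assumptions: a single integrator with a `bits`-wide accumulator and a constant input `rate`. The function name `dda_pulses` is hypothetical.

```python
def dda_pulses(rate, bits, steps):
    """Add `rate` to a `bits`-wide accumulator each step; emit 1 on overflow, else 0."""
    modulus = 1 << bits
    acc = 0
    pulses = []
    for _ in range(steps):
        acc += rate
        if acc >= modulus:      # overflow: keep the remainder, emit a pulse
            acc -= modulus
            pulses.append(1)
        else:
            pulses.append(0)
    return pulses

pulses = dda_pulses(rate=3, bits=3, steps=8)
print(pulses)        # [0, 0, 1, 0, 0, 1, 0, 1]
print(sum(pulses))   # 3
```

Over one full cycle of 2^bits steps the number of output pulses equals `rate`, so the pulse rate is proportional to the accumulated input.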

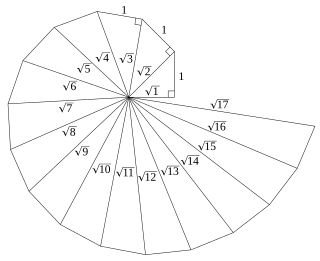

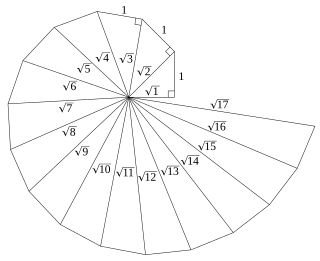

In geometry, the spiral of Theodorus is a spiral composed of right triangles, placed edge-to-edge. It was named after Theodorus of Cyrene.

In mathematics, the Pythagorean theorem or Pythagoras' theorem is a fundamental relation in Euclidean geometry between the three sides of a right triangle. It states that the area of the square whose side is the hypotenuse is equal to the sum of the areas of the squares on the other two sides.

2Sum is a floating-point algorithm for computing the exact round-off error in a floating-point addition operation.
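A minimal sketch of the usual six-operation formulation is shown below. The variable names are illustrative, and `Fraction` is used only to verify the result in exact rational arithmetic.

```python
from fractions import Fraction

def two_sum(a, b):
    """Return (s, t) where s is the rounded sum a + b and t is its round-off error."""
    s = a + b
    a_virtual = s - b            # the part of s attributed to a
    b_virtual = s - a_virtual    # the part of s attributed to b
    a_roundoff = a - a_virtual
    b_roundoff = b - b_virtual
    return s, a_roundoff + b_roundoff

a, b = 1.0, 1e-16
s, t = two_sum(a, b)
print(s, t)
# Exact rational arithmetic confirms that s + t equals a + b with no error.
print(Fraction(s) + Fraction(t) == Fraction(a) + Fraction(b))  # True
```

Here `s` alone is `1.0` because the small term is rounded away, while `t` holds exactly the part that was lost.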