In vector calculus, the gradient of a scalar-valued differentiable function f of several variables is the vector field ∇f whose value at a point p gives the direction and the rate of fastest increase. The gradient transforms like a vector under change of basis of the space of variables of f. If the gradient of a function is non-zero at a point p, the direction of the gradient is the direction in which the function increases most quickly from p, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to minimize a function by gradient descent. In coordinate-free terms, the gradient of a function f(r) may be defined by df = ∇f · dr, where df is the total infinitesimal change in f for an infinitesimal displacement dr.
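As a minimal sketch of the ideas above, the following Python snippet approximates a gradient with central differences and uses it for a plain gradient descent. The function names, the step size, the iteration count, and the test function `bowl` are all illustrative assumptions, not part of any particular library.

```python
def numerical_gradient(f, point, h=1e-6):
    """Approximate the gradient of f at `point` with central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def gradient_descent(f, start, step=0.1, iterations=200):
    """Repeatedly step against the gradient, the direction of fastest decrease."""
    point = list(start)
    for _ in range(iterations):
        grad = numerical_gradient(f, point)
        point = [x - step * g for x, g in zip(point, grad)]
    return point


def bowl(p):
    # Hypothetical test function with its only stationary point at (1, -2).
    return (p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2


gradient_at_origin = numerical_gradient(bowl, [0.0, 0.0])  # close to [-2, 4]
minimum = gradient_descent(bowl, [0.0, 0.0])               # close to [1, -2]
```

At the origin the approximate gradient points toward increasing values of `bowl`, so stepping in the opposite direction moves toward the stationary point, where the gradient vanishes.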

In mathematics, a spherical coordinate system is a coordinate system for three-dimensional space where the position of a given point in space is specified by three numbers, (r, θ, φ): the radial distance r along the radial line connecting the point to the fixed point of origin; the polar angle θ between the radial line and the polar axis; and the azimuthal angle φ of rotation of the radial line about that axis.
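A short Python sketch of the conversion between spherical and Cartesian coordinates may help. It assumes the common physics convention in which the polar axis is the z-axis, θ is measured from that axis, and φ is measured in the xy-plane from the x-axis; other conventions exist, and the function names are illustrative.

```python
import math


def spherical_to_cartesian(r, theta, phi):
    """Convert (r, polar angle theta, azimuthal angle phi) to (x, y, z)."""
    x = r * math.sin(theta) * math.cos(phi)
    y = r * math.sin(theta) * math.sin(phi)
    z = r * math.cos(theta)
    return x, y, z


def cartesian_to_spherical(x, y, z):
    """Convert (x, y, z) to (r, theta, phi) with theta in [0, pi]."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        # The angles are undefined at the origin; zeros are returned by choice.
        return 0.0, 0.0, 0.0
    theta = math.acos(z / r)
    phi = math.atan2(y, x)
    return r, theta, phi
```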

In mathematics and physics, Laplace's equation is a second-order partial differential equation named after Pierre-Simon Laplace, who first studied its properties. This is often written as ∇²f = 0 or Δf = 0, where Δ = ∇ · ∇ = ∇² is the Laplace operator.

The Navier–Stokes equations are partial differential equations which describe the motion of viscous fluid substances. They were named after French engineer and physicist Claude-Louis Navier and the Irish physicist and mathematician George Gabriel Stokes. They were developed over several decades of progressively building the theories, from 1822 (Navier) to 1842–1850 (Stokes).

In mathematics, the Laplace operator or Laplacian is a differential operator given by the divergence of the gradient of a scalar function on Euclidean space. It is usually denoted by the symbols ∇ · ∇, ∇² (where ∇ is the nabla operator), or Δ. In a Cartesian coordinate system, the Laplacian is given by the sum of second partial derivatives of the function with respect to each independent variable. In other coordinate systems, such as cylindrical and spherical coordinates, the Laplacian also has a useful form. Informally, the Laplacian Δf(p) of a function f at a point p measures by how much the average value of f over small spheres or balls centered at p deviates from f(p).
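The Cartesian form, a sum of second partial derivatives, can be sketched numerically with central second differences. The snippet below is an illustration under that assumption; the function names and the two test functions are hypothetical.

```python
def laplacian(f, point, h=1e-3):
    """Sum of central second differences of f along each coordinate axis."""
    center = f(point)
    total = 0.0
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        total += (f(forward) - 2.0 * center + f(backward)) / (h * h)
    return total


def saddle(p):
    # x^2 - y^2: the second derivatives cancel, so the Laplacian is zero.
    return p[0] ** 2 - p[1] ** 2


def paraboloid(p):
    # x^2 + y^2: each axis contributes 2, so the Laplacian is 4.
    return p[0] ** 2 + p[1] ** 2


laplacian_of_saddle = laplacian(saddle, [0.5, -1.5])          # close to 0
laplacian_of_paraboloid = laplacian(paraboloid, [0.5, -1.5])  # close to 4
```

A function such as `saddle`, whose Laplacian vanishes everywhere, is called harmonic. The positive value for `paraboloid` matches the informal picture: the average of the function over a small sphere around any point exceeds its value at that point.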

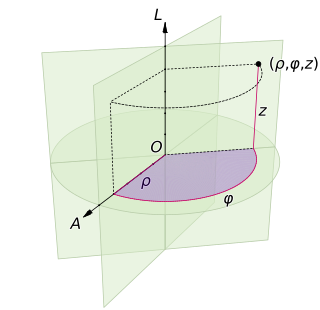

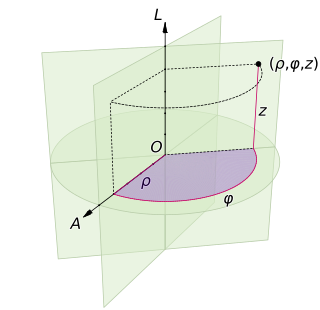

A cylindrical coordinate system is a three-dimensional coordinate system that specifies point positions by the distance from a chosen reference axis (axis L in the image opposite), the direction from the axis relative to a chosen reference direction (axis A), and the distance from a chosen reference plane perpendicular to the axis (plane containing the purple section). The latter distance is given as a positive or negative number depending on which side of the reference plane faces the point.
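As an illustrative sketch, the three cylindrical coordinates can be converted to and from Cartesian coordinates as follows. The code assumes the reference axis is the z-axis, the reference direction is the positive x-axis, and the reference plane is z = 0; the names `rho`, `phi`, and `z` are conventional labels not fixed by the text above.

```python
import math


def cylindrical_to_cartesian(rho, phi, z):
    """rho: distance from the axis, phi: angle from the reference direction,
    z: signed distance from the reference plane."""
    return rho * math.cos(phi), rho * math.sin(phi), z


def cartesian_to_cylindrical(x, y, z):
    """Inverse conversion; phi is returned in the interval (-pi, pi]."""
    return math.hypot(x, y), math.atan2(y, x), z
```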

In vector calculus, the Jacobian matrix of a vector-valued function of several variables is the matrix of all its first-order partial derivatives. When this matrix is square, that is, when the function has as many input variables as its output has vector components, its determinant is referred to as the Jacobian determinant. Both the matrix and the determinant are often referred to simply as the Jacobian in the literature.
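To make the definition concrete, here is a small Python sketch that approximates a Jacobian matrix by central differences and evaluates the Jacobian determinant for the polar-to-Cartesian map, whose exact determinant is r. All function names are illustrative, and `det2` handles only the 2×2 case.

```python
import math


def jacobian(func, point, h=1e-6):
    """Return J with J[i][j] = d(func_i)/d(x_j), by central differences."""
    n_out = len(func(point))
    columns = []
    for j in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[j] += h
        backward[j] -= h
        f_plus = func(forward)
        f_minus = func(backward)
        columns.append([(a - b) / (2 * h) for a, b in zip(f_plus, f_minus)])
    # Each entry of `columns` is one column of J; transpose into rows.
    return [[columns[j][i] for j in range(len(point))] for i in range(n_out)]


def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def polar_to_cartesian(p):
    r, phi = p
    return [r * math.cos(phi), r * math.sin(phi)]


J = jacobian(polar_to_cartesian, [2.0, 0.3])
jacobian_determinant = det2(J)  # close to r = 2.0
```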

In physics, Hamiltonian mechanics is a reformulation of Lagrangian mechanics that emerged in 1833. Introduced by Sir William Rowan Hamilton, Hamiltonian mechanics replaces (generalized) velocities used in Lagrangian mechanics with (generalized) momenta. Both theories provide interpretations of classical mechanics and describe the same physical phenomena.

Electron density or electronic density is the measure of the probability of an electron being present at an infinitesimal element of space surrounding any given point. It is a scalar quantity depending upon three spatial variables and is typically denoted as either ρ(r) or n(r). The density is determined, through definition, by the normalised N-electron wavefunction, which itself depends upon 4N variables (3N spatial and N spin coordinates). Conversely, the density determines the wavefunction up to a phase factor, providing the formal foundation of density functional theory.

In the calculus of variations, a field of mathematical analysis, the functional derivative relates a change in a functional to a change in a function on which the functional depends.

This is a list of some vector calculus formulae for working with common curvilinear coordinate systems.

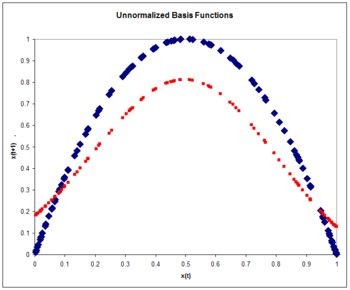

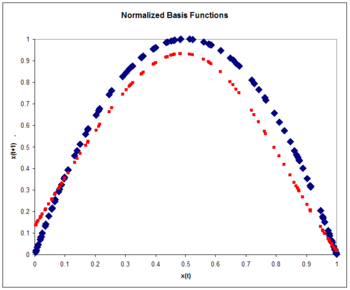

In mathematics, a radial basis function (RBF) is a real-valued function φ whose value depends only on the distance between the input and some fixed point, either the origin, so that φ(x) = φ̂(‖x‖), or some other fixed point c, called a center, so that φ(x) = φ̂(‖x − c‖). Any function φ that satisfies the property φ(x) = φ̂(‖x‖) is a radial function. The distance is usually Euclidean distance, although other metrics are sometimes used. Radial basis functions are often used as a collection which forms a basis for some function space of interest, hence the name.
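As one concrete instance, the Gaussian is a widely used radial basis function. The sketch below assumes Euclidean distance and a shape parameter called `epsilon`; both the parameter name and the choice of the Gaussian are illustrative, since the text above does not single out a particular function.

```python
import math


def gaussian_rbf(x, center, epsilon=1.0):
    """Gaussian RBF: the value depends only on the distance from x to center."""
    distance = math.dist(x, center)
    return math.exp(-(epsilon * distance) ** 2)


# Two inputs at the same distance from the center give the same value.
value_a = gaussian_rbf([1.0, 0.0], [0.0, 0.0])
value_b = gaussian_rbf([0.0, 1.0], [0.0, 0.0])
```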

In mathematics (specifically multivariable calculus), a multiple integral is a definite integral of a function of several real variables, for instance, f(x, y) or f(x, y, z). In physical applications, the domain of integration may be any surface S or any volume V, and the variables may represent time, position, and so on.
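A double integral over a rectangle can be approximated by summing function values at the midpoints of a grid of small cells. The following Python sketch uses this midpoint rule; the function name, the grid size `n`, and the example integrand are assumptions chosen for illustration.

```python
def double_integral(f, x_range, y_range, n=200):
    """Midpoint-rule approximation of the integral of f(x, y) over a rectangle."""
    (x0, x1), (y0, y1) = x_range, y_range
    dx = (x1 - x0) / n
    dy = (y1 - y0) / n
    total = 0.0
    for i in range(n):
        x = x0 + (i + 0.5) * dx
        for j in range(n):
            y = y0 + (j + 0.5) * dy
            total += f(x, y)
    return total * dx * dy


# Integral of x*y over 0 <= x <= 1, 0 <= y <= 2; the exact value is 1.
approximation = double_integral(lambda x, y: x * y, (0.0, 1.0), (0.0, 2.0))
```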

Ewald summation, named after Paul Peter Ewald, is a method for computing long-range interactions in periodic systems. It was first developed as the method for calculating the electrostatic energies of ionic crystals, and is now commonly used for calculating long-range interactions in computational chemistry. Ewald summation is a special case of the Poisson summation formula, replacing the summation of interaction energies in real space with an equivalent summation in Fourier space. In this method, the long-range interaction is divided into two parts: a short-range contribution, and a long-range contribution which does not have a singularity. The short-range contribution is calculated in real space, whereas the long-range contribution is calculated using a Fourier transform. The advantage of this method is the rapid convergence of the energy compared with that of a direct summation. This means that the method has high accuracy and reasonable speed when computing long-range interactions, and it is thus the de facto standard method for calculating long-range interactions in periodic systems. The method requires charge neutrality of the molecular system to accurately calculate the total Coulombic interaction. A study of the truncation errors introduced in the energy and force calculations of disordered point-charge systems is provided by Kolafa and Perram.
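The division into a short-range and a non-singular long-range part can be illustrated for a single pair of unit charges. A common choice, assumed here because the text above does not specify the splitting, writes 1/r as erfc(αr)/r + erf(αr)/r with a splitting parameter α. The sketch below shows only this pairwise split, not a full Ewald sum over a periodic lattice; the function and parameter names are illustrative.

```python
import math


def split_coulomb(r, alpha):
    """Split 1/r into a rapidly decaying part and a smooth long-range part."""
    short_range = math.erfc(alpha * r) / r  # decays quickly as r grows
    long_range = math.erf(alpha * r) / r    # stays finite as r approaches 0
    return short_range, long_range


short_part, long_part = split_coulomb(1.5, alpha=0.8)
# short_part + long_part equals 1/r up to rounding.
```

Under this assumption, the long-range term tends to 2α/√π as r tends to zero, so it has no singularity and is well suited to evaluation in Fourier space.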

In applied mathematics, polyharmonic splines are used for function approximation and data interpolation. They are very useful for interpolating and fitting scattered data in many dimensions. Special cases include thin plate splines and natural cubic splines in one dimension.

There are various mathematical descriptions of the electromagnetic field that are used in the study of electromagnetism, one of the four fundamental interactions of nature. In this article, several approaches are discussed, although the equations are in terms of electric and magnetic fields, potentials, and charges with currents, generally speaking.

The derivation of the Navier–Stokes equations, as well as their application and formulation for different families of fluids, is an important exercise in fluid dynamics with applications in mechanical engineering, physics, chemistry, heat transfer, and electrical engineering. A proof explaining the properties and bounds of the equations, such as Navier–Stokes existence and smoothness, is one of the important unsolved problems in mathematics.

In mathematics, vector spherical harmonics (VSH) are an extension of the scalar spherical harmonics for use with vector fields. The components of the VSH are complex-valued functions expressed in the spherical coordinate basis vectors.

In machine learning, the radial basis function kernel, or RBF kernel, is a popular kernel function used in various kernelized learning algorithms. In particular, it is commonly used in support vector machine classification.

Lagrangian field theory is a formalism in classical field theory. It is the field-theoretic analogue of Lagrangian mechanics. Lagrangian mechanics is used to analyze the motion of a system of discrete particles each with a finite number of degrees of freedom. Lagrangian field theory applies to continua and fields, which have an infinite number of degrees of freedom.