Related Research Articles

A globular cluster is a spheroidal conglomeration of stars that is bound together by gravity, with a higher concentration of stars towards its center. Globular clusters can contain anywhere from tens of thousands to many millions of member stars, all orbiting in a stable, compact formation. They are similar in form to dwarf spheroidal galaxies, and the distinction between the two is not always clear. Their name is derived from Latin globulus. Globular clusters are occasionally known simply as "globulars".

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed that can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's fastest 500 supercomputers have run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

MareNostrum is the main supercomputer in the Barcelona Supercomputing Center. It is the most powerful supercomputer in Spain, one of thirteen supercomputers in the Spanish Supercomputing Network and one of the seven supercomputers of the European infrastructure PRACE.

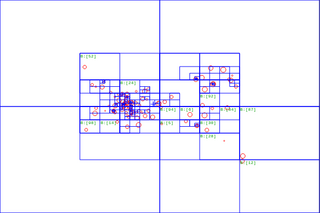

In physics and astronomy, an N-body simulation is a simulation of a dynamical system of particles, usually under the influence of physical forces, such as gravity. N-body simulations are widely used tools in astrophysics, from investigating the dynamics of few-body systems like the Earth-Moon-Sun system to understanding the evolution of the large-scale structure of the universe. In physical cosmology, N-body simulations are used to study processes of non-linear structure formation such as galaxy filaments and galaxy halos from the influence of dark matter. Direct N-body simulations are used to study the dynamical evolution of star clusters.
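The direct approach mentioned above can be illustrated with a minimal sketch. It is not taken from any particular N-body code: the function names `accelerations` and `leapfrog_step`, the restriction to two dimensions, the unit system with G = 1, the softening length `eps`, and the choice of a kick-drift-kick leapfrog integrator are all assumptions made for illustration.

```python
import math

def accelerations(pos, mass, G=1.0, eps=1e-3):
    """Direct-summation gravitational accelerations in 2D (all n*(n-1)/2 pairs)."""
    n = len(pos)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            r2 = dx * dx + dy * dy + eps * eps  # softened squared distance (assumption)
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            # Each pair contributes equal and opposite forces.
            acc[i][0] += G * mass[j] * dx * inv_r3
            acc[i][1] += G * mass[j] * dy * inv_r3
            acc[j][0] -= G * mass[i] * dx * inv_r3
            acc[j][1] -= G * mass[i] * dy * inv_r3
    return acc

def leapfrog_step(pos, vel, mass, dt, G=1.0, eps=1e-3):
    """Advance positions and velocities in place by one kick-drift-kick step."""
    acc = accelerations(pos, mass, G, eps)
    for i in range(len(pos)):
        vel[i][0] += 0.5 * dt * acc[i][0]  # half kick
        vel[i][1] += 0.5 * dt * acc[i][1]
        pos[i][0] += dt * vel[i][0]        # full drift
        pos[i][1] += dt * vel[i][1]
    acc = accelerations(pos, mass, G, eps)
    for i in range(len(pos)):
        vel[i][0] += 0.5 * dt * acc[i][0]  # second half kick
        vel[i][1] += 0.5 * dt * acc[i][1]
```

Because every pair of particles is visited, the cost of one force evaluation grows with the square of the particle number, which is why direct simulations of star clusters are computationally demanding.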

The Astronomical Calculation Institute (Astronomisches Rechen-Institut, ARI) is a research institute in Heidelberg, Germany, dating from the 1700s. In 2005, the ARI became part of the Center for Astronomy at Heidelberg University. Previously, the institute belonged directly to the state of Baden-Württemberg.

The Barnes–Hut simulation (named after Josh Barnes and Piet Hut) is an approximation algorithm for performing an n-body simulation. It is notable for having order O(n log n) compared to a direct-sum algorithm, which would be O(n²).
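The idea behind the tree approximation can be sketched in two dimensions with a quadtree: distant groups of bodies are replaced by their total mass at their centre of mass whenever the cell containing them looks small from the target point. The class `Node`, the helper `build_tree`, the opening parameter `theta`, the softening `eps`, and units with G = 1 are illustrative assumptions, not part of any specific published code. The sketch also assumes that all bodies lie inside the root square and have distinct positions.

```python
class Node:
    """Square quadtree cell storing total mass and mass-weighted position sums."""
    def __init__(self, cx, cy, half):
        self.cx, self.cy, self.half = cx, cy, half
        self.mass = 0.0
        self.mx = self.my = 0.0
        self.body = None       # (x, y, m) when this is a leaf holding one body
        self.children = None   # four sub-cells once the cell has been split

    def _child(self, x, y):
        # Index matches the construction order used in insert().
        return self.children[2 * (x >= self.cx) + (y >= self.cy)]

    def insert(self, x, y, m):
        if self.body is None and self.children is None:
            self.body = (x, y, m)                 # empty leaf: store the body
        else:
            if self.children is None:             # occupied leaf: split it
                h = self.half / 2
                self.children = [Node(self.cx + sx * h, self.cy + sy * h, h)
                                 for sx in (-1, 1) for sy in (-1, 1)]
                bx, by, bm = self.body
                self.body = None
                self._child(bx, by).insert(bx, by, bm)
            self._child(x, y).insert(x, y, m)
        self.mass += m
        self.mx += m * x
        self.my += m * y

    def accel(self, x, y, theta=0.5, eps=1e-3):
        """Approximate acceleration at (x, y) due to the mass in this cell."""
        if self.mass == 0.0:
            return 0.0, 0.0
        if self.children is None:
            px, py = self.body[0], self.body[1]
        else:
            px, py = self.mx / self.mass, self.my / self.mass
        dx, dy = px - x, py - y
        r2 = dx * dx + dy * dy
        # Accept the cell if it is a leaf or if (cell size / distance) < theta.
        if self.children is None or (2 * self.half) ** 2 < theta * theta * r2:
            if r2 == 0.0:          # leaf holding the target body itself
                return 0.0, 0.0
            f = self.mass / (r2 + eps * eps) ** 1.5
            return f * dx, f * dy
        ax = ay = 0.0
        for child in self.children:   # otherwise open the cell and recurse
            cax, cay = child.accel(x, y, theta, eps)
            ax += cax
            ay += cay
        return ax, ay

def build_tree(bodies, cx=0.0, cy=0.0, half=1.0):
    """Build a quadtree over (x, y, m) tuples inside the given root square."""
    root = Node(cx, cy, half)
    for x, y, m in bodies:
        root.insert(x, y, m)
    return root
```

With `theta=0.0` no internal cell is ever accepted, so the traversal visits every leaf and reduces to a direct sum. Larger values of `theta` trade accuracy for fewer visited cells.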

Gravity Pipe (GRAPE) is a project which uses hardware acceleration to perform gravitational computations. Integrated with Beowulf-style commodity computers, the GRAPE system calculates the force of gravity that a given mass, such as a star, exerts on others. The project resides at the University of Tokyo.

A PlayStation 3 cluster is a distributed system computer composed primarily of PlayStation 3 video game consoles.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.

gravitySimulator is a novel supercomputer that incorporates special-purpose GRAPE hardware to solve the gravitational n-body problem. It is housed in the Center for Computational Relativity and Gravitation (CCRG) at the Rochester Institute of Technology. It became operational in 2005.

SciNet is a consortium of the University of Toronto and affiliated Ontario hospitals. It has received funding from the federal and provincial governments, faculties at the University of Toronto, and affiliated hospitals.

In physics, the n-body problem is the problem of predicting the individual motions of a group of celestial objects interacting with each other gravitationally. Solving this problem has been motivated by the desire to understand the motions of the Sun, Moon, planets, and visible stars. In the 20th century, understanding the dynamics of globular cluster star systems became an important n-body problem. The n-body problem in general relativity is considerably more difficult to solve due to additional factors like time and space distortions.
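In its Newtonian form, the problem can be stated compactly. The notation below is an assumption introduced here for illustration: $m_i$ and $\mathbf{r}_i$ denote the mass and position of body $i$, and $G$ is the gravitational constant.

$$
m_i \frac{d^2 \mathbf{r}_i}{dt^2} = \sum_{\substack{j=1 \\ j \neq i}}^{n} \frac{G\, m_i m_j \left(\mathbf{r}_j - \mathbf{r}_i\right)}{\left|\mathbf{r}_j - \mathbf{r}_i\right|^{3}}, \qquad i = 1, \dots, n.
$$

Each body feels the summed attraction of the other $n-1$ bodies, so the system consists of $n$ coupled second-order vector equations.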

Japan operates a number of centers for supercomputing which hold world records in speed, with the K computer being the world's fastest from June 2011 to June 2012, and Fugaku holding the lead from June 2020 until June 2022.

Computational astrophysics refers to the methods and computing tools developed and used in astrophysics research. Like computational chemistry or computational physics, it is both a specific branch of theoretical astrophysics and an interdisciplinary field relying on computer science, mathematics, and wider physics. Computational astrophysics is most often studied through an applied mathematics or astrophysics programme at PhD level.

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems.

The high-performance supercomputing program in Pakistan started in the mid-to-late 1980s. Supercomputing is a recent area of computer science in which Pakistan has made progress, driven in part by the growth of the information technology age in the country. The indigenous supercomputer program began in the 1980s, when the deployment of Cray supercomputers was initially denied.

The Bolshoi simulation, a computer model of the universe run in 2010 on the Pleiades supercomputer at the NASA Ames Research Center, was the most accurate cosmological simulation to that date of the evolution of the large-scale structure of the universe. The Bolshoi simulation used the now-standard ΛCDM (Lambda-CDM) model of the universe and the WMAP five-year and seven-year cosmological parameters from NASA's Wilkinson Microwave Anisotropy Probe team. "The principal purpose of the Bolshoi simulation is to compute and model the evolution of dark matter halos, thereby rendering the invisible visible for astronomers to study, and to predict visible structure that astronomers can seek to observe." “Bolshoi” is a Russian word meaning “big.”

The Illustris project is an ongoing series of astrophysical simulations run by an international collaboration of scientists. The aim is to study the processes of galaxy formation and evolution in the universe with a comprehensive physical model. Early results were described in a number of publications following widespread press coverage. The project publicly released all data produced by the simulations in April 2015. Key developers of the Illustris simulation have been Volker Springel and Mark Vogelsberger. The Illustris simulation framework and galaxy formation model have been used for a wide range of spin-off projects, starting with Auriga and IllustrisTNG, followed by Thesan (2021), MillenniumTNG (2022) and TNG-Cluster.

In cosmology, Gurzadyan-Savvidy (GS) relaxation is a theory developed by Vahe Gurzadyan and George Savvidy to explain the relaxation over time of the dynamics of N-body gravitating systems such as star clusters and galaxies. Stellar systems observed in the Universe, such as globular clusters and elliptical galaxies, reveal their relaxed state through the high degree of regularity of some of their physical characteristics, including surface luminosity, velocity dispersion and geometric shape. Two-body encounters have been considered the basic mechanism of relaxation of stellar systems, leading to the observed fine-grained equilibrium. The coarse-grained phase of evolution of gravitating systems is described by violent relaxation, a concept developed by Donald Lynden-Bell. The two-body mechanism of relaxation is also known in plasma physics. The difficulties in describing collective effects in N-body gravitating systems arise from the long-range character of the gravitational interaction, in contrast to plasma, where Debye screening takes place because charges of two different signs are present. For elliptical galaxies, for example, the two-body relaxation mechanism predicts time scales exceeding the age of the Universe. The problem of relaxation and evolution of stellar systems and the role of collective effects are studied by various techniques. Numerical simulations are among the efficient methods for studying N-body gravitating systems; in particular, Sverre Aarseth's N-body codes are widely used.

References

- Spurzem, R. (2007), How to build and use special purpose PC clusters in stellar dynamics

- Spurzem, R. and Aarseth, S. J. (1996), Direct collisional simulation of 10000 particles past core collapse, Mon. Not. R. Astron. Soc., 282, 19

- Harfst, S. et al. (2007), Performance analysis of direct N-body algorithms on special-purpose supercomputers, New Astronomy, 12, 357