The Domain Name System (DNS) is a hierarchical and distributed naming system for computers, services, and other resources in the Internet or other Internet Protocol (IP) networks. It associates various information with domain names assigned to each of the associated entities. Most prominently, it translates readily memorized domain names to the numerical IP addresses needed for locating and identifying computer services and devices with the underlying network protocols. The Domain Name System has been an essential component of the functionality of the Internet since 1985.

The Hypertext Transfer Protocol (HTTP) is an application layer protocol in the Internet protocol suite model for distributed, collaborative, hypermedia information systems. HTTP is the foundation of data communication for the World Wide Web, where hypertext documents include hyperlinks to other resources that the user can easily access, for example by a mouse click or by tapping the screen in a web browser.

Hypertext Transfer Protocol Secure (HTTPS) is an extension of the Hypertext Transfer Protocol (HTTP). It uses encryption for secure communication over a computer network, and is widely used on the Internet. In HTTPS, the communication protocol is encrypted using Transport Layer Security (TLS) or, formerly, Secure Sockets Layer (SSL). The protocol is therefore also referred to as HTTP over TLS, or HTTP over SSL.

An email client, email reader or, more formally, message user agent (MUA) or mail user agent is a computer program used to access and manage a user's email.

In computing, load balancing is the process of distributing a set of tasks over a set of resources, with the aim of making their overall processing more efficient. Load balancing can optimize the response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle.

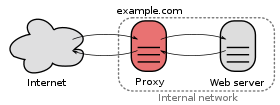

In computer networking, a proxy server is a server application that acts as an intermediary between a client requesting a resource and the server providing that resource. It improves privacy, security, and performance in the process.

Transport Layer Security (TLS) is a cryptographic protocol designed to provide communications security over a computer network. The protocol is widely used in applications such as email, instant messaging, and voice over IP, but its use in securing HTTPS remains the most publicly visible.

Squid is a caching and forwarding HTTP web proxy. It has a wide variety of uses, including speeding up a web server by caching repeated requests, caching World Wide Web (WWW), Domain Name System (DNS), and other network lookups for a group of people sharing network resources, and aiding security by filtering traffic. Although used for mainly HTTP and File Transfer Protocol (FTP), Squid includes limited support for several other protocols including Internet Gopher, Secure Sockets Layer (SSL), Transport Layer Security (TLS), and Hypertext Transfer Protocol Secure (HTTPS). Squid does not support the SOCKS protocol, unlike Privoxy, with which Squid can be used in order to provide SOCKS support.

SOCKS is an Internet protocol that exchanges network packets between a client and server through a proxy server. SOCKS5 optionally provides authentication so only authorized users may access a server. Practically, a SOCKS server proxies TCP connections to an arbitrary IP address, and provides a means for UDP packets to be forwarded. A SOCKS server accepts incoming client connection on TCP port 1080, as defined in RFC 1928.

In computer networks, a tunneling protocol is a communication protocol which allows for the movement of data from one network to another. It can, for example, allow private network communications to be sent across a public network, or for one network protocol to be carried over an incompatible network, through a process called encapsulation.

Microsoft Forefront Threat Management Gateway, formerly known as Microsoft Internet Security and Acceleration Server, is a discontinued network router, firewall, antivirus program, VPN server and web cache from Microsoft Corporation. It ran on Windows Server and works by inspecting all network traffic that passes through it.

Round-robin DNS is a technique of load distribution, load balancing, or fault-tolerance provisioning multiple, redundant Internet Protocol service hosts, e.g., Web server, FTP servers, by managing the Domain Name System's (DNS) responses to address requests from client computers according to an appropriate statistical model.

HTTP compression is a capability that can be built into web servers and web clients to improve transfer speed and bandwidth utilization.

The X-Forwarded-For (XFF) HTTP header field is a common method for identifying the originating IP address of a client connecting to a web server through an HTTP proxy or load balancer.

Server Name Indication (SNI) is an extension to the Transport Layer Security (TLS) computer networking protocol by which a client indicates which hostname it is attempting to connect to at the start of the handshaking process. The extension allows a server to present one of multiple possible certificates on the same IP address and TCP port number and hence allows multiple secure (HTTPS) websites to be served by the same IP address without requiring all those sites to use the same certificate. It is the conceptual equivalent to HTTP/1.1 name-based virtual hosting, but for HTTPS. This also allows a proxy to forward client traffic to the right server during TLS/SSL handshake. The desired hostname is not encrypted in the original SNI extension, so an eavesdropper can see which site is being requested. The SNI extension was specified in 2003 in RFC 3546

A TLS termination proxy is a proxy server that acts as an intermediary point between client and server applications, and is used to terminate and/or establish TLS tunnels by decrypting and/or encrypting communications. This is different from TLS pass-through proxies that forward encrypted (D)TLS traffic between clients and servers without terminating the tunnel.

Domain fronting is a technique for Internet censorship circumvention that uses different domain names in different communication layers of an HTTPS connection to discreetly connect to a different target domain than is discernable to third parties monitoring the requests and connections.

Cloudbleed was a Cloudflare buffer overflow disclosed by Project Zero on February 17, 2017. Cloudflare's code disclosed the contents of memory that contained the private information of other customers, such as HTTP cookies, authentication tokens, HTTP POST bodies, and other sensitive data. As a result, data from Cloudflare customers was leaked to all other Cloudflare customers that had access to server memory. This occurred, according to numbers provided by Cloudflare at the time, more than 18,000,000 times before the problem was corrected. Some of the leaked data was cached by search engines.

DNS over HTTPS (DoH) is a protocol for performing remote Domain Name System (DNS) resolution via the HTTPS protocol. A goal of the method is to increase user privacy and security by preventing eavesdropping and manipulation of DNS data by man-in-the-middle attacks by using the HTTPS protocol to encrypt the data between the DoH client and the DoH-based DNS resolver. By March 2018, Google and the Mozilla Foundation had started testing versions of DNS over HTTPS. In February 2020, Firefox switched to DNS over HTTPS by default for users in the United States. In May 2020, Chrome switched to DNS over HTTPS by default.