In mathematics, a tensor is an algebraic object that describes a multilinear relationship between sets of algebraic objects related to a vector space. Tensors may map between different objects such as vectors, scalars, and even other tensors. There are many types of tensors, including scalars and vectors, dual vectors, multilinear maps between vector spaces, and even some operations such as the dot product. Tensors are defined independent of any basis, although they are often referred to by their components in a basis related to a particular coordinate system.

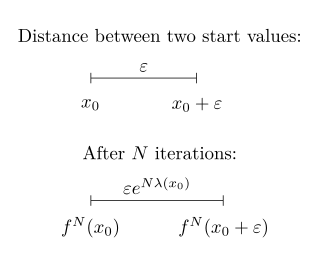

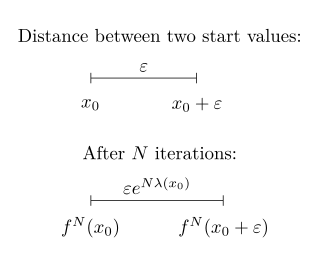

In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector diverge at a rate given by

Nonlinear dimensionality reduction, also known as manifold learning, refers to various related techniques that aim to project high-dimensional data onto lower-dimensional latent manifolds, with the goal of either visualizing the data in the low-dimensional space, or learning the mapping itself. The techniques described below can be understood as generalizations of linear decomposition methods used for dimensionality reduction, such as singular value decomposition and principal component analysis.

Multidimensional scaling (MDS) is a means of visualizing the level of similarity of individual cases of a dataset. MDS is used to translate "information about the pairwise 'distances' among a set of objects or individuals" into a configuration of points mapped into an abstract Cartesian space.

Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension. Working in high-dimensional spaces can be undesirable for many reasons; raw data are often sparse as a consequence of the curse of dimensionality, and analyzing the data is usually computationally intractable. Dimensionality reduction is common in fields that deal with large numbers of observations and/or large numbers of variables, such as signal processing, speech recognition, neuroinformatics, and bioinformatics.

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), or discriminant function analysis is a generalization of Fisher's linear discriminant, a method used in statistics and other fields, to find a linear combination of features that characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification.

Photoelasticity describes changes in the optical properties of a material under mechanical deformation. It is a property of all dielectric media and is often used to experimentally determine the stress distribution in a material, where it gives a picture of stress distributions around discontinuities in materials. Photoelastic experiments are an important tool for determining critical stress points in a material, and are used for determining stress concentration in irregular geometries.

In matrix theory, the Perron–Frobenius theorem, proved by Oskar Perron (1907) and Georg Frobenius (1912), asserts that a real square matrix with positive entries has a unique largest real eigenvalue and that the corresponding eigenvector can be chosen to have strictly positive components, and also asserts a similar statement for certain classes of nonnegative matrices. This theorem has important applications to probability theory ; to the theory of dynamical systems ; to economics ; to demography ; to social networks ; to Internet search engines (PageRank); and even to ranking of football teams. The first to discuss the ordering of players within tournaments using Perron–Frobenius eigenvectors is Edmund Landau.

Nearest neighbor search (NNS), as a form of proximity search, is the optimization problem of finding the point in a given set that is closest to a given point. Closeness is typically expressed in terms of a dissimilarity function: the less similar the objects, the larger the function values.

Stress majorization is an optimization strategy used in multidimensional scaling (MDS) where, for a set of -dimensional data items, a configuration of points in -dimensional space is sought that minimizes the so-called stress function . Usually is or , i.e. the matrix lists points in or dimensional Euclidean space so that the result may be visualised. The function is a cost or loss function that measures the squared differences between ideal distances and actual distances in r-dimensional space. It is defined as:

In photogrammetry and computer stereo vision, bundle adjustment is simultaneous refining of the 3D coordinates describing the scene geometry, the parameters of the relative motion, and the optical characteristics of the camera(s) employed to acquire the images, given a set of images depicting a number of 3D points from different viewpoints. Its name refers to the geometrical bundles of light rays originating from each 3D feature and converging on each camera's optical center, which are adjusted optimally according to an optimality criterion involving the corresponding image projections of all points.

In fluid mechanics and mathematics, a capillary surface is a surface that represents the interface between two different fluids. As a consequence of being a surface, a capillary surface has no thickness in slight contrast with most real fluid interfaces.

Isomap is a nonlinear dimensionality reduction method. It is one of several widely used low-dimensional embedding methods. Isomap is used for computing a quasi-isometric, low-dimensional embedding of a set of high-dimensional data points. The algorithm provides a simple method for estimating the intrinsic geometry of a data manifold based on a rough estimate of each data point’s neighbors on the manifold. Isomap is highly efficient and generally applicable to a broad range of data sources and dimensionalities.

In numerical linear algebra, the alternating-direction implicit (ADI) method is an iterative method used to solve Sylvester matrix equations. It is a popular method for solving the large matrix equations that arise in systems theory and control, and can be formulated to construct solutions in a memory-efficient, factored form. It is also used to numerically solve parabolic and elliptic partial differential equations, and is a classic method used for modeling heat conduction and solving the diffusion equation in two or more dimensions. It is an example of an operator splitting method.

The iterative proportional fitting procedure is the operation of finding the fitted matrix which is the closest to an initial matrix but with the row and column totals of a target matrix . The fitted matrix being of the form , where and are diagonal matrices such that has the margins of . Some algorithms can be chosen to perform biproportion. We have also the entropy maximization, information loss minimization or RAS which consists of factoring the matrix rows to match the specified row totals, then factoring its columns to match the specified column totals; each step usually disturbs the previous step’s match, so these steps are repeated in cycles, re-adjusting the rows and columns in turn, until all specified marginal totals are satisfactorily approximated. However, all algorithms give the same solution. In three- or more-dimensional cases, adjustment steps are applied for the marginals of each dimension in turn, the steps likewise repeated in cycles.

In information geometry, a divergence is a kind of statistical distance: a binary function which establishes the separation from one probability distribution to another on a statistical manifold.

t-distributed stochastic neighbor embedding (t-SNE) is a statistical method for visualizing high-dimensional data by giving each datapoint a location in a two or three-dimensional map. It is based on Stochastic Neighbor Embedding originally developed by Sam Roweis and Geoffrey Hinton, where Laurens van der Maaten proposed the t-distributed variant. It is a nonlinear dimensionality reduction technique for embedding high-dimensional data for visualization in a low-dimensional space of two or three dimensions. Specifically, it models each high-dimensional object by a two- or three-dimensional point in such a way that similar objects are modeled by nearby points and dissimilar objects are modeled by distant points with high probability.

In network theory, multidimensional networks, a special type of multilayer network, are networks with multiple kinds of relations. Increasingly sophisticated attempts to model real-world systems as multidimensional networks have yielded valuable insight in the fields of social network analysis, economics, urban and international transport, ecology, psychology, medicine, biology, commerce, climatology, physics, computational neuroscience, operations management, and finance.

In signal processing, multidimensional empirical mode decomposition is an extension of the one-dimensional (1-D) EMD algorithm to a signal encompassing multiple dimensions. The Hilbert–Huang empirical mode decomposition (EMD) process decomposes a signal into intrinsic mode functions combined with the Hilbert spectral analysis, known as the Hilbert–Huang transform (HHT). The multidimensional EMD extends the 1-D EMD algorithm into multiple-dimensional signals. This decomposition can be applied to image processing, audio signal processing, and various other multidimensional signals.

Empirical dynamic modeling (EDM) is a framework for analysis and prediction of nonlinear dynamical systems. Applications include population dynamics, ecosystem service, medicine, neuroscience, dynamical systems, geophysics, and human-computer interaction. EDM was originally developed by Robert May and George Sugihara. It can be considered a methodology for data modeling, predictive analytics, dynamical system analysis, machine learning and time series analysis.