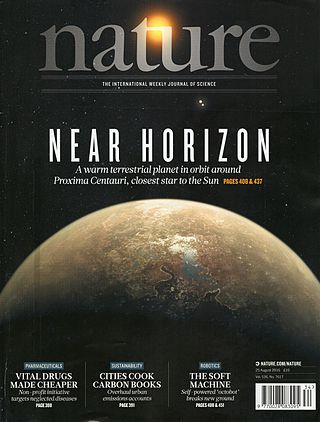

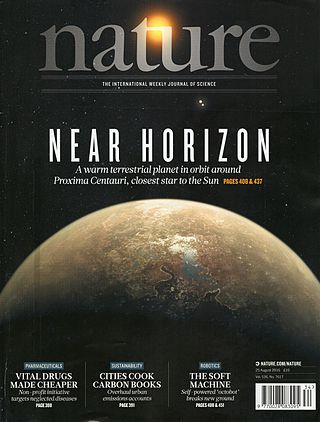

Nature is a British weekly scientific journal founded and based in London, England. As a multidisciplinary publication, Nature features peer-reviewed research from a variety of academic disciplines, mainly in science and technology. It has core editorial offices across the United States, continental Europe, and Asia under the international scientific publishing company Springer Nature. According to the Science Edition of the 2022 Journal Citation Reports, Nature was one of the world's most cited scientific journals, and it is one of the world's most-read and most prestigious academic journals. As of 2012, it claimed an online readership of about three million unique readers per month.

A citation is a reference to a source. More precisely, a citation is an abbreviated alphanumeric expression embedded in the body of an intellectual work that denotes an entry in the work's bibliographic references section. Its purpose is to acknowledge the relevance of others' work to the topic under discussion at the point where the citation appears.

Scientific citation is the practice of providing detailed references in a scientific publication, typically a paper or book, to previously published communications that bear on the subject of the new publication. In original work, citations allow readers to consult the cited work to help them judge the new work, point to background information vital for future development, and acknowledge the contributions of earlier workers. Citations in, say, a review paper bring together many sources, often recent, in one place.

An academic journal or scholarly journal is a periodical publication in which scholarship relating to a particular academic discipline is published. Academic journals serve as permanent and transparent forums for the presentation, scrutiny, and discussion of research. They nearly universally require peer review or other scrutiny from contemporaries competent and established in their respective fields. Content typically takes the form of articles presenting original research, review articles, or book reviews. The purpose of an academic journal, according to Henry Oldenburg, is to give researchers a venue to "impart their knowledge to one another, and contribute what they can to the Grand design of improving natural knowledge, and perfecting all Philosophical Arts, and Sciences."

Open access (OA) is a set of principles and a range of practices through which research outputs are distributed online, free of access charges or other barriers. Under some models of open access publishing, barriers to copying or reuse are also reduced or removed by applying an open license for copyright.

The impact factor (IF) or journal impact factor (JIF) of an academic journal is a scientometric index calculated by Clarivate that reflects the mean number of citations received in a given year by articles the journal published in the two preceding years, as indexed by Clarivate's Web of Science.
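The two-year calculation can be sketched in Python. This is a minimal illustration, assuming the citation and item counts have already been extracted from an index such as Web of Science; the function name `impact_factor` and its dictionary inputs are hypothetical, and Clarivate's own rules for deciding which items count as "citable" are not modeled here.

```python
def impact_factor(year: int,
                  citations_received: dict[int, int],
                  citable_items: dict[int, int]) -> float:
    """Two-year impact factor of one journal for `year` (illustrative sketch).

    citations_received[y]: citations made during `year` to items the journal
        published in year y (hypothetical pre-aggregated counts).
    citable_items[y]: number of citable items the journal published in year y.
    """
    # Only the two years preceding `year` fall inside the window.
    window = (year - 1, year - 2)
    numerator = sum(citations_received.get(y, 0) for y in window)
    denominator = sum(citable_items.get(y, 0) for y in window)
    if denominator == 0:
        raise ValueError("no citable items in the two-year window")
    return numerator / denominator


# Illustrative numbers only: 600 + 900 citations over 150 + 150 items.
print(impact_factor(2023, {2022: 600, 2021: 900}, {2022: 150, 2021: 150}))  # 5.0
```

Citations to items outside the window (for example, to 2020 items when computing the 2023 value) are ignored, which is what makes the measure a "last two years" average.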

Scientometrics is the field of study which concerns itself with measuring and analysing scholarly literature. Scientometrics is a sub-field of informetrics. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts. In practice there is a significant overlap between scientometrics and other scientific fields such as information systems, information science, science of science policy, sociology of science, and metascience. Critics have argued that over-reliance on scientometrics has created a system of perverse incentives, producing a publish or perish environment that leads to low-quality research.

Citation impact or citation rate is a measure of how many times an academic journal article, book, or author is cited by other articles, books, or authors. Citation counts are interpreted as measures of the impact or influence of academic work and have given rise to the field of bibliometrics or scientometrics, which specializes in the study of patterns of academic impact through citation analysis. The importance of journals can be measured by the average citation rate, the ratio of the number of citations to the number of articles published within a given time period and in a given index, such as the journal impact factor or the CiteScore. Citation impact is used by academic institutions in decisions about academic tenure, promotion, and hiring, and hence also by authors in deciding which journal to publish in. Citation-like measures are also used in other fields that do ranking, such as Google's PageRank algorithm, software metrics, college and university rankings, and business performance indicators.

The h-index is an author-level metric that measures both the productivity and the citation impact of a set of publications; it was initially used for an individual scientist or scholar. The h-index correlates with success indicators such as winning the Nobel Prize, being accepted for research fellowships and holding positions at top universities. The index is based on the set of the scientist's most cited papers and the number of citations that they have received in other publications. The index has more recently been applied to the productivity and impact of a scholarly journal as well as a group of scientists, such as a department or university or country. The index was suggested in 2005 by Jorge E. Hirsch, a physicist at UC San Diego, as a tool for determining theoretical physicists' relative quality and is sometimes called the Hirsch index or Hirsch number.
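Hirsch's definition (a scientist has index h if h of their papers have each been cited at least h times) translates into a short routine: rank the papers by citation count in descending order and find the last rank at which the count is still at least the rank. The Python below is a minimal sketch; the function name `h_index` is illustrative, and the input is assumed to be a plain list of per-paper citation counts.

```python
def h_index(citations: list[int]) -> int:
    """Return the h-index for a list of per-paper citation counts."""
    # Rank papers from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        # The paper at position `rank` must have at least `rank` citations.
        if count >= rank:
            h = rank
        else:
            # Counts only decrease from here while ranks increase, so stop.
            break
    return h


print(h_index([10, 8, 5, 4, 3]))  # 4
```

In the example, the four most cited papers each have at least four citations, but the fifth has only three, so h is 4. A single highly cited paper cannot raise the index on its own, which is why the metric reflects both productivity and impact.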

The reliability of Wikipedia and its user-generated editing model, particularly its English-language edition, has been questioned and tested. Wikipedia is written and edited by volunteer editors who generate online content with the editorial oversight of other volunteer editors via community-generated policies and guidelines. The reliability of the project has been tested statistically through comparative review, analysis of historical patterns, and analysis of the strengths and weaknesses inherent in its editing process. The online encyclopedia has been criticized for its factual unreliability, principally regarding its content, presentation, and editorial processes. Studies and surveys attempting to gauge the reliability of Wikipedia have had mixed results. Wikipedia's reliability was frequently criticized in the 2000s but has since improved, and it was generally praised in the late 2010s and early 2020s.

Open science is the movement to make scientific research and its dissemination accessible to all levels of society, amateur or professional. Open science is transparent and accessible knowledge that is shared and developed through collaborative networks. It encompasses practices such as publishing open research, campaigning for open access, encouraging scientists to practice open-notebook science, broader dissemination and engagement in science and generally making it easier to publish, access and communicate scientific knowledge.

Journal ranking is widely used in academic circles in the evaluation of an academic journal's impact and quality. Journal rankings are intended to reflect the place of a journal within its field, the relative difficulty of being published in that journal, and the prestige associated with it. They have been introduced as official research evaluation tools in several countries.

Wikipedia has been studied extensively. Between 2001 and 2010, researchers published at least 1,746 peer-reviewed articles about the online encyclopedia. Such studies are greatly facilitated by the fact that Wikipedia's database can be downloaded without help from the site owner.

Scholarly peer review or academic peer review is the process of having a draft version of a researcher's methods and findings reviewed by experts in the same field. Peer review is widely used to help the academic publisher decide whether the work should be accepted, considered acceptable with revisions, or rejected for official publication in an academic journal, a monograph, or the proceedings of an academic conference. If the identities of the authors and the reviewers are not revealed to each other, the procedure is called dual-anonymous peer review.

The Wikipedia online encyclopedia has, since the late 2000s, served as a popular source for health information for both laypersons and, in many cases, health care practitioners. Health-related articles on Wikipedia are popularly accessed as results from search engines, which frequently deliver links to Wikipedia articles. Independent assessments have been made of the number and demographics of people who seek health information on Wikipedia, the scope of health information on Wikipedia, and the quality and reliability of the information on Wikipedia.

In scholarly and scientific publishing, altmetrics are non-traditional bibliometrics proposed as an alternative or complement to more traditional citation impact metrics, such as impact factor and h-index. The term altmetrics was proposed in 2010 as a generalization of article-level metrics and has its roots in the #altmetrics hashtag. Although altmetrics are often thought of as metrics about articles, they can be applied to people, journals, books, data sets, presentations, videos, source code repositories, web pages, etc.

Gender bias on Wikipedia is a term used to describe several gender-related disparities on Wikipedia: its contributors are mostly male, relatively few of its biographies are about women, and topics primarily of interest to women are less well covered.

Metascience is the use of scientific methodology to study science itself. Metascience seeks to increase the quality of scientific research while reducing inefficiency. It is also known as "research on research" and "the science of science", as it uses research methods to study how research is done and find where improvements can be made. Metascience concerns itself with all fields of research and has been described as "a bird's eye view of science". In the words of John Ioannidis, "Science is the best thing that has happened to human beings ... but we can do it better."

There are a number of approaches to ranking academic publishing groups and publishers. Rankings rely on factors such as subjective impressions within the scholarly community, analyses of prize winners of scientific associations, discipline, a publisher's reputation, and its impact factor.

Taha Yasseri is a physicist and sociologist known for his research on Wikipedia and computational social science. He is a professor at the School of Sociology at University College Dublin, Ireland. He was formerly a senior research fellow in computational social science at the Oxford Internet Institute (OII), University of Oxford, a Turing Fellow at the Alan Turing Institute for data science, and a research fellow in humanities and social sciences at Wolfson College, Oxford. Yasseri is one of the leading scholars in computational social science and his research has been widely covered in mainstream media. Yasseri obtained his PhD in theoretical physics of complex systems at the age of 25 from the University of Göttingen, Germany.