Rendering is the process of generating a photorealistic or non-photorealistic image from input data such as 3D models. The word "rendering" originally meant the task performed by an artist when depicting a real or imaginary thing. Today, to "render" commonly means to generate an image or video from a precise description using a computer program.

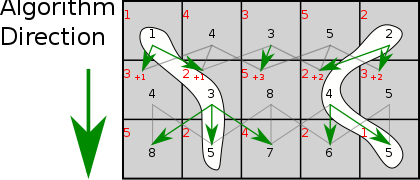

Flood fill, also called seed fill, is a flooding algorithm that determines and alters the area connected to a given node in a multi-dimensional array with some matching attribute. It is used in the "bucket" fill tool of paint programs to fill connected, similarly colored areas with a different color, and in games such as Go and Minesweeper for determining which pieces are cleared. A variant called boundary fill uses the same algorithms but is defined as the area connected to a given node that does not have a particular attribute.

In 3D computer graphics, ray tracing is a technique for modeling light transport for use in a wide variety of rendering algorithms for generating digital images.

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.

General-purpose computing on graphics processing units is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing.

The Saffron Type System is a system for rendering high-quality scalable type on digital displays. It was developed by Mitsubishi Electric Research Laboratories, and is built on a core of adaptively-sampled distance field (ADF) technology. Saffron has been licensed to Adobe and Monotype and is shipping in numerous products such as the Adobe Flash Player and Amazon Kindle. Saffron has been implemented in both software and hardware.

In parallel computing, an embarrassingly parallel workload or problem is one where little or no effort is needed to split the problem into a number of parallel tasks. This is due to minimal or no dependency upon communication between the parallel tasks, or for results between them.

Pixel art scaling algorithms are graphical filters that attempt to enhance the appearance of hand-drawn 2D pixel art graphics. These algorithms are a form of automatic image enhancement. Pixel art scaling algorithms employ methods significantly different than the common methods of image rescaling, which have the goal of preserving the appearance of images.

Texture synthesis is the process of algorithmically constructing a large digital image from a small digital sample image by taking advantage of its structural content. It is an object of research in computer graphics and is used in many fields, amongst others digital image editing, 3D computer graphics and post-production of films.

In computer graphics and digital imaging, imagescaling refers to the resizing of a digital image. In video technology, the magnification of digital material is known as upscaling or resolution enhancement.

Autostereoscopy is any method of displaying stereoscopic images without the use of special headgear, glasses, something that affects vision, or anything for eyes on the part of the viewer. Because headgear is not required, it is also called "glasses-free 3D" or "glassesless 3D".

In computer graphics, color quantization or color image quantization is quantization applied to color spaces; it is a process that reduces the number of distinct colors used in an image, usually with the intention that the new image should be as visually similar as possible to the original image. Computer algorithms to perform color quantization on bitmaps have been studied since the 1970s. Color quantization is critical for displaying images with many colors on devices that can only display a limited number of colors, usually due to memory limitations, and enables efficient compression of certain types of images.

Marc Stewart Levoy is a computer graphics researcher and Professor Emeritus of Computer Science and Electrical Engineering at Stanford University, a vice president and Fellow at Adobe Inc., and a Distinguished Engineer at Google. He is noted for pioneering work in volume rendering, light fields, and computational photography.

Livewire, is a segmentation technique which allows a user to select regions of interest to be extracted quickly and accurately, using simple mouse clicks. It is based on the lowest cost path algorithm, by Edsger W. Dijkstra. Firstly convolve the image with a Sobel filter to extract edges. Each pixel of the resulting image is a vertex of the graph and has edges going to the 4 pixels around it, as up, down, left, right. The edge costs are defined based on a cost function. In 1995, Eric N. Mortensen and William A. Barrett made some extension work on livewire segmentation tool, which is known as Intelligent Scissors.

ImageMagick, invoked from the command line as magick, is a free and open-source cross-platform software suite for displaying, creating, converting, modifying, and editing raster images. ImageMagick was created by John Cristy in 1987, it can read and write over 200 image file formats. It is widely used in open-source applications.

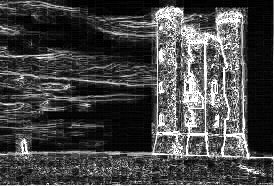

In the study of image processing, a watershed is a transformation defined on a grayscale image. The name refers metaphorically to a geological watershed, or drainage divide, which separates adjacent drainage basins. The watershed transformation treats the image it operates upon like a topographic map, with the brightness of each point representing its height, and finds the lines that run along the tops of ridges.

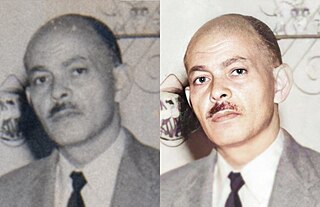

Inpainting is a conservation process where damaged, deteriorated, or missing parts of an artwork are filled in to present a complete image. This process is commonly used in image restoration. It can be applied to both physical and digital art mediums such as oil or acrylic paintings, chemical photographic prints, sculptures, or digital images and video.

Screen space ambient occlusion (SSAO) is a computer graphics technique for efficiently approximating the ambient occlusion effect in real time. It was developed by Vladimir Kajalin while working at Crytek and was used for the first time in 2007 by the video game Crysis, also developed by Crytek.

In graph algorithms, the widest path problem is the problem of finding a path between two designated vertices in a weighted graph, maximizing the weight of the minimum-weight edge in the path. The widest path problem is also known as the maximum capacity path problem. It is possible to adapt most shortest path algorithms to compute widest paths, by modifying them to use the bottleneck distance instead of path length. However, in many cases even faster algorithms are possible.

In computer vision, a saliency map is an image that highlights either the region on which people's eyes focus first or the most relevant regions for machine learning models. The goal of a saliency map is to reflect the degree of importance of a pixel to the human visual system or an otherwise opaque ML model.