In computer network engineering, an Internet Standard is a normative specification of a technology or methodology applicable to the Internet. Internet Standards are created and published by the Internet Engineering Task Force (IETF). They allow interoperation of hardware and software from different sources which allows internets to function. As the Internet became global, Internet Standards became the lingua franca of worldwide communications.

The Internet Engineering Task Force (IETF) is a standards organization for the Internet and is responsible for the technical standards that make up the Internet protocol suite (TCP/IP). It has no formal membership roster or requirements and all its participants are volunteers. Their work is usually funded by employers or other sponsors.

Joyce Kathleen Reynolds was an American computer scientist who played a significant role in developing protocols underlying the Internet. She authored or co-authored many RFCs, most notably those introducing and specifying the Telnet, FTP, and POP protocols.

A Request for Comments (RFC) is a publication in a series from the principal technical development and standards-setting bodies for the Internet, most prominently the Internet Engineering Task Force (IETF). An RFC is authored by individuals or groups of engineers and computer scientists in the form of a memorandum describing methods, behaviors, research, or innovations applicable to the working of the Internet and Internet-connected systems. It is submitted either for peer review or to convey new concepts, information, or, occasionally, engineering humor.

robots.txt is the filename used for implementing the Robots Exclusion Protocol, a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the website they are allowed to visit.

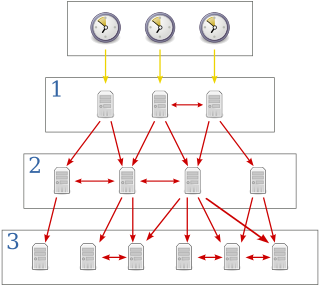

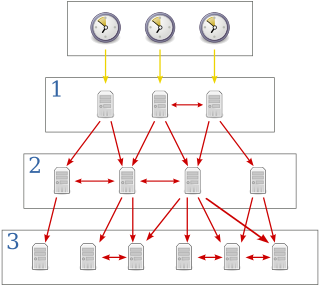

The Network Time Protocol (NTP) is a networking protocol for clock synchronization between computer systems over packet-switched, variable-latency data networks. In operation since before 1985, NTP is one of the oldest Internet protocols in current use. NTP was designed by David L. Mills of the University of Delaware.

The Internet Architecture Board (IAB) is "a committee of the Internet Engineering Task Force (IETF) and an advisory body of the Internet Society (ISOC). Its responsibilities include architectural oversight of IETF activities, Internet Standards Process oversight and appeal, and the appointment of the Request for Comments (RFC) Editor. The IAB is also responsible for the management of the IETF protocol parameter registries."

Transport Layer Security (TLS) is a cryptographic protocol designed to provide communications security over a computer network. The protocol is widely used in applications such as email, instant messaging, and voice over IP, but its use in securing HTTPS remains the most publicly visible.

The domain name gov is a sponsored top-level domain (sTLD) in the Domain Name System of the Internet. The name is derived from the word government, indicating its restricted use by government entities. The TLD is administered by the Cybersecurity and Infrastructure Security Agency (CISA), a component of the United States Department of Homeland Security.

Sender Policy Framework (SPF) is an email authentication method which ensures the sending mail server is authorized to originate mail from the email sender's domain. This authentication only applies to the email sender listed in the "envelope from" field during the initial SMTP connection. If the email is bounced, a message is sent to this address, and for downstream transmission it typically appears in the "Return-Path" header. To authenticate the email address which is actually visible to recipients on the "From:" line, other technologies such as DMARC must be used. Forgery of this address is known as email spoofing, and is often used in phishing and email spam.

In computing, syslog is a standard for message logging. It allows separation of the software that generates messages, the system that stores them, and the software that reports and analyzes them. Each message is labeled with a facility code, indicating the type of system generating the message, and is assigned a severity level.

Opportunistic encryption (OE) refers to any system that, when connecting to another system, attempts to encrypt communications channels, otherwise falling back to unencrypted communications. This method requires no pre-arrangement between the two systems.

DomainKeys Identified Mail (DKIM) is an email authentication method designed to detect forged sender addresses in email, a technique often used in phishing and email spam.

John C. Klensin is a political scientist and computer science professional who is active in Internet-related issues.

HTTP Strict Transport Security (HSTS) is a policy mechanism that helps to protect websites against man-in-the-middle attacks such as protocol downgrade attacks and cookie hijacking. It allows web servers to declare that web browsers should automatically interact with it using only HTTPS connections, which provide Transport Layer Security (TLS/SSL), unlike the insecure HTTP used alone. HSTS is an IETF standards track protocol and is specified in RFC 6797.

HTTP/2 is a major revision of the HTTP network protocol used by the World Wide Web. It was derived from the earlier experimental SPDY protocol, originally developed by Google. HTTP/2 was developed by the HTTP Working Group of the Internet Engineering Task Force (IETF). HTTP/2 is the first new version of HTTP since HTTP/1.1, which was standardized in RFC 2068 in 1997. The Working Group presented HTTP/2 to the Internet Engineering Steering Group (IESG) for consideration as a Proposed Standard in December 2014, and IESG approved it to publish as Proposed Standard on February 17, 2015. The initial HTTP/2 specification was published as RFC 7540 on May 14, 2015.

DNS Certification Authority Authorization (CAA) is an Internet security policy mechanism that allows domain name holders to indicate to certificate authorities whether they are authorized to issue digital certificates for a particular domain name. It does this by means of a "CAA" Domain Name System (DNS) resource record.

J. Alex Halderman is professor of computer science and engineering at the University of Michigan, where he is also director of the Center for Computer Security & Society. Halderman's research focuses on computer security and privacy, with an emphasis on problems that broadly impact society and public policy.

The Cybersecurity and Infrastructure Security Agency (CISA) is a component of the United States Department of Homeland Security (DHS) responsible for cybersecurity and infrastructure protection across all levels of government, coordinating cybersecurity programs with U.S. states, and improving the government's cybersecurity protections against private and nation-state hackers.

Murray S. Kucherawy is a computer scientist, mostly known for his work on email standardization and open source software.